Building tylerbutler.com, Part 4: The Main Home Page and Migrating Content

Part 1 :: Part 2 :: Part 3 :: Part 4 :: Part 5 :: Part 6

This is part 4 of an ongoing series of posts about my experience building a custom site on top of Office SharePoint Server 2007. If you haven't yet read parts 1 through 3, I'd strongly suggest starting there.

In my last post, I discussed customizing the XSL styles to get my rollup pages looking exactly how I wanted them, and talked a bit about some of the limitations I ran into with my design. In this post, I'll cover creating my main site home page using a "detached page" and migrating the content from my old site into the new one.

The Main Home Page

On my old site, every category rollup page was pretty much the same; each one just rolled up different content. My main home page was slightly different from the other rollup pages, because I wanted to hide the right navigation and display some other special content on it, such as my Xbox Live gamertag and some RSS feeds.

One option was to create another page layout and override the right navigation with custom content. However, the layout was going to be pretty much the same as a typical rollup page, so why create a whole other page layout just for this one instance? Plus, there weren't going to be a lot of other pages that looked the way the home page did. As I was talking with some other PMs on my team about how I could solve this in an elegant way, George suggested I use a "detached page." I'm glad he brought it up, because I had almost forgotten about the feature, and it allows me to do exactly what I want.

Typically when we talk about publishing pages in MOSS, we are talking about "templatized pages." That is, pages that have an associated page layout that maps the page's content, which is stored in columns in the Pages list, to its actual position and appearance on the rendered page. Because data and presentation are separated this way, you have great flexibility to change the appearance of pages. However, there are most definitely things called "untemplatized pages." These are just aspx pages that are dropped into a library. MOSS runs on top of ASP.NET, so these pages will happily render.
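To make the data/presentation split concrete, here is a toy model in C#. It is not the SharePoint object model: the hypothetical `TemplatizedPage.Render` helper treats a layout as markup with `{ColumnName}` tokens and fills them from a dictionary standing in for the page's columns in the Pages list. Real page layouts use field controls inside content placeholders rather than string tokens, so treat this only as a sketch of the idea.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Toy model only (hypothetical, not the SharePoint API). A "layout" is markup
// containing {ColumnName} tokens; a page is just its column values.
public static class TemplatizedPage
{
    // Matches {Title}, {PublishingPageContent}, {Post_x0020_Time}, and so on.
    private static readonly Regex Token = new Regex(@"\{(\w+)\}");

    // Fill every token in the layout with the matching column value,
    // or an empty string if the page has no such column.
    public static string Render(string layoutMarkup, IDictionary<string, string> columns)
    {
        return Token.Replace(layoutMarkup, match =>
        {
            string value;
            return columns.TryGetValue(match.Groups[1].Value, out value) ? value : string.Empty;
        });
    }
}
```

Rendering the same column dictionary through two different layout strings gives two different looks while the stored values never change, which is the flexibility templatized pages buy you.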

You can make an untemplatized page by simply creating your own aspx page and putting it in a library. However, using SharePoint Designer, you can also create an untemplatized page from an existing templatized page. We refer to this process as "detaching," since you're essentially severing the link between the page layout and the page, and copying the appropriate markup from the layout into the actual detached aspx page. Once this is done, you can make changes to the page without changing the layout or impacting any of the other pages that are using that layout. This makes it perfect for situations like mine, where essentially everything was the same as another page layout, but there were some one-off changes I wanted to make, like overriding the right navigation.

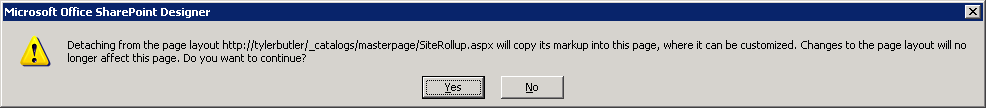

In order to do this, you need to have a templatized page first. I went ahead and created one using the Site Rollup page layout that I created earlier. Once the page was created, I went to the Pages library of the site in SharePoint Designer, right-clicked on the page, and selected Detach from Page Layout. I received the following warning about the ramifications of detaching the page from its layout.

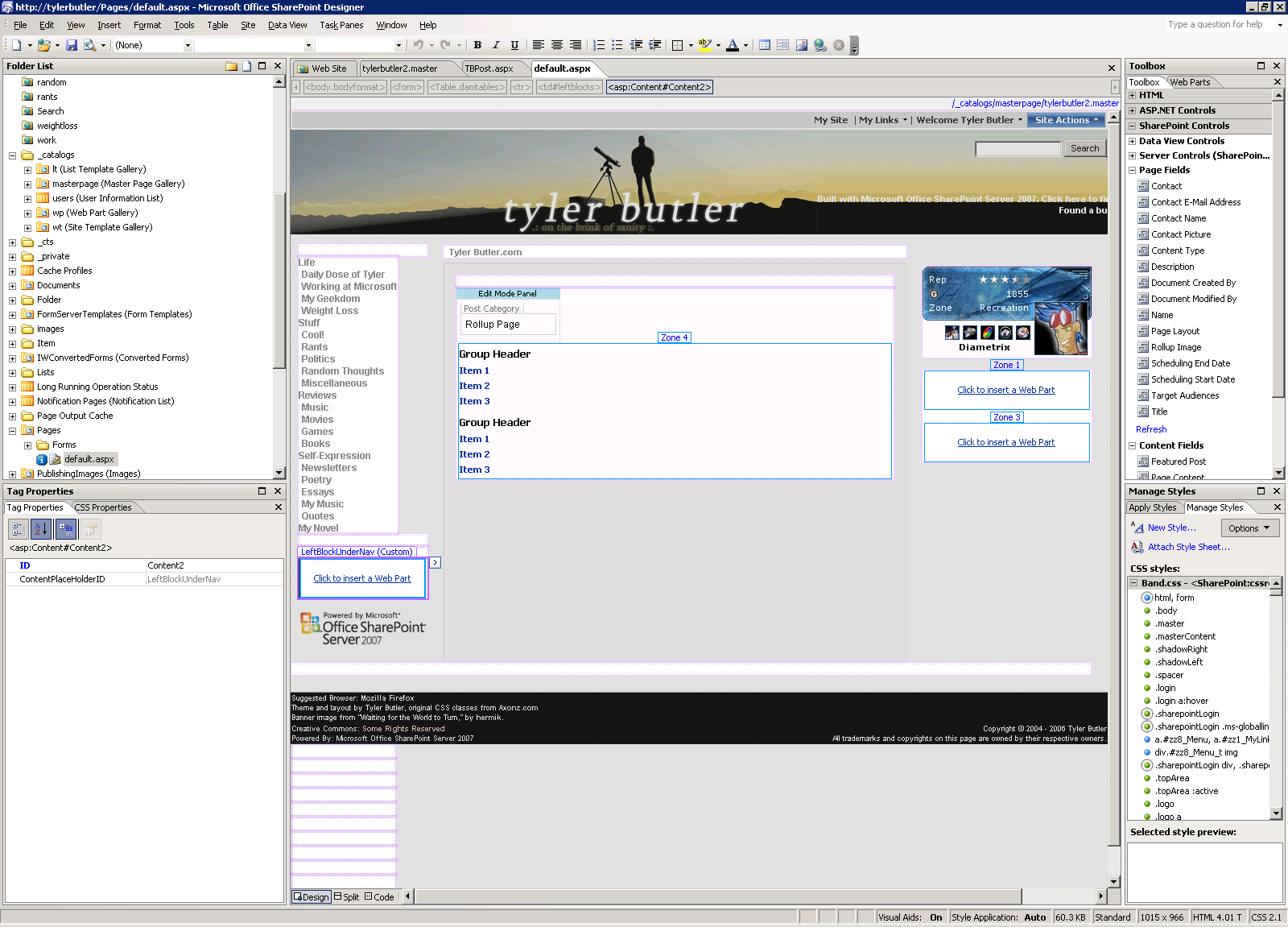

After clicking Yes on the warning dialog, my page was detached. I opened it up in SharePoint Designer, customized its placeholders, and saved it, and my home page was done.

There are some things to note about detached pages. As the warning dialog clearly points out, changes to layouts do not affect detached pages, because they're no longer associated with a layout. However, they are still associated with the site master page, so changes to it will be reflected in the detached page. Also, the contents of the page itself are still stored in the columns of the list and get versioned, just like you'd expect. There's still a page edit mode in the browser that you can use to change the contents of the page, so you don't have to use SharePoint Designer to make content changes on detached pages. When you think about it, this makes sense. The detachment process copies all of the markup from the page layout, so the resulting page behaves just like a normal page, except that instead of grabbing the layout markup from the page layout file, it stores that markup in the page itself.

You can also reattach a detached page. When you do this, you lose all of the customizations that you've made, and the page once again picks up changes from the page layout it's reattached to. The actual data in the page is still preserved, though, just as it is when you detach from the page layout.
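The detach/reattach lifecycle can be summarized with another toy model. The `LayoutModel` and `PageModel` classes below are hypothetical and only mimic the behavior described above: detaching copies the layout's current markup into the page, later layout edits no longer reach it, and reattaching discards the page's own markup while leaving its column values alone. It deliberately does not model the master page, versioning, or caching.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Toy model only (hypothetical classes, not the SharePoint API).
public class LayoutModel
{
    public string Markup { get; set; }
    public LayoutModel(string markup) { Markup = markup; }
}

public class PageModel
{
    private static readonly Regex Token = new Regex(@"\{(\w+)\}");

    // Column values in the Pages list: kept across detach and reattach.
    public Dictionary<string, string> Columns { get; private set; }

    // Non-null while the page is templatized.
    public LayoutModel Layout { get; private set; }

    // Used while the page is detached; this is the markup you would edit in SharePoint Designer.
    public string DetachedMarkup { get; set; }

    public PageModel(LayoutModel layout)
    {
        Columns = new Dictionary<string, string>();
        Layout = layout;
    }

    public bool IsDetached { get { return Layout == null; } }

    // Copy the layout's current markup into the page and sever the link.
    public void Detach()
    {
        if (IsDetached) return;
        DetachedMarkup = Layout.Markup;
        Layout = null;
    }

    // Drop the page's own markup (and any customizations in it); column values are untouched.
    public void Reattach(LayoutModel layout)
    {
        DetachedMarkup = null;
        Layout = layout;
    }

    public string Render()
    {
        string markup = IsDetached ? DetachedMarkup : Layout.Markup;
        return Token.Replace(markup, match =>
        {
            string value;
            return Columns.TryGetValue(match.Groups[1].Value, out value) ? value : string.Empty;
        });
    }
}
```

In the model, editing `layout.Markup` after `Detach()` has no effect on the page's output, and `Reattach()` brings the layout's markup back while `Columns` still holds the original content.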

One other potential negative to detached pages: they don't get the benefit of output caching. In my case, since my site is relatively low traffic, I am not concerned about this quite yet. However, if you want to use a detached page on a very high traffic site, you'd want to consider the performance impact of not having caching on this page. Kai has a great post about caching in MOSS that is a fantastic place to start if you're new to the whole caching thing.

Migrating Content

The basic look and feel of the site was done. I had created my master page and layouts, customized my XSLTs, and created the rollup pages and home page. However, my old site had around 200 posts that I wanted to move over to the MOSS site, and I had several options for migrating that content.

First, I could do it manually. I could open every post in my old site, create a corresponding page in the new site, and copy/paste the content. With 200 posts, this was doable, but I wasn't exactly excited about the prospect of hitting Ctrl-C, Ctrl-V that much.

Another option was to create a package using the WSS Content Migration API (known internally as PRIME, and I'll refer to it as such through the remainder of this post). PRIME is designed to export and import content from WSS, and since MOSS is built on top of WSS, it can handle MOSS content as well. Because PRIME has a public schema, I could craft a custom package and feed it into the PRIME import code, which would handle importing all of my content for me. This is essentially what we do when we migrate content from Content Management Server 2002. We connect up to the CMS 02 DB and create a custom PRIME package, which we then feed into PRIME to import for us.

The final option was for me to write some custom code to do the migration. The logic would be pretty simple: connect to the GeekLog DB, get the post data, then create a page in the right subweb and put all of the content from the GeekLog database into the right fields on the page. In the end, this is what I opted for. Like I said, I like writing code! The code is below. Please note that this code is very bare-bones and specific to my needs. The huge if/else logic for mapping the categories to the right subweb is particularly ugly, but it was just the most straightforward way for me to get it done. I leave the formulation of a more elegant approach as an exercise for the reader. :-)

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Text.RegularExpressions;
using MySql.Data.MySqlClient;
using Microsoft.SharePoint;
using Microsoft.SharePoint.Publishing;

namespace GeeklogMigration
{
    class Program
    {
        static void Main( string[] args )
        {
            string connectionString = "Server=localhost;Database=geeklog;User ID=root;Password=password";
            string query = "SELECT tid,date,title,introtext,bodytext FROM gl_stories order by tid;";

            // connect to the MySql DB that has my geeklog data
            using ( MySqlConnection dbCon = new MySqlConnection( connectionString ) )
            using ( SPSite site = new SPSite( "https://tylerbutler/sites/tylerbu/" ) )
            {
                // get a publishing site by passing in an SPSite
                PublishingSite pubSite = new PublishingSite( site );

                // Get the root publishing web
                PublishingWeb rootWeb = PublishingWeb.GetPublishingWeb( pubSite.RootWeb );

                // Get the layout to use for the posts
                PageLayout layout = pubSite.PageLayouts["_catalogs/masterpage/TBPost.aspx"];

                // Connect to MySql, execute the query, and get the result set
                dbCon.Open();
                MySqlCommand cmd = new MySqlCommand( query, dbCon );
                using ( MySqlDataReader results = cmd.ExecuteReader() )
                {
                    while ( results.Read() )
                    {
                        // Get the various pieces of data from the result row
                        string title = results["title"] as string;
                        string category = results["tid"] as string;
                        DateTime date = (DateTime)results["date"];
                        string intro = results["introtext"] as string;
                        string story = results["bodytext"] as string;

                        PublishingPage page;
                        SPListItem pageItem;

                        if ( category.Equals( "books", StringComparison.OrdinalIgnoreCase ) )
                        {
                            // Get the specific PublishingWeb for the category
                            PublishingWeb web = rootWeb.GetPublishingWebs()["books"];

                            // Add a new page to the PublishingWeb using the layout
                            page = web.GetPublishingPages().Add( cleanPageName( title ), layout );

                            // Get the page's backing list item
                            pageItem = page.ListItem;

                            // Set the category for the page
                            pageItem["PostCategory1"] = "8;#Books";
                        }
                        else if ( category.Equals( "cool", StringComparison.OrdinalIgnoreCase ) )
                        {
                            PublishingWeb web = rootWeb.GetPublishingWebs()["cool"];
                            page = web.GetPublishingPages().Add( cleanPageName( title ), layout );
                            pageItem = page.ListItem;
                            pageItem["PostCategory1"] = "5;#Cool";
                        }
                        else if ( category.Equals( "essays", StringComparison.OrdinalIgnoreCase ) )
                        {
                            PublishingWeb web = rootWeb.GetPublishingWebs()["essays"];
                            page = web.GetPublishingPages().Add( cleanPageName( title ), layout );
                            pageItem = page.ListItem;
                            pageItem["PostCategory1"] = "9;#Essays";
                        }
                        // ... more else if statements for each of the categories ...
                        else
                        {
                            // we should never get here...
                            throw new Exception( "something went horribly wrong..." );
                        }

                        // Set all of the page's properties
                        pageItem["PostAuthor"] = "1;#Tyler Butler";
                        pageItem["Post_x0020_Time"] = date;
                        pageItem["Title"] = title;
                        pageItem["Comments"] = removeHTML( intro );
                        pageItem["PublishingPageContent"] = story;

                        // Commit the changes and check the page in (but don't approve)
                        page.Update();
                        page.CheckIn( "Migrated from Geeklog" );
                        Console.WriteLine( "Migrated " + title );
                    }
                }
            }
        }

        static string cleanPageName( string name )
        {
            // Remove invalid characters from page names
            string newName;
            newName = name.Trim().Replace( " ", "" );
            newName = newName.Replace( ":", "" );
            newName = newName.Replace( ",", "" );
            newName = newName.Replace( ".", "" );
            newName = newName.Replace( "?", "" );
            newName = newName.Replace( "\\", "" );
            newName = newName.Replace( "/", "" );
            newName = newName.Replace( "=", "" );
            newName = newName.Replace( "!", "" );
            newName = newName.Replace( ";", "" );
            newName = newName.Replace( "&", "" );
            newName = newName.Replace( "*", "" );
            newName = newName.Replace( "#", "" );
            newName += ".aspx";
            return newName;
        }

        static string removeHTML( string s )
        {
            // Remove HTML tags from a string (a null intro becomes an empty string)
            if ( s == null )
                return string.Empty;
            Regex r = new Regex( "<[^>]*>" );
            return r.Replace( s, "" );
        }
    }
}
```
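As one possible answer to that exercise, here is a sketch of a table-driven category mapping. The `CategoryMap` and `CategoryTarget` names are hypothetical, and only the three categories visible in the code above are filled in; the remaining GeekLog topics would be added as further rows.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper types; the lookup strings are the ones hardcoded in the migration code.
public sealed class CategoryTarget
{
    public string SubwebName { get; private set; }      // name passed to GetPublishingWebs()[...]
    public string CategoryLookup { get; private set; }  // value for the PostCategory1 lookup field

    public CategoryTarget(string subwebName, string categoryLookup)
    {
        SubwebName = subwebName;
        CategoryLookup = categoryLookup;
    }
}

public static class CategoryMap
{
    // Keyed by GeekLog topic id (tid), compared case-insensitively like the original Equals calls.
    private static readonly Dictionary<string, CategoryTarget> Targets =
        new Dictionary<string, CategoryTarget>(StringComparer.OrdinalIgnoreCase)
        {
            { "books",  new CategoryTarget("books",  "8;#Books") },
            { "cool",   new CategoryTarget("cool",   "5;#Cool") },
            { "essays", new CategoryTarget("essays", "9;#Essays") },
            // ... one row for each remaining category ...
        };

    // Returns false for unknown or missing topic ids instead of falling into an else branch.
    public static bool TryGet(string tid, out CategoryTarget target)
    {
        target = null;
        return tid != null && Targets.TryGetValue(tid, out target);
    }
}
```

Inside the `while` loop, the whole if/else chain could then shrink to one `TryGet` call (throwing if it returns false) followed by a single copy of the `GetPublishingWebs()`, `Add`, and `PostCategory1` lines, using `target.SubwebName` and `target.CategoryLookup` in place of the hardcoded strings.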

The code took about 2 hours to write, and the actual migration of the data took around 20 minutes, I think. This was the right route to go, since crafting the custom PRIME package would have taken at least as long as writing the code, and the code was more straightforward and easier to debug. However, if I anticipated having to do this more regularly, or cared a lot about importing multiple versions or a bunch of documents, then the custom PRIME package would definitely have been the best option.

A couple of things to point out about the code: you'll notice that I set the Person/Group and Lookup fields to values like 8;#Books. This is because of the way WSS internally stores values of these types. The number is the ID of the item in the list (the Users list or the Categories list), and the string is the name of the item. This is admittedly a bit annoying to program with, since you need to know not only the name of the item, but its ID as well. I simply hardcoded these values in my code, but you could conceivably look up the appropriate IDs in code and go that route.
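A minimal sketch of the "look up the ID in code" route, assuming you have already read (ID, title) pairs from the target list, for example from each `SPListItem`'s `ID` and `Title` properties. The `LookupValue` helper is hypothetical; it only formats and parses the `ID;#Text` strings described above. The WSS object model also has an `SPFieldLookupValue` class that wraps this format, which is worth checking before rolling your own.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helpers for the "ID;#Text" format used by single-value lookup and Person/Group fields.
public static class LookupValue
{
    private const string Separator = ";#";

    // 8, "Books" -> "8;#Books"
    public static string Format(int id, string text)
    {
        return id.ToString(CultureInfo.InvariantCulture) + Separator + text;
    }

    // "8;#Books" -> id = 8, text = "Books"; returns false if the string is not in that shape.
    public static bool TryParse(string raw, out int id, out string text)
    {
        id = 0;
        text = null;
        if (raw == null) return false;

        int sep = raw.IndexOf(Separator, StringComparison.Ordinal);
        if (sep <= 0) return false;

        if (!int.TryParse(raw.Substring(0, sep), NumberStyles.None, CultureInfo.InvariantCulture, out id))
            return false;

        text = raw.Substring(sep + Separator.Length);
        return true;
    }

    // Build the field value from (ID, Title) pairs instead of hardcoding the ID.
    public static string FromTitle(IEnumerable<KeyValuePair<int, string>> items, string title)
    {
        foreach (var item in items)
        {
            if (string.Equals(item.Value, title, StringComparison.OrdinalIgnoreCase))
                return Format(item.Key, item.Value);
        }
        throw new KeyNotFoundException("No list item titled '" + title + "'");
    }
}
```

With a helper like this, the migration code would read the Categories list once up front and call `FromTitle(items, "Books")` instead of embedding `"8;#Books"`, so the code keeps working if the item IDs differ between environments.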

Also, the cleanPageName method was necessary because there are certain characters that cannot appear in a page name in MOSS. Note that this is not the page title but the name: the actual file name of the aspx page. I wrote this method by trial and error. Every time I got an exception about the page name containing an invalid character, I just added some additional logic. I think there's a list of the invalid characters in the WSS SDK; if I find it I'll link to it here.
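The same trial-and-error character list can be kept in one place. `PageNames.Clean` below is a hypothetical single-pass rewrite of `cleanPageName`: it strips exactly the characters the original strips (not an authoritative list of what MOSS rejects) and produces the same output, so adding a newly discovered bad character means editing one string instead of adding another `Replace` line.

```csharp
using System.Text;

// Hypothetical table-driven equivalent of cleanPageName.
public static class PageNames
{
    // Exactly the characters removed by the original method, found by trial and error.
    private const string InvalidChars = " :,.?\\/=!;&*#";

    public static string Clean(string title)
    {
        var sb = new StringBuilder(title.Length + 5);

        // Trim the ends first (as the original does), then drop any invalid character.
        foreach (char c in title.Trim())
        {
            if (InvalidChars.IndexOf(c) < 0)
                sb.Append(c);
        }

        sb.Append(".aspx");
        return sb.ToString();
    }
}
```

For example, a title of `"Hello, World: Part 1!"` becomes `HelloWorldPart1.aspx`, matching what the original chain of `Replace` calls produces.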

I hope this code is somewhat useful in illustrating how you can create pages in code, set values of the fields in the resulting page, and check the pages in. You'll find a lot more examples of things like this in the MOSS SDK.

Now that my content was migrated, I had a site that actually looked like a site! I still had a couple of things to do, like creating an "All posts" page, setting up RSS feeds, outputting the current date and time in my master page, etc. All that and more in my next post! Until next time...