Using VM Fleet to Test the Performance of Storage Spaces Direct

Two years ago, when I worked with the Lenovo COSN team to ship their public cloud based on Windows Server 2012 R2, System Center 2012 R2 and Windows Azure Pack, we worked out a test procedure and a set of test scripts to validate the cloud platform. To simulate an IO-intensive workload, we deployed a batch of VMs with auto-logon scripts. Those scripts used fio to generate IO as soon as each VM was provisioned and started.

Now the Diskspd project (https://github.com/microsoft/diskspd) includes a set of scripts called VM Fleet (https://github.com/Microsoft/diskspd/tree/master/Frameworks/VMFleet), which uses a similar mechanism to test the performance of Storage Spaces Direct.

In this post, I will demonstrate how I use VM Fleet to test the performance of my hyper-converged cluster.

First of all, I deployed a 3-node Storage Spaces Direct cluster. Here is the configuration of the test machines.

DELL R720 (ECGCAT-R4-22, ECGCAT-R4-24, ECGCAT-R4-26)

- CPU: E5-2650

- Memory: 192GB

- Boot Drive: H710 with 4 15K 600GB SAS HDDs (RAID10)

- Data Drive: Micron P420m 1400GB

- NIC: Chelsio T580 40Gb (RDMA capable)

After running Enable-ClusterS2D, I ran the following cmdlet to create 3 CSVs, one for each node.

Get-ClusterNode |% {New-Volume -StoragePoolFriendlyName S2D* -FriendlyName $_ -FileSystem CSVFS_ReFS -Size 600GB -PhysicalDiskRedundancy 1}

Then I ran the cmdlet below to create an additional CSV to store the scripts, tools, image and results.

New-Volume -StoragePoolFriendlyName S2D* -FriendlyName collect -FileSystem CSVFS_ReFS -Size 138GB -PhysicalDiskRedundancy 1
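Before moving on, it can be worth confirming that the four volumes look the way you expect. The snippet below is a minimal sketch of how I would check that, assuming it is run on one of the cluster nodes; it only reads state and changes nothing. With -PhysicalDiskRedundancy 1 I would expect the virtual disks to show up as two-way mirrors, but treat that as something to verify on your own cluster rather than a guarantee.

```powershell
# List the cluster shared volumes with their state and current owner node.
Get-ClusterSharedVolume | Select-Object Name, State, OwnerNode

# Show resiliency and capacity details for the virtual disks behind the CSVs.
# FootprintOnPool is the raw pool capacity consumed, including mirror copies.
Get-VirtualDisk |
    Select-Object FriendlyName, ResiliencySettingName, NumberOfDataCopies, Size, FootprintOnPool
```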

Then I downloaded Diskspd with VM Fleet from https://github.com/microsoft/diskspd. Copy the VMFleet folder to one of the nodes in the cluster (in my case, I copied it to D:\) and run the script below from that node.

.\install-vmfleet.ps1 -source D:\VMFleet

Download the latest Diskspd from https://aka.ms/diskspd and copy DISKSPD.exe into the folder C:\ClusterStorage\Collect\Control\Tools.

Prepare a Server Core VHD with the following steps if you don't have one.

- I used Convert-WindowsImage.ps1 (https://gallery.technet.microsoft.com/scriptcenter/Convert-WindowsImageps1-0fe23a8f) to convert a Windows Server 2016 WIM image to a Server Core VHD.

- Use the above VHD to start a VM and set the initial password. (In my case, I used the password User@123.)

- Shut down the VM. (DON'T sysprep the VM.)

- Copy the VHD to C:\ClusterStorage\Collect\. (In my case, the VHD is ENGWS2016COREG2VM.vhdx.)

Run the following script from the folder C:\ClusterStorage\Collect\Control\. It creates 10 VMs on each node. This step might take anywhere from minutes to hours, depending on how many VMs you provision and on the performance of your hardware.

.\create-vmfleet.ps1 -basevhd "C:\ClusterStorage\Collect\ENGWS2016COREG2VM.vhdx" -vms 10 -adminpass User@123 -connectpass User@123 -connectuser "infra\administrator" -nodes "ECGCAT-R4-22","ECGCAT-R4-24","ECGCAT-R4-26"

I used the script below to change the VM configuration to 8 virtual cores and 16GB of static memory.

.\set-vmfleet.ps1 -ProcessorCount 8 -MemoryStartupBytes 16GB -DynamicMemory $false

Run the script below to start all the VMs.

.\start-vmfleet.ps1

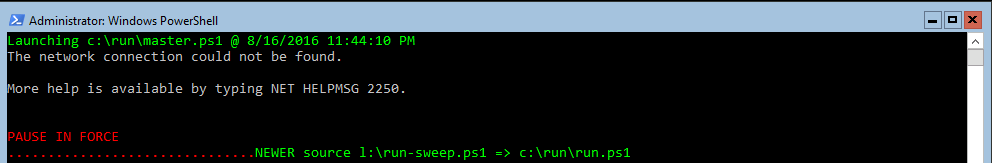

You may check the console of one or more VMs to make sure there are no errors.

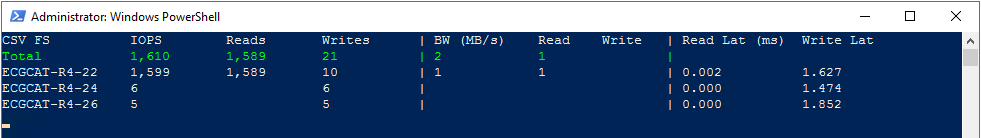
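Checking consoles one by one does not scale well to 30 VMs, so a quick summary across the nodes can help. The following is a rough sketch using the standard Hyper-V and failover clustering cmdlets, assuming it is run from a cluster node with rights on all nodes. Note that it counts every VM on those nodes, not only the ones VM Fleet created.

```powershell
# Names of all nodes in the current cluster.
$nodes = (Get-ClusterNode).Name

# Count the VMs on those nodes by state (Running, Off, ...).
Get-VM -ComputerName $nodes |
    Group-Object -Property State |
    Select-Object Name, Count

# Spot-check the CPU and memory settings applied earlier.
Get-VM -ComputerName $nodes |
    Select-Object ComputerName, Name, ProcessorCount, MemoryStartup, DynamicMemoryEnabled
```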

Monitor the storage performance by running the script below.

.\Watch-Cluster.ps1

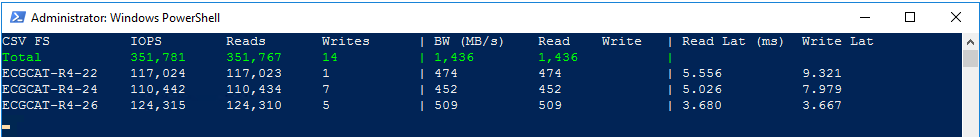

Last but most important, you can stress IO with the script below. In this example, all the VMs run Diskspd to test 4KB 100% random read IO for 5 minutes (2 threads, QD=40).

.\start-sweep.ps1 -b 4 -t 2 -o 40 -w 0 -d 300

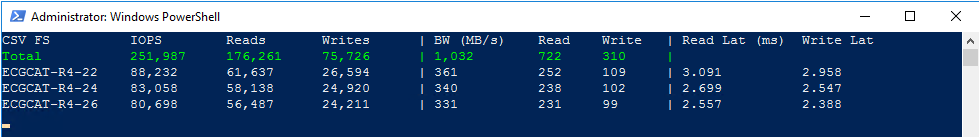
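To make the sweep parameters easier to relate to Diskspd itself, here is a small sketch that builds a roughly corresponding Diskspd argument string. The function name Get-DiskspdArguments and the target file path are hypothetical, and the -r, -Sh and -L switches are my own choices for a random, uncached run with latency statistics. I have not taken this line from the VM Fleet scripts, so the exact command they run inside the VMs may differ.

```powershell
# Hypothetical helper: maps the sweep-style parameters to Diskspd switches.
function Get-DiskspdArguments {
    param(
        [int]$BlockSizeKB,       # -b: block size in KB
        [int]$Threads,           # -t: threads per target
        [int]$OutstandingIO,     # -o: outstanding IOs per thread per target
        [int]$WritePercent,      # -w: percentage of writes (0 = read only)
        [int]$DurationSeconds,   # -d: test duration in seconds
        [string]$TargetFile      # hypothetical test file path inside the VM
    )
    # -r: random IO, -Sh: disable software and hardware write caching,
    # -L: collect latency statistics.
    "-b${BlockSizeKB}K -t$Threads -o$OutstandingIO -w$WritePercent -d$DurationSeconds -r -Sh -L $TargetFile"
}

# The 4KB 100% random read profile used above.
Get-DiskspdArguments -BlockSizeKB 4 -Threads 2 -OutstandingIO 40 `
    -WritePercent 0 -DurationSeconds 300 -TargetFile 'C:\run\testfile.dat'
```

Passing the resulting string to diskspd.exe inside a single VM is a handy way to reproduce one VM's share of the load when troubleshooting.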

You may also test other IO profiles, such as 4KB random read/write mixed IO (R/W: 70/30) with 2 threads and QD=20, using the command below.

.\start-sweep.ps1 -b 4 -t 2 -o 20 -w 30 -d 300
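When comparing profiles, it helps to know how much IO the whole fleet keeps in flight. Since Diskspd's queue depth applies per thread, a simple estimate is nodes × VMs per node × threads × QD. The helper below is a hypothetical sketch of that arithmetic, assuming each VM drives a single target file.

```powershell
# Hypothetical helper: estimates the outstanding IOs across the whole fleet,
# assuming one Diskspd target per VM.
function Get-FleetOutstandingIO {
    param(
        [int]$Nodes,
        [int]$VMsPerNode,
        [int]$Threads,
        [int]$QueueDepth
    )
    $Nodes * $VMsPerNode * $Threads * $QueueDepth
}

# 3 nodes x 10 VMs, 2 threads, QD=40 (the read-only profile): 2400
Get-FleetOutstandingIO -Nodes 3 -VMsPerNode 10 -Threads 2 -QueueDepth 40

# 3 nodes x 10 VMs, 2 threads, QD=20 (the 70/30 mixed profile): 1200
Get-FleetOutstandingIO -Nodes 3 -VMsPerNode 10 -Threads 2 -QueueDepth 20
```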