Measuring Concurrent Site Users – SharePoint 2010 and other

This post was written as I was working to analyze traffic on SharePoint 2010, but it could be used for any website log traffic analysis.

Measuring concurrent users on your web site over time is important to understand characteristics of the site’s performance—its overall throughput—based on its load. In fact, planning worksheets on TechNet tell us to find a number for concurrent users, but they don’t tell us how to get that number.

However, the idea of concurrent users is hard to pin down. What exactly does that mean? Conceptually, you might think of it as the number of users who are hitting the site right now. Ok, but what does right now mean? We really must define it as a specific duration of time, but how long? I’ve seen many calculations count the number of unique users every hour. Is that meaningful? I think in many cases, it’s not.

Let’s assume for the moment that your web site doesn’t use session state, it just gets requests and returns results. For this, it’s got to do some work to assemble the result. SharePoint especially has a lot of construction to do, gathering data from the database, the user profile, the file system, applying master pages, page layouts, maybe executing page controls and web parts, phew! So this is going to take some time on the server, and if another user sends a request, then those tasks will add to the overall load. But if that doesn’t happen until later in the same hour, then those tasks don’t really add to the overall load. So I think the duration should be less than an hour. Note, if your site does use session state, that changes things slightly… more later.

How short should the duration be?

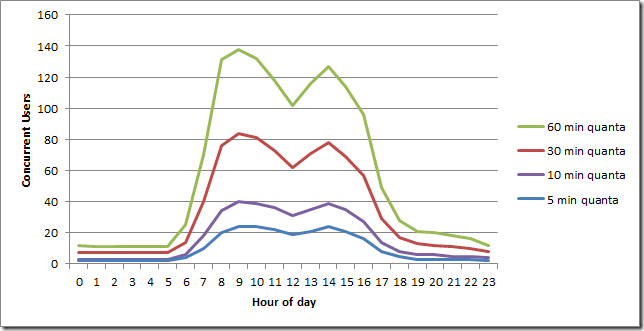

Let’s look at the impact of measuring logs using different durations. I used LogParser to query through some logs from a small SharePoint 2010 farm; 8 million requests over a 2 week period, collated and quantized into behavior per hour of day. This graph counts the number of distinct (unique) IP addresses that were found in four different-sized “buckets of time” which I’m calling quanta. It does not show the number of requests. For 5 minute quanta, I count the total number of unique IP addresses in each 5 minute period throughout the day, then average those results over each hour. For 60 minute quanta, I count the total number of unique IP addresses in that whole hour.

Wow, that’s quite a difference. If we quantize users into 5 minute buckets versus 60-minute buckets, we find a wide range of results. Designing a production infrastructure for SharePoint, I’m certainly going to want to know if it has to support 20 or 120 concurrent users during those busy parts of the day.
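The bucketing described above can be sketched in a few lines of Python. This is only an illustration of the idea (count distinct IPs per quantum, then average those counts for each hour of the day), not the LogParser script itself; the function name `concurrent_users_by_hour` and the sample requests are made up for the example.

```python
from collections import defaultdict
from datetime import datetime


def concurrent_users_by_hour(requests, quantum_seconds):
    """Average number of distinct IPs per quantum, grouped by hour of day.

    requests: iterable of (timestamp: datetime, ip: str) pairs.
    quantum_seconds: bucket size, e.g. 300 for 5 minutes
                     (assumed to divide 3600 evenly).
    """
    # Stage 1: collect the distinct IPs seen in each (date, bucket start) pair.
    ips_per_bucket = defaultdict(set)
    for ts, ip in requests:
        seconds_into_day = ts.hour * 3600 + ts.minute * 60 + ts.second
        bucket_start = seconds_into_day - seconds_into_day % quantum_seconds
        ips_per_bucket[(ts.date(), bucket_start)].add(ip)

    # Stage 2: group the per-bucket counts by the hour the bucket starts in.
    counts_per_hour = defaultdict(list)
    for (_, bucket_start), ips in ips_per_bucket.items():
        counts_per_hour[bucket_start // 3600].append(len(ips))

    # Stage 3: average the counts for each hour across all days and buckets.
    # Buckets with no requests never appear, so they do not pull the average down.
    return {hour: sum(c) / len(c) for hour, c in sorted(counts_per_hour.items())}


# Hypothetical sample: four visitors spread across the 9 o'clock hour.
sample = [
    (datetime(2012, 3, 1, 9, 1, 0), "10.0.0.1"),
    (datetime(2012, 3, 1, 9, 2, 0), "10.0.0.2"),
    (datetime(2012, 3, 1, 9, 2, 30), "10.0.0.1"),  # repeat visitor, same bucket
    (datetime(2012, 3, 1, 9, 31, 0), "10.0.0.3"),
    (datetime(2012, 3, 1, 9, 47, 0), "10.0.0.4"),
]
print(concurrent_users_by_hour(sample, 300))   # 5-minute quanta
print(concurrent_users_by_hour(sample, 3600))  # 60-minute quanta
```

With this sample, the 5-minute quanta average out to about 1.3 users for the hour, while the single 60-minute quantum reports 4. Same traffic, very different "concurrency," which is the effect the graph shows at a larger scale.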

Abstractly, I think the size of the quanta into which we count distinct users hitting the farm should be somewhat longer than it would take the server to “come to rest” after a heavy stream of incoming requests suddenly stopped, allowing all requests to be finished and results returned. It seems to me that after this time, since the server is done processing those requests, it can take more without additional load. Am I going to try and compute this time? Erm, no. I’m going to WAG it at about 5 minutes in general, based on some undocumented experiences over many years. But maybe the server could only finish all of its work in that time because nothing else was coming in. Ok, so let’s double it: 10 minutes.

Of course there’s a body of knowledge around load testing that might help us here. One site that has a lot of great detailed technical information about load testing is the blog at webperformance.com. For load testing, ramp-up is more important than the ramp-down abstraction above. They like to ramp-up over a long period—many minutes to several hours—in order to understand the behavior of the system under different loads. But ramp-down is more about leaving the system in a stable state, allowing all user requests to complete normally, instead of just killing all virtual user threads in-request. In this case, the ramp-down is dependent mostly on maximum request processing time, which is pretty much page latency. Now, we may want our page latency to be just a few seconds in most cases, allowing more on pages that do “complex” things like generate reports. But even with relatively fast pages, ramp-down takes a while longer. Following this line of thinking, the 5 minute and 10 minute durations for concurrency continue to seem more reasonable than larger buckets.

For another sanity check, how do people typically use SharePoint and other publishing/collaboration intranet sites? Again from personal experience, I tend to use individual sites in relatively short bursts. If I’m searching for information, I’ll want some results in just a few minutes, or I’m going to give up and try something different. Or if I’m collaborating, I’ll be uploading and downloading documents one at a time or a few at a time, then going off to work with those documents. So, I might have multiple SharePoint sites open all day long, but my direct interactions with them are bunched together, separated by other work. For me, the idea of 10 minutes well encompasses each of my individual direct interactions with collaborative sites such as SharePoint.

I’m really talking myself into this figure of 10 minutes as a guideline for measuring concurrency. And I welcome any debate on the topic… please post comments!

So, what if you do use session state on your site? For SharePoint, the typical session state available for developers is disabled by default (see my Session State blog posting for more information). But if you enable it, or are using another web site host with session state, then whenever a user sends an authenticated request to the server, it will set aside some resources to maintain that session, for some period of time. Way back in my ASP days, I recall 20 minutes being a common default, and it’s always configurable. If session state is maintained in the database, that’s fewer resources that the WFE servers must maintain, but it’s still something. In any event, if you’re using session state, you might want to use the session duration as your guideline for quantizing users to measure concurrency.

The script

Here’s the batch file with LogParser commands that I used to compute concurrent users by hour throughout the day. I know, I should have used PowerShell, but I only started with a couple lines and it just grew from there. Typical.

SET inputCSV=%1

if '%inputCSV%'=='' GOTO USAGE

REM outputCSV has no extension, it's added later

SET outputCSV=UserConcurrency

SET concurrencyPeriod=%2

if '%concurrencyPeriod%'=='' GOTO USAGE

SET concurrencyField=%3

if '%concurrencyField%'=='' SET concurrencyField=c-ip

REM Set a filter to match requests that should be excluded

REM %%Service%% matches our service accounts (like search), exclude them

REM ...if you don't want a filter, use SET filter=0 IS NULL

SET filter=(cs-username LIKE '%%Service%%')

echo.

echo Counting Concurrent Users

echo inputCSV : %inputCSV%

echo outputCSV : %outputCSV%.csv

echo concurrencyField : %concurrencyField%

echo concurrencyPeriod: %concurrencyPeriod% seconds

echo.

echo First stage, quantizing to %concurrencyPeriod% seconds...

logparser -i:CSV -o:CSV "SELECT DISTINCT %concurrencyField%, date, QUANTIZE(TO_TIMESTAMP(time,'hx:mx:sx'), %concurrencyPeriod%) AS Period, COUNT(*) as Requests INTO temp1-%outputCSV%.csv FROM %inputCSV% WHERE %concurrencyField% IS NOT NULL AND NOT %filter% Group By Date, Period, %concurrencyField%"

echo.

echo Second stage, grouping...

logparser -i:CSV -o:CSV "SELECT date, to_string(to_timestamp(Period,'hx:mx:sx'),'hh') as Hour, Period, count(%concurrencyField%) as UserCount, sum(Requests) as RequestCount INTO temp2-%outputCSV%.csv From temp1-%outputCSV%.csv Group by date, Period"

logparser -i:CSV -o:CSV "SELECT Hour, avg(UserCount) as Concurrent-Users(q%concurrencyPeriod%), sum(RequestCount) as Total-Requests(q%concurrencyPeriod%) INTO %outputCSV%-%concurrencyPeriod%.csv From temp2-%outputCSV%.csv GROUP BY Hour ORDER BY Hour"

GOTO DONE

:USAGE

echo.

echo # Usage:

echo #

echo # ConcurrentUsers inputCSV seconds [fieldname]

echo #

echo # inputCSV : csv file (or *.csv) of log entries with fields: date, time, c-ip or [fieldname], other

echo # seconds : concurrency quantization level. Typical values 300 and 600 seconds

echo # fieldname: field to evaluate for concurrency. Typical values c-ip (default) and cs-username

echo #

echo # Example : ConcurrentUsers BigO.csv 300

echo.

:DONE

Several things about this script.

The input is a CSV file. It must have fields named “date” and “time” at the very least, plus another field named “c-ip”. This field could have a different name, which you pass in as a parameter. This allows you to specify a different way to count users, such as cs-username, or maybe you want to count some other information quantized by time, maybe cs(User-Agent). I just default this to c-ip because I was working with a site that had anonymous access, and most of the cs-username values were null.

The file may have many other fields that come from the IIS logs.

The way I got my BigO.csv file was by following Mike Wise’s excellent whitepaper Analyzing SharePoint Usage: https://www.microsoft.com/download/en/details.aspx?id=4616. This will help you get started with LogParser in about 10 minutes (there’s that number again… an omen!). Some of his first steps show how to run LogParser on a whole set of raw IIS logs and collate them into a CSV file that has some extra info added, in order to do more analyses on that.

My script doesn’t require a CSV made in this way; this is just a good example of how to get one pretty easily from your logs. And of course, LogParser could just as easily be run directly against the logs, by using the option -i:IISW3C instead of -i:CSV. Mike’s paper talks about this too.

So, you can run this a few times and get results for different intervals that define your idea of concurrent use.

ConcurrentUsers BigO.csv 300

ConcurrentUsers BigO.csv 1200

ConcurrentUsers BigO.csv 3600

Running these in succession will output 3 files, UserConcurrency-300.csv, UserConcurrency-1200.csv and UserConcurrency-3600.csv, so you can easily compare for your analysis. It also creates some intermediate temp csv files that are useful to look into for sanity checking and understanding what the final output is telling you.
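To compare the runs side by side, a small Python sketch like the one below could merge the output files by hour. It assumes each output CSV has one header row, with the hour in the first column and the average concurrent users in the second, as the final SELECT in the script suggests. The exact header text LogParser writes is not confirmed here, so the code reads columns by position rather than by name. The function names `merge_concurrency_files` and `print_comparison` are hypothetical.

```python
import csv


def merge_concurrency_files(paths_by_quantum):
    """Combine per-quantum results into {hour: {quantum: avg_users}}.

    paths_by_quantum: dict mapping quantum seconds to a CSV path, e.g.
    {300: "UserConcurrency-300.csv"}. Assumes column 0 is the hour and
    column 1 is the average concurrent users, with one header row.
    """
    merged = {}
    for quantum, path in paths_by_quantum.items():
        with open(path, newline="") as handle:
            reader = csv.reader(handle)
            next(reader, None)  # skip the header row
            for row in reader:
                if len(row) < 2:
                    continue  # ignore blank or malformed lines
                merged.setdefault(row[0], {})[quantum] = float(row[1])
    return merged


def print_comparison(merged, quanta):
    """Print one line per hour with a column for each quantum."""
    print("Hour  " + "  ".join(f"q{q:>5}" for q in quanta))
    for hour in sorted(merged):
        # Show "nan" where a run produced no row for that hour.
        cells = (f"{merged[hour].get(q, float('nan')):6.1f}" for q in quanta)
        print(f"{hour:>4}  " + "  ".join(cells))
```

Calling `merge_concurrency_files({300: "UserConcurrency-300.csv", 1200: "UserConcurrency-1200.csv", 3600: "UserConcurrency-3600.csv"})` and passing the result to `print_comparison` with `[300, 1200, 3600]` would print one row per hour, which makes the spread between quanta easy to eyeball before charting it.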

Oh, what’s that “SET filter=0 IS NULL” for? I just wanted an easy way to shut off the filter. Since the filter appears in the WHERE clause as AND NOT %filter% , I need something that evaluates to FALSE, meaning “don’t filter,” and as everyone knows, 0 is not null. Stupid geeks and their jokes.

Well, best of luck in this grey area. Let me know if any of this looks wrong please!!!

Comments

Anonymous

March 15, 2012

Hi Mark, excellent post. The number of concurrent users is definitely very useful for planning your SharePoint farm. If it should be 10 mins? I tend to agree. What I often find useful as well is to analyze the performance from the client side. I have extended the so called CopyTimer tool from MSFT where certain tests are performed and stats uploaded to a SharePoint list. Very nice. I have distributed the .exe around the world, asking users to schedule the tool to run a number of times each day during their office hours. I have found that information even more useful than server logs as this really tells you that uploading a document might take 6 times longer from the APAC region when compared to AMERICAS for instance. Just a thought. :-) Keep up the good work! My blog: hermansberghem.blogspot.com Twitter: @servehermans

Anonymous

April 18, 2012

Do you have any decent resources that can map these results to recommended server specs or service architecture? For example, at what point is it a good idea to take a heavily used service on a larger farm and place it on its own app server? There is a lot of info on TechNet about how to set up service architectures, but not as much about why or when to split off services, in terms of an objective set of criteria. Thanks for the excellent post!