Kubernetes Ingress in Azure (Government)

In a previous blog post, I showed that it is easy to set up a Kubernetes cluster in Azure Government (or Commercial) and deploy web applications to it. That example deployment lacked some features; specifically, we did not configure an SSL certificate for the application. Adding one is straightforward with Kubernetes Ingress. In this blog post, I will explain what Ingress is and show how to add HTTPS support to web applications.

What are Kubernetes Ingress and Ingress Controllers

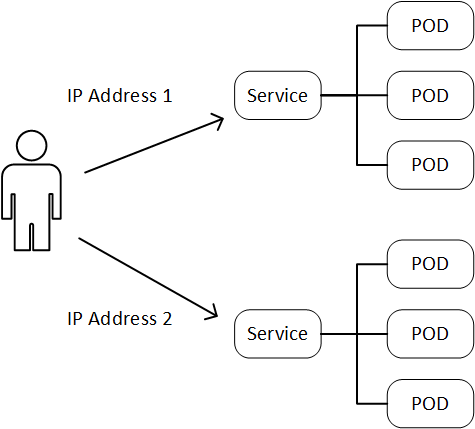

Workloads deployed in Kubernetes Pods can be exposed outside the cluster through a Service resource. A Service is a logical grouping of Pods together with a policy that defines how they can be accessed. In my previous blog post, I demonstrated this with a simple web app workload. Now imagine that you have multiple web applications running in the same Kubernetes cluster. If each of them is exposed with its own LoadBalancer Service, each web application gets its own separate IP address:

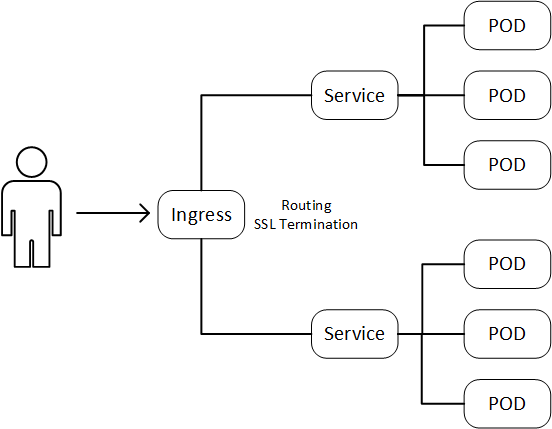

This doesn't scale particularly well, since every additional application needs another load balancer IP address. Furthermore, there may be other things you want to do before traffic reaches the backend application, e.g., SSL termination. Kubernetes Ingress rules can handle all of this. The topology would look like this:

Ingress is essentially a way to define a reverse proxy in front of your applications. The simplified diagram hides the fact that two kinds of components are involved: the Ingress Controller, which does the actual routing, and the Ingress Resources, which contain the rules you define for each application. There are a number of Ingress Controllers to choose from; in this example, I will use the Nginx Ingress Controller. Once the Ingress Controller is set up, you can define rules for accessing the applications. Until an Ingress Controller is running, the Ingress rules have no effect.
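To make the reverse-proxy idea concrete, here is a minimal sketch of a shell helper that prints a single Ingress resource routing several host names to different Services, all behind the same controller and its one external IP. The helper name `make_fanout_ingress` and any host or Service names you pass to it are hypothetical. It uses the same `extensions/v1beta1` Ingress schema as the manifest later in this post and assumes every backend Service listens on port 80.

[shell]
# Hypothetical helper: print an Ingress that sends each host to its own Service.
# Arguments: INGRESS-NAME HOST=SERVICE [HOST=SERVICE ...]
# Assumes every backend Service listens on port 80 (like the example app).
make_fanout_ingress() {
  name="$1"
  shift
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ${name}
spec:
  rules:
EOF
  for pair in "$@"; do
    host="${pair%%=*}"   # text before the first "="
    svc="${pair#*=}"     # text after the first "="
    cat <<EOF
  - host: ${host}
    http:
      paths:
      - path: /
        backend:
          serviceName: ${svc}
          servicePort: 80
EOF
  done
}
[/shell]

For example, piping `make_fanout_ingress web-fanout app1.example.com=app1-service app2.example.com=app2-service` into `kubectl apply -f -` would expose two applications through one controller. On newer clusters, Ingress lives in `networking.k8s.io/v1`, where the backend is written as `service: {name: ..., port: {number: ...}}` and `pathType` is required, so the template would need adjusting there.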

Deploying the Ingress Controller

You can read more about Ingress in the Kubernetes documentation, and the ingress-nginx project documents how to set up the Nginx Ingress Controller in more detail.

To set up an Ingress Controller, you can use the command:

[shell]

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml

[/shell]

The next step is to add a Service that accepts external connections for the Ingress Controller:

[shell]

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/provider/cloud-generic.yaml

[/shell]

This last command exposes the Ingress Controller outside the Kubernetes cluster on an IP address. By default, that IP address is public. In a later blog post, I will discuss setting up private Kubernetes clusters, and we will use a modified version of this Service definition for the Ingress Controller.

You can check that the Ingress Controller is running:

[shell]

kubectl get pods --all-namespaces -l app=ingress-nginx

[/shell]

And you should see something like this:

[shell]

NAMESPACE       NAME                                        READY   STATUS    RESTARTS   AGE
ingress-nginx   nginx-ingress-controller-58c9df5856-tjl5z   1/1     Running   0          42s

[/shell]
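Rather than re-running the command until the pod shows Running, you can let `kubectl wait` block until the controller pod reports the Ready condition. This is a sketch that assumes the `ingress-nginx` namespace and the `app=ingress-nginx` label shown in the output above; the function name and the 120-second default timeout are hypothetical choices.

[shell]
# Hypothetical helper: block until the ingress-nginx controller pod(s) are Ready.
# Namespace and label come from the sample output above; adjust if yours differ.
# Optional argument: timeout (default 120s). kubectl exits non-zero if it expires.
wait_for_ingress_pods() {
  kubectl wait --namespace ingress-nginx \
    --for=condition=Ready pod \
    --selector=app=ingress-nginx \
    --timeout="${1:-120s}"
}
[/shell]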

To find the IP address of the Ingress Controller (Service):

[shell]

kubectl get svc --all-namespaces -l app=ingress-nginx

[/shell]

The output should look like this:

[shell]

NAMESPACE       NAME            TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx   ingress-nginx   LoadBalancer   10.0.219.211   23.XX.X.XX    80:30360/TCP,443:30901/TCP   4m

[/shell]
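The EXTERNAL-IP column shows `<pending>` while Azure provisions the load balancer. A small polling loop using kubectl's JSONPath output can print the address once it is assigned. The function name, the default of 60 attempts, and the five-second interval are arbitrary choices for illustration; the namespace and Service name come from the output above.

[shell]
# Hypothetical helper: poll until the LoadBalancer Service has an external IP, then print it.
# Arguments (all optional): NAMESPACE SERVICE ATTEMPTS INTERVAL-SECONDS
wait_for_ingress_ip() {
  ns="${1:-ingress-nginx}"
  svc="${2:-ingress-nginx}"
  attempts="${3:-60}"
  interval="${4:-5}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Empty until the cloud provider has assigned the IP address
    ip=$(kubectl get svc "$svc" --namespace "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "no external IP for $ns/$svc after $attempts attempts" >&2
  return 1
}
[/shell]

For example, `INGRESS_IP=$(wait_for_ingress_ip)` stores the address for use in later commands.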

Now we are ready to deploy a workload that leverages the Ingress Controller.

Deploying a web application with an SSL certificate

Now that we have an Ingress Controller, we can define Ingress rules that use it. My acs-utils GitHub repository contains an example manifest for deploying a web application with an SSL certificate; I have repeated it here:

[plain]

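# Deployment: one replica of the sample ASP.NET web app, listening on port 80
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: web-service
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: web-service
    spec:
      containers:
      - name: dotnetwebapp
        image: hansenms/aspdotnet:0.1
        ports:
        - containerPort: 80
---
# Service: ClusterIP only, so the app gets no public IP of its own;
# external traffic reaches it through the Ingress Controller
apiVersion: v1
kind: Service
metadata:
  name: frontend-service
spec:
  type: ClusterIP
  selector:
    app: web-service
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
---
# Ingress: terminate TLS for the host with the certificate stored in the
# cloudynerd-us secret, then forward all paths to frontend-service
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web-ingress
spec:
  tls:
  - hosts:
    - mycrazysite.cloudynerd.us
    secretName: cloudynerd-us
  rules:
  - host: mycrazysite.cloudynerd.us
    http:
      paths:
      - backend:
          serviceName: frontend-service
          servicePort: 80
        path: /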

[/plain]

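Note that the Ingress in the manifest references a "secretName" (cloudynerd-us) in its tls settings. The certificate must be stored in a Kubernetes TLS secret with that name, in the same namespace as the Ingress. To upload the SSL certificate into a secret, use the following command: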

[shell]

kubectl create secret tls SECRET-NAME --cert FULL-CHAIN-CERT.pem --key KEY-FOR-CERT.pem

[/shell]
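Before creating the secret, it can save some debugging to confirm that the certificate file actually contains a chain and that the private key belongs to the leaf certificate. The sketch below uses the `openssl` command-line tool; the function names are hypothetical, and it assumes PEM-encoded files like the ones Let's Encrypt issues.

[shell]
# Hypothetical pre-flight checks for the files passed to `kubectl create secret tls`.

# Print how many PEM certificates a file contains (a full chain normally has at least 2).
count_certs() {
  grep -c -e '-----BEGIN CERTIFICATE-----' "$1"
}

# Succeed only if the private key matches the first (leaf) certificate in the file,
# by comparing the public key in the certificate with the one derived from the key.
cert_matches_key() {
  cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 1
  key_pub=$(openssl pkey -in "$2" -pubout) || return 1
  [ "$cert_pub" = "$key_pub" ]
}
[/shell]

For example, `[ "$(count_certs FULL-CHAIN-CERT.pem)" -ge 2 ] && cert_matches_key FULL-CHAIN-CERT.pem KEY-FOR-CERT.pem` should succeed before you run `kubectl create secret tls`.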

If you don't have a certificate, you can get one from Let's Encrypt; I have written about creating wildcard certificates with Let's Encrypt. Use the full-chain certificate, so that the Ingress Controller also sends the intermediate certificates and clients can validate the chain.

After deploying the secret, you can deploy the web app:

[shell]

kubectl apply -f manifests/web-service-ingress.yaml

[/shell]

If the web application is already running behind a LoadBalancer Service (as it would be if you followed the examples in my previous post), you may have to delete that Service before applying the manifest (kubectl delete svc SERVICE-NAME).
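The cleanup and deployment steps can be scripted together. This is a hedged sketch: it assumes the Service from the previous post is also named `frontend-service` in the current namespace, and the function name is hypothetical. `kubectl rollout status` then waits until the Deployment's pods are available.

[shell]
# Hypothetical helper: deploy the web app behind the Ingress.
# Assumes any Service left over from the previous post is named frontend-service.
deploy_web_service() {
  manifest="${1:-manifests/web-service-ingress.yaml}"
  # Remove an existing LoadBalancer Service so the app is exposed only via the Ingress
  svc_type=$(kubectl get svc frontend-service -o jsonpath='{.spec.type}' 2>/dev/null)
  if [ "$svc_type" = "LoadBalancer" ]; then
    kubectl delete svc frontend-service || return 1
  fi
  kubectl apply -f "$manifest" || return 1
  # Wait until the Deployment's pods are up
  kubectl rollout status deployment/web-service
}
[/shell]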

You can validate that the certificate is working with curl:

[shell]

curl --resolve mycrazysite.cloudynerd.us:443:XX.XX.XX.XX https://mycrazysite.cloudynerd.us

[/shell]

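Replace XX.XX.XX.XX with the IP address of your Ingress Controller and use your own domain name. The --resolve option makes curl connect to that IP address while still using the domain name for SNI and certificate validation, so you can test before DNS is configured. You should not see any SSL validation errors. You can also set up a DNS record and confirm in a browser that the site is served securely.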
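For repeated checks, a slightly more script-friendly variant of the curl test is sketched below. It prints only the HTTP status code and returns non-zero on TLS errors or HTTP error responses. The function name and its two arguments (host name, Ingress IP) are hypothetical.

[shell]
# Hypothetical helper: request https://HOST/ from a specific IP and print the HTTP status code.
# curl validates the certificate against HOST; a TLS failure, or an HTTP status >= 400
# (because of --fail), makes the function return non-zero.
check_https() {
  host="$1"
  ip="$2"
  curl --silent --show-error --fail \
    --output /dev/null --write-out '%{http_code}\n' \
    --resolve "${host}:443:${ip}" "https://${host}/"
}
[/shell]

For example, `check_https mycrazysite.cloudynerd.us XX.XX.XX.XX` should print a 2xx status code once the certificate and routing are in place.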

Conclusions and next steps

In this blog post, we have seen that it is straightforward to set up an Ingress Controller on a Kubernetes cluster in Azure (Government). Once the Ingress Controller is configured, you can use it as a reverse proxy for multiple workloads and it can also be used for SSL termination as demonstrated here. So far we have focused on Kubernetes clusters with public endpoints for workloads. This may not be the preferred approach for Government applications. In a future blog post, I will show how to set up a private Kubernetes cluster and deploy workloads to it.

Let me know if you have questions/comments/suggestions.
