Notes

As mentioned in my previous post, you should run Log Parser on the logs for a specific day. To do so, copy the log files from the different load-balanced front-end servers to a central location and run the Log Parser queries there.
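You may want to merge and pre-filter the copied files with a script before running the queries. The following Python sketch shows one way to do that. It assumes the files use the W3C extended format that IIS writes by default, with column names declared in a `#Fields:` directive. The function names and the `source_server` tag are hypothetical. Note that IIS records W3C log times in UTC by default, so a local business day may span two log files.

```python
from datetime import date
from typing import Dict, Iterable, Iterator, List


def parse_w3c(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield one dict per request line of a W3C extended log (IIS default format).

    Column names are taken from the most recent '#Fields:' directive, so a file
    whose field list changes part-way through is still read correctly.
    """
    fields: List[str] = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#"):
            if line.startswith("#Fields:"):
                fields = line[len("#Fields:"):].split()
            continue  # skip #Software, #Version, #Date and other directives
        values = line.split()
        if fields and len(values) == len(fields):
            yield dict(zip(fields, values))


def merge_day(servers: Dict[str, Iterable[str]], day: date) -> List[Dict[str, str]]:
    """Merge the log lines of several front-end servers, keeping a single day."""
    wanted = day.isoformat()  # the W3C 'date' field is written as YYYY-MM-DD
    merged: List[Dict[str, str]] = []
    for server, lines in servers.items():
        for entry in parse_w3c(lines):
            if entry.get("date") == wanted:
                entry["source_server"] = server  # hypothetical tag, not an IIS field
                merged.append(entry)
    merged.sort(key=lambda e: (e["date"], e.get("time", "")))
    return merged
```

In practice, each value in `servers` could be an open file object for one server's log, because a text file iterates line by line.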

Because the results cover a single day, the choice of that day is critical. Weekends usually see less use than weekdays. Moreover, some months are quieter because of higher vacation rates or business reasons. The summer months are a good example: fewer users work on the system then than during the rest of the year.

A good strategy is to run the scripts for several different days to get a clearer view of current system usage. When designing the new environment, keep in mind that it must handle the peak load during the peak period and still leave room for future growth. It is therefore important to identify the peak period and run the usage analysis on it.
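Once you have one summary figure per sampled day, comparing the days is easy to script. The Python sketch below assumes you have already computed the peak RPS for each sampled day, for example with the Log Parser queries. It separates weekdays from weekends, picks the busiest day, and applies a growth allowance. The function names and any growth figure you pass in are hypothetical placeholders, not values from this analysis.

```python
from datetime import date
from statistics import mean
from typing import Dict, Tuple


def summarize_days(peak_rps_by_day: Dict[date, float]) -> Tuple[date, float, float]:
    """Return (busiest day, mean weekday peak RPS, mean weekend peak RPS)."""
    weekday_vals = [v for d, v in peak_rps_by_day.items() if d.weekday() < 5]   # Mon-Fri
    weekend_vals = [v for d, v in peak_rps_by_day.items() if d.weekday() >= 5]  # Sat-Sun
    busiest = max(peak_rps_by_day, key=lambda d: peak_rps_by_day[d])
    weekday_mean = mean(weekday_vals) if weekday_vals else 0.0
    weekend_mean = mean(weekend_vals) if weekend_vals else 0.0
    return busiest, weekday_mean, weekend_mean


def design_target(peak_rps: float, growth: float) -> float:
    """Peak RPS the new environment should sustain, with a growth allowance (0.5 = +50%)."""
    return peak_rps * (1.0 + growth)
```

Sampling a few weekdays and weekend days from both busy and quiet months makes the gap between them visible. The design target should then be based on the busiest day rather than on an average.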

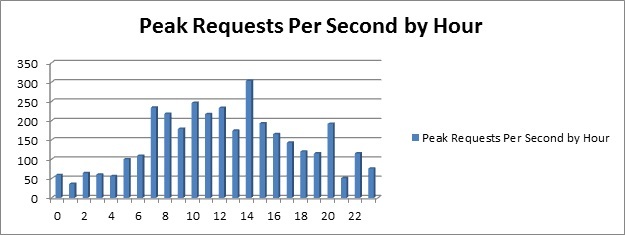

The Log Parser queries from my previous blog post produce tabular output, but you can use that output to build graphs that highlight the required values. Sample graphs of the results of those queries follow:

Peak Requests Per Second (Peak RPS)

Average Requests Per Second (Average RPS)

Distinct Users Per Hour:
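The queries behind these graphs are in the previous post. As a cross-check, the same three metrics can also be computed directly from parsed log entries. This Python sketch is a re-implementation, not the original queries. It assumes one day of entries with the IIS `time` and `cs-username` fields, defines average RPS as the requests in an hour divided by 3,600 seconds, treats `-` (anonymous) as no user, and compares account names case-insensitively. The original queries may define these slightly differently.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple


def hourly_metrics(entries: Iterable[Dict[str, str]]) -> Dict[int, Tuple[int, float, int]]:
    """Map each hour (0-23) to (peak RPS, average RPS, distinct users).

    Assumes all entries come from the same day and carry IIS 'time' (HH:MM:SS)
    and, optionally, 'cs-username' fields.
    """
    per_second: Counter = Counter()                  # 'HH:MM:SS' -> request count
    users: Dict[int, Set[str]] = defaultdict(set)    # hour -> distinct user names
    for e in entries:
        t = e["time"]
        per_second[t] += 1
        user = e.get("cs-username", "-")
        if user != "-":                              # '-' marks an anonymous request
            users[int(t[:2])].add(user.lower())      # case-insensitive account names

    by_hour: Dict[int, list] = defaultdict(list)     # hour -> per-second counts
    for t, count in per_second.items():
        by_hour[int(t[:2])].append(count)

    return {
        h: (max(counts), sum(counts) / 3600.0, len(users.get(h, ())))
        for h, counts in sorted(by_hour.items())
    }
```

Each tuple corresponds to one point on the Peak RPS, Average RPS and Distinct Users Per Hour graphs.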

Using these graphs and the accompanying tables, you should be able to build the modeling tables mentioned earlier. They are sufficient to identify the current load on the system and serve as the basis for estimating the future load on the new system.

Comments

Anonymous

September 29, 2011

This is an amazing tool and a very nice blog article! Other than user analysis, in what other ways can the log data be used? Maybe the Request Type and Target info can be used to analyze things like the most commonly accessed sites/web parts/features?

Anonymous

October 11, 2011

Yes, this tool can do much more than I used in the analysis above. Just keep in mind that it gets its input from the IIS logs, so it is limited by the information in those logs. One possibility is to analyze the data by agent type (user-agent). In that case, the query looks like this:

`logparser -i:IISW3C "select count(*) as ct,cs(user-agent) from *.log group by cs(user-agent) order by ct desc"`

Another possibility is to analyze by web page, using the "cs-uri-stem" and "cs-uri-query" fields. An example is:

`logparser -i:IISW3C "select count(*) as ct,TO_LOWERCASE(EXTRACT_EXTENSION(cs-uri-stem)) as ext from *.log group by ext order by ct desc"`
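If Log Parser is not at hand, the two queries above can be approximated in Python over entries parsed from a W3C log. This is a sketch, not Log Parser's implementation. It assumes each entry is a dict keyed by the IIS field names `cs(User-Agent)` and `cs-uri-stem`, and the function names are hypothetical. Edge cases, such as URLs without an extension, may be reported differently than by `EXTRACT_EXTENSION`. IIS writes spaces in the user-agent string as `+`, so agent strings appear in that form.

```python
import posixpath
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def count_by_user_agent(entries: Iterable[Dict[str, str]]) -> List[Tuple[str, int]]:
    """Roughly: select count(*) as ct, cs(user-agent) ... group by cs(user-agent) order by ct desc."""
    counts = Counter(e.get("cs(User-Agent)", "-") for e in entries)
    return counts.most_common()  # sorted by count, highest first


def count_by_extension(entries: Iterable[Dict[str, str]]) -> List[Tuple[str, int]]:
    """Roughly: select count(*) as ct, TO_LOWERCASE(EXTRACT_EXTENSION(cs-uri-stem)) as ext ..."""
    counts = Counter(
        posixpath.splitext(e["cs-uri-stem"])[1].lstrip(".").lower()  # '/a/B.ASPX' -> 'aspx'
        for e in entries
    )
    return counts.most_common()
```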