Monitoring Runbooks / Azure Automation + Log Analytics

A while ago, I wrote a couple of posts showing how we collect and store Azure Automation runbook job history for monitoring and support, using a custom runbook and an Azure SQL database.

Since then, I have learned that Log Analytics for Azure Automation is available in preview. After setting it up and taking it for a quick spin around the block, I've found it to be a fantastic resource for proactively monitoring the workflows we run in Azure Automation.

We have been using OMS alerting for a couple of weeks now, with great success, to alert us when certain workflows fail. Operations teams are notified when there are problems and can fix them before customers have to escalate.

In addition to alerting, Log Analytics offers an alternative way to get counts and totals from job history; it's really pretty neat.

Details on setup are below. Setup itself is pretty simple; the tricky part is learning to write the queries you need for monitoring and alert rules.

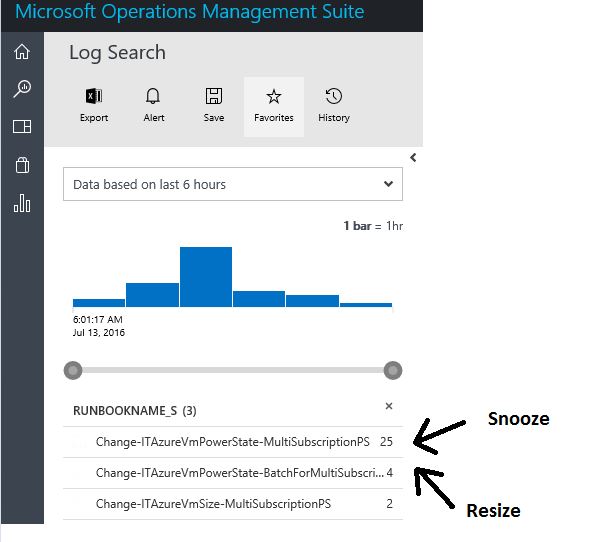

Below is an example of a view on the OMS portal which shows counts of failures for a couple of important runbooks we have made available to customers to enable self-service jobs we call: Snooze (shutdown and deallocate an Azure VM or bring it back online) and Resize (Change the SKU of an Azure VM.):

[caption id="attachment_185" align="alignnone" width="612"] Failures by runbook[/caption]

Failures by runbook[/caption]
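At its core, a view like this boils down to "keep the failed jobs, then count them per runbook." The PowerShell sketch below shows that logic locally against a few made-up job records. This is only a way to reason about (or sanity-check) the numbers a view shows; it is not how OMS builds the view. Several details are assumptions for illustration:

- The function name `Get-FailureCountByRunbook` is hypothetical.
- The sample data is invented.
- The `ResultType` property is assumed. Only `RunbookName_s` appears in the searches discussed in this post.

```powershell
# Hypothetical helper: counts failed jobs per runbook.
# Assumes each record has RunbookName_s (as seen in Log Analytics searches)
# and a ResultType property whose value is 'Failed' for failed jobs (assumption).
function Get-FailureCountByRunbook {
    param(
        [Parameter(Mandatory)]
        [object[]] $JobRecords
    )

    # Keep only failed jobs, group them by runbook name, and emit one row per
    # runbook, ordered from most to fewest failures
    $JobRecords |
        Where-Object { $_.ResultType -eq 'Failed' } |
        Group-Object -Property RunbookName_s |
        Sort-Object -Property Count -Descending |
        ForEach-Object {
            [pscustomobject]@{
                RunbookName = $_.Name
                Failures    = $_.Count
            }
        }
}

# Made-up sample records for illustration only
$sampleJobs = @(
    [pscustomobject]@{ RunbookName_s = 'Snooze'; ResultType = 'Completed' }
    [pscustomobject]@{ RunbookName_s = 'Snooze'; ResultType = 'Failed' }
    [pscustomobject]@{ RunbookName_s = 'Resize'; ResultType = 'Failed' }
    [pscustomobject]@{ RunbookName_s = 'Snooze'; ResultType = 'Failed' }
    [pscustomobject]@{ RunbookName_s = 'Resize'; ResultType = 'Completed' }
)

Get-FailureCountByRunbook -JobRecords $sampleJobs | Format-Table
```

In Log Analytics itself, you would express the same filter and count in the search query rather than in a script.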

Setting up Log Analytics

Setup is pretty straightforward. Below is an example of how we got everything up and running:

```powershell
# Connect to Azure
Login-AzureRmAccount

# Configure a storage account for logs and set up diagnostics on your Automation Account
# (assumes an ARM storage account in the same region as the Automation Account)
$AA = Find-AzureRmResource -ResourceNameContains "MyAutomationAccount" -ResourceType Microsoft.Automation/AutomationAccounts
$SA = Find-AzureRmResource -ResourceNameContains "MyStorageAccount"
Set-AzureRmDiagnosticSetting -ResourceId $AA.ResourceId -StorageAccountId $SA.ResourceId

# Enable logs in Log Analytics following these instructions:
# https://azure.microsoft.com/en-us/documentation/articles/log-analytics-powershell-azure-diagnostics-json

# Storage account that logs will be written to
$storageAccountId = "/subscriptions/1aba1abc-1234-5678-acb1-234567def1234/resourceGroups/automation_analytics/providers/Microsoft.Storage/storageAccounts/MyStorageAccount"

$supportedResourceTypes = ("Microsoft.Automation/AutomationAccounts")

# Location must match your storage account location
$resources = (Get-AzureRmResource).Where({$_.ResourceType -in $supportedResourceTypes -and $_.Location -eq "eastus2"})

foreach ($resource in $resources) {
    Set-AzureRmDiagnosticSetting -ResourceId $resource.ResourceId -StorageAccountId $storageAccountId -Enabled $true -RetentionEnabled $true -RetentionInDays 1
}

# Install the AzureDiagnosticsAndLogAnalytics module from the PowerShell Gallery
Install-Module -Name AzureDiagnosticsAndLogAnalytics

# Run the UI configuration script
# (guides you through configuring Log Analytics to collect logs from Azure diagnostics storage)
Add-AzureDiagnosticsToLogAnalyticsUI

# Open your OMS workspace and search Log Analytics for Type=AutomationAccounts
# https://mysuite.portal.mms.microsoft.com/
```

Comments

- Anonymous

January 17, 2017

So I did all that. But how do I make a log search on the run book so I can put alerts on it? TYPE=AutomationAccount?

- Anonymous

January 17, 2017

Something like this should work if you are looking for executions of a given runbook with a particular string in the output, such as a failure you may want to alert on:

`Type=AutomationAccounts RunbookName_s="MyRunbookName" "SomeStringInTheRunbookOutput"`

- Anonymous

January 18, 2017

Thanks for that, and thank you for the post! I was trying type=AutomationAccounts; it needs the capital T. Also, there was no data for the runbooks because none had been run since I enabled logging. Previous jobs are not in the log; only new jobs are added as they run. (FYI for future newbs like me: run the runbooks after you set this up so you have data to look at. Looking at it the next day helped because the script ran at night.)
