Simple and robust way to operationalise Spark models on Azure

Let's operationalise a Databricks regression model

Lately I've been doing a lot of work with Azure Databricks, which is a superb Spark-based analytics platform fully integrated with Azure. It gives you everything that open source Spark does and then some. I've especially enjoyed how effortlessly it moves large datasets around and how easy MLlib is to use in my AI projects.

One of the questions with simpler models like regressions and clusterings is always how to operationalise them so that the rest of the organisation can consume them by calling web services. Luckily there is a model export feature that takes us almost all the way. It exports Spark MLlib models so that they can run anywhere a JVM is available ... no Spark needed.

So I decided to build the missing half: wrap the model inside a simple Java web app and deploy it to Azure to be consumed.

Some theory

First, read this article to properly understand the model export feature: https://docs.databricks.com/spark/latest/mllib/model-export.html

Then you might want to take a look at some sample code for instantiating the exported models and using them for predictions:

https://github.com/databricks/databricks-ml-examples/tree/master/model-export-demo

Finally, you should download my sample IntelliJ project and use it as a base for your own operationalising:

https://petsablob.blob.core.windows.net/share/siirto/modeltestwebapp.zip

Important bits

The solution itself is ridiculously simple and I'll go through it here for your pleasure.

I set up a basic Maven archetype web app project with IntelliJ IDEA and added one utility class and one library (read the docs if in doubt).

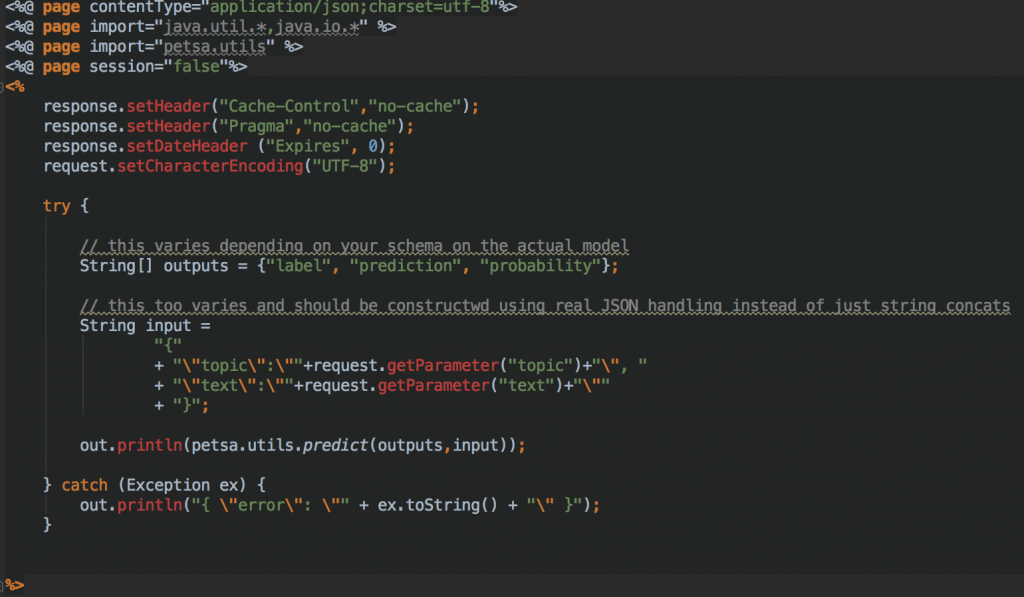

This is my index.jsp file, which does the interfacing with the caller.

Here we basically set up the parameter block structure that goes into the model and take values from the web request that was sent to us. The request could be something like this: https://YOURSERVER.azurewebsites.net/?topic=sci.space&text=space%20the%20final%20frontier

Then the values are handed over to our utility class to be acted upon and the result is returned to the caller ... not too complex, eh?
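The parameter handling described above could look roughly like the sketch below. It is not the code from the sample project: the `ParameterBlock` class and its methods are hypothetical names, and it assumes the model takes its input as a JSON string with `topic` and `text` fields, matching the example request. In the JSP the map would be filled from the request parameters.

```java
import java.util.Map;

// Hypothetical helper (not from the sample project): turns the request
// parameters into the JSON parameter block that is handed to the model.
final class ParameterBlock {
    private ParameterBlock() {}

    // Escape characters that may not appear verbatim inside a JSON string.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:   sb.append(c);
            }
        }
        return sb.toString();
    }

    // Treat a missing parameter as an empty string.
    private static String valueOrEmpty(Map<String, String> params, String key) {
        String value = params.get(key);
        return value == null ? "" : value;
    }

    // Assumption: the model expects exactly the fields "topic" and "text".
    static String toJson(Map<String, String> requestParams) {
        return "{\"topic\":\"" + escape(valueOrEmpty(requestParams, "topic"))
                + "\",\"text\":\"" + escape(valueOrEmpty(requestParams, "text")) + "\"}";
    }
}
```

Escaping matters here because the text comes straight from the caller; a stray quote would otherwise break the parameter block before it ever reaches the model.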

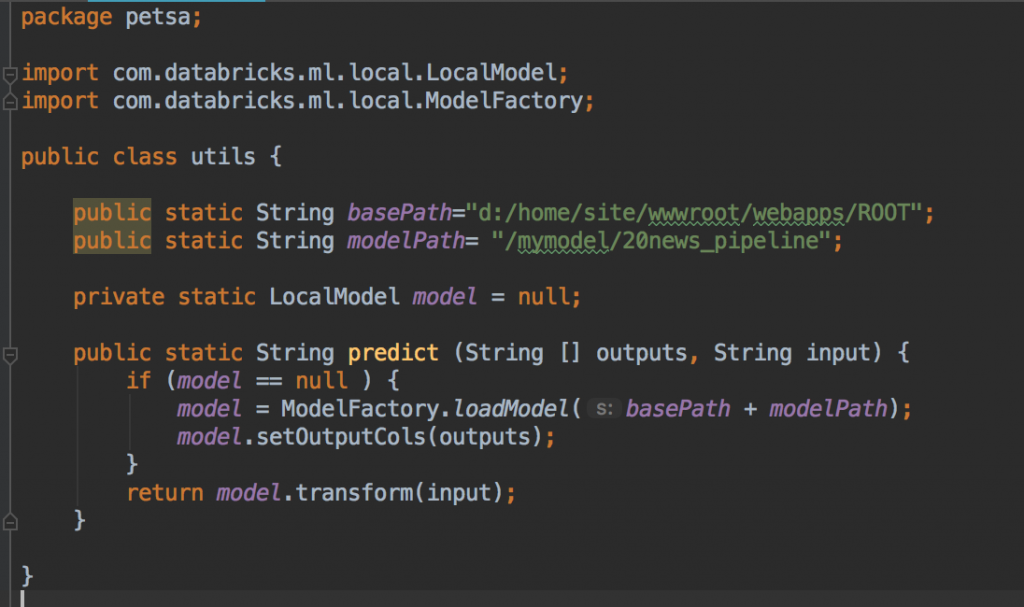

The utility class is even simpler ...

It knows how Azure treats Java apps and how to refer to files contained in the WAR package (our model files, that is).

Knowing all this, it creates a static instance of the model and starts churning out predictions when asked to do so.
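The static-instance idea can be sketched as follows. This is only an illustration under stated assumptions, not the utility class from the sample project: `LocalPredictor` is a hypothetical stand-in for the model type that the export library provides, `ModelHolder` and the `WEB-INF/classes` location are made-up names, and the actual loading is passed in as a function so the sketch stays independent of any particular library.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.function.Function;

// Hypothetical stand-in for the exported model type; the real project
// would use the class provided by the model export library instead.
interface LocalPredictor {
    String predict(String jsonInput);
}

// Hypothetical holder: loads the model once and reuses it for every request.
final class ModelHolder {
    private static volatile LocalPredictor instance;

    private ModelHolder() {}

    // Assumption: the model files sit under WEB-INF/classes inside the
    // unpacked web app; adjust to wherever the WAR actually places them.
    static Path modelPath(String webAppRoot, String modelFolder) {
        return Paths.get(webAppRoot, "WEB-INF", "classes", modelFolder).normalize();
    }

    // Double-checked locking: the loader runs at most once, even when
    // several requests arrive at the same time.
    static LocalPredictor get(Path modelDir, Function<Path, LocalPredictor> loader) {
        LocalPredictor local = instance;
        if (local == null) {
            synchronized (ModelHolder.class) {
                local = instance;
                if (local == null) {
                    local = loader.apply(modelDir);
                    instance = local;
                }
            }
        }
        return local;
    }
}
```

Loading the model once matters because reading the exported files is far slower than a single prediction, and a web app serves many requests from the same JVM.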

I then used Microsoft's excellent Azure plugin for IntelliJ to deploy this project to ... you guessed it ... Azure.

Technical side of things

So this becomes a Java web app running on Azure.

Web Apps can scale automatically (and so can our model now), and they can use Azure AD authentication (Easy Auth, and yes, it works with Java too). Web Apps are fully in GA and they support VNets and all kinds of metrics and professional monitoring.

Not a bad place at all to host your models as a web service ...

The next step would of course be to turn this wrapper into an Azure Function so I would only pay for the actual seconds my model spends creating predictions. So why don't I? Well, in this particular case my customer does not want any "preview" software on their stack and Azure Functions for Java is not in GA yet ... but it will get there one day, and once it does I'll write a sequel to this post.

The other obvious option is of course containers: we could wrap this up in a container, and maybe I'll do that next time I have some free time on my hands.

Summary

The funny thing is that, done this way, your service automagically enjoys the benefits of autoscaling, authentication, monitoring and all the other Azure perks, making this a real production-ready enterprise solution.

The power of PaaS once again.