Batch testing with a set of example utterances

Important

LUIS will be retired on October 1st 2025 and starting April 1st 2023 you will not be able to create new LUIS resources. We recommend migrating your LUIS applications to conversational language understanding to benefit from continued product support and multilingual capabilities.

Batch testing validates your active trained version to measure its prediction accuracy. A batch test helps you view the accuracy of each intent and entity in your active version. Review the batch test results to take appropriate action to improve accuracy, such as adding more example utterances to an intent if your app frequently fails to identify the correct intent, or labeling entities within the utterances.

Group data for batch test

It is important that utterances used for batch testing are new to LUIS. If you have a data set of utterances, divide the utterances into three sets: example utterances added to an intent, utterances received from the published endpoint, and utterances used to batch test LUIS after it is trained.

The batch JSON file you use should include utterances with top-level machine-learning entities labeled including start and end position. The utterances should not be part of the examples already in the app. They should be utterances you want to positively predict for intent and entities.
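Counting character positions by hand is error-prone, so the following minimal sketch computes them instead. It assumes zero-based offsets with an inclusive end position, which is what the sample file in this article uses. The helper name label_entity is hypothetical and not part of any LUIS SDK.

```python
import json


def label_entity(text: str, entity: str, phrase: str) -> dict:
    """Build an entity label for the first occurrence of phrase in text."""
    start = text.find(phrase)
    if start == -1:
        raise ValueError(f"{phrase!r} not found in {text!r}")
    # endPos is inclusive: it points at the last character of the phrase.
    return {"entity": entity, "startPos": start, "endPos": start + len(phrase) - 1}


utterance = "I want to pick up 1 cheese pizza"
test_case = {
    "text": utterance,
    "intent": "ModifyOrder",
    "entities": [
        label_entity(utterance, "Order", "1 cheese pizza"),
        label_entity(utterance, "ToppingList", "cheese"),
    ],
}

# A batch file is a JSON array of such objects.
print(json.dumps([test_case], indent=2))
```

Because the helper labels only the first occurrence of a phrase, an utterance that repeats the phrase needs its positions checked manually.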

You can split tests into separate files by intent, by entity, or both, or keep all the tests (up to 1,000 utterances) in one file.
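As an illustration of both options, here is a small sketch that groups test utterances by intent and splits a long list into chunks that respect the 1,000-utterance limit. The function names are hypothetical, and the utterance objects are assumed to follow the batch file format described in this article.

```python
from collections import defaultdict
from typing import Iterator

MAX_BATCH_SIZE = 1000  # limit stated in this article


def group_by_intent(utterances: list) -> dict:
    """Group test utterances by their expected intent."""
    groups = defaultdict(list)
    for utterance in utterances:
        groups[utterance["intent"]].append(utterance)
    return dict(groups)


def split_into_batches(utterances: list, size: int = MAX_BATCH_SIZE) -> Iterator[list]:
    """Yield consecutive chunks of at most `size` utterances."""
    for start in range(0, len(utterances), size):
        yield utterances[start:start + size]


# Synthetic data for demonstration only.
sample = [
    {"text": f"utterance {i}", "intent": "A" if i % 2 else "B", "entities": []}
    for i in range(2500)
]
batch_sizes = [len(batch) for batch in split_into_batches(sample)]
print(batch_sizes)  # [1000, 1000, 500]
```

Each chunk can then be written to its own file with json.dump and imported as a separate dataset.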

Common errors importing a batch

If you run into errors uploading your batch file to LUIS, check for the following common issues:

- More than 1,000 utterances in a batch file

- An utterance JSON object that doesn't have an entities property. The property can be an empty array.

- Word(s) labeled in multiple entities

- Entity labels starting or ending on a space.
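Some of these issues can be caught locally before uploading. The sketch below is an unofficial pre-check, not the validation LUIS itself performs. It assumes zero-based positions with an inclusive endPos, as in the sample file in this article. It adds one sanity check of its own (spans must fall inside the text) and does not check for words labeled in multiple entities, because that rule depends on how your entities are nested.

```python
MAX_UTTERANCES = 1000  # limit stated in this article


def find_batch_errors(batch: list) -> list:
    """Return human-readable descriptions of common batch file problems."""
    errors = []
    if len(batch) > MAX_UTTERANCES:
        errors.append(f"batch has {len(batch)} utterances; the limit is {MAX_UTTERANCES}")
    for index, item in enumerate(batch):
        if "entities" not in item:
            errors.append(f"utterance {index}: no 'entities' property (use [] if there are none)")
            continue
        text = item.get("text", "")
        for label in item["entities"]:
            start, end = label["startPos"], label["endPos"]
            if not (0 <= start <= end < len(text)):
                # Extra sanity check, not one of the issues listed in the article.
                errors.append(f"utterance {index}: {label['entity']} span {start}-{end} is outside the text")
            elif text[start].isspace() or text[end].isspace():
                errors.append(f"utterance {index}: {label['entity']} label starts or ends on a space")
    return errors


batch = [
    {"text": "I want a cheese pizza", "intent": "ModifyOrder"},
    {
        "text": "I want a cheese pizza",
        "intent": "ModifyOrder",
        "entities": [{"entity": "ToppingList", "startPos": 8, "endPos": 14}],
    },
]
for problem in find_batch_errors(batch):
    print(problem)
```

In this demonstration, the first utterance is reported for its missing entities property and the second for a label that starts on the space before "cheese".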

Fixing batch errors

If there are errors in the batch testing, you can add more utterances to an intent, label more utterances with the entity, or both, to help LUIS discriminate between intents. If you have added and labeled utterances and still get prediction errors in batch testing, consider adding a phrase list feature with domain-specific vocabulary to help LUIS learn faster.

Batch testing using the LUIS portal

Import and train an example app

Import an app that takes a pizza order such as 1 pepperoni pizza on thin crust.

Download and save the app JSON file.

Sign in to the LUIS portal, and select your Subscription and Authoring resource to see the apps assigned to that authoring resource.

Select the arrow next to New app and click Import as JSON to import the JSON into a new app. Name the app Pizza app.

Select Train in the top-right corner of the navigation to train the app.

Roles in batch testing

Caution

Entity roles are not supported in batch testing.

Batch test file

The example JSON includes one utterance with a labeled entity to illustrate what a test file looks like. In your own tests, you should have many utterances with correct intent and machine-learning entity labeled.

Create pizza-with-machine-learned-entity-test.json in a text editor or download it.

In the JSON-formatted batch file, add an utterance with the Intent you want predicted in the test.

    [
      {
        "text": "I want to pick up 1 cheese pizza",
        "intent": "ModifyOrder",
        "entities": [
          {
            "entity": "Order",
            "startPos": 18,
            "endPos": 31
          },
          {
            "entity": "ToppingList",
            "startPos": 20,
            "endPos": 25
          }
        ]
      }
    ]

Run the batch

Select Test in the top navigation bar.

Select Batch testing in the right-side panel.

Select Import. In the dialog box that appears, select Choose File and locate a JSON file with the correct JSON format that contains no more than 1,000 utterances to test.

Import errors are reported in a red notification bar at the top of the browser. When an import has errors, no dataset is created. For more information, see Common errors.

Choose the file location of the pizza-with-machine-learned-entity-test.json file.

Name the dataset pizza test and select Done.

Select the Run button.

After the batch test completes, you can see the following columns:

| Column | Description |
| --- | --- |
| State | Status of the test. See results is only visible after the test is completed. |
| Name | The name you have given to the test. |
| Size | Number of tests in this batch test file. |
| Last Run | Date of last run of this batch test file. |
| Last result | Number of successful predictions in the test. |

To view detailed results of the test, select See results.

Tip

- Selecting Download will download the same file that you uploaded.

- If you see the batch test failed, at least one utterance intent did not match the prediction.

Review batch results for intents

To review the batch test results, select See results. The test results show graphically how the test utterances were predicted against the active version.

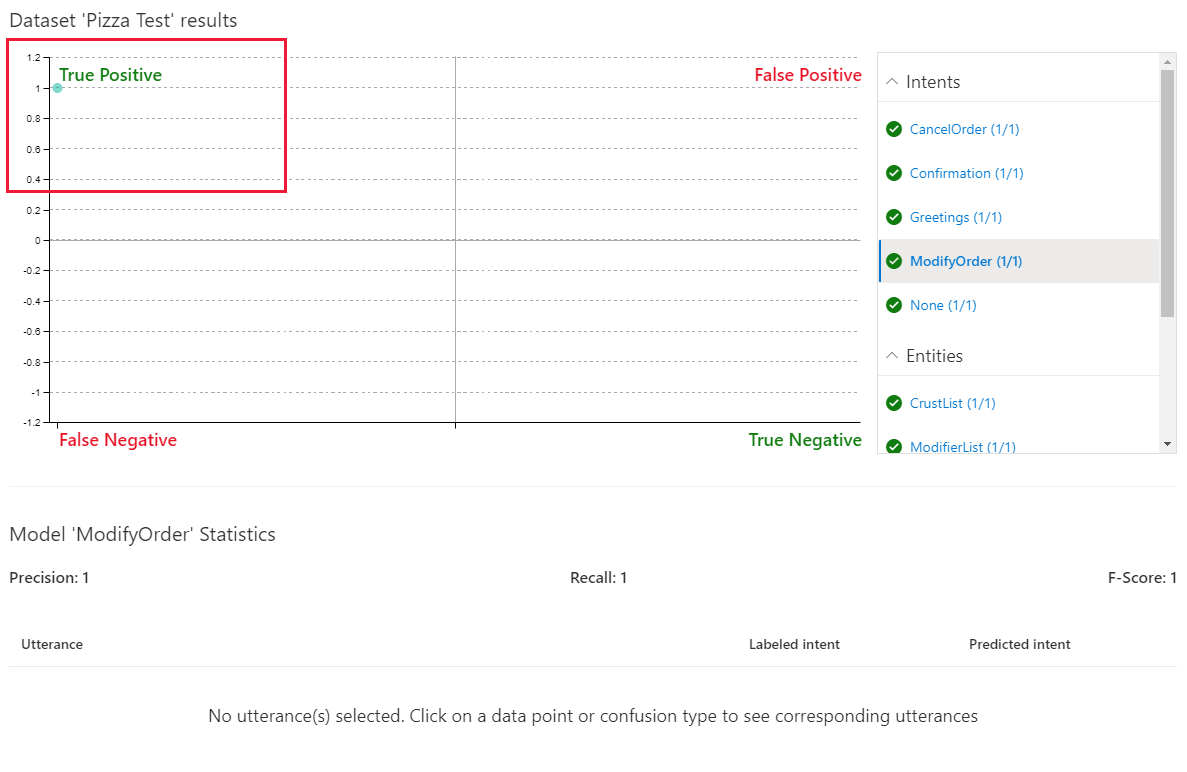

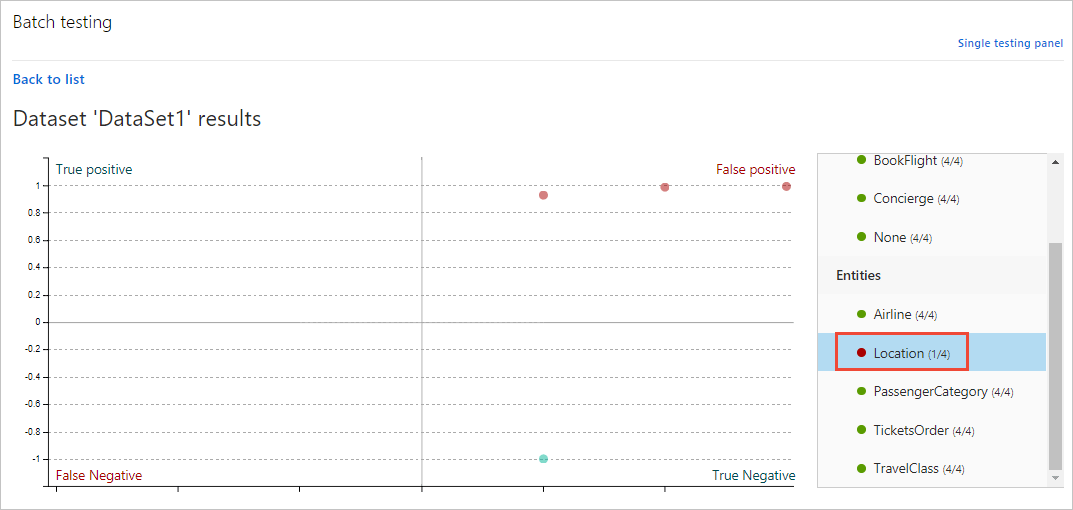

The batch chart displays four quadrants of results. To the right of the chart is a filter. The filter contains intents and entities. When you select a section of the chart or a point within the chart, the associated utterance(s) display below the chart.

While hovering over the chart, you can use the mouse wheel to enlarge or reduce the display. This is useful when many points on the chart are clustered tightly together.

The chart is in four quadrants, with two of the sections displayed in red.

Select the ModifyOrder intent in the filter list. The utterance is predicted as a True Positive, meaning the utterance successfully matched its positive prediction listed in the batch file.

The green checkmarks in the filters list also indicate the success of the test for each intent. All the other intents are listed with a 1/1 positive score because the utterance was tested against each intent, as a negative test for any intents not listed in the batch test.

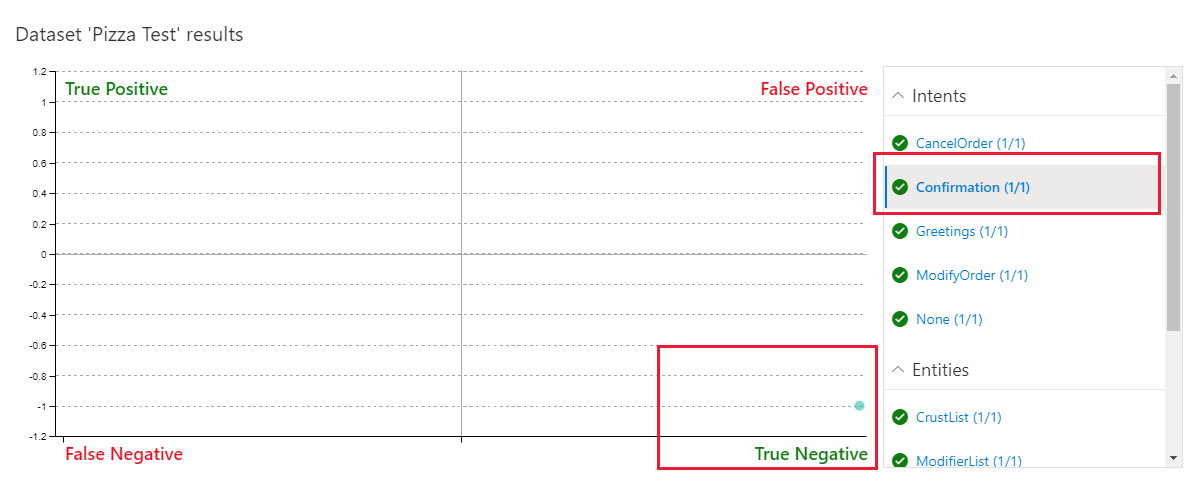

Select the Confirmation intent. This intent isn't listed in the batch test so this is a negative test of the utterance that is listed in the batch test.

The negative test was successful, as shown by the green text in the filter and in the grid.

Review batch test results for entities

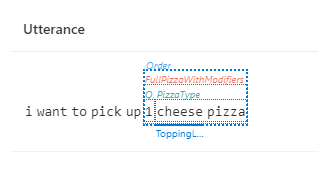

The Order entity, as a machine-learning entity with subentities, displays whether the top-level entity matched and how the subentities are predicted.

Select the Order entity in the filter list, then select the circle in the grid.

The entity prediction displays below the chart. The display includes solid lines for predictions that match the expectation and dotted lines for predictions that don't match the expectation.

Filter chart results

To filter the chart by a specific intent or entity, select the intent or entity in the right-side filtering panel. The data points and their distribution update in the graph according to your selection.

Chart result examples

In the chart in the LUIS portal, you can perform the following actions:

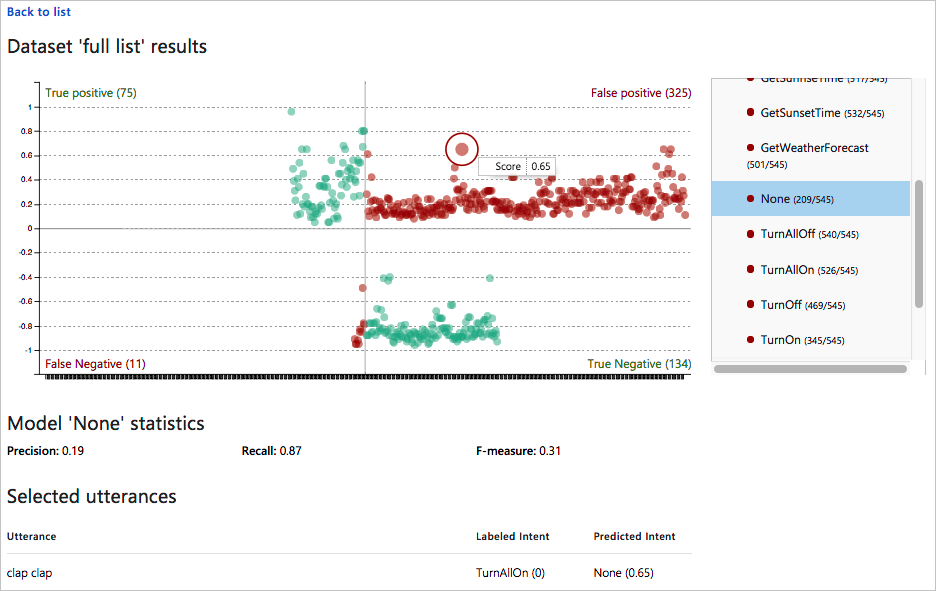

View single-point utterance data

In the chart, hover over a data point to see the certainty score of its prediction. Select a data point to retrieve its corresponding utterance in the utterances list at the bottom of the page.

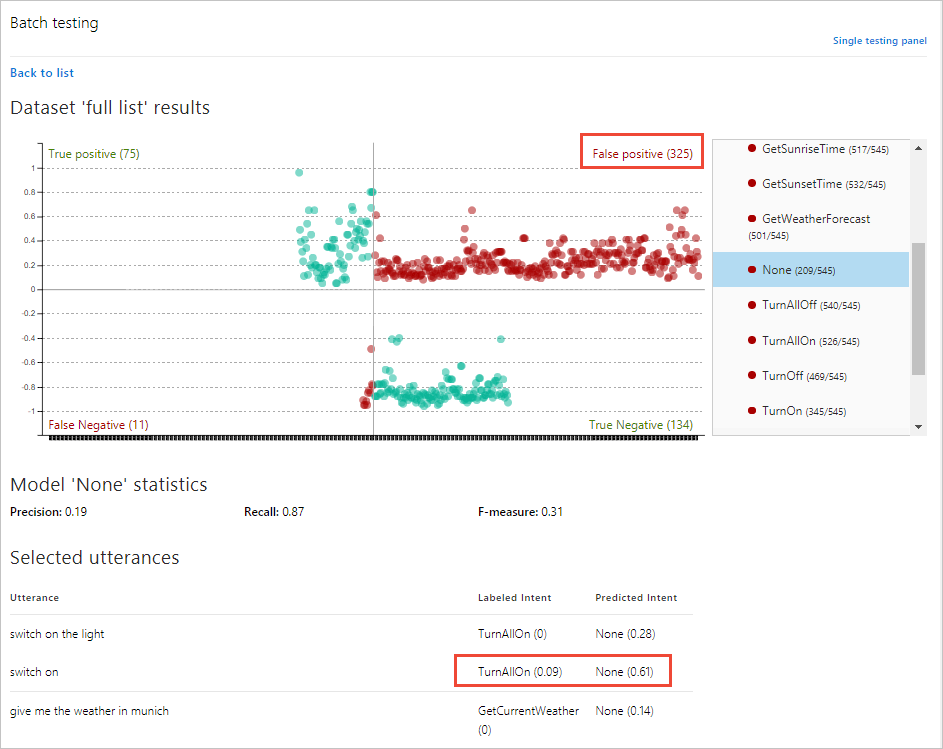

View section data

In the four-section chart, select the section name, such as False Positive at the top-right of the chart. All utterances in that section display in a list below the chart.

In the preceding image, the utterance switch on is labeled with the TurnAllOn intent, but received the prediction of None intent. This is an indication that the TurnAllOn intent needs more example utterances in order to make the expected prediction.

The two sections of the chart in red indicate utterances that did not match the expected prediction. These are utterances for which LUIS needs more training.

The two sections of the chart in green indicate utterances that did match the expected prediction.
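The four sections can be reasoned about with a small sketch. It uses the standard confusion-matrix definitions, evaluated from the point of view of one intent selected in the filter. That this matches exactly how the portal assigns points to sections is an assumption, and the function name quadrant is hypothetical.

```python
def quadrant(selected_intent: str, expected: str, predicted: str) -> str:
    """Classify one test utterance relative to the intent selected in the filter."""
    if predicted == selected_intent:
        return "True Positive" if expected == selected_intent else "False Positive"
    return "False Negative" if expected == selected_intent else "True Negative"


# The pizza utterance: labeled ModifyOrder and predicted ModifyOrder.
print(quadrant("ModifyOrder", "ModifyOrder", "ModifyOrder"))  # True Positive
print(quadrant("Confirmation", "ModifyOrder", "ModifyOrder"))  # True Negative

# The "switch on" utterance: labeled TurnAllOn but predicted None.
print(quadrant("None", "TurnAllOn", "None"))       # False Positive
print(quadrant("TurnAllOn", "TurnAllOn", "None"))  # False Negative
```

The same wrong prediction therefore appears as a false positive for the intent that was predicted and as a false negative for the intent that was expected.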

Next steps

If testing indicates that your LUIS app doesn't recognize the correct intents and entities, you can work to improve your LUIS app's performance by labeling more utterances or adding features.