Copy data to and from Azure Table storage using Azure Data Factory or Synapse Analytics

APPLIES TO: Azure Data Factory, Azure Synapse Analytics


This article outlines how to use Copy Activity in Azure Data Factory and Synapse Analytics pipelines to copy data to and from Azure Table storage. It builds on the Copy Activity overview article that presents a general overview of Copy Activity.

Note

We recommend that you use the Azure Az PowerShell module to interact with Azure. To get started, see Install Azure PowerShell. To learn how to migrate to the Az PowerShell module, see Migrate Azure PowerShell from AzureRM to Az.

Supported capabilities

This Azure Table storage connector is supported for the following capabilities:

| Supported capabilities | IR | Managed private endpoint |
|---|---|---|
| Copy activity (source/sink) | ① ② | ✓ Exclude storage account V1 |
| Lookup activity | ① ② | ✓ Exclude storage account V1 |

① Azure integration runtime ② Self-hosted integration runtime

You can copy data from any supported source data store to Table storage. You also can copy data from Table storage to any supported sink data store. For a list of data stores that are supported as sources or sinks by the copy activity, see the Supported data stores table.

Specifically, this Azure Table connector supports copying data by using account key, shared access signature, system-assigned managed identity, and user-assigned managed identity authentication.

Get started

To perform the Copy activity with a pipeline, you can use one of the following tools or SDKs:

- The Copy Data tool

- The Azure portal

- The .NET SDK

- The Python SDK

- Azure PowerShell

- The REST API

- The Azure Resource Manager template

Create an Azure Table storage linked service using UI

Use the following steps to create an Azure Table storage linked service in the Azure portal UI.

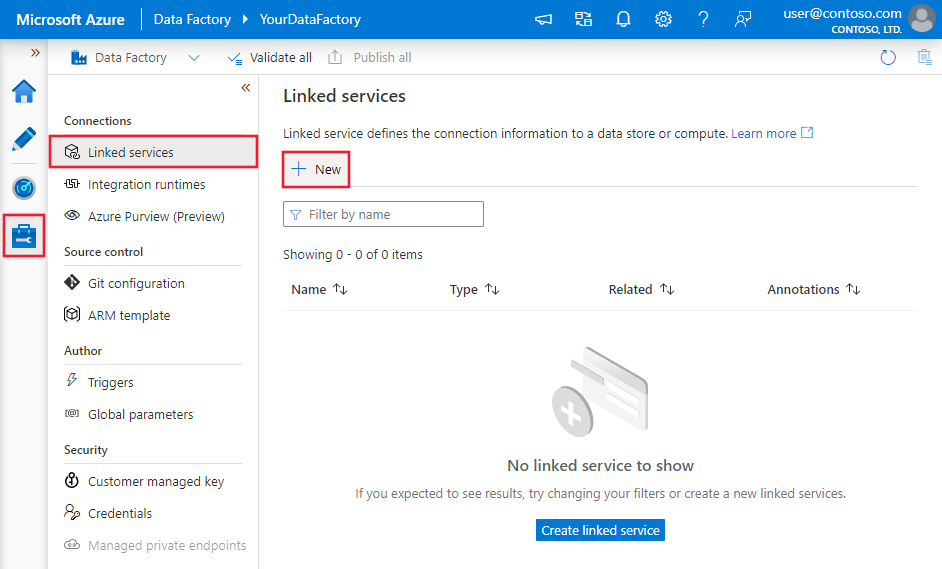

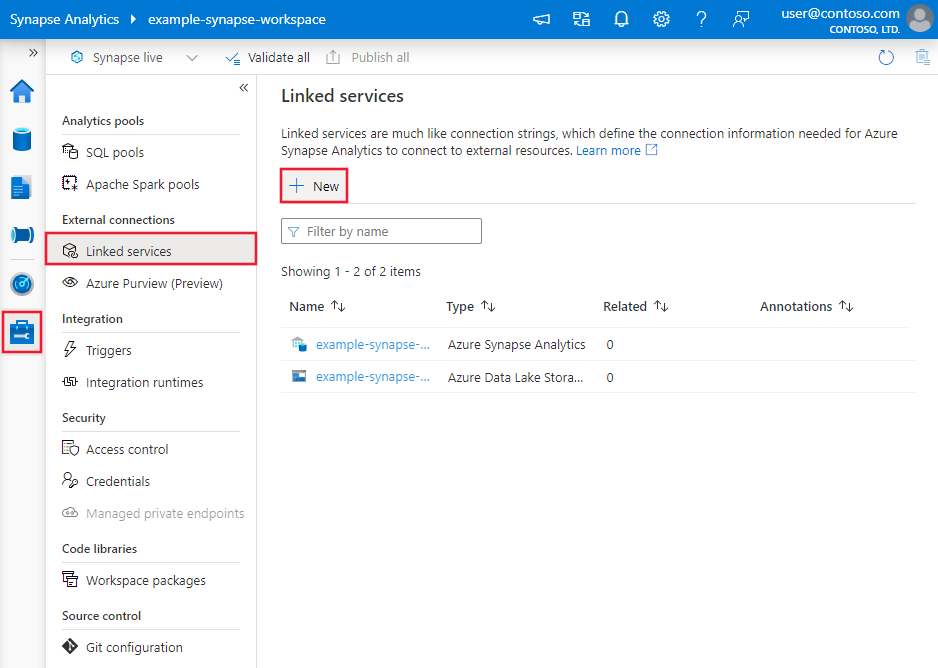

Browse to the Manage tab in your Azure Data Factory or Synapse workspace and select Linked Services, then click New:

Search for Azure Table and select the Azure Table storage connector.

Configure the service details, test the connection, and create the new linked service.

Connector configuration details

The following sections provide details about properties that are used to define entities specific to Azure Table storage.

Linked service properties

This Azure Table Storage connector supports the following authentication types. See the corresponding sections for details.

- Account key authentication

- Shared access signature authentication

- System-assigned managed identity authentication

- User-assigned managed identity authentication

Account key authentication

You can create an Azure Storage linked service by using the account key. It provides the service with global access to Storage. The following properties are supported.

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to AzureTableStorage. | Yes |
| connectionString | Specify the information needed to connect to Storage in the connectionString property. You can also store the account key in Azure Key Vault and pull the accountKey configuration out of the connection string. For more details, see the following samples and the Store credentials in Azure Key Vault article. | Yes |
| connectVia | The integration runtime to be used to connect to the data store. You can use Azure Integration Runtime or Self-hosted Integration Runtime (if your data store is located in a private network). If not specified, it uses the default Azure Integration Runtime. | No |

Note

If you were using the "AzureStorage" type linked service, it is still supported as-is, but we recommend that you use the new "AzureTableStorage" linked service type going forward.

Example:

    {
        "name": "AzureTableStorageLinkedService",
        "properties": {
            "type": "AzureTableStorage",
            "typeProperties": {
                "connectionString": "DefaultEndpointsProtocol=https;AccountName=<accountname>;AccountKey=<accountkey>"
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }

Example: store account key in Azure Key Vault

    {
        "name": "AzureTableStorageLinkedService",
        "properties": {
            "type": "AzureTableStorage",
            "typeProperties": {
                "connectionString": "DefaultEndpointsProtocol=https;AccountName=<accountname>;",
                "accountKey": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": "<Azure Key Vault linked service name>",
                        "type": "LinkedServiceReference"
                    },
                    "secretName": "<secretName>"
                }
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }
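If you generate linked service definitions from a script, the two account key variants above could be assembled along these lines. This is a minimal sketch rather than an official SDK call: the function name `account_key_linked_service` and its parameters are hypothetical, and the optional `connectVia` section is left out.

```python
import json


def account_key_linked_service(name, account_name, account_key=None,
                               key_vault_linked_service=None, secret_name=None):
    """Build an AzureTableStorage linked service definition as a dict.

    Hypothetical helper: pass account_key to embed the key in the connection
    string, or pass key_vault_linked_service and secret_name to reference a
    secret stored in Azure Key Vault instead.
    """
    connection_string = f"DefaultEndpointsProtocol=https;AccountName={account_name};"
    type_properties = {}
    if account_key is not None:
        # Inline variant: the key is part of the connection string.
        type_properties["connectionString"] = connection_string + f"AccountKey={account_key}"
    elif key_vault_linked_service and secret_name:
        # Key Vault variant: the connection string carries no key.
        type_properties["connectionString"] = connection_string
        type_properties["accountKey"] = {
            "type": "AzureKeyVaultSecret",
            "store": {
                "referenceName": key_vault_linked_service,
                "type": "LinkedServiceReference",
            },
            "secretName": secret_name,
        }
    else:
        raise ValueError("provide account_key, or key_vault_linked_service and secret_name")
    return {
        "name": name,
        "properties": {"type": "AzureTableStorage", "typeProperties": type_properties},
    }


if __name__ == "__main__":
    definition = account_key_linked_service(
        "AzureTableStorageLinkedService", "<accountname>",
        key_vault_linked_service="<Azure Key Vault linked service name>",
        secret_name="<secretName>",
    )
    print(json.dumps(definition, indent=4))
```

Keeping the key out of the connection string in the Key Vault variant mirrors the second sample, where only the account name remains inline.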

Shared access signature authentication

You can also create a Storage linked service by using a shared access signature. It gives the service restricted, time-bound access to all or specific resources in the storage account.

A shared access signature provides delegated access to resources in your storage account. You can use it to grant a client limited permissions to objects in your storage account for a specified time and with a specified set of permissions. You don't have to share your account access keys. The shared access signature is a URI that encompasses in its query parameters all the information necessary for authenticated access to a storage resource. To access storage resources with the shared access signature, the client only needs to pass in the shared access signature to the appropriate constructor or method. For more information about shared access signatures, see Shared access signatures: Understand the shared access signature model.

Note

Both service shared access signatures and account shared access signatures are now supported. For more information about shared access signatures, see Grant limited access to Azure Storage resources using shared access signatures (SAS).

Tip

To generate a service shared access signature for your storage account, you can execute the following PowerShell commands. Replace the placeholders and grant the needed permission.

    $context = New-AzStorageContext -StorageAccountName <accountName> -StorageAccountKey <accountKey>
    New-AzStorageTableSASToken -Name <tableName> -Context $context -Permission raud -StartTime <startTime> -ExpiryTime <endTime> -FullUri

To use shared access signature authentication, the following properties are supported.

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to AzureTableStorage. | Yes |
| sasUri | Specify the shared access signature URI to the table. Mark this field as a SecureString to store it securely. You can also store the SAS token in Azure Key Vault to take advantage of auto-rotation and remove the token portion from the URI. For more details, see the following samples and the Store credentials in Azure Key Vault article. | Yes |
| connectVia | The integration runtime to be used to connect to the data store. You can use the Azure Integration Runtime or the Self-hosted Integration Runtime (if your data store is located in a private network). If not specified, it uses the default Azure Integration Runtime. | No |

Note

If you were using the "AzureStorage" type linked service, it is still supported as-is, but we recommend that you use the new "AzureTableStorage" linked service type going forward.

Example:

    {
        "name": "AzureTableStorageLinkedService",
        "properties": {
            "type": "AzureTableStorage",
            "typeProperties": {
                "sasUri": {
                    "type": "SecureString",
                    "value": "<SAS URI of the Azure Storage resource e.g. https://<account>.table.core.windows.net/<table>?sv=<storage version>&st=<start time>&se=<expire time>&sr=<resource>&sp=<permissions>&sip=<ip range>&spr=<protocol>&sig=<signature>>"
                }
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }

Example: store the SAS token in Azure Key Vault

    {
        "name": "AzureTableStorageLinkedService",
        "properties": {
            "type": "AzureTableStorage",
            "typeProperties": {
                "sasUri": {
                    "type": "SecureString",
                    "value": "<SAS URI of the Azure Storage resource without token e.g. https://<account>.table.core.windows.net/<table>>"
                },
                "sasToken": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": "<Azure Key Vault linked service name>",
                        "type": "LinkedServiceReference"
                    },
                    "secretName": "<secretName>"
                }
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }
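The Key Vault variant needs the SAS URI without its token and the token on its own. One way to separate the two is sketched below with the Python standard library. The helper name `split_sas_uri` is hypothetical, and whether the stored secret should carry a leading `?` is not stated in this article, so the sketch returns the token without one.

```python
from urllib.parse import urlsplit, urlunsplit


def split_sas_uri(full_sas_uri):
    """Split a full SAS URI into (URI without token, SAS token).

    Hypothetical helper: the token is taken to be the whole query string.
    """
    parts = urlsplit(full_sas_uri)
    if not parts.query:
        raise ValueError("the URI has no query string, so it carries no SAS token")
    # Rebuild the URI with an empty query and fragment.
    base_uri = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return base_uri, parts.query
```

The first element would go into `sasUri`, and the second is the value you would store as the Key Vault secret.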

When you create a shared access signature URI, consider the following points:

- Set appropriate read/write permissions on objects based on how the linked service is used (read, write, or read/write).

- Set the expiry time appropriately. Make sure that access to Storage objects doesn't expire within the active period of the pipeline.

- Create the URI at the right table level based on your needs.
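The permission and expiry points above can be checked before a URI is put into a linked service. The following is a rough sketch, not part of the service: `check_sas_uri` is a hypothetical helper that reads the `se` (expiry) and `sp` (permissions) query parameters shown in the sample URI, and it assumes the expiry is an ISO 8601 timestamp in UTC.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlsplit


def check_sas_uri(sas_uri, pipeline_end, needed_permissions):
    """Return a list of problems found in a SAS URI (empty if none).

    pipeline_end must be a timezone-aware datetime marking the end of the
    pipeline's active period; needed_permissions is a string of SAS
    permission letters that the linked service requires.
    """
    query = parse_qs(urlsplit(sas_uri).query)
    problems = []

    expiry_values = query.get("se")
    if not expiry_values:
        problems.append("no expiry time (se) found")
    else:
        # Accept a trailing "Z" and treat naive timestamps as UTC (assumption).
        expiry = datetime.fromisoformat(expiry_values[0].replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry <= pipeline_end:
            problems.append("SAS expires before the pipeline's active period ends")

    granted = set(query.get("sp", [""])[0])
    missing = set(needed_permissions) - granted
    if missing:
        problems.append("missing permissions: " + "".join(sorted(missing)))
    return problems
```

An empty result means the expiry covers the pipeline's active period and every requested permission letter is present; it says nothing about whether the signature itself is valid.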

System-assigned managed identity authentication

A data factory or Synapse pipeline can be associated with a system-assigned managed identity for Azure resources, which represents that resource for authentication to other Azure services. You can use this system-assigned managed identity for Azure Table Storage authentication. To learn more about managed identities for Azure resources, see Managed identities for Azure resources.

To use system-assigned managed identity authentication, follow these steps:

1. Retrieve system-assigned managed identity information by copying the value of the system-assigned managed identity object ID generated along with your factory or Synapse workspace.

2. Grant the managed identity permission in Azure Table Storage. For more information on the roles, see this article.

   - As source, in Access control (IAM), grant at least the Storage Table Data Reader role.

   - As sink, in Access control (IAM), grant at least the Storage Table Data Contributor role.

These properties are supported for an Azure Table Storage linked service:

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to AzureTableStorage. | Yes |
| serviceEndpoint | Specify the Azure Table Storage service endpoint with the pattern of https://<accountName>.table.core.windows.net/. | Yes |
| connectVia | The integration runtime to be used to connect to the data store. You can use the Azure Integration Runtime. If not specified, it uses the default Azure Integration Runtime. | No |

Note

System-assigned managed identity authentication is only supported by Azure integration runtime.

Example:

    {
        "name": "AzureTableStorageLinkedService",
        "properties": {
            "type": "AzureTableStorage",
            "typeProperties": {
                "serviceEndpoint": "https://<accountName>.table.core.windows.net/"
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }

User-assigned managed identity authentication

A data factory can be assigned one or multiple user-assigned managed identities. You can use a user-assigned managed identity for Azure Table Storage authentication, which allows the service to access and copy data from or to Azure Table Storage. To learn more about managed identities for Azure resources, see Managed identities for Azure resources.

To use user-assigned managed identity authentication, follow these steps:

1. Create one or multiple user-assigned managed identities and grant permission in Azure Table Storage. For more information on the roles, see this article.

   - As source, in Access control (IAM), grant at least the Storage Table Data Reader role.

   - As sink, in Access control (IAM), grant at least the Storage Table Data Contributor role.

2. Assign one or multiple user-assigned managed identities to your data factory and create credentials for each user-assigned managed identity.

These properties are supported for an Azure Table Storage linked service:

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to AzureTableStorage. | Yes |
| serviceEndpoint | Specify the Azure Table Storage service endpoint with the pattern of https://<accountName>.table.core.windows.net/. | Yes |
| credential | Specify the user-assigned managed identity as the credential object. | Yes |
| connectVia | The integration runtime to be used to connect to the data store. You can use the Azure Integration Runtime or the Self-hosted Integration Runtime (if your data store is located in a private network). If not specified, it uses the default Azure Integration Runtime. | No |

Example:

    {
        "name": "AzureTableStorageLinkedService",
        "properties": {
            "type": "AzureTableStorage",
            "typeProperties": {
                "serviceEndpoint": "https://<accountName>.table.core.windows.net/",
                "credential": {
                    "referenceName": "credential1",
                    "type": "CredentialReference"
                }
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }
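The two managed identity variants differ only in whether a credential reference is present. A small sketch of that difference follows, assuming the service endpoint pattern given in the property tables. The function name `managed_identity_linked_service` is hypothetical, and `connectVia` is omitted.

```python
def managed_identity_linked_service(name, account_name, credential_name=None):
    """Build a managed identity linked service definition as a dict.

    Hypothetical helper: with credential_name=None the definition matches the
    system-assigned sample; with a credential name it matches the
    user-assigned sample.
    """
    type_properties = {
        "serviceEndpoint": f"https://{account_name}.table.core.windows.net/",
    }
    if credential_name is not None:
        # User-assigned identities are referenced through a credential object.
        type_properties["credential"] = {
            "referenceName": credential_name,
            "type": "CredentialReference",
        }
    return {
        "name": name,
        "properties": {"type": "AzureTableStorage", "typeProperties": type_properties},
    }
```

For example, `managed_identity_linked_service("AzureTableStorageLinkedService", "myaccount", "credential1")` produces the user-assigned shape, where `myaccount` is a placeholder account name.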

Dataset properties

For a full list of sections and properties available for defining datasets, see the Datasets article. This section provides a list of properties supported by the Azure Table dataset.

To copy data to and from Azure Table, set the type property of the dataset to AzureTable. The following properties are supported.

| Property | Description | Required |
|---|---|---|
| type | The type property of the dataset must be set to AzureTable. | Yes |
| tableName | The name of the table in the Table storage database instance that the linked service refers to. | Yes |

Example:

    {
        "name": "AzureTableDataset",
        "properties": {
            "type": "AzureTable",
            "typeProperties": {
                "tableName": "MyTable"
            },
            "schema": [],
            "linkedServiceName": {
                "referenceName": "<Azure Table storage linked service name>",
                "type": "LinkedServiceReference"
            }
        }
    }

Schema inference by the service

For schema-free data stores such as Azure Table, the service infers the schema in one of the following ways:

- If you specify the column mapping in copy activity, the service uses the source side column list to retrieve data. In this case, if a row doesn't contain a value for a column, a null value is provided for it.

- If you don't specify the column mapping in copy activity, the service infers the schema by using the first row in the data. In this case, if the first row doesn't contain the full schema (for example, some columns have a null value), those columns are missing from the result of the copy operation.
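The difference between the two cases is easiest to see on a small data set. The following is a toy simulation of the behavior described above, not the service's actual implementation. Entities are modeled as plain dictionaries, and both function names are hypothetical.

```python
def copy_with_mapping(rows, columns):
    """Simulate a copy with an explicit source column list.

    Every output row has all listed columns; missing values become None.
    """
    return [{column: row.get(column) for column in columns} for row in rows]


def copy_without_mapping(rows):
    """Simulate a copy where the schema is inferred from the first row only.

    Columns that first appear in later rows are dropped from the result.
    """
    if not rows:
        return []
    inferred_columns = list(rows[0].keys())
    return [{column: row.get(column) for column in inferred_columns} for row in rows]


rows = [
    {"PartitionKey": "p", "RowKey": "1", "Name": "a"},
    {"PartitionKey": "p", "RowKey": "2", "Name": "b", "Email": "b@example.com"},
]
# With a mapping, Email is present in every row (None where it has no value).
print(copy_with_mapping(rows, ["RowKey", "Name", "Email"]))
# Without a mapping, Email is missing because the first row doesn't have it.
print(copy_without_mapping(rows))
```

If some entities carry columns that the first entity lacks, specifying the column mapping is the way to keep them.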

Copy activity properties

For a full list of sections and properties available for defining activities, see the Pipelines article. This section provides a list of properties supported by the Azure Table source and sink.

Azure Table as a source type

To copy data from Azure Table, set the source type in the copy activity to AzureTableSource. The following properties are supported in the copy activity source section.

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity source must be set to AzureTableSource. | Yes |
| azureTableSourceQuery | Use a custom Table storage query to read data. The source query maps directly to the $filter query option supported by Azure Table Storage. Learn more about the syntax from this doc, and see the examples in the following azureTableSourceQuery examples section. | No |
| azureTableSourceIgnoreTableNotFound | Indicates whether to ignore the exception raised when the table doesn't exist. Allowed values are True and False (default). | No |

azureTableSourceQuery examples

Note

An Azure Table query operation times out after 30 seconds, as enforced by the Azure Table service. To learn how to optimize the query, see the Design for querying article.

If you want to filter the data against a datetime type column, refer to this example:

"azureTableSourceQuery": "LastModifiedTime gt datetime'2017-10-01T00:00:00' and LastModifiedTime le datetime'2017-10-02T00:00:00'"

If you want to filter the data against a string type column, refer to this example:

"azureTableSourceQuery": "LastModifiedTime ge '201710010000_0000' and LastModifiedTime le '201710010000_9999'"

If you use a pipeline parameter, cast the datetime value to the proper format according to the previous samples.
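When the time window comes from code rather than a hand-written string, the datetime filter can be generated. The helper below is a minimal sketch that reproduces the format of the datetime sample above. The name `datetime_window_filter` is hypothetical, and the sketch assumes the values are already in the time zone the column uses.

```python
from datetime import datetime


def datetime_window_filter(column, start, end):
    """Build a $filter expression selecting start < column <= end."""
    fmt = "%Y-%m-%dT%H:%M:%S"
    return (
        f"{column} gt datetime'{start.strftime(fmt)}' "
        f"and {column} le datetime'{end.strftime(fmt)}'"
    )


query = datetime_window_filter(
    "LastModifiedTime", datetime(2017, 10, 1), datetime(2017, 10, 2)
)
print(query)
```

The resulting string is what you would place in the `azureTableSourceQuery` property.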

Azure Table as a sink type

To copy data to Azure Table, set the sink type in the copy activity to AzureTableSink. The following properties are supported in the copy activity sink section.

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity sink must be set to AzureTableSink. | Yes |
| azureTableDefaultPartitionKeyValue | The default partition key value that can be used by the sink. | No |
| azureTablePartitionKeyName | Specify the name of the column whose values are used as partition keys. If not specified, "AzureTableDefaultPartitionKeyValue" is used as the partition key. | No |
| azureTableRowKeyName | Specify the name of the column whose values are used as the row key. If not specified, a GUID is used for each row. | No |
| azureTableInsertType | The mode to insert data into Azure Table. This property controls whether existing rows in the output table with matching partition and row keys have their values replaced or merged. Allowed values are merge (default) and replace. This setting applies at the row level, not the table level. Neither option deletes rows in the output table that do not exist in the input. To learn about how the merge and replace settings work, see Insert or merge entity and Insert or replace entity. | No |
| writeBatchSize | Inserts data into Azure Table when writeBatchSize or writeBatchTimeout is hit. Allowed values are integer (number of rows). | No (default is 10,000) |
| writeBatchTimeout | Inserts data into Azure Table when writeBatchSize or writeBatchTimeout is hit. Allowed values are timespan. An example is "00:20:00" (20 minutes). | No (default is 90 seconds, storage client's default timeout) |
| maxConcurrentConnections | The upper limit of concurrent connections established to the data store during the activity run. Specify a value only when you want to limit concurrent connections. | No |
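The row-level effect of `azureTableInsertType` can be illustrated with a toy in-memory model. This is a simulation of the semantics described in the table, not the storage service's code: the table is a dictionary keyed by (PartitionKey, RowKey), and the function name `upsert` is hypothetical.

```python
def upsert(table, rows, insert_type="merge"):
    """Apply rows to an in-memory table keyed by (PartitionKey, RowKey).

    merge keeps existing properties that the incoming row doesn't mention;
    replace overwrites the whole row. Rows absent from the input are kept.
    """
    if insert_type not in ("merge", "replace"):
        raise ValueError("insert_type must be 'merge' or 'replace'")
    for row in rows:
        key = (row["PartitionKey"], row["RowKey"])
        if insert_type == "merge" and key in table:
            # Incoming values win; untouched properties survive.
            table[key] = {**table[key], **row}
        else:
            table[key] = dict(row)
    return table


existing = {
    ("p", "1"): {"PartitionKey": "p", "RowKey": "1", "Name": "a", "Email": "a@example.com"},
    ("p", "2"): {"PartitionKey": "p", "RowKey": "2", "Name": "c"},
}
incoming = [{"PartitionKey": "p", "RowKey": "1", "Name": "b"}]

merged = upsert(dict(existing), incoming, "merge")
replaced = upsert(dict(existing), incoming, "replace")
print(merged[("p", "1")])    # Email is still present
print(replaced[("p", "1")])  # Email is gone
```

In both modes the row with key ("p", "2") is untouched, which matches the statement that neither option deletes rows missing from the input.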

Example:

    "activities":[
        {
            "name": "CopyToAzureTable",
            "type": "Copy",
            "inputs": [
                {
                    "referenceName": "<input dataset name>",
                    "type": "DatasetReference"
                }
            ],
            "outputs": [
                {
                    "referenceName": "<Azure Table output dataset name>",
                    "type": "DatasetReference"
                }
            ],
            "typeProperties": {
                "source": {
                    "type": "<source type>"
                },
                "sink": {
                    "type": "AzureTableSink",
                    "azureTablePartitionKeyName": "<column name>",
                    "azureTableRowKeyName": "<column name>"
                }
            }
        }
    ]
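If the sink section is generated from code, the optional properties and the timespan format for `writeBatchTimeout` can be handled in one place. The following is a sketch under stated assumptions: both function names are hypothetical, and the timespan formatter only covers durations shorter than one day, in the `hh:mm:ss` shape of the "00:20:00" example.

```python
from datetime import timedelta


def format_timespan(delta):
    """Format a timedelta as hh:mm:ss, like the "00:20:00" example."""
    total_seconds = int(delta.total_seconds())
    if not 0 <= total_seconds < 24 * 3600:
        raise ValueError("this sketch only handles 0 <= delta < 1 day")
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


def azure_table_sink(partition_key_name=None, row_key_name=None,
                     insert_type=None, write_batch_size=None,
                     write_batch_timeout=None):
    """Build the sink section of a copy activity, omitting unset options."""
    if insert_type not in (None, "merge", "replace"):
        raise ValueError("azureTableInsertType must be 'merge' or 'replace'")
    sink = {"type": "AzureTableSink"}
    optional = {
        "azureTablePartitionKeyName": partition_key_name,
        "azureTableRowKeyName": row_key_name,
        "azureTableInsertType": insert_type,
        "writeBatchSize": write_batch_size,
        "writeBatchTimeout": (format_timespan(write_batch_timeout)
                              if write_batch_timeout is not None else None),
    }
    # Leave out anything not set so that the service defaults apply.
    sink.update({key: value for key, value in optional.items() if value is not None})
    return sink
```

Omitting unset properties, rather than writing explicit defaults, keeps the generated sink as close as possible to the hand-written example.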

azureTablePartitionKeyName

You must map a source column to a destination column by using the "translator" property before you can use the destination column as azureTablePartitionKeyName.

In the following example, source column DivisionID is mapped to the destination column DivisionID:

    "translator": {
        "type": "TabularTranslator",
        "columnMappings": "DivisionID: DivisionID, FirstName: FirstName, LastName: LastName"
    }

"DivisionID" is specified as the partition key.

    "sink": {
        "type": "AzureTableSink",
        "azureTablePartitionKeyName": "DivisionID"
    }
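To make the key selection rules concrete, here is a toy model of how a partition key and row key could be derived for each row under the sink properties described earlier. It is an illustration, not the service's implementation: `derive_keys` is a hypothetical function, and the fallback partition key is passed in as a parameter.

```python
import uuid


def derive_keys(row, partition_key_name=None, row_key_name=None,
                default_partition_key="AzureTableDefaultPartitionKeyValue"):
    """Return (partition key, row key) for one row.

    A named column supplies the key when given; otherwise the default
    partition key value is used and a new GUID becomes the row key.
    """
    if partition_key_name is not None:
        partition_key = row[partition_key_name]
    else:
        partition_key = default_partition_key
    if row_key_name is not None:
        row_key = row[row_key_name]
    else:
        row_key = uuid.uuid4()
    return str(partition_key), str(row_key)


row = {"DivisionID": "D42", "FirstName": "Ada", "LastName": "Lovelace"}
print(derive_keys(row, partition_key_name="DivisionID"))
```

With only `azureTablePartitionKeyName` set, as in the sink example, every row gets a fresh GUID as its row key, so repeated runs of this model never produce matching row keys.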

Data type mapping for Azure Table

When you copy data to and from Azure Table, the following mappings are used between Azure Table OData data types and the interim data types (.NET types) that the service uses internally. To learn about how the copy activity maps the source schema and data type to the sink, see Schema and data type mappings.

| Azure Table data type | Interim service data type | Details |
|---|---|---|
| Edm.Binary | byte[] | An array of bytes up to 64 KB. |
| Edm.Boolean | bool | A Boolean value. |
| Edm.DateTime | DateTime | A 64-bit value expressed as Coordinated Universal Time (UTC). The supported DateTime range begins midnight, January 1, 1601 A.D. (C.E.), UTC. The range ends December 31, 9999. |
| Edm.Double | double | A 64-bit floating point value. |
| Edm.Guid | Guid | A 128-bit globally unique identifier. |
| Edm.Int32 | Int32 | A 32-bit integer. |
| Edm.Int64 | Int64 | A 64-bit integer. |
| Edm.String | String | A UTF-16-encoded value. String values can be up to 64 KB. |
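For scripts that validate data before a copy, the table above can be captured as a lookup plus a size check. This is a rough sketch with labeled assumptions: the names `EDM_TO_INTERIM` and `check_value_size` are hypothetical, 64 KB is taken to mean 65,536 bytes, and string size is measured as the UTF-16 encoded length.

```python
# Azure Table data type -> interim service data type, copied from the table.
EDM_TO_INTERIM = {
    "Edm.Binary": "byte[]",
    "Edm.Boolean": "bool",
    "Edm.DateTime": "DateTime",
    "Edm.Double": "double",
    "Edm.Guid": "Guid",
    "Edm.Int32": "Int32",
    "Edm.Int64": "Int64",
    "Edm.String": "String",
}

MAX_VALUE_BYTES = 64 * 1024  # assumption: "64 KB" means 65,536 bytes


def check_value_size(edm_type, value):
    """Return True if a binary or string value fits within the 64 KB limit.

    Other types have a fixed width, so they always pass.
    """
    if edm_type not in EDM_TO_INTERIM:
        raise KeyError(f"unknown Azure Table data type: {edm_type}")
    if edm_type == "Edm.Binary":
        size = len(value)
    elif edm_type == "Edm.String":
        # Two bytes per UTF-16 code unit, without a byte order mark.
        size = len(value.encode("utf-16-le"))
    else:
        return True
    return size <= MAX_VALUE_BYTES
```

Under these assumptions, a string of 32,768 basic characters is the largest that passes the check.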

Lookup activity properties

For details about the properties, see Lookup activity.

Related content

For a list of data stores supported as sources and sinks by the copy activity, see Supported data stores.