How does Azure Workflow Orchestration Manager work?

APPLIES TO: Azure Data Factory, Azure Synapse Analytics


Note

Workflow Orchestration Manager for Azure Data Factory is powered by the open-source Apache Airflow application. Documentation and more tutorials for Airflow are available on the Apache Airflow Documentation and Community pages.

Workflow Orchestration Manager in Azure Data Factory uses Python-based directed acyclic graphs (DAGs) to run your orchestration workflows. To use this feature, provide your DAGs and plugins in Azure Blob Storage or through a GitHub repository. To manage your DAGs, you can launch the Airflow UI from Azure Data Factory (ADF) by using a command-line interface (CLI) or a software development kit (SDK).
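As a rough illustration of what a Python-based DAG file can look like, the following sketch writes a minimal Airflow 2.x DAG into a local `dags` folder, ready to be uploaded or committed. The DAG name `hello_workflow`, the task names, and the `dags` folder name are assumptions made for this example, not values taken from this article. The DAG source is kept as a string so that the script runs without Airflow installed. It uses `schedule_interval`, which Airflow 2.x accepts; newer Airflow releases prefer `schedule`.

```python
from pathlib import Path

# Source of a minimal Airflow 2.x DAG file, kept as a string so this script
# does not need Airflow installed. All names inside it are hypothetical.
DAG_SOURCE = '''\
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator

with DAG(
    dag_id="hello_workflow",          # hypothetical DAG name
    start_date=datetime(2024, 1, 1),
    schedule_interval=None,           # no schedule: run only when triggered
    catchup=False,
) as dag:
    extract = BashOperator(task_id="extract", bash_command="echo extract")
    load = BashOperator(task_id="load", bash_command="echo load")

    extract >> load  # "load" runs only after "extract" succeeds
'''


def write_sample_dag(root: Path) -> Path:
    """Write the sample DAG to <root>/dags/hello_workflow.py and return its path."""
    dags_dir = root / "dags"  # assumed folder name for this example
    dags_dir.mkdir(parents=True, exist_ok=True)
    dag_file = dags_dir / "hello_workflow.py"
    dag_file.write_text(DAG_SOURCE, encoding="utf-8")
    return dag_file
```

For example, `write_sample_dag(Path("airflow_staging"))` creates `airflow_staging/dags/hello_workflow.py`, which could then be uploaded to Blob Storage or committed to a GitHub repository.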

Create a Workflow Orchestration Manager environment

Refer to: Create a Workflow Orchestration Manager environment

Import DAGs

Workflow Orchestration Manager provides two methods for loading DAGs from Python source files into the Airflow environment:

Enable Git Sync: This service synchronizes your GitHub repository with Workflow Orchestration Manager so that you can import DAGs directly from the repository. Refer to: Sync a GitHub repository in Workflow Orchestration Manager

Azure Blob Storage: Upload your DAGs, plugins, and related files to a designated folder in a Blob Storage account that is linked with Azure Data Factory. Then import the folder path into Workflow Orchestration Manager. Refer to: Import DAGs using Azure Blob Storage

Remove DAGs from the Airflow environment

Refer to: Delete DAGs in Workflow Orchestration Manager

Monitor DAG runs

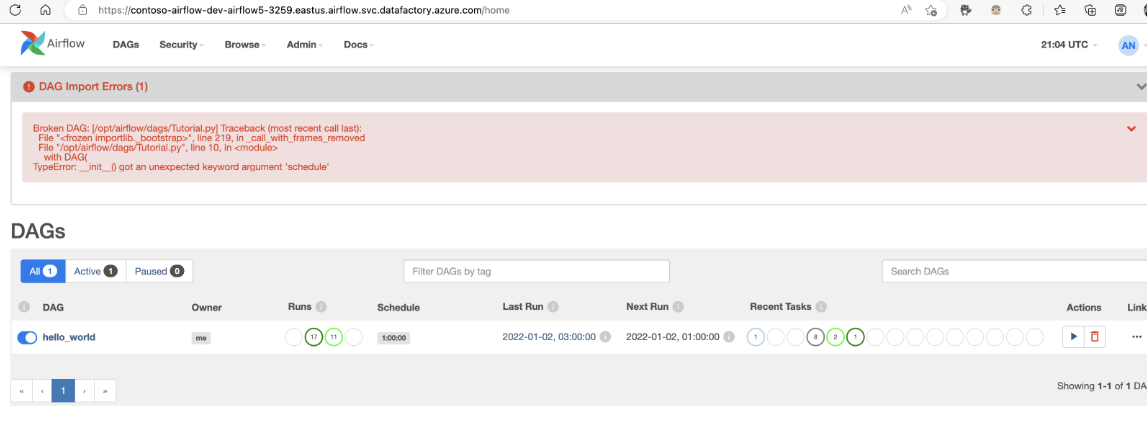

To monitor your Airflow DAGs, sign in to the Airflow UI with the username and password that you created earlier.

Select the Airflow environment that you created.

Sign in with the username and password that you provided when you created the Airflow integration runtime. If needed, you can reset the username or password by editing the Airflow integration runtime.

Troubleshoot DAG import issues

Problem: DAG import takes more than 5 minutes.

Mitigation: Reduce the size of each import. One way to do this is to create multiple DAG folders, each with fewer DAGs, across multiple containers.
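One way to plan such a split is sketched below: it groups the DAG files in a local folder into batches of a fixed maximum size. The folder names `dags_batch_01`, `dags_batch_02`, and so on, and the batch size itself, are assumptions for illustration. The script only produces a plan; it doesn't move files or talk to Azure.

```python
from pathlib import Path
from typing import Dict, Iterator, List


def batch_dag_files(dag_dir: Path, max_per_folder: int) -> Iterator[List[Path]]:
    """Yield lists of at most max_per_folder DAG files, in sorted order."""
    if max_per_folder < 1:
        raise ValueError("max_per_folder must be at least 1")
    files = sorted(dag_dir.glob("*.py"))  # only Python files count as DAGs here
    for start in range(0, len(files), max_per_folder):
        yield files[start:start + max_per_folder]


def plan_folders(dag_dir: Path, max_per_folder: int) -> Dict[str, List[str]]:
    """Map hypothetical target folder names to the DAG file names they would hold."""
    batches = batch_dag_files(dag_dir, max_per_folder)
    return {
        f"dags_batch_{index:02d}": [dag_file.name for dag_file in batch]
        for index, batch in enumerate(batches, start=1)
    }
```

Each entry in the resulting plan corresponds to one smaller folder that can be imported on its own.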

Problem: Imported DAGs don't show up when you sign in to the Airflow UI.

Mitigation: Sign in to the Airflow UI and check for DAG parsing errors. These errors can occur if the DAG files contain incompatible code. The Airflow UI shows the affected files and the exact line numbers.
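Some parsing errors can be caught before import. The following sketch uses Python's standard `ast` module to check DAG files in a local folder for syntax errors and reports the file name and line number of each failure. It is only a partial pre-check under the assumption that the DAGs are available locally: it doesn't detect missing packages, Airflow version incompatibilities, or other errors that appear only when Airflow loads the file.

```python
import ast
from pathlib import Path
from typing import List, Tuple


def find_syntax_errors(dag_dir: Path) -> List[Tuple[str, int, str]]:
    """Return (file name, line number, message) for each file that fails to parse."""
    problems = []
    for dag_file in sorted(dag_dir.rglob("*.py")):
        source = dag_file.read_text(encoding="utf-8")
        try:
            # Parse only; the DAG code is never executed.
            ast.parse(source, filename=str(dag_file))
        except SyntaxError as err:
            problems.append((dag_file.name, err.lineno or 0, err.msg))
    return problems
```

An empty result means that every file parsed, not that Airflow will accept every DAG, so the Airflow UI remains the place to confirm.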