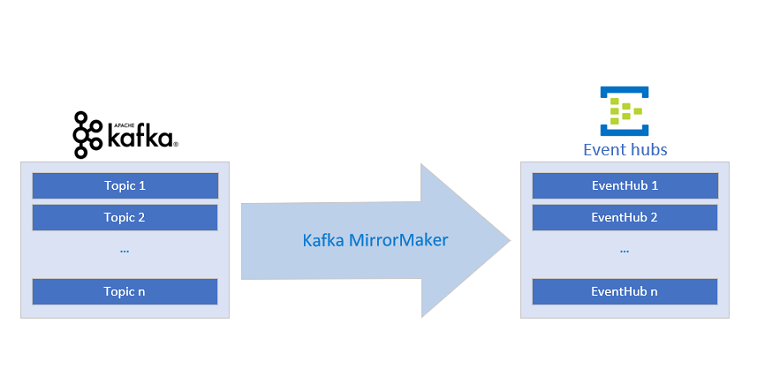

Replicate data from a Kafka cluster to Event Hubs using Apache Kafka MirrorMaker 1

This tutorial shows how to mirror a Kafka cluster into an Azure event hub using Kafka MirrorMaker 1.

Note

This sample is available on GitHub.

Note

This article contains references to a term that Microsoft no longer uses. When the term is removed from the software, we'll remove it from this article.

In this tutorial, you learn how to:

- Create an Event Hubs namespace

- Clone the example project

- Set up a Kafka cluster

- Configure Kafka MirrorMaker

- Run Kafka MirrorMaker

Introduction

This tutorial shows how an event hub and Kafka MirrorMaker can integrate an existing Kafka pipeline into Azure by "mirroring" the Kafka input stream in the Event Hubs service. This approach lets you integrate Apache Kafka streams using several federation patterns.

An Azure Event Hubs Kafka endpoint enables you to connect to Azure Event Hubs using the Kafka protocol (that is, Kafka clients). By making minimal changes to a Kafka application, you can connect to Azure Event Hubs and enjoy the benefits of the Azure ecosystem. Event Hubs currently supports the protocol of Apache Kafka versions 1.0 and later.

You can use Apache Kafka's MirrorMaker 1 unidirectionally from Apache Kafka to Event Hubs. MirrorMaker 2 can be used in both directions, but the MirrorCheckpointConnector and MirrorHeartbeatConnector that are configurable in MirrorMaker 2 must both be configured to point to the Apache Kafka broker and not to Event Hubs. This tutorial shows configuring MirrorMaker 1.

Prerequisites

To complete this tutorial, make sure you have:

- Read through the Event Hubs for Apache Kafka article.

- An Azure subscription. If you do not have one, create a free account before you begin.

- Java Development Kit (JDK) 1.7+

  - On Ubuntu, run apt-get install default-jdk to install the JDK.

  - Be sure to set the JAVA_HOME environment variable to point to the folder where the JDK is installed.

- Download and install a Maven binary archive

  - On Ubuntu, you can run apt-get install maven to install Maven.

- Git

  - On Ubuntu, you can run sudo apt-get install git to install Git.

Create an Event Hubs namespace

An Event Hubs namespace is required to send to and receive from any Event Hubs service. See Creating an event hub for instructions to create a namespace and an event hub. Make sure to copy the Event Hubs connection string for later use.
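The producer configuration later in this tutorial needs the namespace's fully qualified domain name (FQDN) on port 9093, and that host name is already embedded in the Endpoint field of the connection string. The following Java sketch shows one way to extract it. EventHubsConnectionString and its methods are hypothetical helpers, not part of the sample repository. The sketch assumes the Key=Value;Key=Value layout shown in this article and splits each segment only at its first equals sign, because a base64 SharedAccessKey can end in "=" padding.

```java
import java.net.URI;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical helper (not part of the sample repository) that derives the
 * Kafka bootstrap.servers value from an Event Hubs connection string.
 */
final class EventHubsConnectionString {

    /** Splits "Key=Value;Key=Value" segments; a value may itself contain '='. */
    static Map<String, String> parse(String connectionString) {
        Map<String, String> parts = new LinkedHashMap<>();
        for (String segment : connectionString.split(";")) {
            if (segment.trim().isEmpty()) {
                continue; // tolerate a trailing ';'
            }
            int eq = segment.indexOf('=');
            if (eq < 0) {
                throw new IllegalArgumentException("Malformed segment: " + segment);
            }
            parts.put(segment.substring(0, eq).trim(), segment.substring(eq + 1).trim());
        }
        return parts;
    }

    /** Returns "<namespace FQDN>:9093", the Kafka endpoint of the namespace. */
    static String bootstrapServers(String connectionString) {
        String endpoint = parse(connectionString).get("Endpoint");
        if (endpoint == null) {
            throw new IllegalArgumentException("No Endpoint in connection string");
        }
        // sb://mynamespace.servicebus.windows.net/ -> mynamespace.servicebus.windows.net
        String host = URI.create(endpoint).getHost();
        if (host == null) {
            throw new IllegalArgumentException("Endpoint has no host: " + endpoint);
        }
        return host + ":9093";
    }

    public static void main(String[] args) {
        String cs = "Endpoint=sb://mynamespace.servicebus.windows.net/;"
                + "SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=XXXXXXXXXXXXXXXX";
        System.out.println(bootstrapServers(cs));
    }
}
```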

Clone the example project

Now that you have an Event Hubs connection string, clone the Azure Event Hubs for Kafka repository and navigate to the mirror-maker subfolder:

git clone https://github.com/Azure/azure-event-hubs-for-kafka.git

cd azure-event-hubs-for-kafka/tutorials/mirror-maker

Set up a Kafka cluster

Use the Kafka quickstart guide to set up a cluster with the desired settings (or use an existing Kafka cluster).

Configure Kafka MirrorMaker

Kafka MirrorMaker enables the "mirroring" of a stream. Given source and destination Kafka clusters, MirrorMaker ensures any messages sent to the source cluster are received by both the source and destination clusters. This example shows how to mirror a source Kafka cluster to a destination event hub. This scenario can be used to send data from an existing Kafka pipeline to Event Hubs without interrupting the flow of data.

For more detailed information on Kafka MirrorMaker, see the Kafka Mirroring/MirrorMaker guide.

To configure Kafka MirrorMaker, give it a Kafka cluster as its consumer/source and an event hub as its producer/destination.
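To make the consumer/source and producer/destination roles concrete, here is a conceptual, in-memory Java model of mirroring. It is not MirrorMaker's implementation and uses no Kafka APIs: the hypothetical MirrorModel class represents each cluster as a map from topic name to an append-only list of records and tracks a per-topic offset the way a consumer group would. It illustrates the behavior described above: records stay in the source, and each record is copied once to the same-named topic on the destination (on the Event Hubs Kafka endpoint, a topic corresponds to an event hub).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Conceptual model only, NOT the real MirrorMaker: "clusters" are maps from
 * topic name to an append-only list of records.
 */
final class MirrorModel {
    final Map<String, List<String>> source = new HashMap<>();
    final Map<String, List<String>> destination = new HashMap<>();
    // Next offset to read per source topic, analogous to committed consumer-group offsets.
    private final Map<String, Integer> committed = new HashMap<>();

    void sendToSource(String topic, String record) {
        source.computeIfAbsent(topic, t -> new ArrayList<>()).add(record);
    }

    /** One consume-and-forward pass; returns how many records were copied. */
    int mirrorOnce() {
        int copied = 0;
        for (Map.Entry<String, List<String>> e : source.entrySet()) {
            String topic = e.getKey();
            List<String> records = e.getValue();
            int from = committed.getOrDefault(topic, 0);
            List<String> target = destination.computeIfAbsent(topic, t -> new ArrayList<>());
            for (int i = from; i < records.size(); i++) {
                target.add(records.get(i)); // "produce" to the same-named destination topic
                copied++;
            }
            committed.put(topic, records.size()); // "commit" only after forwarding
        }
        return copied;
    }

    public static void main(String[] args) {
        MirrorModel mm = new MirrorModel();
        mm.sendToSource("orders", "o-1");
        mm.sendToSource("orders", "o-2");
        mm.mirrorOnce();
        mm.sendToSource("orders", "o-3");
        mm.mirrorOnce();
        System.out.println(mm.source.get("orders"));
        System.out.println(mm.destination.get("orders"));
    }
}
```

Committing the offset only after forwarding mirrors the usual at-least-once ordering of consume, produce, then commit; the model does not simulate failures.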

Consumer configuration

Update the consumer configuration file source-kafka.config, which tells MirrorMaker the properties of the source Kafka cluster.

source-kafka.config

bootstrap.servers={SOURCE.KAFKA.IP.ADDRESS1}:{SOURCE.KAFKA.PORT1},{SOURCE.KAFKA.IP.ADDRESS2}:{SOURCE.KAFKA.PORT2},etc
group.id=example-mirrormaker-group
exclude.internal.topics=true
client.id=mirror_maker_consumer
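If you generate source-kafka.config from a script or build step rather than editing it by hand, a sketch like the following may help. The SourceKafkaConfig class, its methods, and the broker host names are hypothetical placeholders; the property keys and the other values are the ones from the file above. The load helper reads the text back with java.util.Properties to check that it parses as a Java properties file.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

/**
 * Hypothetical generator for source-kafka.config (not part of the sample repository).
 * Broker host names are placeholders for your own source cluster.
 */
final class SourceKafkaConfig {

    /** Renders the consumer settings as key=value lines. */
    static String render(List<String> brokers, String groupId) {
        if (brokers.isEmpty()) {
            throw new IllegalArgumentException("At least one source broker is required");
        }
        StringBuilder sb = new StringBuilder();
        // Comma-separated host:port pairs used to make the initial connection.
        sb.append("bootstrap.servers=").append(String.join(",", brokers)).append('\n');
        // Consumer group under which MirrorMaker tracks its progress on the source.
        sb.append("group.id=").append(groupId).append('\n');
        // Leave Kafka-internal topics such as __consumer_offsets out of the subscription.
        sb.append("exclude.internal.topics=true\n");
        sb.append("client.id=mirror_maker_consumer\n");
        return sb.toString();
    }

    /** Parses the rendered text as a Java properties file. */
    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        String text = render(
                Arrays.asList("kafka1.example.com:9092", "kafka2.example.com:9092"),
                "example-mirrormaker-group");
        System.out.print(text);
        System.out.println(load(text).getProperty("bootstrap.servers"));
    }
}
```

Writing the lines directly, instead of calling Properties.store, avoids the backslash escaping that store adds before colons and the timestamp comment it writes.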

Producer configuration

Now update the producer configuration file mirror-eventhub.config, which tells MirrorMaker to send the duplicated (or "mirrored") data to the Event Hubs service. Specifically, change bootstrap.servers and sasl.jaas.config to point to your Event Hubs Kafka endpoint. The Event Hubs service requires secure (SASL) communication, which is achieved by setting the last three properties in the following configuration:

mirror-eventhub.config

bootstrap.servers={YOUR.EVENTHUBS.FQDN}:9093
client.id=mirror_maker_producer

# Required for Event Hubs
sasl.mechanism=PLAIN
security.protocol=SASL_SSL
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{YOUR.EVENTHUBS.CONNECTION.STRING}";

Important

Replace {YOUR.EVENTHUBS.CONNECTION.STRING} with the connection string for your Event Hubs namespace. For instructions on getting the connection string, see Get an Event Hubs connection string. Here's an example configuration:

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="Endpoint=sb://mynamespace.servicebus.windows.net/;SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=XXXXXXXXXXXXXXXX";
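The sasl.jaas.config line is easy to get wrong, because the whole connection string goes inside the double-quoted password value and the line must end with a semicolon. The following Java sketch assembles the same producer properties as mirror-eventhub.config from a namespace FQDN and a connection string. EventHubsProducerConfig is a hypothetical helper; the property names and values match the configuration above. Rejecting a double quote in the input is a defensive assumption of this sketch, since a stray quote would end the JAAS password value early.

```java
import java.util.Properties;

/**
 * Hypothetical helper (not part of the sample repository) that builds the
 * mirror-eventhub.config producer properties.
 */
final class EventHubsProducerConfig {

    static String jaasConfig(String connectionString) {
        // A '"' would terminate the quoted password value, so reject it up front.
        if (connectionString.contains("\"")) {
            throw new IllegalArgumentException("Unexpected double quote in connection string");
        }
        // The literal user name "$ConnectionString" marks the password as a full connection string.
        return "org.apache.kafka.common.security.plain.PlainLoginModule required "
                + "username=\"$ConnectionString\" password=\"" + connectionString + "\";";
    }

    static Properties build(String namespaceFqdn, String connectionString) {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", namespaceFqdn + ":9093"); // Event Hubs Kafka endpoint
        p.setProperty("client.id", "mirror_maker_producer");
        // Required for Event Hubs: SASL PLAIN authentication over TLS.
        p.setProperty("sasl.mechanism", "PLAIN");
        p.setProperty("security.protocol", "SASL_SSL");
        p.setProperty("sasl.jaas.config", jaasConfig(connectionString));
        return p;
    }

    public static void main(String[] args) {
        String cs = "Endpoint=sb://mynamespace.servicebus.windows.net/;"
                + "SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=XXXXXXXXXXXXXXXX";
        Properties p = build("mynamespace.servicebus.windows.net", cs);
        // Print plain key=value lines (iteration order is unspecified).
        for (String key : p.stringPropertyNames()) {
            System.out.println(key + "=" + p.getProperty(key));
        }
    }
}
```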

Run Kafka MirrorMaker

Run the Kafka MirrorMaker script from the root Kafka directory using the newly updated configuration files. Make sure to either copy the config files to the root Kafka directory, or update their paths in the following command.

bin/kafka-mirror-maker.sh --consumer.config source-kafka.config --num.streams 1 --producer.config mirror-eventhub.config --whitelist=".*"
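The --whitelist argument in the command above selects which source topics are mirrored; ".*" selects every topic, and the quotes keep the shell from treating the asterisk as a glob. The Java sketch below models that selection under stated assumptions: that the pattern is a Java regular expression that must match the entire topic name, and that Kafka's internal topics (__consumer_offsets and __transaction_state) are skipped when exclude.internal.topics=true, as set in source-kafka.config. It is a model for reasoning about patterns, not MirrorMaker's own code, and WhitelistSketch is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

/**
 * Model of topic selection, ASSUMING a full-match Java regex and the
 * exclusion of internal topics. Not MirrorMaker's implementation.
 */
final class WhitelistSketch {
    // Kafka's built-in internal topics.
    private static final List<String> INTERNAL =
            Arrays.asList("__consumer_offsets", "__transaction_state");

    static List<String> selected(String whitelist, List<String> topics, boolean excludeInternal) {
        Pattern pattern = Pattern.compile(whitelist);
        List<String> out = new ArrayList<>();
        for (String topic : topics) {
            if (excludeInternal && INTERNAL.contains(topic)) {
                continue; // internal topics are never mirrored in this model
            }
            if (pattern.matcher(topic).matches()) { // whole-name match, not substring
                out.add(topic);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> topics = Arrays.asList("orders", "payments", "__consumer_offsets");
        System.out.println(selected(".*", topics, true));
        System.out.println(selected("orders|payments", topics, true));
    }
}
```

Under the same assumptions, a pattern such as orders|payments would mirror only those two topics, while a partial name such as ord would match nothing.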

To verify that events are reaching the event hub, see the ingress statistics in the Azure portal, or run a consumer against the event hub.

With MirrorMaker running, any events sent to the source Kafka cluster are received by both the Kafka cluster and the mirrored event hub. By using MirrorMaker and an Event Hubs Kafka endpoint, you can migrate an existing Kafka pipeline to the managed Azure Event Hubs service without changing the existing cluster or interrupting any ongoing data flow.

Samples

See the following samples on GitHub:

- Sample code for this tutorial on GitHub

- Azure Event Hubs Kafka MirrorMaker running on an Azure Container Instance

Next steps

To learn more about Event Hubs for Kafka, see the following articles: