Manage and increase quotas and limits for resources with Azure Machine Learning

Azure uses quotas and limits to prevent budget overruns due to fraud, and to honor Azure capacity constraints. Consider these limits as you scale for production workloads. In this article, you learn about:

- Default limits on Azure resources related to Azure Machine Learning.

- Creating workspace-level quotas.

- Viewing your quotas and limits.

- Requesting quota increases.

Along with managing quotas and limits, you can learn how to plan and manage costs for Azure Machine Learning or learn about the service limits in Azure Machine Learning.

Special considerations

Quotas are applied to each subscription in your account. If you have multiple subscriptions, you must request a quota increase for each subscription.

A quota is a credit limit on Azure resources, not a capacity guarantee. If you have large-scale capacity needs, contact Azure support to increase your quota.

A quota is shared across all the services in your subscriptions, including Azure Machine Learning. Calculate usage across all services when you're evaluating capacity.

Note

Azure Machine Learning compute is an exception. It has a separate quota from the core compute quota.

Default limits vary by offer category type, such as free trial, pay-as-you-go, and virtual machine (VM) series (such as Dv2, F, and G).

Default resource quotas and limits

In this section, you learn about the default and maximum quotas and limits for the following resources:

- Azure Machine Learning assets

- Azure Machine Learning computes (including serverless Spark)

- Azure Machine Learning shared quota

- Azure Machine Learning online endpoints (both managed and Kubernetes) and batch endpoints

- Azure Machine Learning pipelines

- Azure Machine Learning integration with Synapse

- Virtual machines

- Azure Container Instances

- Azure Storage

Important

Limits are subject to change. For the latest information, see Service limits in Azure Machine Learning.

Azure Machine Learning assets

The following limits on assets apply on a per-workspace basis.

| Resource | Maximum limit |

|---|---|

| Datasets | 10 million |

| Runs | 10 million |

| Models | 10 million |

| Components | 10 million |

| Artifacts | 10 million |

In addition, the maximum run time is 30 days and the maximum number of metrics logged per run is 1 million.

Azure Machine Learning Compute

Azure Machine Learning Compute has a default quota limit on both the number of cores and the number of unique compute resources that are allowed per region in a subscription.

Note

- The quota on the number of cores is split by each VM Family and cumulative total cores.

- The quota on the number of unique compute resources per region is separate from the VM core quota, as it applies only to the managed compute resources of Azure Machine Learning.

To raise the limits for the following items, request a quota increase:

- VM family core quotas. To learn more about which VM family to request a quota increase for, see virtual machine sizes in Azure. For example, GPU VM families start with an "N" in their family name (such as the NCv3 series).

- Total subscription core quotas

- Cluster quota

- Other resources in this section

Available resources:

Dedicated cores per region have a default limit of 24 to 300, depending on your subscription offer type. You can increase the number of dedicated cores per subscription for each VM family. Specialized VM families like NCv2, NCv3, or ND series start with a default of zero cores. GPUs also default to zero cores.

Low-priority cores per region have a default limit of 100 to 3,000, depending on your subscription offer type. The number of low-priority cores per subscription can be increased and is a single value across VM families.

Total compute limit per region has a default limit of 500 per region within a given subscription and can be increased up to a maximum value of 2500 per region. This limit is shared between training clusters, compute instances, and managed online endpoint deployments. A compute instance is considered a single-node cluster for quota purposes.

The following table shows more limits in the platform. Reach out to the Azure Machine Learning product team through a technical support ticket to request an exception.

| Resource or Action | Maximum limit |

|---|---|

| Workspaces per resource group | 800 |

| Nodes in a single Azure Machine Learning compute (AmlCompute) cluster set up as a non communication-enabled pool (that is, can't run MPI jobs) | 100 nodes but configurable up to 65,000 nodes |

| Nodes in a single Parallel Run Step run on an Azure Machine Learning compute (AmlCompute) cluster | 100 nodes but configurable up to 65,000 nodes if your cluster is set up to scale as mentioned previously |

| Nodes in a single Azure Machine Learning compute (AmlCompute) cluster set up as a communication-enabled pool | 300 nodes but configurable up to 4,000 nodes |

| Nodes in a single Azure Machine Learning compute (AmlCompute) cluster set up as a communication-enabled pool on an RDMA enabled VM Family | 100 nodes |

| Nodes in a single MPI run on an Azure Machine Learning compute (AmlCompute) cluster | 100 nodes |

| Job lifetime | 21 days 1 |

| Job lifetime on a low-priority node | 7 days 2 |

| Parameter servers per node | 1 |

1 Maximum lifetime is the duration between when a job starts and when it finishes. Completed jobs persist indefinitely. Data for jobs not completed within the maximum lifetime isn't accessible.

2 Jobs on a low-priority node can be preempted whenever there's a capacity constraint. We recommend that you implement checkpoints in your job.
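The checkpoint recommendation in footnote 2 can be illustrated with a minimal sketch in plain Python. It assumes a job made of repeatable steps whose state fits in a small JSON file. The names `load_checkpoint`, `save_checkpoint`, and `run_job`, and the `stop_after` parameter used to simulate a preemption, are hypothetical and not part of any Azure Machine Learning API.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path, "r", encoding="utf-8") as f:
            return json.load(f)
    return {"next_step": 0, "total": 0}


def save_checkpoint(path, state):
    """Write the state to a temp file, then swap it in atomically."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # a preemption mid-write can't corrupt the old file


def run_job(path, total_steps, stop_after=None):
    """Resume from the last checkpoint; stop_after simulates a preemption."""
    state = load_checkpoint(path)
    steps_this_run = 0
    while state["next_step"] < total_steps:
        if stop_after is not None and steps_this_run >= stop_after:
            return state  # simulated preemption: exit without finishing
        state["total"] += state["next_step"]  # placeholder for real work
        state["next_step"] += 1
        save_checkpoint(path, state)
        steps_this_run += 1
    return state
```

Calling `run_job` a second time with the same path continues from the saved `next_step` instead of starting over, which is the behavior you want when a low-priority node is reclaimed.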

Azure Machine Learning shared quota

Azure Machine Learning provides a shared quota pool from which users across various regions can access quota to perform testing for a limited amount of time, depending upon availability. The specific time duration depends on the use case. By temporarily using quota from the quota pool, you no longer need to file a support ticket for a short-term quota increase or wait for your quota request to be approved before you can proceed with your workload.

Use of the shared quota pool is available for running Spark jobs and for testing inferencing for Llama-2, Phi, Nemotron, Mistral, Dolly, and Deci-DeciLM models from the Model Catalog for a short time. Before you can deploy these models via the shared quota, you must have an Enterprise Agreement subscription. For more information on how to use the shared quota for online endpoint deployment, see How to deploy foundation models using the studio.

You should use the shared quota only for creating temporary test endpoints, not production endpoints. For endpoints in production, you should request dedicated quota by filing a support ticket. Billing for shared quota is usage-based, just like billing for dedicated virtual machine families. To opt out of shared quota for Spark jobs, fill out the Azure Machine Learning shared capacity allocation opt out form.

Azure Machine Learning online endpoints and batch endpoints

Azure Machine Learning online endpoints and batch endpoints have resource limits described in the following table.

Important

These limits are regional: each limit applies separately in every region you use. For example, if your current limit for number of endpoints per subscription is 100, you can create 100 endpoints in the East US region, 100 endpoints in the West US region, and 100 endpoints in each of the other supported regions in a single subscription. The same principle applies to all the other limits.

To determine the current usage for an endpoint, view the metrics.

To request an exception from the Azure Machine Learning product team, follow the steps in the Endpoint limit increases section.

| Resource | Limit 1 | Allows exception | Applies to |

|---|---|---|---|

| Endpoint name | Endpoint names must | - | All types of endpoints 3 |

| Deployment name | Deployment names must | - | All types of endpoints 3 |

| Number of endpoints per subscription | 100 | Yes | All types of endpoints 3 |

| Number of endpoints per cluster | 60 | - | Kubernetes online endpoint |

| Number of deployments per subscription | 500 | Yes | All types of endpoints 3 |

| Number of deployments per endpoint | 20 | Yes | All types of endpoints 3 |

| Number of deployments per cluster | 100 | - | Kubernetes online endpoint |

| Number of instances per deployment | 50 4 | Yes | Managed online endpoint |

| Max request time-out at endpoint level | 180 seconds | - | Managed online endpoint |

| Max request time-out at endpoint level | 300 seconds | - | Kubernetes online endpoint |

| Total requests per second at endpoint level for all deployments | 500 5 | Yes | Managed online endpoint |

| Total connections per second at endpoint level for all deployments | 500 5 | Yes | Managed online endpoint |

| Total connections active at endpoint level for all deployments | 500 5 | Yes | Managed online endpoint |

| Total bandwidth at endpoint level for all deployments | 5 MBPS 5 | Yes | Managed online endpoint |

1 This is a regional limit. For example, if the current limit on the number of endpoints is 100, you can create 100 endpoints in the East US region, 100 endpoints in the West US region, and 100 endpoints in each of the other supported regions in a single subscription. The same principle applies to all the other limits.

2 Single dashes, as in my-endpoint-name, are accepted in endpoint and deployment names.

3 Endpoints and deployments can be of different types, but limits apply to the sum of all types. For example, the sum of managed online endpoints, Kubernetes online endpoints, and batch endpoints under each subscription can't exceed 100 per region by default. Similarly, the sum of managed online deployments, Kubernetes online deployments, and batch deployments under each subscription can't exceed 500 per region by default.

4 We reserve 20% extra compute resources for performing upgrades. For example, if you request 10 instances in a deployment, you must have a quota for 12. Otherwise, you receive an error. There are some VM SKUs that are exempt from extra quota. For more information on quota allocation, see virtual machine quota allocation for deployment.

5 Requests per second, connections, bandwidth, etc. are related. If you request to increase any of these limits, ensure that you estimate/calculate other related limits together.
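Footnote 3 says that all endpoint types count against one regional limit. The following sketch shows one way to check an inventory against that rule. The function name, the `(region, endpoint_type)` input shape, and the sample inventory are illustrative assumptions. The default of 100 comes from the table above.

```python
from collections import Counter

DEFAULT_ENDPOINT_LIMIT = 100  # per subscription, per region (default from the table)


def endpoints_over_limit(endpoints, limit=DEFAULT_ENDPOINT_LIMIT):
    """Return {region: count} for regions whose combined endpoint count exceeds limit.

    endpoints is an iterable of (region, endpoint_type) pairs. The type is
    ignored on purpose: managed online, Kubernetes online, and batch
    endpoints all draw from the same regional limit.
    """
    per_region = Counter(region for region, _endpoint_type in endpoints)
    return {region: count for region, count in per_region.items() if count > limit}


# Hypothetical inventory: 101 endpoints of mixed types in one region,
# exactly 100 in another.
inventory = (
    [("eastus", "managed")] * 60
    + [("eastus", "kubernetes")] * 30
    + [("eastus", "batch")] * 11
    + [("westus", "managed")] * 100
)
over = endpoints_over_limit(inventory)  # only eastus exceeds the limit
```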

Virtual machine quota allocation for deployment

For managed online endpoints, Azure Machine Learning reserves 20% of your compute resources for performing upgrades on some VM SKUs. If you request a given number of instances for those VM SKUs in a deployment, you must have a quota for ceil(1.2 * number of instances requested for deployment) * number of cores for the VM SKU available to avoid getting an error. For example, if you request 10 instances of a Standard_DS3_v2 VM (that comes with four cores) in a deployment, you should have a quota for 48 cores (12 instances * 4 cores) available. This extra quota is reserved for system-initiated operations such as OS upgrades and VM recovery, and it won't incur cost unless such operations run.
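The formula above can be expressed as a short helper. This is a sketch for illustration only: the function name and the `reserve_extra` flag are assumptions, and the flag stands in for checking whether a SKU is exempt from the extra reservation. Integer arithmetic is used so that the ceiling is exact.

```python
def required_core_quota(instances, cores_per_vm, reserve_extra=True):
    """Cores of quota needed: ceil(1.2 * instances) * cores_per_vm.

    Set reserve_extra=False for a VM SKU that is exempt from the
    extra 20% reservation.
    """
    if instances < 0 or cores_per_vm < 0:
        raise ValueError("instances and cores_per_vm must be non-negative")
    if not reserve_extra:
        return instances * cores_per_vm
    # ceil(1.2 * n) == ceil(6n / 5) == (6n + 4) // 5 for non-negative integers
    reserved_instances = (6 * instances + 4) // 5
    return reserved_instances * cores_per_vm


# The example from the text: 10 instances of a four-core VM.
quota_needed = required_core_quota(10, 4)  # 12 instances * 4 cores = 48
```

Because the ceiling is applied to the instance count before multiplying by cores, 3 instances of a four-core SKU need quota for 4 instances (16 cores), not 14.4 cores rounded up.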

There are certain VM SKUs that are exempted from extra quota reservation. To view the full list, see Managed online endpoints SKU list. To view your usage and request quota increases, see View your usage and quotas in the Azure portal. To view your cost of running a managed online endpoint, see View costs for a managed online endpoint.

Azure Machine Learning pipelines

Azure Machine Learning pipelines have the following limits.

| Resource | Limit |

|---|---|

| Steps in a pipeline | 30,000 |

| Workspaces per resource group | 800 |

Azure Machine Learning integration with Synapse

Azure Machine Learning serverless Spark provides easy access to distributed computing capability for scaling Apache Spark jobs. Serverless Spark uses the same dedicated quota as Azure Machine Learning Compute. To increase quota limits, submit a support ticket and request a quota and limit increase for the ESv3 series under the "Machine Learning Service: Virtual Machine Quota" category.

To view quota usage, navigate to Machine Learning studio and select the subscription name that you would like to see usage for. Select "Quota" in the left panel.

Virtual machines

Each Azure subscription has a limit on the number of virtual machines across all services. Virtual machine cores have a regional total limit and a regional limit per size series. Both limits are separately enforced.

For example, consider a subscription with an East US total VM core limit of 30, an A series core limit of 30, and a D series core limit of 30. This subscription would be allowed to deploy 30 A1 VMs, or 30 D1 VMs, or a combination of the two that doesn't exceed a total of 30 cores.
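Because both limits are enforced separately, a deployment plan has to pass two checks. The sketch below encodes that logic with the numbers from the example. The function name and the dictionary-based inputs are illustrative assumptions, and the example treats A1 and D1 as single-core sizes.

```python
def fits_core_limits(requested, series_limits, regional_total_limit):
    """Check a plan against the per-series limits and the regional total limit.

    requested maps a VM series to the cores you want to deploy;
    series_limits maps a VM series to its regional core limit.
    Returns (ok, reasons), where reasons lists every violated limit.
    """
    reasons = []
    for series, cores in requested.items():
        limit = series_limits.get(series, 0)  # unknown series: assume no quota
        if cores > limit:
            reasons.append(f"{series} series: {cores} cores > limit {limit}")
    total = sum(requested.values())
    if total > regional_total_limit:
        reasons.append(f"regional total: {total} cores > limit {regional_total_limit}")
    return (not reasons, reasons)


limits = {"A": 30, "D": 30}
all_a = fits_core_limits({"A": 30}, limits, 30)               # 30 A1 VMs: allowed
mixed = fits_core_limits({"A": 15, "D": 15}, limits, 30)      # mix of 30 cores: allowed
too_many = fits_core_limits({"A": 20, "D": 20}, limits, 30)   # 40 cores: blocked by the total
```

In the last case each series is under its own limit, so only the regional total blocks the plan.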

You can't raise limits for virtual machines above the values shown in the following table.

| Resource | Limit |

|---|---|

| Azure subscriptions associated with a Microsoft Entra tenant | Unlimited |

| Coadministrators per subscription | Unlimited |

| Resource groups per subscription | 980 |

| Azure Resource Manager API request size | 4,194,304 bytes |

| Tags per subscription 1 | 50 |

| Unique tag calculations per subscription 2 | 80,000 |

| Subscription-level deployments per location | 800 3 |

| Locations of Subscription-level deployments | 10 |

1 You can apply up to 50 tags directly to a subscription. Within the subscription, each resource or resource group is also limited to 50 tags. However, the subscription can contain an unlimited number of tags that are dispersed across resources and resource groups.

2 Resource Manager returns a list of tag name and values in the subscription only when the number of unique tags is 80,000 or less. A unique tag is defined by the combination of resource ID, tag name, and tag value. For example, two resources with the same tag name and value would be calculated as two unique tags. You still can find a resource by tag when the number exceeds 80,000.

3 Deployments are automatically deleted from the history as you near the limit. For more information, see Automatic deletions from deployment history.
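The unique tag definition in footnote 2 can be made concrete with a short sketch. It counts distinct combinations of resource ID, tag name, and tag value. The function name, the input shape, and the resource IDs `vm-a` and `vm-b` are hypothetical placeholders.

```python
def count_unique_tags(resources):
    """Count unique (resource ID, tag name, tag value) combinations.

    resources maps a resource ID to its {tag name: tag value} dictionary.
    """
    unique = {
        (resource_id, name, value)
        for resource_id, tags in resources.items()
        for name, value in tags.items()
    }
    return len(unique)


# Two resources that carry the same tag name and value still count twice,
# because the resource ID is part of the combination.
sample = {
    "vm-a": {"env": "prod"},
    "vm-b": {"env": "prod"},
}
unique_count = count_unique_tags(sample)  # 2
```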

Container Instances

For more information, see Container Instances limits.

Storage

Azure Storage has a limit of 250 storage accounts per region, per subscription. This limit includes both Standard and Premium storage accounts.

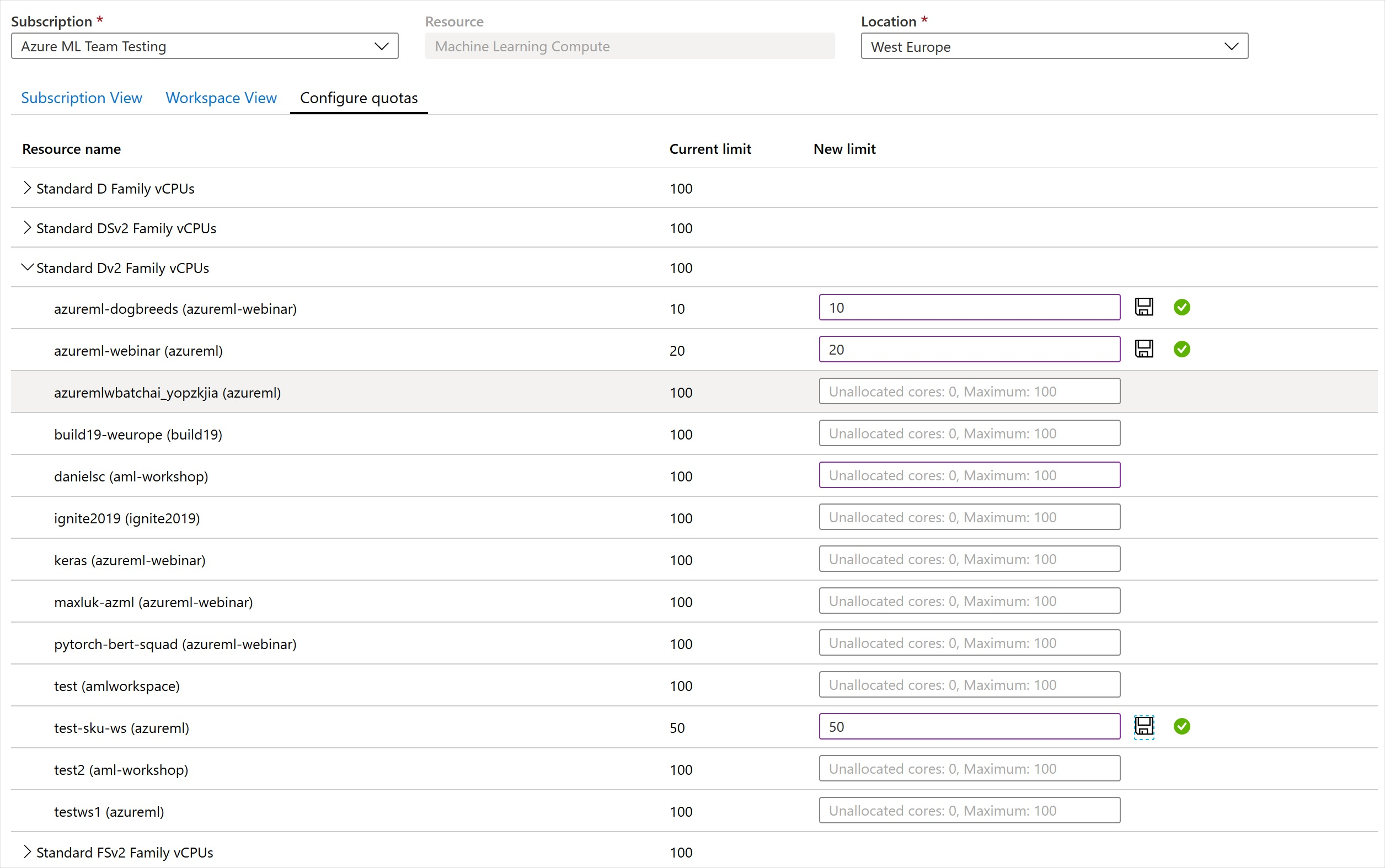

Workspace-level quotas

Use workspace-level quotas to manage Azure Machine Learning compute target allocation between multiple workspaces in the same subscription.

By default, all workspaces share the same quota as the subscription-level quota for VM families. However, you can set a maximum quota for individual VM families on workspaces in a subscription. Quotas for individual VM families let you share capacity and avoid resource contention issues.

- Go to any workspace in your subscription.

- In the left pane, select Usages + quotas.

- Select the Configure quotas tab to view the quotas.

- Expand a VM family.

- Set a quota limit on any workspace listed under that VM family.

You can't set a negative value or a value higher than the subscription-level quota.

Note

You need subscription-level permissions to set a quota at the workspace level.
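The rule that a workspace-level quota can't be negative or exceed the subscription-level quota can be sketched as a simple validation. The function name and the core-count parameters are assumptions for illustration. The real check is performed by the service when you save the quota.

```python
def validate_workspace_quota(requested_cores, subscription_quota_cores):
    """Return requested_cores if it is a valid workspace-level quota.

    A workspace quota for a VM family must be non-negative and no higher
    than the subscription-level quota for that family.
    """
    if requested_cores < 0:
        raise ValueError("workspace quota can't be negative")
    if requested_cores > subscription_quota_cores:
        raise ValueError(
            f"workspace quota {requested_cores} exceeds the "
            f"subscription-level quota {subscription_quota_cores}"
        )
    return requested_cores


workspace_quota = validate_workspace_quota(24, 100)  # within the subscription quota
```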

View quotas in the studio

When you create a new compute resource, by default you see only VM sizes that you already have quota to use. Switch the view to Select from all options.

Scroll down until you see the list of VM sizes you don't have quota for.

Use the link to go directly to the online customer support request for more quota.

View your usage and quotas in the Azure portal

To view your quota for various Azure resources like virtual machines, storage, or network, use the Azure portal:

On the left pane, select All services and then select Subscriptions under the General category.

From the list of subscriptions, select the subscription whose quota you're looking for.

Select Usage + quotas to view your current quota limits and usage. Use the filters to select the provider and locations.

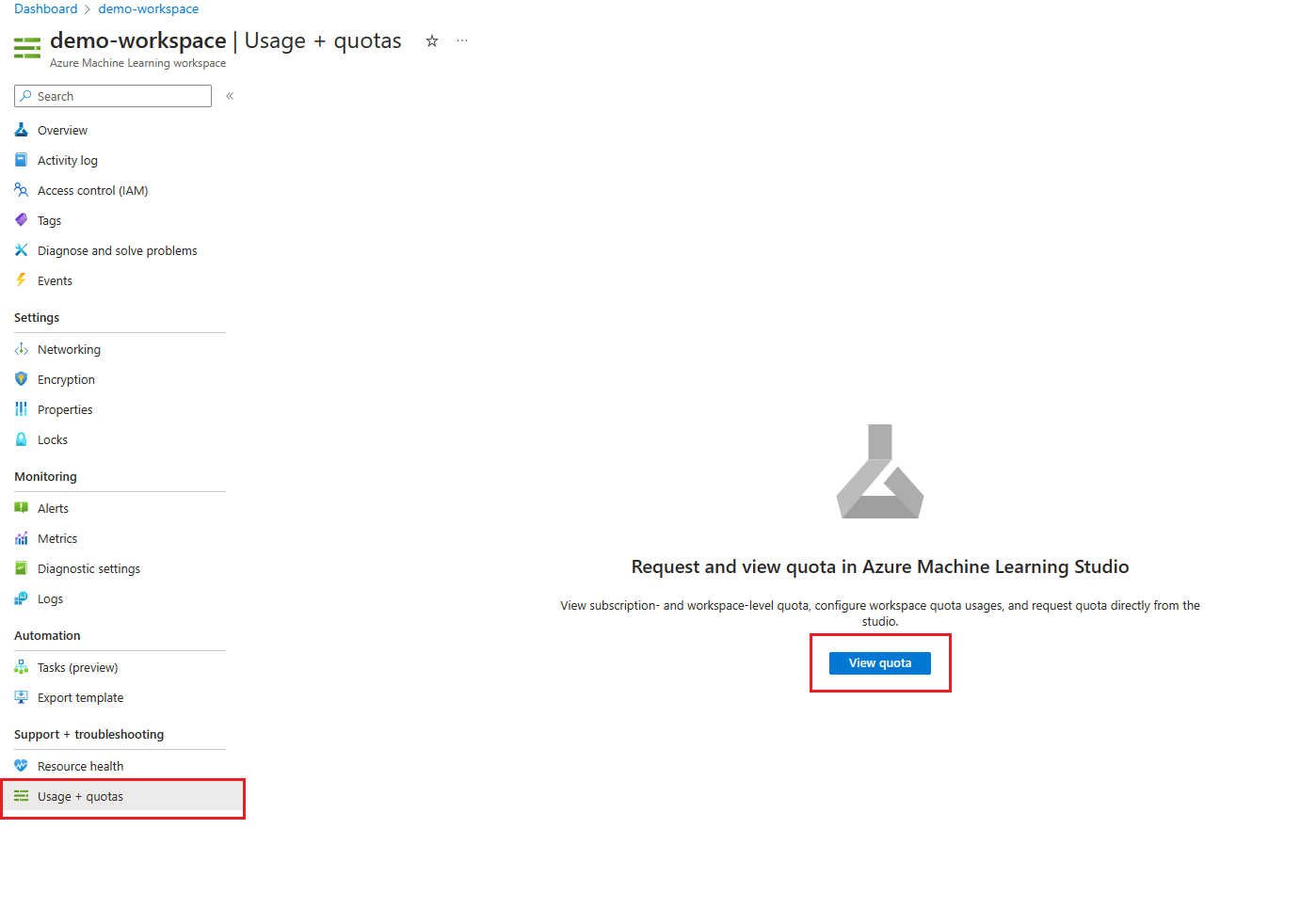

You manage the Azure Machine Learning compute quota on your subscription separately from other Azure quotas:

Go to your Azure Machine Learning workspace in the Azure portal.

On the left pane, in the Support + troubleshooting section, select Usage + quotas to view your current quota limits and usage.

Select a subscription to view the quota limits. Filter to the region you're interested in.

You can switch between a subscription-level view and a workspace-level view.

Request quota and limit increases

A VM quota increase raises the number of cores per VM family per region. An endpoint limit increase raises the endpoint-specific limits per subscription per region. Make sure to choose the right category when you submit the quota increase request, as described in the following sections.

VM quota increases

To raise the Azure Machine Learning VM quota above the default limit, request a quota increase from the Usage + quotas view described earlier, or submit a quota increase request from Azure Machine Learning studio.

Navigate to the Usage + quotas page by following the above instructions. View the current quota limits. Select the SKU for which you'd like to request an increase.

Provide the quota you'd like to increase and the new limit value. Finally, select Submit to continue.

Endpoint limit increases

To raise an endpoint limit, open an online customer support request. When you request an endpoint limit increase, provide the following information:

When opening the support request, select Service and subscription limits (quotas) as the Issue type.

Select the subscription of your choice.

Select Machine Learning Service: Endpoint Limits as the Quota type.

On the Additional details tab, you need to provide detailed reasons for the limit increase in order for your request to be processed. Select Enter details and then provide the limit you'd like to increase and the new value for each limit, the reason for the limit increase request, and location(s) where you need the limit increase. Be sure to add the following information into the reason for limit increase:

- Description of your scenario and workload (such as text, image, and so on).

- Rationale for the requested increase.

- Provide the target throughput and its pattern (average/peak QPS, concurrent users).

- Provide the target latency at scale and the current latency you observe with a single instance.

- Provide the VM SKU and number of instances in total to support the target throughput and latency. Provide how many endpoints/deployments/instances you plan to use in each region.

- Confirm if you have a benchmark test that indicates the selected VM SKU and the number of instances that would meet your throughput and latency requirement.

- Provide the type of the payload and size of a single payload. Network bandwidth should align with the payload size and requests per second.

- Provide your planned timeline (the date by which you need the increased limits, with a staged plan if possible) and confirm that (1) the cost of running at that scale is reflected in your budget and (2) the target VM SKUs are approved.

Finally, select Save and continue.
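When you prepare the payload and throughput figures for the request, a rough bandwidth estimate helps confirm that the numbers align. The sketch below multiplies payload size by requests per second. It assumes that the "5 MBPS" default in the endpoint table means megabytes per second, with one megabyte taken as 10^6 bytes. That interpretation, the function names, and the sample figures are all assumptions.

```python
ENDPOINT_BANDWIDTH_LIMIT_MB_PER_S = 5.0  # default from the table; unit is an assumption


def estimated_bandwidth_mb_per_s(payload_bytes, requests_per_second):
    """Estimate bandwidth as payload size times request rate, in MB/s (10**6 bytes)."""
    return payload_bytes * requests_per_second / 1_000_000


def needs_bandwidth_increase(payload_bytes, requests_per_second,
                             limit_mb_per_s=ENDPOINT_BANDWIDTH_LIMIT_MB_PER_S):
    """Return True if the estimate exceeds the given bandwidth limit."""
    return estimated_bandwidth_mb_per_s(payload_bytes, requests_per_second) > limit_mb_per_s


# Hypothetical workload: 20 KB payloads at 500 requests per second.
estimate = estimated_bandwidth_mb_per_s(20_000, 500)  # 10.0 MB/s
```

The estimate ignores response payloads and protocol overhead, so treat it as a lower bound when you fill in the request.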

Note

An endpoint limit increase request is different from a VM quota increase request. If your request is related to a VM quota increase, follow the instructions in the VM quota increases section.

Compute limit increases

In order to increase the total compute limit, open an online customer support request. Provide the following information:

When opening the support request, select Technical as the Issue type.

Select the subscription of your choice.

Select Machine Learning as the Service.

Select the resource of your choice.

In the summary, mention "Increase total compute limits".

Select Compute Cluster as the Problem type and Cluster does not scale up or is stuck in resizing as the Problem subtype.

On the Additional details tab, provide the subscription ID, region, new limit (between 500 and 2,500), and business justification for increasing the total compute limit in this region.

Finally, select Create to create a support request ticket.