How It Works: Always On–When Is My Secondary Failover Ready?

I keep running into the question: “When will my secondary allow automatic failover?” Based on the question I did some extended research and I will try to summarize in is blog post. I don’t want to turn this post into a novel so I am going to take some liberties and assume you have read SQL Server Books Online topics related to Always On failover.

The easy answer: Only when the secondary is marked SYNCHRONIZED. - End of blog right? – not quite!

At a 10,000 foot level that statement is easy enough to understand but the issue is really understanding what constitutes SYNCHRONIZED. There are several state machines that determine the NOT vs SYNCHRONIZED state. These states are maintained using multiple worker threads and at different locations to keep the system functional and fast.

- Secondary Connection State

- End Of Log Reached State

To understand these states I need to discuss a few concepts to make sure we are all on the same page.

Not Replicating Commits – Log Blocks Are The Replication Unit

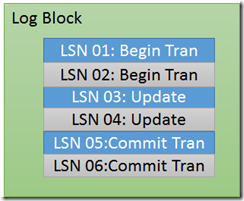

The first concept is to remember SQL Server does not ship transactions. It ships log blocks.

The design is not really different than a stand alone server. On a stand alone server a commit transaction issues (FlushToLSN/StartLogFlush) to make sure all LSN’s up to and including the commit LSN flushed. This causes the commit to block the session, waiting for the log manager to indicate that all blocks of the log have been properly flushed to stable media. Once the LSN has been reached any pending transaction(s) can be signaled to continue.

|

Let’s use the diagram on the left for discussion. The ODD LSNs are from Session 1 and the EVEN LSNs are from Session 2. The Log Block is a contiguous, chunk of memory (often 64K and disk sector size aligned), maintained by the Log Manager. Each database has multiple log blocks maintained in LSN order. As multiple workers are processing they can use various portions of the log block, as shown here. To make this efficient a worker requests space in the block to store its record. This request returns the current location in the log block, increments the next write position in the log block (to be used by the next caller) and acquires a reference count. This makes the allocation of space for a log record only a few CPU instructions. The storage position movement is thread safe and the reference count is used to determine when the log block can be closed out. In general, closing out a log block means all the space has been reserved and new space is being handed out for another log block. When all references are released the block can be compressed, encrypted, … and flushed to disk. Note: A commit transaction (FlushToLSN/StartLogFlush) can trigger similar behavior, even when the block is not full, so a commit transaction does not have to wait for the block to become full. Reference: https://support.microsoft.com/kb/230785 In this example both commits would be waiting on the log block to be written to stable media. Session 1 – FlushToLSN (05) Session 2 – FlushToLSN (06) The log writer’s completion routine is invoked when the I/O completes for the block. The completion routine checks for errors and when successful, signals any sessions waiting on a LSN <= 6. In this case both session 1 and 2 are signaled to continue processing. Write Log Waits accumulate during this wait for the flush activities. You can read more about write log waits at: https://blogs.msdn.com/b/psssql/archive/2009/11/03/the-sql-server-wait-type-repository.aspx Misconception I had a discussion on an e-mail where the individual was thinking we only shipped committed transactions. Not true (for Always On or Database Mirroring). If I only shipped committed transactions it would require a different set of log blocks for EACH transaction. This would be terrible performance impacting overhead. It would also be extremely difficult to handle changes on the same page. If SQL Server doesn’t have the ACID series of log records how would SQL Server ever be able to run recovery, both redo and undo. Throw in row versioning and shipping just the committed log records becomes very cumbersome. We don’t ship log records, we ship log blocks and optimize the recovery needs. |

Parallel Flushing / Hardening

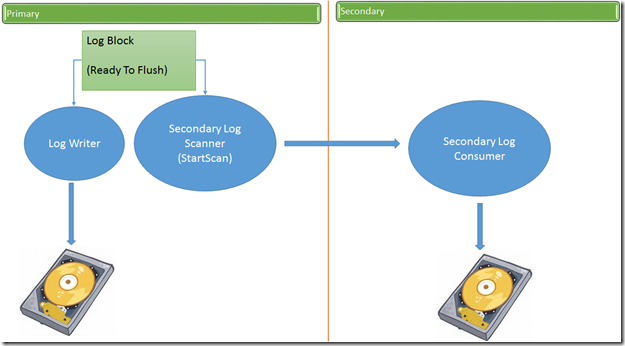

Always On is a bit different than database mirroring (DBM) with respect to sending the log blocks to the secondary replica(s). DBM flushes the log block to disk and once completed locally, sends the block to the secondary.

Always On changed this to flush the block(s) in parallel. In fact, a secondary could have hardened log block(s) before the primary I/O completes. This design increases performance and narrows the NOT IN SYNC window(s).

SQL Server uses an internal, callback mechanism with the log manager. When a log block is ready to be flushed (fully formatted and ready to write to disk) the notification callbacks are fired. A callback you might expect is Always On. These notifications start processing in parallel with the actual flushing of the log to the local (LDF) stable media.

As the diagram shows, the race is on. One worker (log writer) is flushing to the local media and the secondary consumer is reading new blocks and flushing on the secondary. A stall in the I/O on the primary can allow the secondary to flush before the primary just as a delay on the secondary could cause the primary to flush the I/O before the secondary.

My first reaction to this was, oh no, not in sync this is bad. However, the SQL Server developers didn’t stop at this juncture, Always On is built to handle this situation from the ground up.

Not shown in the diagram are the progress messages. The secondary sends messages to the primary indicating the hardened LSN level. The primary uses that information to help determine synchronization state. Again, these messages execute in parallel to the actual log block shipping activities.

Cluster Registry Key for the Availability Group

The cluster, AG resource is the central location used to maintain the synchronization states. Each secondary has information stored in the AG resource key (binary blob) indicating information about the current LSN levels, synchronization state and other details. This registry key is already replicated, atomically across the cluster so as long as we use the registry at the front of our WAL protocol design the AG state is maintained.

Note: We don’t update the registry for every transaction. In fact, it is seldom updated, only at required state changes. What I mean by WAL protocol here is that the registry is atomically updated before further action is taken on the database so the actions taken in the database are in sync with the registry across the cluster.

Secondary Connection State (Key to Synchronized State)

The design of Always On is a pull, not a push model. The primary does NOT connect to the secondary, the secondary must connect to the primary and ask for log blocks.

Whenever the secondary is NOT connected the cluster registry is immediately updated to NOT SYNCHRONIZED. Think if it this way. If we can’t communicate with the secondary we are unable to guarantee the state remains synchronized and we protect the system by marking it NOT SYNCHRONIZED.

Primary Database Startup

Whenever a database is taken offline/shutdown the secondary connections are closed. When the database is started we immediately set the state of the secondary to NOT SYNCHRONIZED and then recover the database on the primary. Once recovery has completed the secondary(s) are allowed to connect and start the log scanning activity.

Note: There is an XEvent session, definition included at the end of this blog, that you can be use to track several of the state changes.

Connected

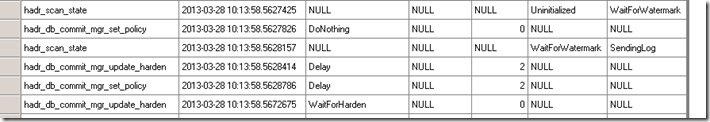

Once the secondary is connected it asks (pull) for a log scan to begin. As the XEvents show, you can see the states change for the secondary scanner on the primary.

| Uninitialized | The secondary has connected SQL Server but it has not sent LSN information yet. |

| WaitForWatermark | Waiting for the secondary to reconcile the hardened log LSN position on the secondary with the cluster key and recovery information. The secondary will send its end-of-log (EOL) LSN to the primary. |

| SendingLog | The primary has received the end-of-log position from the secondary so it can send log from the specified LSN on the primary to the secondary. |

Note: None of these states alone dictate that the secondary is IN SYNC. The secondary is still marked as NOT SYNCHRONIZED in the cluster registry.

Hardened Log On Secondary

You will notice the 3rd column is indicating the commit, harden policy. The harden policy indicates how a commit transaction should act on the primary database.

| DoNothing | There is no active ‘SendingLog’ so the commits on the primary don’t wait for acknowledgement from the secondary. There is no secondary connected so it can’t wait for an acknowledgement even if it wanted to. The state of the secondary must remain NOT SYNCHRONIZED as the primary is allowed to continue. I tell people this is why it is called HADR and not DRHA. High Availability (HA) is the primary goal so if a secondary is not connected the primary is allowed to continue processing. While this does put the installation in danger of data loss it allows production uptime and alternate backup strategies to compensate. |

| Delay | When a secondary is not caught up to the primary end–of-log (EOL) the transaction commits are held for a short delay period (sleep) helping the secondary catch up. This is directly seen while the secondary is connected and catching up (SYNCHRONIZING.) |

| WaitForHarden | As mentioned earlier the secondary sends progress messages to the primary. When the primary detects that the secondary has caught up to the end of the log the harden policy is changed to WaitForHarden. SYNCHRONIZING – DMVs will show synchronizing state until the end-of-log (EOL) is reached. Think of as a catch up phase. You can’t be synchronizing unless you are connected. SYNCHRONIZED – This is the point at which the secondary is marked as SYNCHRONIZED. (Secondary is connected and known to have achieved log block hardening with the primary EOL point.) !!! SYNCHRONIZED IS THE ONLY STATE ALLOWING AUTOMATIC FAILOVER !!! From this point forward all transactions have to wait for the primary (log writer) and secondary to advance the LSN flushes to the desired harden location. Going back to the first example, Session 2 waits for all LSNs up to and including 06 to be hardened. When involving the synchronous replica this is a wait for LSNs up to 06 to be hardened on the primary and the secondary. Until the progress of both the primary and secondary achieve LSN 06 the committing session is held (Wait For Log Flush.) |

Clean vs Hard Unexpected Database Shutdowns

When you think about database shutdown there are 2 main scenarios, clean and unexpected (hard). When a clean shutdown occurs the primary does not change the synchronized state in the cluster registry. Whatever the current synchronization state is at the time the shutdown was issued remains sticky. This allows clean failovers, AG moves and other maintenance operations to occur cleanly.

Unexpected, can’t change the state if the unexpected action occurs at the service level (SQL Server process terminated, power outage, etc..). However, if the database is taken offline for some reason (log writes start failing) the connection to the secondary(s) are terminated and terminating the connection immediately updates the cluster registry to NOT SYNCHRONIZED. Something like failure to write to the log (LDF) could be as simple as an administrator incorrectly removing a mount point. Adding the mount point back to the system and restarting the database restores the system quickly.

Scenarios

Now I started running scenarios on my white board. I think a few of these are applicable to this post to help solidify understanding.

| In Synchronized State Primary flushed LSN but not flushed on Secondary |

|

| In Synchronized State Secondary flushed LSN but not flushed on Primary |

|

The first reaction when I draw this out for my peers is, we are loosing transactions. Really we are not. We never acknowledge the transaction until the primary and secondary indicate the log has been hardened to LSN at both locations.

If you take the very same scenarios to a stand alone environment you have the same timing situations. The power outage could happen right after the log is hardened but before the client is sent the acknowledgement. It looks like a connection drop to the client and upon restart of the database the committed transaction is redone/present. In contrast, the flush may not have completed when the power outage occurred so the transaction would be rolled back. In neither case did the client receive an acknowledgement of success or failure for the commit.

SYNCHRONIZED – AUTOMATIC FAILOVER ALLOWED

Going back to the intent of this blog, only when the cluster registry has the automatic, targeted secondary, marked SYNCHRONIZED is automatic failover allowed. You can throw all kinds of other scenarios at this but as soon as you drop the connection (restart the log scan request, …) the registry is marked NOT SYNCHRONIZED and it won’t be marked SYNCHRONIZED again until the end-of-log (EOL) sync point is reached.

Many customers have experienced failure to allow fail over because they stopped the secondary and then tried a move. They assumed that because they no longer had primary, transaction activity it was safe. Not true as ghost, checkpoint and other processes can still be adding log records. As soon as you stop the secondary, by definition you no longer have HA so the primary marks the secondary NOT SYNCHRONIZED.

As long as the AG failover detection can use proper, cluster resource offline behaviors, SQL Server is shutdown cleanly or SQL Server is terminated harshly, while the secondary is in the SYNCHRONIZED state, automatic failover is possible. If the SQL Server is not shutdown but a database is taken offline the state is updated to NOT SYNCHRONIZED.

Single Failover Target

Remember that you can only have a single, automatic failover target. To help your HA capabilities you may want to setup a second, synchronous replica. While it can’t be the target of automatic failover it could help High Availability (HA).

For example, the automatic failover, secondary target machine has a power outage. Connection on primary is no longer valid so the secondary is marked NOT SYNCHRONIZED. The alternate synchronous, replica can still be SYNCHRONIZED and a target for a manual move WITHOUT DATA LOSS. The automatic failover target, in this example, is only a move WITH ALLOW DATA LOSS target.

Don’t forget that to enable true HA for this example the replica(s) should have redundant hardware. Second network cards, cabling and such. If you use the same network and a networking problem arises the connections on the primary are dropped and that immediately marks the replica(s) NOT SYNCHRONIZED.

Resolving State

Most of the time the question addressed in this post comes up because the secondary is NOT becoming the primary and is in Resolving state. Looking at the state changes leading up to the issue the secondary was in SYNCHRONIZING. When the primary goes down the secondary knows it was not SYNCHRONIZED. The secondary is attempting to connect to the a primary and the primary is down so the state is RESOLVING.

-------------------------------------------------------------------------------------------------------

Customizing Failover – All Kinds of Options

A secondary question that always follows this main question is: “If a disk fails on my database, within an AG why does automatic failover not occur?”

The short answer is that the secondary connections are dropped during database shutdown – NOT SYNCHRONIZED. (SQL Server development is looking into keeping the SYNCHRONIZED state in this situation instead of forcing NOT SYNCHRONIZED in vNext, opening up the window for automatic failover possibilities.)

The other part of the answer is that the built-in, failover logic is not designed to detect a single database failure. If you look at the failure conditions in SQL Server Books Online none of these are database level detections.

I was part of the work we did to enhance the failover diagnostics and decision conditions/levels. We specifically considered the custom solution needs. We evaluated dozens of scenarios, ranked and targeted those conditions safe for the broad customer base using Always On. This design specifically involved allowing any customer to extend the logic for your specific business needs. We made sure the mechanisms, the SQL Server and resource DLL use, were using publicly consumable interfaces and documented in SQL Server Books Online.

Note: All of the following can be done with PowerShell.

XEvents

For XEvents you can use the XEvent Linq Reader and monitor a live feed from the SQL Server. The easiest way to accomplish this would be to setup a SQL Agent job (continuous running so if the processes exits it restarts itself) which launches a C# executable or Powershell script.

- The job can make sure it is only starting the executable on the primary server.

- The executable can make sure the proper XEvent sessions are running (these sessions can even be defined to startup during SQL Server, service startup).

- The executable can monitor the steam of events for the custom trigger points you consider critical to your business needs and when the parameters fall out of the desired boundary(s) issue the Cluster command to MOVE the AG to another node.

- The XEvent session can also write to a file (.XEL) so the system has history of the event stream as well.

Note: The executable should be drop connection resilient. The design of the XEvent live stream is to terminate the connection for the stream if the server detects the event stream is stalled (client not processing events fast enough.) This means the client needs to detect the connection failure and reset. This usually means actions are posted to a worker thread in the application and the main reader only accepts the events and hands them to background tasks.

sp_server_diagnostics (https://msdn.microsoft.com/en-us/library/ff878233.aspx)

This was specifically designed to flow across a T-SQL connection (TDS) so anyone using a SQL Server client (.NET, ODBC, OLDEB, …) can execute the procedure and process the results. You don’t want dozens of these running on the SQL Server but you could easily monitor this stream as well and take any custom actions necessary.

Note: The I/O result row is NOT used by the SQL Server resource dll to make failover decisions. It is used for logging purposes only. It is not safe assumption that an I/O stall would be resolved by a failover of the system or even restart of the service. We have many examples of virus scanners and such components that can cause this issue and it would lead to a Ping-Pong among nodes if we trigger automated failover to occur.

DMVs and Policy Based Management (PBM)

In most cases it will be more efficient to setup an XEvent to monitor various aspects of the system. (Specific errors, database status changes, AG status changes, ….). However, the DMVs are also useful and a great safety net. We use many of the DMVs and the PBM rules to drive the Always On dashboard. You can create your own policies and execute them as well as using the XEvent predicates to limit the events produced.

Between some DMV queries and the policies you can easily detect things like corruption errors occurring, loss of a drive, etc…

External Factor Detections

Using PowerShell and WMI you can query information about the machine. For example you can check each drive for reported failure conditions, such as too many sector remaps or temperature problems. When detected you can take preemptive action to move the AG and pause the node, marking it for proper maintenance.

Example

$a = get-wmiobject win32_DiskDrive

$a[0] | get-member

Loss of LDF (No Automatic Failover)

A specific tenant of Always On is – protect the data- don’t automate things that can lead to data loss.

The scenario is a mount point, used to hold the LDF, is mistakenly removed from the primary node. This causes the SQL Server database to become suspect, missing log file but does not trigger automatic failover.

If the mount point can simply be added back to the node the database can be brought back online and business continues as usual, no data loss. If we had forced failover (ALLOW DATA LOSS) it could have led to data loss for a situation that the administrators could have cleanly resolved.

When the secondary drops a connection (loss of network, database LDF is damaged, …) the state is updated to ‘not synchronized’, preventing automatic failover. We are careful because allowing anything else may lead to split brain and other such scenarios that cause data loss. Furthermore, if you change a primary to a secondary it goes into recovery state and at that point if we had serious damage and needed to recover the data it is much more difficult to access the database.

A situation like this requires a business decision. Can the issue be quickly resolved or does it require a failover with allow data loss?

To help in preventing data loss the replicas are marked suspended. As described in the following link you can use a snapshot database, before resuming, to capture the changes that will be lost. https://msdn.microsoft.com/en-us/library/ff877957.aspx Then using T-SQL queries and facilities such as TableDiff one can determine the best reconciliation.

Note: You want to make sure the snapshot has a short life span to avoid the additional overhead for a long period of time and the fact that is can hold up other operations, such as File Stream garbage collection actions.

One could build additional monitoring to:

- Make sure primary was marked suspended

- Force the failover with allow data loss

- Create snapshot on OLD primary

- Resume OLD primary as a new secondary

Then take appropriate business steps to use the data in the snapshot to determine what the data loss would/could be. This is likely to involve a custom, data resolver design (much like the custom conflict resolution options of database replication) to determine how the data should be resolved.

Don’t Kill SQL

Killing SQL Server is a dangerous practice. It is highly unlikely but I can never rule out that it may be possible to introduce unwanted behavior, such as when SQL Server is attempting to update the cluster registry key, leaving the key corrupted. A corrupted registry key, blob for the Availability Group (AG) would then render every replica of the AG damaged because the AG configuration is damaged, not the data! You would then have to carefully drop and recreate the AG in a way that did not require you to rebuild the actual databases but instead allows the cluster configuration to be corrected. It is only few minute operation, once discovered, to fix it but immediate downtime and is usually a panic stricken situation.

SQL Server is design to handle power outages and tested well to accommodate this. Kill is a bit like simulating a power outage and not something Microsoft would recommend as a business practice. Instead you should be using something like PowerShell and issuing a ‘move’ of the availability group in a clean and designed way.

Example: (Move-ClusterResource) https://technet.microsoft.com/en-us/library/ee461049.aspx

XEvent Session

CREATE EVENT SESSION [HadronSyncStateChanges_CommitHardenPolicies] ON SERVER

ADD EVENT sqlserver.hadr_db_commit_mgr_set_policy( ACTION(package0.callstack,sqlserver.database_name)),

ADD EVENT sqlserver.hadr_db_commit_mgr_update_harden( ACTION(package0.callstack,sqlserver.database_name)),

ADD EVENT sqlserver.hadr_db_partner_set_sync_state( ACTION(package0.callstack,sqlserver.database_name)),

ADD EVENT sqlserver.hadr_db_manager_state (ACTION(package0.callstack,sqlserver.database_name)),

ADD EVENT sqlserver.hadr_ag_wsfc_resource_state(ACTION(package0.callstack,sqlserver.database_name)),

ADD EVENT sqlserver.hadr_scan_state( ACTION(package0.callstack,sqlserver.database_name))

ADD TARGET package0.event_file(SET filename=N'C:\temp\SyncStates',max_rollover_files=(100))

WITH (MAX_MEMORY=4096 KB,EVENT_RETENTION_MODE=NO_EVENT_LOSS,MAX_DISPATCH_LATENCY=5 SECONDS,MAX_EVENT_SIZE=0 KB,MEMORY_PARTITION_MODE=PER_CPU,TRACK_CAUSALITY=ON,STARTUP_STATE=ON)

Bob Dorr - Principal SQL Server Escalation Engineer

Comments

Anonymous

June 02, 2013

The comment has been removedAnonymous

June 03, 2013

Thanks Simon for the updated content - More applicable.Anonymous

August 31, 2013

Thanks for the valuable insights to make things clearer.Anonymous

July 01, 2015

Thanks for explaining the most useful concept.. :)Anonymous

September 21, 2015

Will automatic Failover happen when the database is corrupted in primary server?Anonymous

October 25, 2016

Great post Bob as it touches many important aspects of AG !!Appreciate it !Anonymous

February 09, 2017

Thanks for the detailed article. Are these concepts of secondary pull, parallel log flush applicable for synchronous replicas as well?Anonymous

November 29, 2017

Any updated information for new version e.g. 2017Anonymous

November 29, 2017

Any updated information for new version e.g. 2017