Security planning for LLM-based applications

Overview

This article discusses the Security planning for the sample Retail-mart application. It shows the architecture and data flow diagram of the example application. The artifacts are subject to change as your architecture and codebase evolves. Each of the labeled entities in the figures below are accompanied by meta-information, which describes the following items:

- Threats

- Data in scope

- Security controls recommendations

Discretion and resilience are essential qualities for AI models to effectively navigate complex and sensitive tasks, ensuring they can make informed decisions and maintain performance in challenging environments. More details can be found below under the Discretion heading. In addition to the security controls recommended in this plan, you're strongly advise to have robust defense mechanisms implemented using SIEM and SOC tools.

Application overview

Retail-mart is a fictional retail corporation that stands as a symbol of innovation, customer centric, and market leadership in the retail industry. With a rich history spanning several decades, Retail-mart has consistently set the standard for excellence and has become a household name, synonymous with quality and convenience.

Retail-mart operates a diverse range of retail products, catering to the diverse needs of its customers. Whether it's groceries, clothing, jewelry or DIY construction products, the convenience stores e-commerce platform provides a seamless shopping experience. Retail-mart has a presence in every facet of the retail landscape.

The company's commitment to customer satisfaction is evident in its dedication to offering the following items:

- Top-notch product.

- Exceptional service.

- A wide array of choices to meet the demands of shoppers from all walks of life.

Retail-mart wants to embrace cutting-edge technologies by using data-driven analytics and AI-driven recommendations to enhance the shopping experience and ensure that customers find exactly what they need.

This security planning will highlight the security risks associated with the proposed architecture and the security controls that will reduce the likelihood and severity of the security risks.

Diagrams

Architecture diagram

|

|---|

| Figure 1: Architecture Diagram |

Data Flow Diagram

|

|---|

| Figure 2: Data Flow Diagram |

Use cases

- Prompt flow/ prompt inputs requiring search /RAG Pattern Data Flow Sequence -- 1, 2, 3.a, 4, 5, 6, 7, 8, 9, 10, 11, 12

Data flow attributes

| # | Transport Protocol | Data Classification | Authentication | Authorization | Notes |

|---|---|---|---|---|---|

| 1 | HTTPS | Confidential | Entra ID | Entra Scopes | User is authenticated to web application. User launches chatbot and enters prompt. |

| 2 | HTTPS | Confidential | Entra ID | Entra Application permissions | Request is enhanced and sent to routing service. |

| 3.a | HTTPS | Confidential | Entra ID | Entra Application permissions | If request is related to search, then it's sent to Search RAG (Retrieval Augmented Generation) microservice |

| 3.b | HTTPS | Confidential | Entra ID | Entra Application permissions | If the request is conversational, it is sent to Conversational Microservice. |

| 3.b.1 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Conversational Prompt Input is sent to GPT model |

| 3.b.2 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Conversational prompt response from GPT model. |

| 3.b.3 | HTTPS | Confidential | Entra ID | Entra Application permissions | Response from Conversational Service to Routing Service |

| 3.c | HTTPS | Confidential | Entra ID | Entra Application permissions | If the request is not a search, it is sent to Non-Search Microservice. |

| 3.c.1 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Non-Search Request sent to GPT model |

| 3.c.2 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Non-Search response from GPT model |

| 3.c.3 | HTTPS | Confidential | Entra ID | Entra Application permissions | Response from Non-Search API service to Routing Service |

| 4 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Search API calls AI search API to fetch top 10 products. |

| 5 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Response from AI search API with details about top 10 products matching search input. |

| 6 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Product details output from AI search is sent to GPT model to summarize the content. |

| 7 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Response of GPT model to summarize the output |

| 8 | HTTPS | Confidential | Entra ID | Entra Application permissions | Response from Search Service |

| 9 | HTTPS | Confidential | Entra ID | Entra Application permissions | Response from routing service to Web application |

| 10 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Request sent to content moderation service to filter out harmful content provided by user. Content moderation service used at both user input and output response. |

| 11 | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Output response of content moderation service. |

| 12 | HTTPS | Confidential | Entra ID | Entra Application permissions | Response from web application to client browser |

| 13.a, 13.b, 13.c | HTTPS | Confidential | Entra ID | Azure RBAC(Azure AI Services OpenAI User) | Logs sent to Azure Monitor. |

Threat map

|

|---|

| Figure 3: Threat Map |

Threat properties

Threat #1: Prompt Injection

Principle: Confidentiality, integrity, availability, privacy

Threat: Users can modify the system-level prompt restrictions to "jailbreak" the LLM and overwrite previous controls in place.

As a result of the vulnerability, an attacker can create malicious input to manipulate LLMs into unknowingly execute unintended actions. There are two types of prompt injection direct and indirect. Direct prompt injections, also known as jail breaking, occur when an attacker overwrites or reveals underlying system prompt. Jail breaking allows attackers to exploit backend systems.

Indirect prompt injections occur when LLM accepts input from external sources like websites or files. Attackers may embed a prompt injection in the external content.

Affected Asset(s) Chat Bot Service, Routing Microservice, Conversational Microservice, Azure OpenAI GPT3.5 Model, Search API RAG microservice.

Mitigation:

- Enforce privilege control on LLM access to backend systems.

- Segregate external content from user prompts and limit the influence when untrusted content is used.

- Manually monitor input and output periodically to check that as expected.

- Maintain fine user control on decision making capabilities by LLM.

- All products and services must encrypt data in transit using approved cryptographic protocols and algorithms.

- Use Azure AI Content Safety Filters for prompt inputs and its responses.

- Use TLS to encrypt all HTTP-based network traffic. Use other mechanisms, such as IPSec, to encrypt non-HTTP network traffic that contains customer or confidential data.

- Use only TLS 1.2 or TLS 1.3. Use ECDHE-based ciphers suites and NIST curves. Use strong keys. Enable HTTP Strict Transport Security. Turn off TLS compression and do not use ticket-based session resumption.

Threat #2: Model Theft

Principle: Confidentiality

Threat Users can modify the system-level prompt restrictions to "jailbreak" the LLM and overwrite previous controls in place.

This threat arises when one of the following actions happens to the proprietary LLM models:

- Compromised.

- Physically stolen.

- Copied.

- Weights and parameters are extracted to create a functional equivalent.

Affected asset(s) Chat Bot Service, Routing Microservice, Conversational Microservice, Azure OpenAI GPT3.5 Model, Search API RAG microservice

Mitigation:

- Implement strong access controls and strong authentication mechanisms to limit unauthorized access to LLM model repositories and training environments.

- Restrict the LLMs access to network resources, internal services and API's.

- Regularly monitor and audit access logs and activities related to LLM model repositories to detect and respond to any suspicious activities.

- Automate MLOps deployment with governance and tracking and approval workflows.

- Rate Limiting API calls where applicable.

- All customer or confidential data must be encrypted before being written to non-volatile storage media (encrypted at-rest).

- Use approved algorithms, which includes AES-256, AES-192, or AES-128.

Threat #3 : Model Denial Of Service

Principle: Availability

Threat:

An attacker interacts with an LLM in a method that consumes an exceptionally large amount of resources, which results in a decline in the quality of service for them and other users. The one being attacked can potentially incur high resource costs. This attack can also be due to vulnerabilities in the supply chain.

Affected asset(s) Chat Bot Service, Routing Microservice, Conversational Microservice, Azure OpenAI GPT3.5 Model, Search API RAG microservice

Mitigation:

- Implement input validation and sanitization to ensure user input adheres to defined limits and filters out any malicious content.

- Cap resource use per request or step, so that requests involving complex parts execute more slowly.

- Enforce API rate limits to restrict the number of requests an individual user or IP address can make within a specific time frame.

- Limit the following counts:

- The number of queued actions.

- The number of total actions in a system reacting to LLM responses.

- Continuously monitor the resource utilization of the LLM to identify abnormal spikes or patterns that may indicate a DoS attack.

- Set strict input limits based on the LLMs context window to prevent overload and resource exhaustion.

- Promote awareness among developers about potential DoS vulnerabilities in LLMs and provide guidelines for secure LLM implementation.

- All services within the Azure Trust Boundary must authenticate all incoming requests, including requests coming from the same network. Proper authorization should also be applied to prevent unnecessary privileges.

- Whenever available, use Azure Managed Identities to authenticate services. Service Principals may be used if Managed Identities are not supported.

- External users or services may use Username + Passwords, Tokens, or Certificates to authenticate, provided these credentials are stored on Key Vault or any other vault solution.

- For authorization, use Azure RBAC and conditional access policies to segregate duties. Grant only the least amount of access to perform an action at a particular scope.

Threat #4: Insecure Output Handling

Principle: Confidentiality

Threat: Insufficient scrutiny of LLM output, unfiltered acceptance of the LLM output could lead to unintended code execution.

Insecure Output Handling occurs when there is insufficient validation, sanitization, and handling of the LLM outputs, which are passed downstream to other components and systems. Since LLM-generated content can be controlled by prompt input, this behavior is like providing users with indirect access to more functionality.

Affected asset(s) Web application, Non-Search Microservice, Search API RAG microservice, Conversational Microservice, Browser.

Mitigation:

- Treat the model as any other user. Adopt a zero-trust approach. Apply proper input validation on responses coming from the model to backend functions.

- Follow the best practices to ensure effective input validation and sanitization.

- Encode model output back to users to mitigate undesired code execution by JavaScript or Markdown.

- Use Azure AI Content Safety Filters for prompt inputs and its responses.

Threat #5: Supply chain Vulnerabilities

Principle: Confidentiality, Integrity, and Availability

Threat: Vulnerabilities in the open source/third party packages used for development could lead to an attacker exploitation of those vulnerabilities.

This threat occurs due to vulnerabilities in software components, training data, ML models or deployment platforms.

Affected asset(s) Web application, Non-Search Microservice, Search API RAG microservice, Conversational Microservice, Azure OpenAI GPT 3.5 model, Azure OpenAI Text moderation tool, Azure AI Service API APP

Mitigation:

- Use Azure Artifacts to publish and control feeds, that will lower the risk of supply chain vulnerability.

- Carefully vet data sources and suppliers, including T&Cs and their privacy policies, only using trusted suppliers. Ensure adequate and independently audited security is in place. Verify that model operator policies align with your data protection policies. That is, your data is not used for training their models. Similarly, seek assurances and legal mitigation against using copyrighted material from model maintainers.

- Only use reputable plug-ins and ensure they have been tested for your application requirements. LLM-Insecure Plugin Design provides information on the LLM-aspects of Insecure Plugin design you should test against to mitigate risks from using third-party plugins.

- Maintain an up-to-date inventory of components using a Software Bill of Materials (SBOM) to ensure you have an up-to-date, accurate, and signed inventory preventing tampering with deployed packages. SBOMs can be used to detect and alert new, zero-day vulnerabilities quickly.

- At the time of writing, SBOMs do not cover models, their artifacts, and datasets. If your LLM application uses its own model, you should use MLOps best practices. Use platforms offering secure model repositories with data, model, and experiment tracking.

- You should also use model and code signing when using external models and suppliers.

- Anomaly detection and adversarial robustness tests on supplied models and data can help detect tampering and poisoning.

- Implement sufficient monitoring to cover component and environment vulnerabilities scanning, use of unauthorized plugins, and out-of-date components, including the model and its artifacts.

- Implement a patching policy to mitigate vulnerable or outdated components. Ensure the application relies on a maintained version of APIs and the underlying model.

- Regularly review and audit supplier Security and Access, ensuring no changes in their security posture or T&Cs.

Threat #6 : Over reliance

Principle: Integrity

Threat: Over reliance can occur when an LLM produces erroneous information and provides it in an authoritative manner.

Affected asset(s) Web application, Non-Search Microservice, Search API RAG microservice, Conversational Microservice, Browser

Mitigation:

- Regularly monitor and review the LLM outputs. Use self-consistency or voting techniques to filter out inconsistent text. Comparing multiple model responses for a single prompt can better judge the quality and consistency of output.

- Cross-check the LLM output with trusted external sources. This extra layer of validation can help ensure the information provided by the model is accurate and reliable.

- Enhance the model with fine-tuning or embeddings to improve output quality. Generic pre-trained models are more likely to produce inaccurate information compared to tuned models in a particular domain. Techniques such as prompt engineering, parameter efficient tuning (PET), full model tuning, and chain of thought prompting can be employed for this purpose.

- Implement automatic validation mechanisms that can cross-verify the generated output against known facts or data. This cross-verification can provide an extra layer of security and mitigate the risks associated with hallucinations.

- Break down complex tasks into manageable subtasks and assign them to different agents. This break down not only helps in managing complexity, but it also reduces the chances of hallucinations. Each agent can be held accountable for a smaller task.

- Communicate the risks and limitations associated with using LLMs. These risks include potential for information inaccuracies. Effective risk communication can prepare users for potential issues and help them make informed decisions.

- Build APIs and user interfaces that encourage responsible and safe use of LLMs. Use measures such as content filters, user warnings about potential inaccuracies, and clear labeling of AI-generated content.

- When using LLMs in development environments, establish secure coding practices and guidelines to prevent the integration of possible vulnerabilities.

- Educate users so that they understand the implications of using the LLM outputs directly without any validation.

Threat #7: Information Disclosure

Principle: Confidentiality, Privacy

Threat:

LLM applications have the potential to reveal sensitive information, proprietary algorithms, or other confidential details through their output. This revelation can produce the following results:

- Unauthorized access to sensitive data.

- Unauthorized assess to intellectual property.

- Privacy violations.

- Other security breaches.

Affected asset(s) Web application, Non-Search Microservice, Search API RAG microservice, Conversational Microservice, Browser

Mitigation:

- Integrate adequate data sanitization and scrubbing techniques to prevent user data from entering the training model data.

- Implement robust input validation and sanitization methods to identify and filter out potential malicious inputs. These methods will prevent the model from being poisoned when enriching the model with data and if fine-tuning a model.

- Anything that is deemed sensitive in the fine-tuning data has the potential to be revealed to a user. Therefore, apply the rule of least privilege and do not train the model on information that the highest-privileged user can access which may be displayed to a lower-privileged user.

- Access to external data sources (orchestration of data at runtime) should be limited.

- Apply strict access control methods to external data sources and a rigorous approach to maintaining a secure supply chain.

Secrets inventory

An ideal architecture would contain zero secrets. Credential-less options like managed identities should be used whenever possible. Where secrets are required, it's important to track them for operational purposes by maintaining a secrets inventory.

Appendix

Discretion

AI should be a responsible and trustworthy custodian of any information it has access to. As humans, we will undoubtedly assign a certain level of trust in our AI relationships. At some point, these agents will talk to other agents or other humans on our behalf.

Designing controls that limit access to data and ensure that users/systems have access to authorized data is more important than ever before to establish trust in AI. Inline to this thought process, it is also equally important that LLM outputs aligns with the Responsible AI standards.

Resilience

The system should be able to identify abnormal behaviors and prevent manipulation or coercion outside of the normal boundaries of acceptable behavior in relation to the AI system and the specific task. These behaviors are caused by new types of attacks specific to the AI/ML space.

Systems should be designed to resist inputs that would otherwise conflict with local laws, ethics and values held by the community and its creators. This means providing AI with the capability to determine when an interaction is going “off script.” Integrity.

Hence, it is important to spruce up the defense mechanisms for the early detection of anomalies, so that AI based applications can fail safely while maintaining Business continuity.

Security principles

- Confidentiality refers to the objective of keeping data private or secret. In practice, it's about controlling access to data to prevent unauthorized disclosure.

- Integrity is about ensuring that data has not been tampered with and, therefore, can be trusted. It is correct, authentic, and reliable.

- Availability means that networks, systems, and applications are up and running. It ensures that authorized users have timely reliable access to resources when they are needed.

- Privacy relates to activities that focus on individual users' rights.

Microsoft Zero Trust Principles

Verify explicitly. Always authenticate and authorize based on all available data points, including user identity, location, device health, service or workload, data classification, and anomalies.

Use least privileged access. Limit user access with just-in-time and just-enough-access (JIT/JEA), risk-based adaptive policies, and data protection to help secure both data and productivity.

Assume breach. Minimize blast radius and segment access. Verify end-to-end encryption and use analytics to get visibility, drive threat detection, and improve defense.

Microsoft Data Classification Guidelines

| **Classification | Description |

|---|---|

| Sensitive | Data that is to have the most limited access and requires a high degree of integrity. Typically, it is data that will do the most damage to the organization should it be disclosed. Personal data (including PII) falls into this category and includes any identifier, such as name, identification number, location data, online identifier. It also includes data related to one or more of the following factors specific to the identity of the individual: physical, psychological, genetic, mental, economic, cultural, social. |

| Confidential | Data that might be less restrictive within the company but might cause damage if disclosed. |

| Private | Private data is compartmental data that might not do the company damage. This data must be kept private for other reasons. Human resources data is one example of data that can be classified as private. |

| Proprietary | Proprietary data is data that is disclosed outside the company on a limited basis or contains information that could reduce the company's competitive advantage, such as the technical specifications of a new product. |

| Public | Public data is the least sensitive data used by the company and would cause the least harm if disclosed. This data could be anything from data used for marketing to the number of employees in the company. |

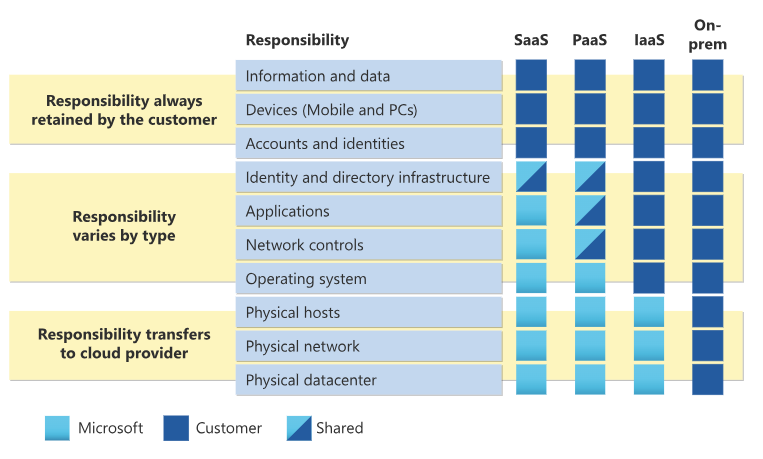

Shared Responsibility Model in the Azure Cloud

For all cloud deployment types, customers own their data and identities. They are responsible for protecting the security of their data and identities, on-premises resources, and the cloud components they control. The customer-controlled cloud components vary by service type.

Regardless of the type of deployment, customer always retains the following responsibilities:

- Data

- Endpoints

- Account

- Access management