Windows Azure Guidance - Background processing I

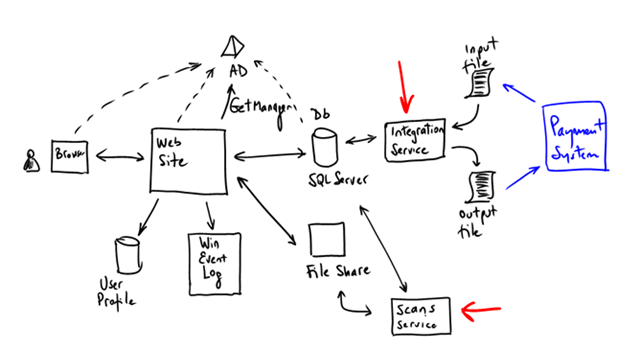

If you recall from my original article on a-Expense, there were 2 background processes:

- Scans Service: a-Expense allows its users to upload scanned documents (e.g. receipts) that are associated with a particular expense. The images are stored in a file share, using a naming convention that uniquely identifies the image and the expense.

- Integration Service: this service runs periodically and generates files that are interfaces to an external system. The generated files contain data about expenses that are ready for reimbursement.

Let’s analyze the first one, the Scans Service, in this article.

The scan service performs 2 tasks:

- It compresses uploaded images so they use less space.

- It generates a thumbnail of the original scan that is displayed on the UI. Thumbnails are lighter weight and are displayed faster. Users can always browse the original if they want to.

When moving this to Windows Azure we had to make some adjustments to the original implementation (which was based on files and a Windows Service).

The first thing to change is the storage. Even though the Windows Azure Drive could be a tempting choice because it is simply an NTFS volume that can be used with regular IO classes, we have opted not to use it. The main reason is that only one instance can write to it at any given point in time. We expect to have multiple web roles deployed, all of them with identical capabilities (for high availability and scalability), so having only one instance able to write is not an option. A better approach is to simply store the images in block blobs.

The natural way of implementing the service itself is by using a worker role. Most of the code of the Windows Service Adatum used before is simply repackaged in a worker role. This works because we assume it is 100% managed code and does nothing that requires administrative access. (Administrative access is one of the other reasons we can’t simply install the Windows Service on an Azure role.)

The second change is related to how the service knows when to actually do some work. There are many ways people have implemented this.

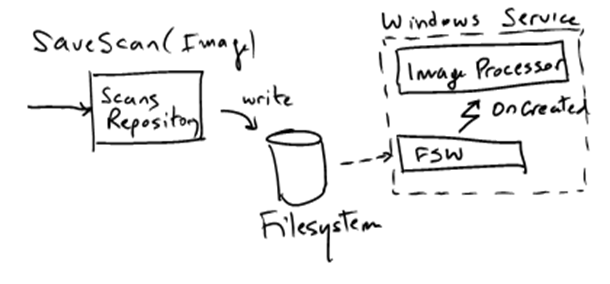

A common way is through a FileSystemWatcher object that raises events when new (image) files are created:

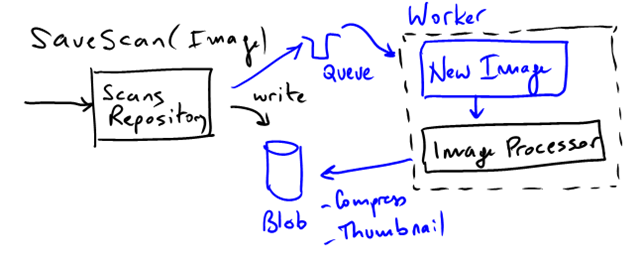

This notification design will have to be changed to use Azure Queues instead:

Every time there’s a new image uploaded in the system and stored to a blob, a message will be written to a queue. The Worker will just pick up new messages and process the image.
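To make the flow concrete, here is a minimal sketch of the upload-then-notify pattern. It is written in Python with in-memory stand-ins rather than the real storage services: the `blobs` dictionary, the `new_scans` queue, the `expense_id/original` naming convention and the placeholder `compress` and `make_thumbnail` functions are all assumptions made for illustration, not a-Expense code.

```python
import json
import queue

# In-memory stand-ins (assumptions): a dict plays the role of blob storage
# and queue.Queue plays the role of an Azure Queue.
blobs = {}
new_scans = queue.Queue()


def upload_scan(expense_id, image_bytes):
    """Web role side: store the scan as a blob, then announce it on the queue."""
    blob_name = f"{expense_id}/original"  # hypothetical naming convention
    blobs[blob_name] = image_bytes
    new_scans.put(json.dumps({"expense_id": expense_id, "blob": blob_name}))
    return blob_name


def compress(image_bytes):
    # Placeholder for real image compression: keeps the first half of the bytes.
    return image_bytes[: max(1, len(image_bytes) // 2)]


def make_thumbnail(image_bytes):
    # Placeholder for real thumbnail generation: keeps the first 4 bytes.
    return image_bytes[:4]


def process_pending():
    """Worker role side: pick up each queued message and process its image."""
    processed = []
    while True:
        try:
            raw = new_scans.get_nowait()
        except queue.Empty:
            break  # nothing left to do for now
        msg = json.loads(raw)
        original = blobs[msg["blob"]]
        blobs[f"{msg['expense_id']}/compressed"] = compress(original)
        blobs[f"{msg['expense_id']}/thumbnail"] = make_thumbnail(original)
        processed.append(msg["expense_id"])
    return processed
```

The point of the sketch is that the web role never calls the worker directly. It only writes a blob and a small message, and the worker decides when to pick the message up.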

The final change has to do with storing the information back to table storage. Once compression is complete and the thumbnails are created, the worker will store references to them in the “expense” entity so it becomes available to the UI.

Some considerations on queues

Notice that processing an event more than once in this system is OK because the operation is idempotent. This is especially important when dealing with Azure Queues, because a message is not guaranteed to be delivered just once. There’s a chance that 2 workers will read the same message, or even that the same worker will pick up the same message more than once, resulting in duplicate processing. In our example, nothing bad would happen: at worst, the image will be compressed twice. We could also add some logic so that nothing is done if the compressed file already exists.
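The "skip if already done" check could look something like the following sketch. As before, this is Python with an in-memory `blobs` dictionary standing in for blob storage; the blob names, the `work_done` list and the placeholder `compress` function are hypothetical and exist only to show the idea.

```python
# In-memory stand-in for blob storage (assumption), pre-loaded with one scan.
blobs = {"exp-1/original": b"0123456789"}
work_done = []  # records every time the compression work actually runs


def compress(data):
    # Placeholder for real compression: keeps the first half of the bytes.
    work_done.append(len(data))
    return data[: len(data) // 2]


def handle_message(expense_id):
    """Process a scan; safe to call more than once for the same message."""
    target = f"{expense_id}/compressed"  # hypothetical naming convention
    if target in blobs:
        return False  # a previous delivery already produced the output
    blobs[target] = compress(blobs[f"{expense_id}/original"])
    return True
```

Calling `handle_message("exp-1")` twice does the compression once; the second call sees the existing output and returns without doing any work. Note that the check only saves effort. Correctness already follows from the operation being idempotent.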

Another common provision is to deal with what’s known as poison messages. Those are essentially messages that generate exceptions or errors and can’t be processed. Azure Queue messages have a specific property (DequeueCount) that tells you how many attempts were made to process a message, so the worker can detect a poison message and handle it.
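One possible way of using a dequeue count is sketched below. It is a Python simulation, not the Azure API: the `Message` class, its `dequeue_count` field (which mirrors the idea behind DequeueCount), the `MAX_ATTEMPTS` threshold of 3 and the decision to set poison messages aside in a list are all assumptions for illustration.

```python
from collections import deque
from dataclasses import dataclass

MAX_ATTEMPTS = 3  # assumed threshold; pick whatever suits the workload


@dataclass
class Message:
    body: str
    dequeue_count: int = 0  # simulated counterpart of DequeueCount


def drain(pending, handler, max_attempts=MAX_ATTEMPTS):
    """Process queued messages, setting aside those that keep failing."""
    poison = []
    while pending:
        msg = pending.popleft()
        msg.dequeue_count += 1  # each pickup counts as one attempt
        if msg.dequeue_count > max_attempts:
            poison.append(msg)  # stop retrying and park it for inspection
            continue
        try:
            handler(msg.body)
        except Exception:
            # Simulates an unprocessed message becoming visible again.
            pending.append(msg)
    return poison
```

With a handler that always fails on a particular message, that message is attempted three times and then lands in the returned `poison` list instead of being retried forever, while healthy messages are processed normally.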