Automatic SMB Scale-Out Rebalancing in Windows Server 2012 R2

Introduction

This blog post focuses on the new SMB Scale-Out Rebalancing introduced in Windows Server 2012 R2. If you haven’t seen it yet, it delivers a new way of balancing file clients accessing a Scale-Out File Server.

In Windows Server 2012, each client would be randomly directed via DNS Round Robin to a node of the cluster and stick with that node for all shares and all traffic going to that Scale-Out File Server. If necessary, some server-side redirection of individual IO requests could happen in order to fulfill the client request.

In Windows Server 2012 R2, a single client might be directed to a different node for each file share. The idea here is that the client will connect to the best node for each individual file share in the Scale-Out File Server Cluster, avoiding any kind of server-side redirection.

Now there are some details about when redirection can happen and when the new behavior will apply. Let’s look into the 3 types of scenarios you might encounter.

Hyper-V over SMB with Windows Server 2012 and a SAN back-end (symmetric)

When we first introduced the SMB Scale-Out File Server in Windows Server 2012, as mentioned in the introduction, the client would be randomly directed to one and only one node for all shares in that cluster.

If the storage is equally accessible from every node (what we call symmetric cluster storage), then you can do reads and writes from every file server cluster node, even if it’s not the owner node for that Cluster Shared Volume (CSV). We refer to this as Direct IO.

However, metadata operations (like creating a new file, renaming a file or locking a byte range on a file) must be orchestrated across the cluster and will be executed on a single node called the coordinator node or the owner node. Any other node will simply redirect these metadata operations to the coordinator node.

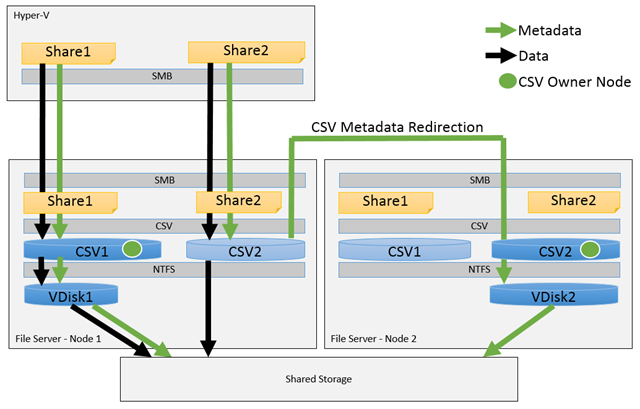

The diagram below illustrates these behaviors:

Figure 1: Windows Server 2012 Scale-Out File Server on symmetric storage

The most common example of symmetric storage is when the Scale-Out File Server is put in front of a SAN. The common setup is to have every file server node connected to the SAN.

Another common example is when the Scale-Out File Server is using a clustered Storage Spaces solution with a shared SAS JBOD using Simple Spaces (no resiliency).

Hyper-V over SMB with Windows Server 2012 and Mirrored Storage Spaces (asymmetric)

When using Mirrored Storage Spaces, the CSV operates in a block-level redirected IO mode. This means that every read and write to the volume must be performed through the coordinator node of that CSV.

This configuration, where not every node has the ability to read/write to the storage, is generically called asymmetric storage. In those cases, every data and metadata request must be redirected to the coordinator node.

In Windows Server 2012, the SMB client chooses one of the nodes of the Scale-Out File Server cluster using DNS Round Robin and that may not necessarily be the coordinator node that owns the CSV that contains the file share it wants to access.

In fact, if using multiple file shares in a well-balanced cluster, it’s likely that the node will own some but not all of the CSVs required.

That means some SMB requests (for data or metadata) are handled by the node and some are redirected via the cluster back-end network to the right owner node. This redirection, commonly referred to as “double-hop”, is a very common occurrence in Windows Server 2012 when using the Scale-Out File Server combined with Mirrored Storage Spaces.

It’s important to mention that this cluster-side redirection is something that is implemented by CSV and it can be very efficient, especially if your cluster network uses RDMA-capable interfaces.

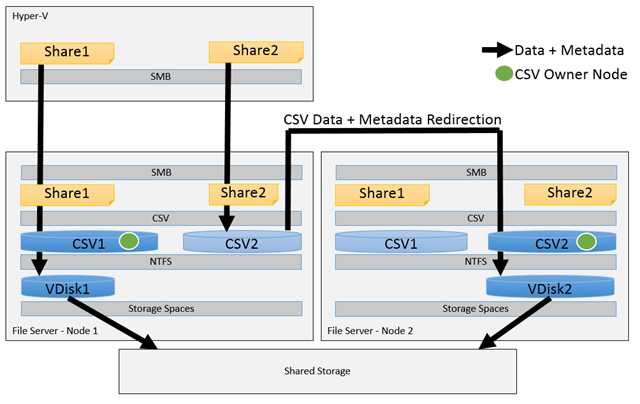

The diagram below illustrates these behaviors:

Figure 2: Windows Server 2012 Scale-Out File Server on asymmetric storage

The most common example of asymmetric storage is when the Scale-Out File Server is using a Clustered Storage Spaces solution with a Shared SAS JBOD using Mirrored Spaces.

Another common example is when only a subset of the file server nodes is directly connected to a portion of the back-end storage, be it Storage Spaces or a SAN.

A possible asymmetric setup would be a 4-node cluster where 2 nodes are connected to one SAN and the other 2 nodes are connected to a different SAN.

Hyper-V over SMB with Windows Server 2012 R2 and Mirrored Storage Spaces (asymmetric)

If you’re following my train of thought here, you probably noticed that the previous configuration has a potential for further optimization and that’s exactly what we did in Windows Server 2012 R2.

In this new release, the SMB client gained the flexibility to connect to different Scale-Out File Server cluster nodes for each independent share that it needs to access.

The SMB server also gained the ability to tell its clients (using the existing Witness protocol) which node is ideal for accessing the storage, in case it happens to be asymmetric.

With the combination of these two behavior changes, a Windows Server 2012 R2 SMB client and server can optimize the traffic, so that no redirection is required even for asymmetric configurations.

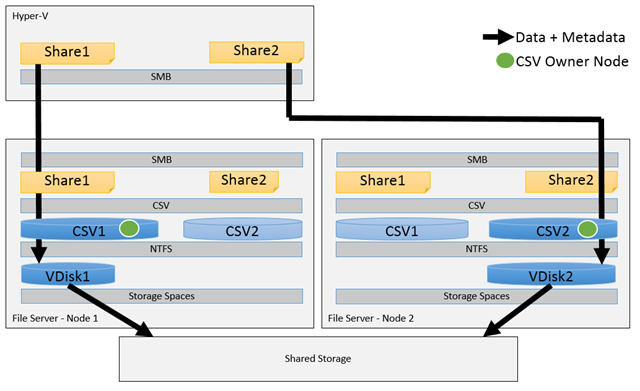

The diagram below illustrates these behaviors:

Figure 3: Windows Server 2012 R2 Scale-Out File Server on asymmetric storage

Note that the SMB client now always talks to the Scale-Out File Server node that is the coordinator of the CSV where the share is.

Note also that CSV ownership is spread across the nodes in the example. That is not a coincidence. The cluster now includes the ability to spread ownership of its CSVs uniformly across the nodes.

If you add or remove nodes or CSVs in the Scale-Out File Server cluster, the CSVs will be rebalanced. The SMB clients will then also be rebalanced to follow the CSV owner nodes for their shares.
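To see this rebalancing on a live cluster, you can compare CSV ownership with the node each client is using. The lines below are a minimal sketch, not an official procedure. They assume a Windows Server 2012 R2 Scale-Out File Server with the FailoverClusters and SMB PowerShell modules available, and the column selections are only one reasonable choice.

```powershell
# On a Scale-Out File Server cluster node:
# list each CSV together with the node that currently owns (coordinates) it.
Get-ClusterSharedVolume | Select-Object Name, OwnerNode, State

# Also on a cluster node: list the SMB clients registered with the Witness
# service and the file server node each one is using for each share.
Get-SmbWitnessClient |
    Select-Object ClientName, ShareName, FileServerNodeName, WitnessNodeName, State

# On the SMB client (for example, a Hyper-V host): list the open connections.
# The Redirected column is assumed to be present, as it is on 2012 R2 clients.
Get-SmbConnection | Select-Object ServerName, ShareName, Dialect, Redirected
```

If rebalancing is working, the FileServerNodeName reported for a share should match the OwnerNode of the CSV that holds that share.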

Key configuration requirements for asymmetric storage in Windows Server 2012 R2

Because of this new automatic rebalancing, there are key new considerations when designing asymmetric storage (Mirrored or Parity Storage Spaces) for Windows Server 2012 R2.

First of all, you should have at least as many CSVs as you have file server cluster nodes. For instance, for a 3-node Scale-Out File Server, you should have at least 3 CSVs. Having 6 CSVs is also a valid configuration, which will help with rebalancing when one of the nodes is down for maintenance.

To be clear, if you have a single CSV in such an asymmetric configuration in a Windows Server 2012 R2 Scale-Out File Server cluster, only one node will be actively accessed by SMB clients.

You should also try, as much as possible, to have your file shares and workloads evenly spread across the multiple CSVs. This way you won’t have some nodes working much harder than others.
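A quick way to check these guidelines is to count CSVs against nodes and see how ownership is distributed. This is a rough sketch that assumes it runs on a cluster node with the FailoverClusters module loaded. The warning text is illustrative and not something the cluster itself reports.

```powershell
# Collect the CSVs and nodes of the local cluster (wrapped in @() so that
# .Count works even when there is only one item).
$csvs  = @(Get-ClusterSharedVolume)
$nodes = @(Get-ClusterNode)

"{0} CSVs across {1} nodes" -f $csvs.Count, $nodes.Count

# Guideline from this post: at least one CSV per file server node.
if ($csvs.Count -lt $nodes.Count) {
    Write-Warning "Fewer CSVs than nodes: some nodes will not be accessed by SMB clients on asymmetric storage."
}

# Show how many CSVs each node currently owns.
$csvs | Group-Object { $_.OwnerNode.Name } | Select-Object Name, Count
```

This only checks CSV counts. Whether shares and workloads are spread evenly across those CSVs still has to be judged from how the shares are actually used.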

Forcing per-share redirection for symmetric storage in Windows Server 2012 R2

The new per-share redirection does not happen by default in the Scale-Out File Server if the back-end storage is found to be symmetric.

For instance, if every node of your file server is connected to a SAN back-end, you will continue to have the behavior described in Figure 1 (Direct IO from every node plus metadata redirection).

The CSVs will automatically be balanced across file server cluster nodes even in symmetric storage configurations. You can turn that behavior off by setting the cluster property shown below, although I'm hard pressed to find any good reason to do it.

(Get-Cluster).CSVBalancer = 0

However, when using symmetric storage, the SMB clients will each continue to connect to a single file server cluster node for all shares. We opted for this behavior by default because Direct IO tends to be efficient in these configurations and the amount of metadata redirection should be fairly small.

You can override this setting and make the symmetric cluster use the same rebalancing behavior as an asymmetric cluster by using the following PowerShell cmdlet:

Set-ItemProperty HKLM:\System\CurrentControlSet\Services\LanmanServer\Parameters -Name AsymmetryMode -Type DWord -Value 2 -Force

You must apply the setting above to every file server cluster node. The new behavior won’t apply to existing client sessions.
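One way to apply the setting to every node in a single pass is sketched below. It assumes PowerShell remoting is enabled on all cluster nodes and that you run it from a machine with the FailoverClusters module. The registry path and value are the ones given above, and the rest is illustrative.

```powershell
# Names of all nodes in the local cluster.
$nodes = (Get-ClusterNode).Name

Invoke-Command -ComputerName $nodes -ScriptBlock {
    $path = 'HKLM:\System\CurrentControlSet\Services\LanmanServer\Parameters'

    # Same setting as in the post, applied on the remote node.
    Set-ItemProperty -Path $path -Name AsymmetryMode -Type DWord -Value 2 -Force

    # Read the value back so every node reports what it now has.
    [pscustomobject]@{
        Node          = $env:COMPUTERNAME
        AsymmetryMode = (Get-ItemProperty -Path $path -Name AsymmetryMode).AsymmetryMode
    }
}
```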

If you switch to this configuration, you must apply the same planning rules outlined previously (at least one CSV per file server node, ideally two).

Conclusion

I hope this clarifies the behavior changes introduced with SMB Scale-Out Automatic Rebalancing in Windows Server 2012 R2.

While most of it is designed to just work, I do get some questions about it from those interested in understanding what happens behind the scenes.

Let me know if you find this useful or if you have any additional questions.

Comments

- Anonymous

October 30, 2013

Thanks for the clarification. Great post

- Anonymous

October 30, 2013

Can I read a step-by-step guide for trying this behavior with Scale-Out File Server / 3 CSV / Windows Server 2012 R2 / Mirrored Storage Spaces / asymmetric? I want to try this behavior in a performance test, and check transparent failover / CPU activity / network activity / storage activity / rebalance activity / backup / restore.

- Anonymous

October 31, 2013

Very useful information. Thanks.

- Anonymous

October 31, 2013

@Yoshihiro All you have to do is configure a two-node Windows Server 2012 R2 file server cluster with 2 mirrored Storage Spaces configured as CSVs, one SMB3 share on each CSV. If a client accesses the two shares, you will experience the behavior described in Figure 3.

I published two step-by-step guides already for Windows Server 2012 R2. Check the other recent blogs here.

- Anonymous

November 03, 2013

José, for demonstration purposes, how can I show which server is accessed when I set up asymmetric storage?

- Anonymous

November 04, 2013

@Rafael You can check which client is going to which node using the SMB Witness cmdlets. We use Witness to redirect clients to the best node in Windows Server 2012 R2, and that infrastructure has been in place since Windows Server 2012.

To view the current state, simply use this cmdlet from the server side: Get-SmbWitnessClient

To check if a connection is being redirected, you can use this cmdlet from the client side: Get-SmbConnection

You can find more details on these and other SMB cmdlets that changed in Windows Server 2012 R2 at blogs.technet.com/.../what-s-new-in-smb-powershell-in-windows-server-2012-r2.aspx

- Anonymous

November 05, 2013

@Jose Thank you for your response. I followed the step-by-step blog posts, read the other posts, and tried IOmeter/SQLIO against it.

Can I know the best-practice Scale-Out File Server configuration for a Hyper-V host cluster and Hyper-V shared-VHDX based guest clusters?

Regards,

- Anonymous

November 05, 2013

Can I read a Windows Server 2012 R2 / Scale-Out File Server / Storage Tier / Mirror version of "Hyper-V over SMB performance considerations"?

REF: Hyper-V over SMB performance considerations: blogs.technet.com/.../hyper-v-over-smb-performance-considerations.aspx

- Anonymous

November 24, 2013

Just to add a bit more detail: currently, it seems like it favors SoFS node A over node B, even if node B is the CSV owner.

I tried a few things. To start, node A owns the CAP role, but node B owns the CSV. Traffic is redirected rather than direct as described here. If I pause node A and fail the role over to node B, then RDMA traffic finally goes directly to node B (I thought it might fail outright if there was some RDMA issue with that node). I then resume node A and move the CSV over to it. Now the traffic goes directly to node A as expected. I pause node B again, failing over the CAP role back to node A. Traffic goes to node A as expected. But if I move the CSV back to node B, traffic still goes to node A and redirects over the CSV network again.

It seems that node B is operating correctly since it is capable of handling the RDMA traffic directly, but for some reason if node A owns the CAP role, the traffic always goes through node A. If node B owns the CAP role, traffic goes to the owner node, whether it's A or B. What could cause this behavior?

- Anonymous

November 24, 2013

@Ryan In the Scale-Out File Server, every node is capable of client access. So the node that owns the "CAP" should not really matter. The clients should only see a list of equally capable nodes. You can test this by using Resolve-DnsName plus the name of the scale-out file server.

From your description, it seems like the Witness Service, for some reason, is not working. You should get something from the Get-SmbWitnessClient cmdlet when you run it on the server and there's a client actively accessing the scale-out file server.

The move to the right node requires the use of the witness service. If that service is down or not working properly, that would explain the behavior you are experiencing (client hits the "wrong" node and does not redirect to the owner/coordinator node).

Can you double check the output of Get-SmbConnection (from the client) and Get-SmbWitnessClient (from the server) while there is ongoing access to the file service?

- Anonymous

November 25, 2013

@Jose: wow! I didn't expect you to respond so soon, especially on a Sunday evening! I was running SQLIO tests and failover scenarios (which are working out brilliantly!) instead of refreshing the page. :) Thank you!!

It looks like I must not have run the Get-SmbWitnessClient command close enough time-wise to file activity. When I run it from the server during a SQLIO test, I'm getting a result (names altered slightly; not sure how this will look when posted!):

| Client Computer Name | Witness Node Name | File Server Node Name | Network Name | Share Name | Client State |
| --- | --- | --- | --- | --- | --- |
| HV1 | SOFS1 | SOFS2 | SOFSCAP | HVCLUSTERWITNESS | RequestedNotific... |
| HV1 | SOFS2 | SOFS1 | SOFSCAP | 8COLUMNTEST | RequestedNotific... |

Here is the output from Get-SmbConnection from the client (again, with some names altered and other classic file shares removed):

| ServerName | ShareName | UserName | Credential | Dialect | NumOpens |
| --- | --- | --- | --- | --- | --- |
| SoFSCAP | 8ColumnTest | DOMAIN\DomainAdminAcct | DOMAIN\DomainAdminAcct | 3.02 | 1 |
| SoFSCAP | HVClusterWitness | DOMAIN\HVClusterAccount | DOMAIN\HVClusterAccount | 3.02 | 2 |

Does it matter that I connected to the SoFS share with a domain admin account (testing SQLIO) rather than via the cluster account?

- Anonymous

November 25, 2013

@Jose: To add a bit more detail, I was running more SQLIO tests today (testing out the WBC), and encountered a different twist on the situation: node A owns the node, yet RDMA traffic is going through node B. If I run Get-SmbMultichannelConnection, it looks like the Hyper-V node only makes a connection to one node at a time in my scenario (names and IPs altered):

| Server Name | Selected | Client IP | Server IP | Client Interface Index | Server Interface Index | Client RSS Capable | Client RDMA Capable |
| --- | --- | --- | --- | --- | --- | --- | --- |
| SoFSCAP | True | IP_SubnetB | NodeB-IP_SubnetB | 13 | 15 | False | True |
| SoFSCAP | True | IP_SubnetA | NodeB-IP_SubnetA | 12 | 13 | False | True |
| SoFSCAP | True | IP_SubnetB | NodeB-IP_SubnetB | 13 | 15 | False | True |
| SoFSCAP | True | IP_SubnetA | NodeB-IP_SubnetA | 12 | 13 | False | True |

Are multiple share connections (one per volume, each owned by a different node) required at any given time in order for SMB to reconnect through the owner node?

- Anonymous

November 27, 2013

So, I built a 2012 R2 SOFS cluster using the following config. Its usage is all IIS virtual and root directory content. We are an ASP.NET shop. We have 3 different web farms, all sitting behind load balancers, and the web servers communicate via UNC paths (also 2012 R2 and IIS 8.5) to the SOFS cluster.

iSCSI MD3220i SAN with 8 Total Direct Attached iSCSI Ports
3 x 2012 R2 Nodes
9 Total CSV Shares

My understanding is SOFS is great for distributing IIS load across multiple active nodes, and thus far the performance has been good. My question is surrounding the settings above. Let's say a user is uploading a file via the website. This user is part of WEB-FARM-B. That particular CSV share is set to reside on NODE 2 by default. Does that mean that if the session happens to land on NODE 1 first, that the SOFS has to actually transfer that data to NODE 2 to actually be written to the SAN? Or does the DATA get written to the SAN by NODE 1, then the metadata is copied over letting the other NODES know it's available? I just want to make sure that the SOFS is optimal for my environment. We have roughly 400 ASP.NET websites that are hosted on this SAN, and it's heavy on the reads, and not so heavy on the writes. We might write a total of 2GB per day and that's it.

I'm fairly new to this technology, so go easy on me :)

- Anonymous

November 03, 2016

@Jose First of all - Great blog! So if I understand correctly, in case of asymmetric storage (such as Spaces), the bandwidth for a share is limited to the bandwidth of one node. If so, then one of the major benefits of SoFS is lost (increased bandwidth), isn't it? Did I understand correctly?

- Anonymous

December 12, 2016

You can still get it if you use multiple volumes and balance them.