Executing Custom Script Extension using Azure CLI 2.0 on an Azure Linux Virtual Machine

Hello Everyone,

This blog post shows an end-to-end scenario for executing a bash script remotely on an Azure Linux VM. I put this together because the individual commands are documented, but I could not find an end-to-end example. Here I show how to obtain the storage account key, how to check whether a container exists and create one if it doesn’t, and how to execute the script securely without public access turned on.

The following steps will guide you through running a bash script with the Custom Script Extension for Linux.

It is assumed that you have Azure CLI 2.0 installed on a management computer, following the official installation steps. All command-line instructions below assume that you are running them in a Linux bash shell; I’m using Bash on Ubuntu on Windows 10 to write this post.
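
Since everything below depends on the az command being available, a script can check for it before doing anything else. This is only a minimal sketch: the helper name require_command is hypothetical and not part of Azure CLI.

```shell
# Hypothetical helper (not part of Azure CLI): fail early if a tool is missing.
require_command() {
    if ! command -v "$1" >/dev/null 2>&1; then
        echo "Required command not found: $1" >&2
        return 1
    fi
}

# Example use at the top of a script:
#   require_command az || exit 1
```

The function only tests that the command can be found on the PATH; it does not verify that you are logged in to Azure.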

Steps

First we need to obtain the storage account key, which is our authentication method for the storage account. We need it to upload the script, and the Custom Script Extension needs it to download the script from that location when it runs inside the virtual machine. The same key is reused later for other operations.

key=$(az storage account keys list --account-name pmcstorage100 --resource-group Azure-Work-Week-RG --query '[0].value' --output tsv)

The script needs to be located in a container within the storage account, so we need to check if a container exists:

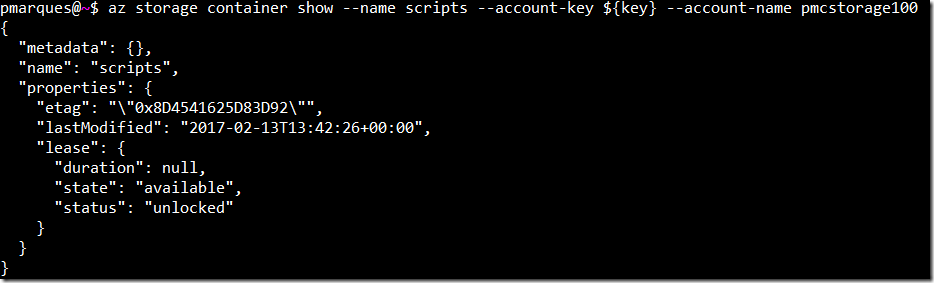

az storage container show --name scripts --account-key ${key} --account-name pmcstorage100

This is the output when the tested container exists:

If the container does not exist, the message “The specified container does not exist” is displayed:

If you need to test this in a script, the following bash snippet shows one way to do it:

# Empty output from the show command means the container was not found
RESULT=$(az storage container show --name scripts1 --account-key ${key} --account-name pmcstorage100)
if [ "$RESULT" == "" ]
then
    echo "Container does not exist"
else
    echo "Container exists"
fi

If the tested container does not exist, the following command line will create it (notice that access is private):

az storage container create --name "scripts01" --account-name pmcstorage100 --account-key ${key} --public-access off

The next step is to upload the file. Notice that we keep reusing the $key variable, which contains the required storage account key:

az storage blob upload --container-name scripts01 --file ./script01.sh --name script01.sh --account-key ${key} --account-name pmcstorage100

Another important step is to build the Protected Settings JSON string, which will be used by the Custom Script Extension to download the file or files from the storage account:

protectedSettings='{"storageAccountName":"pmcstorage100","storageAccountKey":"'${key}'"}'

Finally, we execute the Custom Script Extension, passing several arguments. One of them is the settings JSON string (which can be built in other ways), where we indicate the URL(s) of the file(s) to download and the command to execute. Another is the protected settings string, which contains the storage account key:

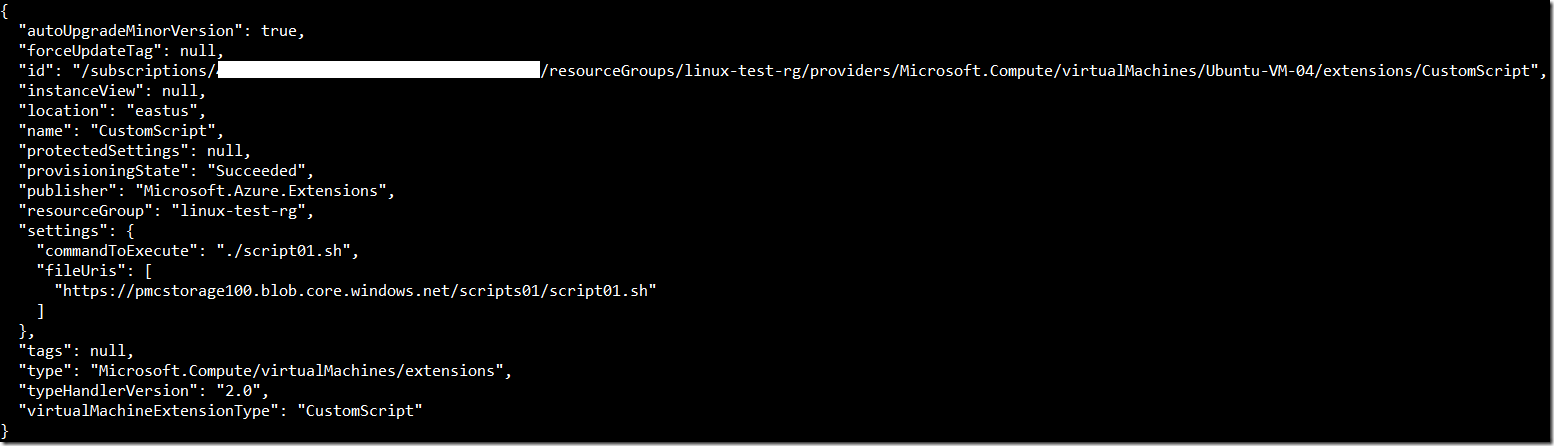

az vm extension set --publisher Microsoft.Azure.Extensions --name CustomScript --version 2.0 --settings '{"fileUris": ["https://pmcstorage100.blob.core.windows.net/scripts01/script01.sh"], "commandToExecute":"./script01.sh"}' --protected-settings "$protectedSettings" --vm-name Ubuntu-VM-04 --resource-group linux-test-rg

Output
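
As a complement to the steps above, the container check and the two JSON strings can be wrapped in small functions. This is a minimal sketch, not the method used in this post: the function names are hypothetical, it uses the az storage container exists command instead of testing for empty output, and it assumes that none of the values contain double quotes or backslashes (which holds for storage account names and base64 keys).

```shell
# Sketch only: the function names are hypothetical.

# Succeeds (exit status 0) only when the container exists.
# "az storage container exists" reports a boolean in its "exists" field.
container_exists() {
    local container="$1" account="$2" key="$3" exists
    exists=$(az storage container exists \
        --name "$container" \
        --account-name "$account" \
        --account-key "$key" \
        --query exists --output tsv) || return 2
    # Accept either capitalisation of the boolean's text form.
    case "$exists" in
        true|True) return 0 ;;
        *)         return 1 ;;
    esac
}

# Builds the protected settings JSON with printf instead of quote splicing.
# Arguments: storage account name, storage account key.
build_protected_settings() {
    printf '{"storageAccountName":"%s","storageAccountKey":"%s"}' "$1" "$2"
}

# Builds the public settings JSON for a single script file.
# Arguments: blob URL of the script, command to execute.
build_settings() {
    printf '{"fileUris":["%s"],"commandToExecute":"%s"}' "$1" "$2"
}

# Example use with the names from this post (left as comments so that
# loading this file does not call Azure):
#   container_exists scripts01 pmcstorage100 "$key" || \
#       az storage container create --name scripts01 \
#           --account-name pmcstorage100 --account-key "$key" --public-access off
#   protectedSettings=$(build_protected_settings pmcstorage100 "$key")
```

Quoting "$key" and "$protectedSettings" when they are passed to az keeps each value as a single argument even if it ever contains characters the shell would otherwise split on.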

Side note

If, like me, you are just learning how to work with Linux and Bash, here is an example of how to obtain a shared access signature token:

# Getting next day date and time in UTC for expiry date of the SAS Token

dateVar=$(TZ=UTC date +"%Y-%m-%dT%H:%MZ" -d "+1 days")

# Obtaining the SAS token

sas=$(az storage container generate-sas --name scripts --expiry ${dateVar} --permissions rl --account-name pmcstorage100 --account-key ${key} --output tsv)

This is not directly related to what we did in this post, but it may be useful one day if you need to generate ad hoc SAS tokens. For more information about these tokens, please refer to Using Shared Access Signatures (SAS).
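
To show where such a token ends up, here is a small sketch that appends it to a blob URL as the query string, which is how SAS URLs are formed. The function name blob_url_with_sas is hypothetical, and the blob name in the usage comment simply reuses script01.sh from earlier in this post.

```shell
# Hypothetical helper: builds a blob URL that carries a SAS token.
# Arguments: storage account, container, blob name, SAS token.
blob_url_with_sas() {
    local account="$1" container="$2" blob="$3"
    # Drop a leading "?" in case the token was copied with one.
    local sas="${4#\?}"
    printf 'https://%s.blob.core.windows.net/%s/%s?%s\n' \
        "$account" "$container" "$blob" "$sas"
}

# Example use with the token generated above:
#   url=$(blob_url_with_sas pmcstorage100 scripts script01.sh "$sas")
```

The resulting URL only works until the expiry time that was set when the token was generated, and only for the permissions granted (read and list in the example above).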

That is it for this post. Thanks for stopping by.

Regards

Paulo

Comments

- Anonymous

April 18, 2017

I was just wondering if you could just reload the script. Let’s say my extension is already installed, and then I change my script and upload it back to the container. How can I make sure the VM reloads the script?

- Anonymous

June 09, 2017

Great post, thanks for sharing!
