Are Your ETL Batch Windows Full? Real Time Operational Analytics Can Change The Landscape!

by Graeme Scott – Data Platform Solution Architect.

A colleague and I recently attended a discovery meeting with a utility company in the UK who are experiencing what they claim to be perceived performance limits with their SQL Server based data warehouse solution. Drilling a little deeper into the perceived performance limits their issues appeared to having nothing to do with the SQL Server relation database engine, more the export, transform and load (ETL) process that loads data into their warehouse.

The total duration of the daily ETL process to load data into their data warehouse is currently 8 hours. This ETL duration was described as preventing the company from expanding the data domain within their warehouse, thus a perceived limit had been reached.

The utility company was considering upgrading from a previous version of SQL Server to SQL Server 2014. It is not uncommon for organisations to remain at an n-1 position in terms of software releases as it can be perceived as introducing less risk.

It's my view that when going through the pain and risk of a software upgrade, it's always worth getting the best value for the disruption; software upgrades can be disruptive so companies should obviously strive to get the best business benefit for their efforts.

Based on the information gathered in the meeting, we both suggested to the customer that they upgrade their data warehouse to SQL Server 2016. There are numerous new features that could offer benefit to the customer but two things immediately came to mind. Firstly, the SQL Server 2016 relational database engine is driving Azure SQL Database which supports millions of customer databases. From a relational engine perspective, upgrading to SQL 2016 introduces minimal risk.

Secondly, the customer may be able to get both significant and immediate benefit to the long running ETL process by considering the SQL 2016 feature called Real Time Operational Analytics. This is an exciting new capability that allows both transactional and analytic workload to access the same table concurrently with minimal detriment to overall system performance. Real Time Operational Analytics benefits from the maturity of updatable columnstore indexes and in-memory table storage in SQL Server 2016.

In addition to supporting parallelised workload, column based data storage can provide a significant improvement to query performance for typical "fact table" (GROUP BY or column elimination) queries when compared to same queries run against a row-oriented table.

Real Time Operational Analytics can be implemented in three different ways:

- A traditional disk-based table with an updatable non-clustered columnstore index.

- A traditional disk-based table with a filtered updatable non-clustered columnstore index.

- A memory-optimized table with clustered a columnstore index.

A traditional disk-based table with an updatable non-clustered columnstore index – this implementation option uses an updateable columnstore index on a traditional (rowstore) table. The columnstore index maintains a copy of the table data so that the OLTP and analytic workloads can run in parallel against separate copies of the data; this design strategy minimizes the performance impact of these parallelized workloads. The data stored within the columnstore index is automatically maintained by SQL Server to accurately reflect the OLTP data changes applied to the traditional rowstore table. No filtering is applied to the non-clustered columnstore index definition with this particular implementation option.

A traditional disk-based table with a filtered updatable non-clustered columnstore index - this second approach is a further enhancement to the first implementation option. This implementation option provides more fine grain control as to when data that is modified is fully transitions to the columnstore index from the rowstore table. It may be appropriate to only store data within the columnstore index when the data is in a specific state or is less likely to change; an example of this being an online purchase that has shipped and this state can be expressed in the transaction row. A filter can be applied to the columnstore index to express this condition.

A second form of data filtering is known as compression delay specification. When a non-clustered columnstore index is created a compression delay interval may be specified. This in simple terms means when a row is modified, allow a defined amount of time to elapse before the row is loaded from a holding area (deltastore) within the index to a fully compressed portion of the columnstore index (rowgroup). There may be no obvious column (or columns) on a row that can be used to determine if a transaction row is in a specific state, however it may be known that a transaction row will reach a specific state (non-changing) after a finite period of time.

These filtering options allow for fine grain control of the non-clustered columnstore index supporting Real Time Operational Analytics with an objective of maintaining the OTLP workload performance.

A memory-optimized table with a clustered columnstore index – a memory-optimized table can be configured for Real Time Operational Analytics with the inclusion of a clustered columnstore index. Only a clustered columnstore index can be added to a memory-optimized table, this is necessary as all the table columns for this class of table are required within the index. With a clustered columnstore index on a memory-optimized table, there is no explicit deltastore rowgroup. New rows that are added to a memory-optimized table containing a clustered columnstore index are loaded directly into the table. The new rows that are added to the table are tracked and are known as being stored within the "Tail" portion of the table (the "Tail" portion of the table can be considered a virtual deltastore). Rows within the "Tail" portion of the table are only compressed within the index when the "Tail" contains circa 1 million rows.

The objective is to keep the rows that are being modified within the "Tail" portion of the memory-optimized table. It's possible to specify the compression delay interval when creating the clustered columnstore index so that rows are compressed and moved from the "Tail" to the index once a finite amount of time has elapsed. It's not possible to express a filter expression when creating a clustered columnstore index on a memory-optimized table of the form WHERE OrderState = 'Shipped'.

Columnstore Index Concepts

The previous section discussing the Real Time Operational Analytics implementation options has touched upon some columnstore index concepts which may need explanation.

So what actually is a columnstore index? A columnstore index is a technology for storing, retrieving and managing data by using a columnar data format referred to as columnstore. A traditional table will store all columns together as a row within established storage structures (pages and extents). A columnstore index groups and stores the data for individual columns in column-wise storage structure.

The terms "non-clustered columnstore" and "the storage of data" associated with this type of index may sound a little odd when compared to classic b-tree non-clustered indexes (not considering the INCLUDE clause). Firstly, data that is stored within a columnstore index is optimized for access using structure meta data in the form of dictionaries and is highly compressed.

To expand on this explanation, I'd like to introduce a few more columnstore index concepts:

Rowgroups – These are storage structures used by the columnstore index that effectively store batches of source data rows. By default, batches of approximately 1 million source data rows are stored within rowgroups. Think of this simply as horizontally slicing the source data into batches of 1 million rows. Each rowgroup maintains statistics about the ranges of data values that have been loaded into the rowgroup.

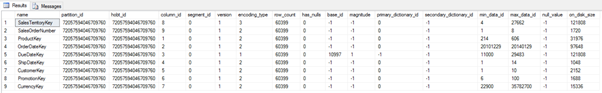

Column Segments – For a single batch of 1 million source rows used to populate a rowgroup, the data from the columns within the batch are stored within individual storage units called column segments (column-wise storage). There is a column segment storage structure for each column that makes up the definition of the columnstore index. Depending on the columns specified in the index definition (non-clustered), the index may also include columns from a b-tree clustered index definition if the index exists (for example a primary key). Column segments are stored within rowgroups.

Deltastore – This is a rowgroup within the columnstore index that holds rows from the base rowstore table that have been subject to DML operations (INSERT, UPDATE, DELETE). This rowgroup allows the columnstore index to accurately reflect the changing state of the base rowstore table.

So back to the utility customer and their challenge of an ETL process that currently takes 8 hours. For this customer to explore this feature they would have to host detailed transactional data within a SQL 2016 instance or possibly use an operational data store (ODS); this would possibly mean some ETL process refactoring however, by leveraging non-clustered column store indexes on the main transaction tables, it may be possible to eliminate a significant portion of the ETL processing and therefore reduce the overall ETL processing time. As the name of this new SQL Server 2016 feature would suggest, the customer could start performing real time analytics on transactional data, allowing the customer to gain richer data insight.

For this utility customer I would suggest that they initially explore using Real Time Operational Analytics using the following configuration option:

- Traditional disk-based table(s) with an updatable non-clustered columnstore index.

This is potentially the quickest and simplest configuration option for Real Time Operational Analytics. Adopting this approach will allow the customer to get quickly up to speed with the new capability and allow them to assess both the business benefit of faster data insight as well as consider the elements of the existing ETL process that may potentially be made obsolete. If the customer has a deeper understanding of the OTLP workload and can determine when transaction rows are in a consistent or stable state; then as a secondary exercise the columnstore indexes could be dropped and recreated with filters. This would be a useful exercise to see if this second iteration of columnstore indexes increases OLTP workload throughput compared to the first set of columnstore indexes.

I'm aware there are many factors that can cause an ETL process to have a significant duration, some examples include complex business logic required for data enrichment, layered data mappings and expensive lookups, performance of third party data sources and APIs, data conformity in terms of business transactions (transaction data only being complete and consistent after a specific point in time within the business day), data quality and sub-optimal infrastructure.

That said, the adoption of Real Time Operational Analytics to potentially replace elements of the current ETL process may help this customer reduce their overall ETL processing time and provide new forms of data insight. This approach would allow the customer to both expand the data domain within their data warehouse, provide real time access to data and provide a SQL Server platform to support re-architecting the current long running ETL solution.

These observations are not suggesting that customers in general should dispense with the use of established data warehouse design best practices however for analytic workloads, Real Time Operational Analytics provides an exciting proposition.

More details about SQL Server 2016 Real Time Operational Analytics and columnstore indexes can be found here:

SQL Server 2016 Operational Analytics:

https://channel9.msdn.com/Shows/Data-Exposed/SQL-Server-2016-Operational-Analytics

Getting Started with Real Time Operational Analytics:

https://msdn.microsoft.com/en-gb/library/dn817827.aspx

Columnstore Indexes Versioned Feature Summary

https://msdn.microsoft.com/en-us/library/dn934994.aspx

Columnstore Indexes for Data Warehousing

https://msdn.microsoft.com/en-gb/library/dn913734.aspx

The following white paper contains a high level comparison of in-memory tables and columnstore indexes:

A high level overview of columnstore indexes, rowgroups and column segments can be found here:

https://msdn.microsoft.com/en-gb/library/gg492088.aspx

Script to create a non-clustered columnstore index on a traditional rowstore table:

The following script can be used with the AdventureWorksDW2014 sample database.

USE [AdventureWorksDW2014]

GO

-- How many rows are in the sample fact table? Circa 60K.

SELECT

COUNT(*)

FROM dbo.FactInternetSales;

-- Create the non-clustered columnstore index

CREATE

NONCLUSTERED

COLUMNSTORE

INDEX "NCCI_OperationalAnalytics"

ON dbo.FactInternetSales

(ProductKey, OrderDateKey, CustomerKey, PromotionKey, SalesTerritoryKey, CurrencyKey, SalesAmount);

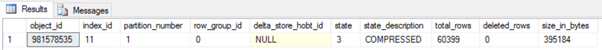

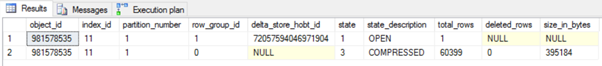

-- Get some statistics - 1 rowgroup as there are circa 60K rows, no DELTASTORE group is currently open

SELECT

*

FROM

sys.column_store_row_groups

-- Information on the column segments: SELECT c.name

,css.* FROM

sys.column_store_segments css INNER

JOIN

sys.columns c

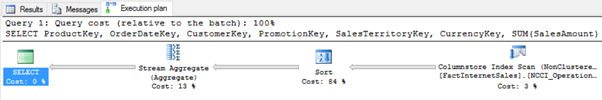

-- SELECT with a GROUP BY (include the actual execution plan) SELECT ProductKey, OrderDateKey, CustomerKey, PromotionKey, SalesTerritoryKey, CurrencyKey,

SUM(SalesAmount)

AS SalesAmount FROM dbo.FactInternetSales

GROUP

BY ProductKey, OrderDateKey, CustomerKey, PromotionKey, SalesTerritoryKey, CurrencyKey

ORDER

BY OrderDateKey DESC

-- Add a row to the rowstore table:

INSERT

INTO dbo.FactInternetSales

SELECT [ProductKey]

,[OrderDateKey]+1

,[DueDateKey]+1

,[ShipDateKey]+1

,[CustomerKey]

,[PromotionKey]

,[CurrencyKey]

,[SalesTerritoryKey]

,[SalesOrderNumber]

,[SalesOrderLineNumber]+2

,[RevisionNumber]

,[OrderQuantity]

,[UnitPrice]

,[ExtendedAmount]

,[UnitPriceDiscountPct]

,[DiscountAmount]

,[ProductStandardCost]

,[TotalProductCost]

,222.22

,[TaxAmt]

,[Freight]

,[CarrierTrackingNumber]

,[CustomerPONumber]

,DATEADD(DAY, 1, [OrderDate])

,DATEADD(DAY, 1, [DueDate])

,DATEADD(DAY, 1, [ShipDate])

FROM [dbo].[FactInternetSales]

WHERE ProductKey=214 AND OrderDateKey=20140128 AND CustomerKey=11794 AND PromotionKey=1 AND SalesTerritoryKey=4 AND CurrencyKey=100

-- Check the rowgroups: A deltastore is now open and the index data reflects the rowstore table changes. SELECT

*

FROM

sys.column_store_row_groups

Additional code snippet below:

CREATE

TABLE t_account

(

accountkey int

NOT

NULL,

Accountdescription nvarchar (50),

accounttype nvarchar(50),

unitsold int,

CONSTRAINT uniq_account UNIQUE (AccountKey) );

CREATE

NONCLUSTERED

INDEX NCI_AT ON t_account

(accounttype);

--Store the table as a columnstore.

--The unique constraint is preserved as a nonclustered index on the columnstore table.

CREATE

CLUSTERED

COLUMNSTORE

INDEX t_account_cci ON t_account;

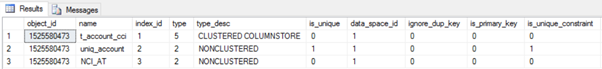

The system table sys.indexes can be queried to obtain data about the non-clustered and clustered Columnstore indexes:

SELECT

*

FROM

sys.indexes

WHERE

object_id

=

OBJECT_ID('dbo.t_account');