Deploy a professional voice model as an endpoint

After you've successfully created and trained your voice model, deploy it to a custom neural voice endpoint.

Note

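You can create up to 50 endpoints with a standard (S0) Speech resource, each with its own custom neural voice.

To use your custom neural voice, you must specify the voice model name, use the custom URI directly in an HTTP request, and use the same Speech resource to pass through the authentication of the text to speech service.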

Add a deployment endpoint

To create a custom neural voice endpoint:

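1. Sign in to Speech Studio.

2. Select [Custom Voice] > [your project name] > [Deploy model] > [Deploy model].

3. Select the voice model that you want to associate with this endpoint.

4. Enter a [Name] and [Description] for your custom endpoint.

5. Select the endpoint type that fits your scenario. If your resource is in a supported region, the default endpoint type is High performance. If your resource is in an unsupported region, the only available option is [Fast resume].

   - High performance: Optimized for scenarios with real-time, high-volume synthesis requests, such as conversational AI and call-center bots. Deploying or resuming an endpoint takes about 5 minutes. For the regions where the High performance endpoint type is supported, see the footnotes in the regions table.

   - Fast resume: Optimized for audio content creation scenarios with less frequent synthesis requests. You can deploy or resume an endpoint quickly and easily, in under a minute. The Fast resume endpoint type is supported in all regions where text to speech is available.

6. Select [Deploy] to create your endpoint.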

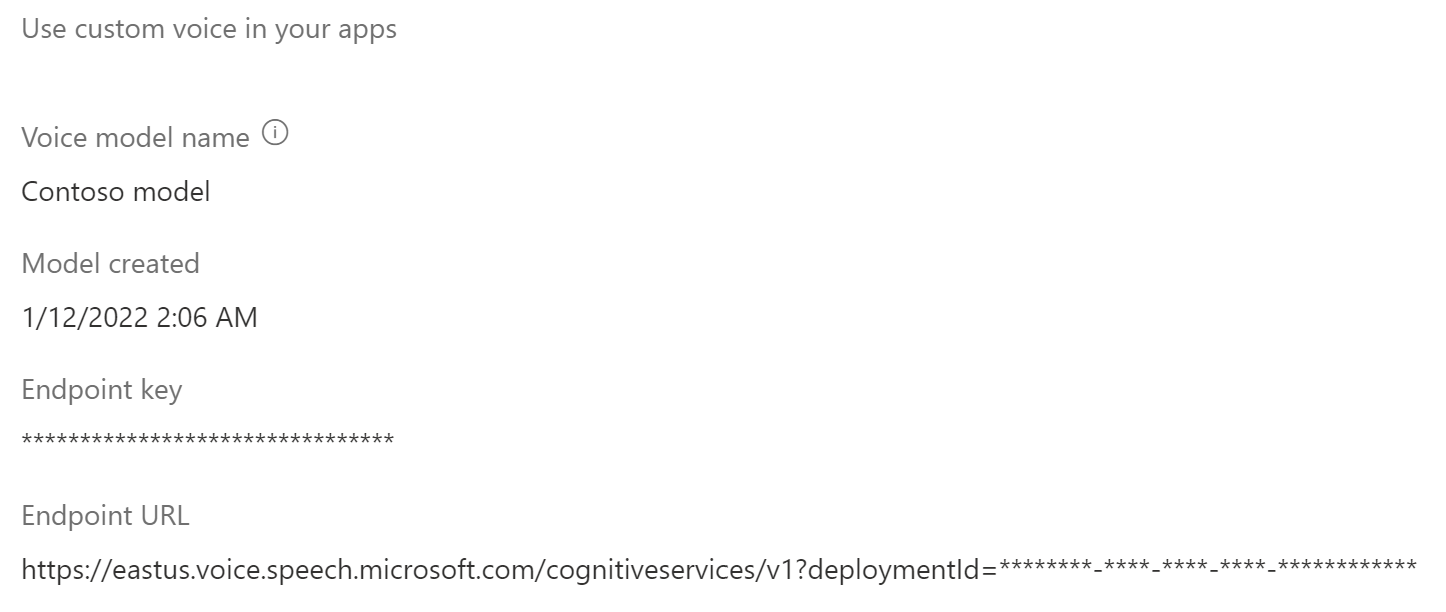

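After your endpoint is deployed, the endpoint name appears as a link. Select the link to display information specific to your endpoint, such as the endpoint key, endpoint URL, and sample code. When the deployment status is [Succeeded], the endpoint is ready for use.

Application settings

The application settings that you use as REST API request parameters are available on the [Deploy model] tab in Speech Studio.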

- [Endpoint key] shows the Speech resource key that the endpoint is associated with. Use the endpoint key as the value of the Ocp-Apim-Subscription-Key request header.

- [Endpoint URL] shows your service region. Use the value that precedes voice.speech.microsoft.com as the service region request parameter. For example, use eastus if the endpoint URL is https://eastus.voice.speech.microsoft.com/cognitiveservices/v1.

- [Endpoint URL] also shows your endpoint ID. Use the value appended to the ?deploymentId= query parameter as the value of the endpoint ID request parameter.
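As a rough illustration of the settings above, the following Python sketch splits an Endpoint URL into the service region and the endpoint ID. It assumes the URL has the https://<region>.voice.speech.microsoft.com/...?deploymentId=<id> shape described above; parse_endpoint_url is a hypothetical helper, not part of any Azure SDK, and the all-zero GUID is only a placeholder.

```python
from urllib.parse import parse_qs, urlparse

VOICE_HOST_SUFFIX = ".voice.speech.microsoft.com"


def parse_endpoint_url(endpoint_url: str) -> tuple[str, str]:
    """Return (service_region, endpoint_id) from a custom voice Endpoint URL.

    Hypothetical helper; assumes the URL shape shown in Speech Studio.
    """
    parsed = urlparse(endpoint_url)
    host = parsed.hostname or ""
    if not host.endswith(VOICE_HOST_SUFFIX):
        raise ValueError(f"unexpected host: {host!r}")
    # The service region is the part of the host name before voice.speech.microsoft.com.
    region = host[: -len(VOICE_HOST_SUFFIX)]
    # The endpoint ID is the value of the deploymentId query parameter.
    ids = parse_qs(parsed.query).get("deploymentId")
    if not ids:
        raise ValueError("the URL has no deploymentId query parameter")
    return region, ids[0]


if __name__ == "__main__":
    url = (
        "https://eastus.voice.speech.microsoft.com/cognitiveservices/v1"
        "?deploymentId=00000000-0000-0000-0000-000000000000"
    )
    region, endpoint_id = parse_endpoint_url(url)
    print(region, endpoint_id)
```

The region and ID obtained this way are the values that go into the service region and endpoint ID request parameters; the endpoint key still has to be copied separately.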

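Use your custom voice

The custom endpoint is functionally identical to the standard endpoint used for text to speech requests.

One difference is that you must specify the EndpointId to use your custom voice through the Speech SDK. You can start with the text to speech quickstart and then update the code with the EndpointId and SpeechSynthesisVoiceName. For more information, see "Use a custom endpoint."

To use a custom voice in Speech Synthesis Markup Language (SSML), specify the model name as the voice name. This example uses the YourCustomVoiceName voice.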

```xml
<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">
    <voice name="YourCustomVoiceName">
        This is the text that is spoken.
    </voice>
</speak>
```
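The same SSML can be sent to the custom endpoint directly over HTTP. The Python sketch below only builds the request and does not send it. It assumes the usual text to speech REST conventions (a POST to /cognitiveservices/v1 with the deploymentId query parameter, Content-Type application/ssml+xml, and an X-Microsoft-OutputFormat header); build_ssml and build_tts_request are hypothetical helper names, and riff-24khz-16bit-mono-pcm is just one example output format.

```python
import urllib.request
from xml.sax.saxutils import escape, quoteattr


def build_ssml(voice_name: str, text: str, lang: str = "en-US") -> str:
    """Wrap text in SSML that selects the custom voice by its model name."""
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>"
        # escape() protects characters such as & and < inside the spoken text.
        f"<voice name={quoteattr(voice_name)}>{escape(text)}</voice>"
        "</speak>"
    )


def build_tts_request(
    region: str,
    endpoint_id: str,
    resource_key: str,
    ssml: str,
    output_format: str = "riff-24khz-16bit-mono-pcm",  # example format (assumption)
) -> urllib.request.Request:
    """Build (but do not send) a synthesis request for a custom endpoint."""
    url = (
        f"https://{region}.voice.speech.microsoft.com/cognitiveservices/v1"
        f"?deploymentId={endpoint_id}"
    )
    headers = {
        # The key of the same Speech resource that the endpoint belongs to.
        "Ocp-Apim-Subscription-Key": resource_key,
        "Content-Type": "application/ssml+xml",
        "X-Microsoft-OutputFormat": output_format,
    }
    return urllib.request.Request(
        url, data=ssml.encode("utf-8"), headers=headers, method="POST"
    )


if __name__ == "__main__":
    ssml = build_ssml("YourCustomVoiceName", "This is the text that is spoken.")
    request = build_tts_request("eastus", "YourEndpointId", "YourResourceKey", ssml)
    # Sending it would return the audio bytes:
    # with urllib.request.urlopen(request) as response:
    #     audio = response.read()
    print(request.full_url)
```

Building the SSML with escaping, rather than by plain string concatenation, keeps user-supplied text from breaking the markup.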

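Switch to a new voice model in your product

After you update your voice model to the latest engine version, or if you want to switch to a new voice in your product, you must redeploy the new voice model to a new endpoint. Redeploying a new voice model to an existing endpoint isn't supported. After deployment, switch your traffic to the newly created endpoint. We recommend that you first route traffic to the new endpoint in a test environment and confirm that it works correctly, and then route traffic to the new endpoint in your production environment. Keep the old endpoint during the transition: if the new endpoint has problems, you can switch back to the old one. After traffic has run correctly on the new endpoint for about 24 hours (the recommended period), you can delete the old endpoint.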
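During the transition, client code can keep the old endpoint as a fallback. The following Python sketch is one possible client-side pattern, not a Speech SDK feature: VoiceTarget, synthesize_with_fallback, and the injected synthesize callable are hypothetical names. Each target carries its own voice name, because the voice name used in SSML may differ between the old and new models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class VoiceTarget:
    endpoint_id: str  # deployment ID of the endpoint
    voice_name: str   # voice name to put in SSML for this endpoint's model


def synthesize_with_fallback(
    text: str,
    new: VoiceTarget,
    old: VoiceTarget,
    synthesize: Callable[[VoiceTarget, str], bytes],
) -> tuple[VoiceTarget, bytes]:
    """Try the new endpoint first and fall back to the old one if it fails.

    Returns the target that actually produced the audio, plus the audio bytes.
    """
    try:
        return new, synthesize(new, text)
    except Exception:
        # The old endpoint is kept during the transition precisely so that
        # traffic can be switched back to it when the new one misbehaves.
        return old, synthesize(old, text)
```

In a real client, synthesize would call the custom endpoint and raise on an HTTP error. Once the new endpoint has run cleanly for about 24 hours, the fallback path and the old endpoint can be removed.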

Note

If the voice name changes and you use Speech Synthesis Markup Language (SSML), you must use the new voice name in your SSML.

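Suspend and resume an endpoint

You can suspend and resume an endpoint to limit spend and conserve resources that aren't in use. You aren't charged while the endpoint is suspended. When you resume an endpoint, your application can continue to use the same endpoint URL to synthesize speech.

Note

The suspend operation completes almost immediately. The resume operation takes about as long as a new deployment.

This section describes how to suspend or resume a custom neural voice endpoint in the Speech Studio portal.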

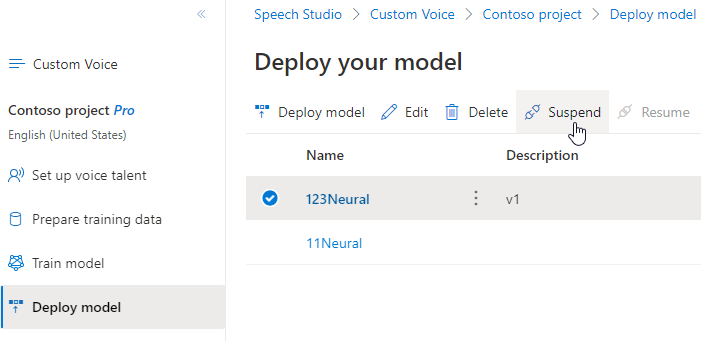

Suspend an endpoint

To suspend and deactivate your endpoint, select [Suspend] on the [Deploy model] tab in Speech Studio.

In the dialog box that appears, select [Submit]. After the endpoint is suspended, Speech Studio shows the [Successfully suspended endpoint] notification.

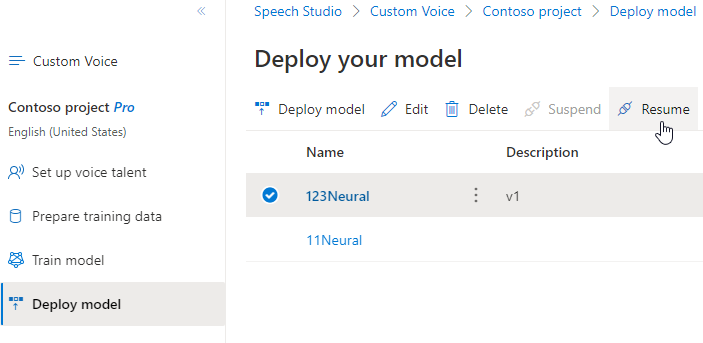

Resume an endpoint

To resume and activate your endpoint, select [Resume] on the [Deploy model] tab in Speech Studio.

In the dialog box that appears, select [Submit]. After you successfully reactivate your endpoint, the status changes from [Suspended] to [Succeeded].

Deploy and manage endpoints with the Custom Voice API

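After you've successfully created and trained your voice model, deploy it to a custom neural voice endpoint.

Note

You can create up to 50 endpoints with a standard (S0) Speech resource, each with its own custom neural voice.

Add a deployment endpoint

To create an endpoint, use the Endpoints_Create operation of the Custom Voice API. Construct the request body as follows: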

- Set the required projectId property. See the article about creating a project.

- Set the required modelId property. See the article about training a voice model.

- Set the required description property. You can change the description later.

Make an HTTP PUT request using the URI shown in the following Endpoints_Create example.

Replace YourResourceKey with your Speech resource key, and replace YourResourceRegion with your Speech resource region. Replace EndpointId with an endpoint ID of your choice. The ID must be a GUID and must be unique within your Speech resource. The ID is used in the endpoint's URI and can't be changed later.

```bash
curl -v -X PUT -H "Ocp-Apim-Subscription-Key: YourResourceKey" -H "Content-Type: application/json" -d '{
  "description": "Endpoint for Jessica voice",
  "projectId": "ProjectId",
  "modelId": "JessicaModelId"
}' "https://YourResourceRegion.api.cognitive.microsoft.com/customvoice/endpoints/EndpointId?api-version=2023-12-01-preview"
```
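For comparison, the following Python sketch builds the same Endpoints_Create request without sending it. It generates a random GUID with uuid.uuid4() when no endpoint ID is supplied, checks that the three required properties are non-empty, and reuses the api-version from the curl example; build_create_endpoint_request is a hypothetical helper name.

```python
import json
import urllib.request
import uuid
from typing import Optional

API_VERSION = "2023-12-01-preview"  # same version as in the curl example


def build_create_endpoint_request(
    region: str,
    resource_key: str,
    project_id: str,
    model_id: str,
    description: str,
    endpoint_id: Optional[str] = None,
) -> tuple[str, urllib.request.Request]:
    """Return (endpoint_id, PUT request) for the Endpoints_Create operation."""
    body = {"description": description, "projectId": project_id, "modelId": model_id}
    missing = [name for name, value in body.items() if not value]
    if missing:
        raise ValueError(f"required properties are empty: {missing}")
    # The endpoint ID must be a GUID: generate one, or validate and
    # normalize (lowercase) the one supplied by the caller.
    endpoint_id = str(uuid.UUID(endpoint_id)) if endpoint_id else str(uuid.uuid4())
    url = (
        f"https://{region}.api.cognitive.microsoft.com/customvoice/endpoints/"
        f"{endpoint_id}?api-version={API_VERSION}"
    )
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": resource_key,
            "Content-Type": "application/json",
        },
        method="PUT",
    )
    return endpoint_id, request
```

Keep the returned endpoint ID: it is the value you substitute for YourEndpointId when you later suspend, resume, or delete the endpoint.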

You should receive a response body in the following format:

```json
{
  "id": "9f50c644-2121-40e9-9ea7-544e48bfe3cb",
  "description": "Endpoint for Jessica voice",
  "projectId": "ProjectId",
  "modelId": "JessicaModelId",
  "properties": {
    "kind": "HighPerformance"
  },
  "status": "NotStarted",
  "createdDateTime": "2023-04-01T05:30:00.000Z",
  "lastActionDateTime": "2023-04-02T10:15:30.000Z"
}
```

The response header contains the Operation-Location property. Use this URI to get details about the Endpoints_Create operation. Here's an example of the response header:

```
Operation-Location: https://eastus.api.cognitive.microsoft.com/customvoice/operations/284b7e37-f42d-4054-8fa9-08523c3de345?api-version=2023-12-01-preview
Operation-Id: 284b7e37-f42d-4054-8fa9-08523c3de345
```

Use the Operation-Location URI to track the progress of the create operation. In subsequent API requests to suspend, resume, or delete the endpoint, use the endpoint ID.
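The Operation-Location URI from the response header can be polled until the create operation finishes. The Python sketch below assumes that a GET on that URI returns JSON with a status field whose terminal values are Succeeded and Failed; those field and value names are assumptions, not confirmed by this article. get_operation_status and wait_for_operation are hypothetical helpers, and the status source, sleep, and clock are injectable so the loop can be tested without network access.

```python
import json
import time
import urllib.request
from typing import Callable

# Assumed terminal values of the operation's "status" field.
TERMINAL_STATUSES = {"Succeeded", "Failed"}


def get_operation_status(operation_location: str, resource_key: str) -> str:
    """GET the Operation-Location URI and return its (assumed) status field."""
    request = urllib.request.Request(
        operation_location, headers={"Ocp-Apim-Subscription-Key": resource_key}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["status"]


def wait_for_operation(
    fetch_status: Callable[[], str],
    interval_s: float = 10.0,
    timeout_s: float = 900.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll until the operation reaches a terminal status or the timeout expires."""
    deadline = clock() + timeout_s
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"operation still {status!r} after {timeout_s} s")
        sleep(interval_s)


# Example (not executed here):
# status = wait_for_operation(
#     lambda: get_operation_status(operation_location, "YourResourceKey"))
```

A default timeout of several minutes is a guess based on the roughly 5-minute deployment time mentioned for High performance endpoints; adjust it to your own observations.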

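Use your custom voice

To use your custom neural voice, you must specify the voice model name, use the custom URI directly in an HTTP request, and use the same Speech resource to pass through the authentication of the text to speech service.

The custom endpoint is functionally identical to the standard endpoint used for text to speech requests.

One difference is that you must specify the EndpointId to use your custom voice through the Speech SDK. You can start with the text to speech quickstart and then update the code with the EndpointId and SpeechSynthesisVoiceName. For more information, see "Use a custom endpoint."

To use a custom voice in Speech Synthesis Markup Language (SSML), specify the model name as the voice name. This example uses the YourCustomVoiceName voice.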

```xml
<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">
    <voice name="YourCustomVoiceName">
        This is the text that is spoken.
    </voice>
</speak>
```

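Suspend an endpoint

You can suspend and resume an endpoint to limit spend and conserve resources that aren't in use. You aren't charged while the endpoint is suspended. When you resume an endpoint, your application can continue to use the same endpoint URL to synthesize speech.

To suspend an endpoint, use the Endpoints_Suspend operation of the Custom Voice API.

Make an HTTP POST request using the URI shown in the following Endpoints_Suspend example.

Replace YourResourceKey with your Speech resource key, and replace YourResourceRegion with your Speech resource region. Replace YourEndpointId with the endpoint ID that you received when you created the endpoint.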

```bash
curl -v -X POST "https://YourResourceRegion.api.cognitive.microsoft.com/customvoice/endpoints/YourEndpointId:suspend?api-version=2023-12-01-preview" -H "Ocp-Apim-Subscription-Key: YourResourceKey" -H "content-type: application/json" -H "content-length: 0"
```
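Suspend and resume differ only in the action suffix appended to the endpoint ID (:suspend or :resume), so a single helper can build either request. This Python sketch builds the request without sending it; build_endpoint_action_request is a hypothetical name, and the api-version is the one from the curl example.

```python
import urllib.request

API_VERSION = "2023-12-01-preview"  # same version as in the curl example


def build_endpoint_action_request(
    region: str, resource_key: str, endpoint_id: str, action: str
) -> urllib.request.Request:
    """Build (but do not send) a POST for the :suspend or :resume action."""
    if action not in ("suspend", "resume"):
        raise ValueError(f"unsupported action: {action!r}")
    url = (
        f"https://{region}.api.cognitive.microsoft.com/customvoice/endpoints/"
        f"{endpoint_id}:{action}?api-version={API_VERSION}"
    )
    # Empty body, matching the content-length: 0 header in the curl example.
    return urllib.request.Request(
        url,
        data=b"",
        headers={
            "Ocp-Apim-Subscription-Key": resource_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Restricting action to the two known values catches typos before any request reaches the service.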

You should receive a response body in the following format:

```json
{
  "id": "9f50c644-2121-40e9-9ea7-544e48bfe3cb",
  "description": "Endpoint for Jessica voice",
  "projectId": "ProjectId",
  "modelId": "JessicaModelId",
  "properties": {
    "kind": "HighPerformance"
  },
  "status": "Disabling",
  "createdDateTime": "2023-04-01T05:30:00.000Z",
  "lastActionDateTime": "2023-04-02T10:15:30.000Z"
}
```

Resume an endpoint

To resume an endpoint, use the Endpoints_Resume operation of the Custom Voice API.

Make an HTTP POST request using the URI shown in the following Endpoints_Resume example.

Replace YourResourceKey with your Speech resource key, and replace YourResourceRegion with your Speech resource region. Replace YourEndpointId with the endpoint ID that you received when you created the endpoint.

```bash
curl -v -X POST "https://YourResourceRegion.api.cognitive.microsoft.com/customvoice/endpoints/YourEndpointId:resume?api-version=2023-12-01-preview" -H "Ocp-Apim-Subscription-Key: YourResourceKey" -H "content-type: application/json" -H "content-length: 0"
```

You should receive a response body in the following format:

```json
{
  "id": "9f50c644-2121-40e9-9ea7-544e48bfe3cb",
  "description": "Endpoint for Jessica voice",
  "projectId": "ProjectId",
  "modelId": "JessicaModelId",
  "properties": {
    "kind": "HighPerformance"
  },
  "status": "Running",
  "createdDateTime": "2023-04-01T05:30:00.000Z",
  "lastActionDateTime": "2023-04-02T10:15:30.000Z"
}
```

Delete an endpoint

To delete an endpoint, use the Endpoints_Delete operation of the Custom Voice API.

Make an HTTP DELETE request using the URI shown in the following Endpoints_Delete example.

Replace YourResourceKey with your Speech resource key, and replace YourResourceRegion with your Speech resource region. Replace YourEndpointId with the endpoint ID that you received when you created the endpoint.

```bash
curl -v -X DELETE "https://YourResourceRegion.api.cognitive.microsoft.com/customvoice/endpoints/YourEndpointId?api-version=2023-12-01-preview" -H "Ocp-Apim-Subscription-Key: YourResourceKey"
```

You should receive a response header with status code 204.
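Because a successful delete returns status code 204 with no body, a client can treat any other status as a failure. The Python sketch below is a hypothetical helper (delete_endpoint, with an injectable send function so it can be exercised without network access); note that urllib.request.urlopen already raises HTTPError for 4xx and 5xx responses, so the explicit check only catches unexpected 2xx or 3xx codes.

```python
import urllib.request
from typing import Callable

API_VERSION = "2023-12-01-preview"  # same version as in the curl example


def _send(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    with urllib.request.urlopen(request) as response:
        return response.status


def delete_endpoint(
    region: str,
    resource_key: str,
    endpoint_id: str,
    send: Callable[[urllib.request.Request], int] = _send,
) -> None:
    """Call Endpoints_Delete and raise unless the service answers 204."""
    url = (
        f"https://{region}.api.cognitive.microsoft.com/customvoice/endpoints/"
        f"{endpoint_id}?api-version={API_VERSION}"
    )
    request = urllib.request.Request(
        url, headers={"Ocp-Apim-Subscription-Key": resource_key}, method="DELETE"
    )
    status = send(request)
    if status != 204:
        raise RuntimeError(f"unexpected status {status} when deleting {endpoint_id}")
```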

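Switch to a new voice model in your product

After you update your voice model to the latest engine version, or if you want to switch to a new voice in your product, you must redeploy the new voice model to a new endpoint. Redeploying a new voice model to an existing endpoint isn't supported. After deployment, switch your traffic to the newly created endpoint. We recommend that you first route traffic to the new endpoint in a test environment and confirm that it works correctly, and then route traffic to the new endpoint in your production environment. Keep the old endpoint during the transition: if the new endpoint has problems, you can switch back to the old one. After traffic has run correctly on the new endpoint for about 24 hours (the recommended period), you can delete the old endpoint.

Note

If the voice name changes and you use Speech Synthesis Markup Language (SSML), you must use the new voice name in your SSML.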