Microsoft Cognitive Services Emotion API demo using UWP app in Visual Studio

Microsoft Cognitive Services are a set of APIs, SDKs and services available to developers. With them, developers can make their applications more intelligent and engaging. Microsoft Cognitive Services are an expansion of the Microsoft Azure Machine Learning APIs, and they let developers add rich, intelligent features to their applications, such as emotion and video detection, face detection, vision and speech recognition, and speech and language understanding. In this article I implement a Universal Windows Platform (UWP) application that captures your photo with the webcam and detects the emotions on your face using the Microsoft Emotion API.

What is Emotion API?

The Microsoft Emotion API lets you build more personalized apps with Microsoft's cutting-edge, cloud-based emotion recognition algorithm. The Emotion API takes a facial expression in an image as input and returns, for each face in the image, the confidence across a set of emotions, as well as a bounding box for the face obtained using the Face API. If you have already called the Face API, you can submit the face rectangle as an optional input. The emotions detected are anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise.

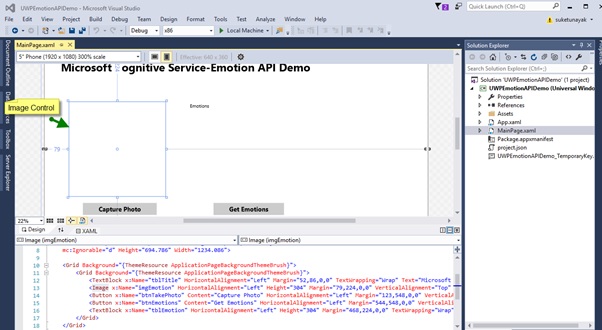
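To make the shape of one result concrete, here is a minimal sketch of a single face's entry as plain C# types: a bounding box plus one confidence value between 0 and 1 per emotion. `FaceBox`, `FaceEmotionResult` and `Dominant` are hypothetical names; the client library used later in this article exposes its own contract types (`Emotion`, `Scores`), so treat this as an illustration of the data, not as the library's API.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the face bounding box returned with each result (pixels).
public sealed class FaceBox
{
    public int Left { get; set; }
    public int Top { get; set; }
    public int Width { get; set; }
    public int Height { get; set; }
}

// Hypothetical stand-in for one face in the response: a bounding box plus
// one confidence value (0 to 1) for each emotion, keyed by emotion name.
public sealed class FaceEmotionResult
{
    public FaceBox FaceRectangle { get; set; } = new FaceBox();
    public Dictionary<string, float> Scores { get; } = new Dictionary<string, float>();

    // Returns the emotion name and confidence with the highest score.
    // Throws InvalidOperationException if Scores is empty.
    public KeyValuePair<string, float> Dominant()
    {
        return Scores.OrderByDescending(s => s.Value).First();
    }
}
```

For example, after filling `Scores` with `happiness = 0.92` and `neutral = 0.07`, `Dominant()` returns the pair (`happiness`, 0.92).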

Open Visual Studio 2015. Go to Start -> New Project -> select Universal -> Blank App (Universal Windows) -> name your app EmotionAPIDemo -> OK.

In Solution Explorer, add the following references to the project:

- Microsoft.ProjectOxford.Emotion

- Newtonsoft.Json

To add the Microsoft.ProjectOxford.Emotion reference, right-click your project in Solution Explorer and select "Manage NuGet Packages". Click Browse, search for Microsoft.ProjectOxford.Emotion and Newtonsoft.Json, and click Install for each package.

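In the MainPage.xaml.cs file, add the following code. It assumes that MainPage.xaml contains an Image named imgEmotion, a TextBlock named tblEmotion, and two Buttons (Capture Photo and Get Emotions) whose Click events are wired to btnTakePhoto_Click and btnEmotions_Click.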

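```csharp
using System;
using System.IO;                    // OpenStreamForReadAsync() extension method
using Windows.Foundation;           // Size
using Windows.Graphics.Imaging;
using Windows.Media.Capture;
using Windows.Storage;
using Windows.Storage.Streams;
using Windows.UI.Xaml;
using Windows.UI.Xaml.Controls;
using Windows.UI.Xaml.Media.Imaging;
using Microsoft.ProjectOxford.Emotion;
using Microsoft.ProjectOxford.Emotion.Contract;

// The namespace must match the project's default namespace (EmotionAPIDemo),
// otherwise this partial class will not combine with MainPage.xaml.
namespace EmotionAPIDemo
{
    public sealed partial class MainPage : Page
    {
        CameraCaptureUI captureUI = new CameraCaptureUI();
        StorageFile photo;

        // Replace with your own Emotion API subscription key.
        const string APIKey = "********EMOTION API KEY****************";
        EmotionServiceClient emotionserviceclient = new EmotionServiceClient(APIKey);
        Emotion[] emotionresult;

        public MainPage()
        {
            this.InitializeComponent();
            // Capture JPEG photos cropped to 200 x 200 pixels.
            captureUI.PhotoSettings.Format = CameraCaptureUIPhotoFormat.Jpeg;
            captureUI.PhotoSettings.CroppedSizeInPixels = new Size(200, 200);
        }

        private async void btnTakePhoto_Click(object sender, RoutedEventArgs e)
        {
            try
            {
                // Opens the built-in camera UI; returns null if the user cancels.
                photo = await captureUI.CaptureFileAsync(CameraCaptureUIMode.Photo);
                if (photo == null)
                {
                    return;
                }

                using (IRandomAccessStream imageStream = await photo.OpenAsync(FileAccessMode.Read))
                {
                    BitmapDecoder decoder = await BitmapDecoder.CreateAsync(imageStream);
                    SoftwareBitmap softwarebitmap = await decoder.GetSoftwareBitmapAsync();

                    // SoftwareBitmapSource only accepts BGRA8 pixels with premultiplied alpha.
                    SoftwareBitmap softwarebitmapBGRA = SoftwareBitmap.Convert(softwarebitmap,
                        BitmapPixelFormat.Bgra8, BitmapAlphaMode.Premultiplied);

                    SoftwareBitmapSource bitmapsource = new SoftwareBitmapSource();
                    await bitmapsource.SetBitmapAsync(softwarebitmapBGRA);
                    imgEmotion.Source = bitmapsource;
                }
            }
            catch (Exception)
            {
                tblEmotion.Text = "Error Capturing Photo";
            }
        }

        private async void btnEmotions_Click(object sender, RoutedEventArgs e)
        {
            if (photo == null)
            {
                tblEmotion.Text = "Please capture a photo first";
                return;
            }

            try
            {
                // Open a fresh stream so the upload starts at the beginning of the file
                // (a stream already read by BitmapDecoder may not be at position 0).
                using (Stream stream = await photo.OpenStreamForReadAsync())
                {
                    emotionresult = await emotionserviceclient.RecognizeAsync(stream);
                }

                // One entry per detected face; an empty array means no face was found.
                if (emotionresult != null && emotionresult.Length > 0)
                {
                    // Scores are confidences between 0 and 1; show them as percentages.
                    Scores score = emotionresult[0].Scores;
                    tblEmotion.Text = "Your Emotions are : \n" +
                        "Happiness: " + (score.Happiness * 100).ToString("F2") + " %\n" +
                        "Sadness: " + (score.Sadness * 100).ToString("F2") + " %\n" +
                        "Surprise: " + (score.Surprise * 100).ToString("F2") + " %\n" +
                        "Neutral: " + (score.Neutral * 100).ToString("F2") + " %\n" +
                        "Anger: " + (score.Anger * 100).ToString("F2") + " %\n" +
                        "Contempt: " + (score.Contempt * 100).ToString("F2") + " %\n" +
                        "Disgust: " + (score.Disgust * 100).ToString("F2") + " %\n" +
                        "Fear: " + (score.Fear * 100).ToString("F2") + " %\n";
                }
                else
                {
                    tblEmotion.Text = "No face detected in the photo";
                }
            }
            catch (Exception)
            {
                tblEmotion.Text = "Error Returning the Emotions from API";
            }
        }
    }
}
```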
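The code above builds the result text by hand, in a fixed order, multiplying each score by 100. If you would rather list the emotions from most to least likely, a small helper like the following is one option. It is a minimal sketch: `EmotionText` and `FormatRanked` are hypothetical names, and the dictionary is something you would fill yourself from the `Scores` properties (for example `{ "Happiness", score.Happiness }`).

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Text;

public static class EmotionText
{
    // Formats confidence scores (0 to 1) as percentages with two decimals,
    // highest score first, one "name: value %" line per emotion.
    public static string FormatRanked(IDictionary<string, float> scores)
    {
        var sb = new StringBuilder();
        foreach (var kv in scores.OrderByDescending(s => s.Value))
        {
            double percent = kv.Value * 100.0;
            sb.Append(kv.Key)
              .Append(": ")
              // Invariant culture keeps "." as the decimal separator on every machine.
              .Append(percent.ToString("F2", CultureInfo.InvariantCulture))
              .Append(" %\n");
        }
        return sb.ToString();
    }
}
```

Ranking by value makes the dominant emotion the first line, which is usually what a user looks for; the fixed order in the page code is easier to compare across photos.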

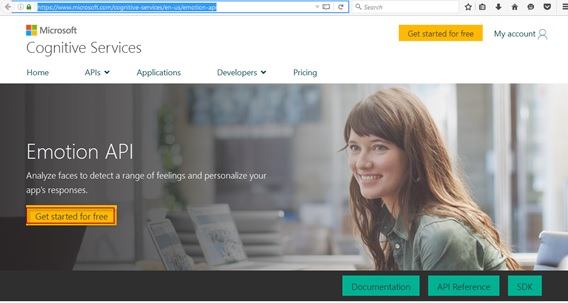

A free Emotion API key is available at (https://www.microsoft.com/cognitive-services/en-us/emotion-api), and a key can also be obtained from the Azure Portal. Paste it into the APIKey constant in MainPage.xaml.cs:
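EmotionServiceClient hides the HTTP call that uses this key. As a rough sketch of what such a call looks like, the following builds (but does not send) the POST request with plain `HttpClient` types. The endpoint URL, the `westus` region prefix and the optional `faceRectangles` query parameter (the "face rectangle as an optional input" mentioned earlier) are assumptions to check against your Azure resource; `FaceRect` and `EmotionRequest` are hypothetical names.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper type: a face rectangle in pixels, e.g. from an earlier Face API call.
public sealed class FaceRect
{
    public FaceRect(int left, int top, int width, int height)
    {
        Left = left; Top = top; Width = width; Height = height;
    }

    public int Left { get; }
    public int Top { get; }
    public int Width { get; }
    public int Height { get; }
}

public static class EmotionRequest
{
    // Assumed endpoint: the region prefix must match the region of your key.
    public const string DefaultEndpoint =
        "https://westus.api.cognitive.microsoft.com/emotion/v1.0/recognize";

    // Builds, but does not send, a POST request that uploads the raw image bytes.
    // Optional rectangles go into an assumed faceRectangles query parameter as
    // "left,top,width,height" entries joined with ';'.
    public static HttpRequestMessage Build(string endpoint, string apiKey, byte[] imageBytes,
        IEnumerable<FaceRect> faceRectangles = null)
    {
        string uri = endpoint;
        if (faceRectangles != null && faceRectangles.Any())
        {
            uri += "?faceRectangles=" + string.Join(";",
                faceRectangles.Select(r => $"{r.Left},{r.Top},{r.Width},{r.Height}"));
        }

        var request = new HttpRequestMessage(HttpMethod.Post, uri);
        // Cognitive Services subscription keys travel in this request header.
        request.Headers.Add("Ocp-Apim-Subscription-Key", apiKey);
        request.Content = new ByteArrayContent(imageBytes);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        return request;
    }
}
```

To actually call the service you would pass the request to `HttpClient.SendAsync` and read the JSON body from the response; the client library used in this article does that for you.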

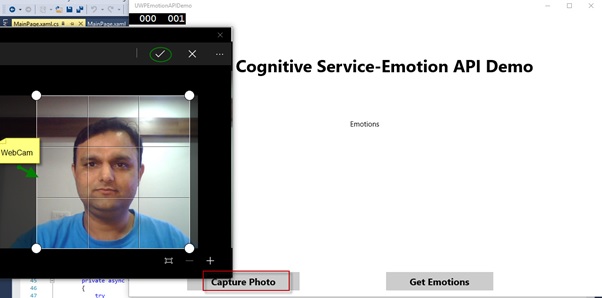

Then run the project:

Click the Capture Photo button; the webcam turns on and captures your photo. Then click the Get Emotions button to see the emotion scores.

See Also

Another important place to find an extensive amount of Cortana Intelligence Suite related articles is the TechNet Wiki itself. The best entry point is Cortana Intelligence Suite Resources on the TechNet Wiki.