Logic Apps: Face Verification Using Microsoft Cognitive Services Face API and Logic Apps

What are Logic Apps?

Logic Apps are integration workflows hosted on Azure that are used to create scalable integrations between systems. They are easy to design and connect disparate systems through many out-of-the-box connectors, with the option of building custom connectors for specific purposes. This simplifies integrations that were previously complex to design: a workflow can be put in place and running with a minimum of steps.

What are Cognitive Services?

As per Microsoft:

Microsoft Cognitive Services (formerly Project Oxford) are a set of APIs, SDKs and services available to developers to make their applications more intelligent, engaging and discoverable. Microsoft Cognitive Services expands on Microsoft's evolving portfolio of machine learning APIs and enables developers to easily add intelligent features such as emotion and video detection; facial, speech and vision recognition; and speech and language understanding - into their applications. Our vision is for more personal computing experiences and enhanced productivity aided by systems that increasingly can see, hear, speak, understand and even begin to reason.

Scope

The Microsoft team has released several connectors for the Face API cognitive service, which make it easy to use the service from a Logic App in systems that need facial recognition. These Face API connectors are in Preview and must be chained together in a particular way. This article discusses how to implement a business flow in which two images are compared to check whether the face in both images belongs to the same person. The Face API compares the two pictures (one reference image and one image to be verified), runs its verification algorithms, and returns a confidence score that indicates how alike the faces in the two images are.

Flow For Face Verification

- Create a person group with a unique person group ID, in which reference pictures of the face of each person will be stored.

- Create a person in the person group.

- Attach images to the person created (at least one image per person is required).

- Select the image containing the face to be verified.

- Detect the face in that image.

- Call the Face Verification method to get the confidence score from the comparison between the reference image and the image that requires verification.
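The steps above can be sketched as an ordered list of REST calls. This is only an illustration of what the connectors are presumed to do on the Logic App's behalf: the paths and body fields are assumed from the Face API v1.0 REST reference, the helper name `plan_calls` is hypothetical, and the person ID and face ID placeholders stand for values that are only known once the earlier calls have returned. Selecting the image is not an API call, so six bullets map to five requests.

```python
import json

# Assumption: base path of the Face API v1.0 REST surface. The host part comes
# from the endpoint shown on the Face API account in the Azure portal.
BASE = "/face/v1.0"


def plan_calls(group_id, group_name, person_name, reference_url, probe_url,
               person_id="<personId returned by Create Person>",
               face_id="<faceId returned by Detect>"):
    """Return the ordered (method, path, body) tuples for the verification flow.

    Nothing is sent over the network; the function only describes the calls.
    """
    person_path = f"{BASE}/persongroups/{group_id}/persons"
    return [
        # 1. Create the person group.
        ("PUT", f"{BASE}/persongroups/{group_id}", {"name": group_name}),
        # 2. Create a person inside the group.
        ("POST", person_path, {"name": person_name}),
        # 3. Attach the reference image to that person.
        ("POST", f"{person_path}/{person_id}/persistedFaces",
         {"url": reference_url}),
        # 4. Detect the face in the image to be verified.
        ("POST", f"{BASE}/detect", {"url": probe_url}),
        # 5. Verify the detected face against the stored person.
        ("POST", f"{BASE}/verify",
         {"faceId": face_id, "personGroupId": group_id, "personId": person_id}),
    ]


if __name__ == "__main__":
    calls = plan_calls("faceapigrpid1", "faceapigrpname1", "TestPerson1",
                       "url here", "url here")
    for method, path, body in calls:
        print(method, path, json.dumps(body))
```

Each request would also need the account's access key in a header; that detail is left out here because the Logic App connection handles it.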

Logic App Flow

The following figure describes the flow of the Logic App that will be used for a sample facial verification.

Each of the bullet points highlights a call that needs to be made to the Face API to verify the face.

Design

Face API Account

First, an account for the Face API needs to be created in order to use the Face API connectors in the Logic App. The following screenshots show how the Face API connection is created.


Once the API account is created, select it from the dashboard to open the following window. The access keys and endpoint shown there are required to create a connection to the Face API.

Now the Face API connectors are ready to be used in the Logic App.

Logic App Flow

Note: Person Group Id, Person Id and Name need to be provided as custom values (the drop-down allows a custom value to be entered).

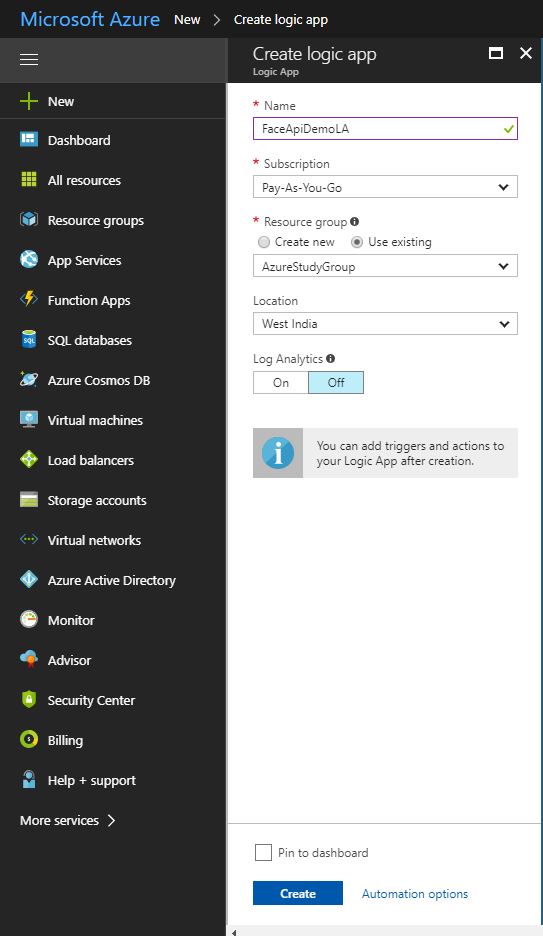

Create a Logic App named FaceApiDemoLA.

Select details such as Subscription, Resource Group and Location, and then select Create on the blade. Refer to the screenshot below.

Select the HTTP Request-Response template as shown in the screenshot below.

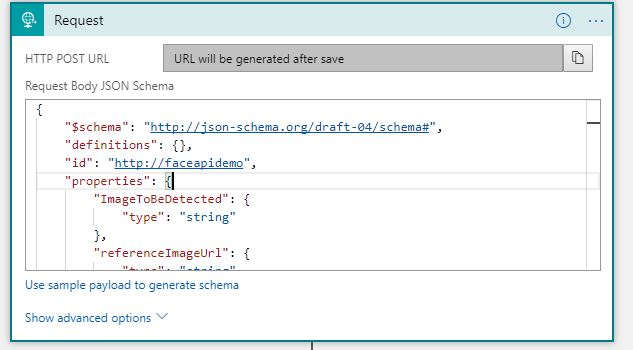

Use the following JSON schema to describe the request to the Logic App.

    {
      "$schema": "http://json-schema.org/draft-04/schema#",
      "definitions": {},
      "id": "http://faceapidemo",
      "properties": {
        "ImageToBeDetected": { "type": "string" },
        "referenceImageUrl": { "type": "string" },
        "PersonGroupId": { "type": "string" },
        "PersonGroupName": { "type": "string" },
        "PersonName": { "type": "string" }
      },
      "required": [
        "referenceImageUrl",
        "ImageToBeDetected",
        "PersonGroupId",
        "PersonGroupName",
        "PersonName"
      ],
      "type": "object"
    }

Paste the above schema into the Request Body JSON Schema field as shown below.
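Before calling the Logic App, a test payload can be checked against the rules the schema above expresses: all five properties are required and each must be a string. The following is a minimal hand-written sketch of that check in Python, not a general JSON Schema validator; the function name `validate_request` is hypothetical.

```python
# Field names copied from the "required" list of the request schema.
REQUIRED_FIELDS = (
    "referenceImageUrl",
    "ImageToBeDetected",
    "PersonGroupId",
    "PersonGroupName",
    "PersonName",
)


def validate_request(payload):
    """Return a list of problems; an empty list means the payload passes.

    Only the two rules used by the schema are checked: every field is
    required and every field must be a string.
    """
    if not isinstance(payload, dict):
        return ["payload must be a JSON object"]
    problems = []
    for field in REQUIRED_FIELDS:
        if field not in payload:
            problems.append(f"missing required field: {field}")
        elif not isinstance(payload[field], str):
            problems.append(f"field must be a string: {field}")
    return problems


if __name__ == "__main__":
    sample = {
        "ImageToBeDetected": "url here",
        "referenceImageUrl": "url here",
        "PersonGroupId": "faceapigrpid1",
        "PersonGroupName": "faceapigrpname1",
        "PersonName": "TestPerson1",
    }
    print(validate_request(sample))
```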

[Screenshot: JSON request payload](resources/6170.JsonRequestPayload.JPG)

Initialize a string variable to record the confidence score after the face verification. Refer to the screenshot below.

[Screenshot: Initialize Variable](resources/8666.InitializeVariable.JPG)

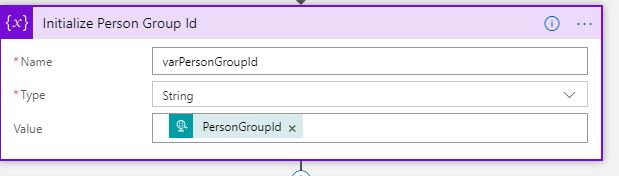

Initialize a variable to track the person group ID as shown below.

Select the Face API - Add Person Group action from the list of available actions and configure the connector as shown below. Use the PersonGroupId and PersonGroupName values from the previous step (the HTTP Request trigger).

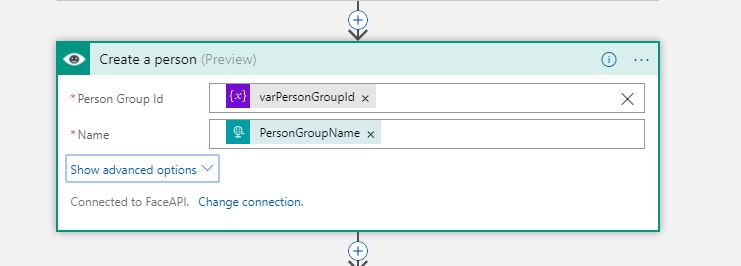

Add the Face API - Create Person action from the list of available actions. Configure the connector as shown below. Use the person group ID variable and the person name (PersonName) from the HTTP request.

[Screenshot: Create a Person](resources/0815.Create-a-Person.JPG)

Now a face needs to be attached to the person created in the step above. That API call returns a unique person ID, which is used to attach a face to the person. Since the Face API connector is in Preview, it only accepts an image URL, so the referenceImageUrl value from the HTTP request is used. Refer to the screenshot below.

[Screenshot: Add Face](resources/6038.AddFace.JPG)

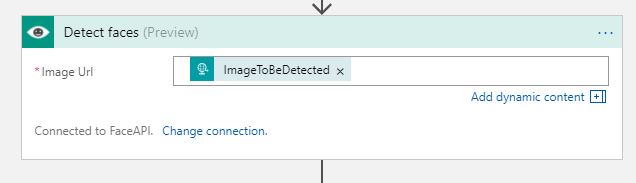

Now the reference face is set up. Any face that needs to be verified will be compared with the reference face attached in the Add Face step above. Select the Face API - Detect Face action from the list of available actions. The ImageToBeDetected field from the request payload (which contains the URL of the image to be verified) should be passed to the URL field of the Detect Face action. Refer to the screenshot below.

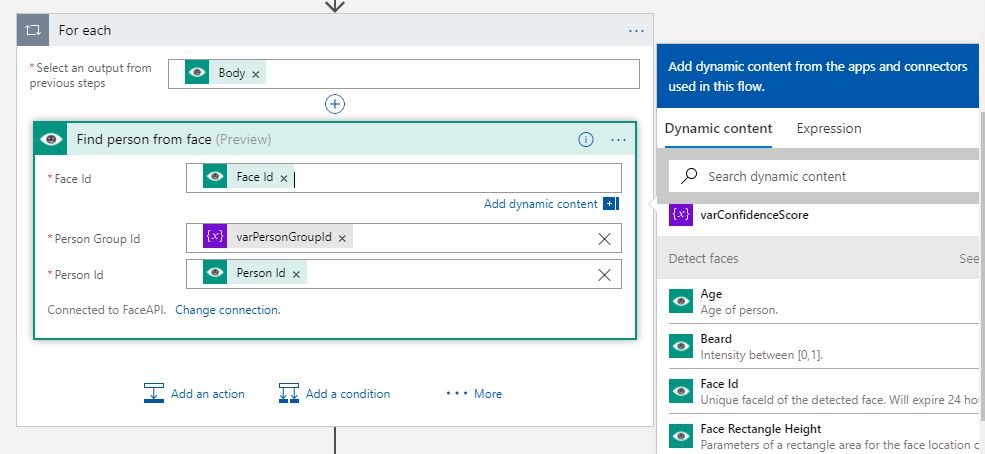

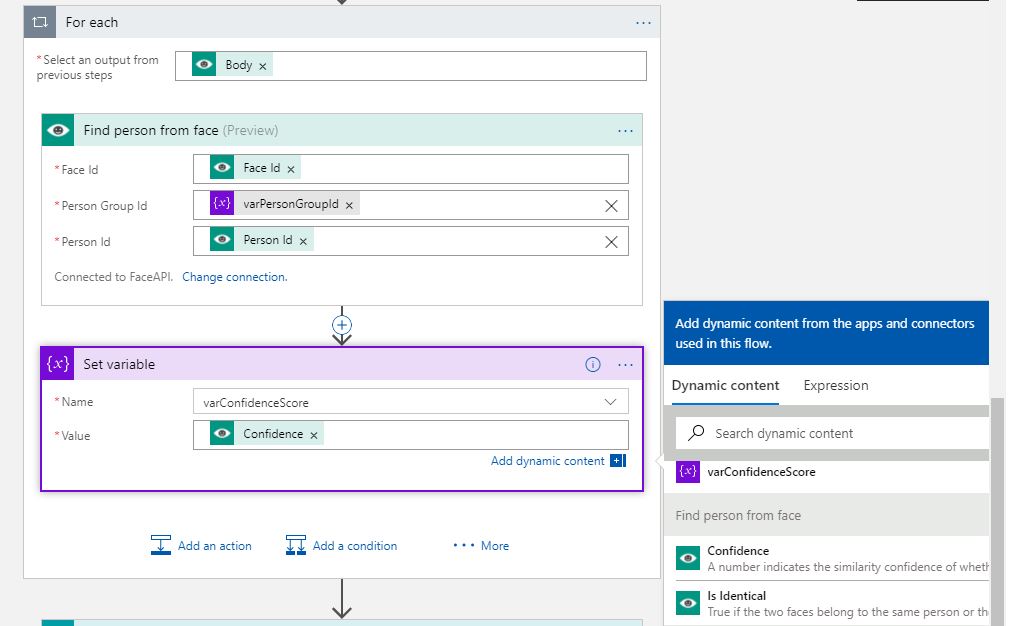

Select the Face API - Find Person From Face action from the list of actions. Provide the Face ID from the Detect Face step, the Person ID from the Create Person step, and the Person Group ID from the person group ID variable initialized earlier. Since the Detect Face action can detect multiple faces in an image, the Logic App designer adds a For each loop automatically. Refer to the screenshot below for a sample.

Set the confidence score variable initialized earlier to the confidence score received in the response to the Find Person API call. Refer to the screenshot below.
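The effect of the designer-generated loop on the confidence score variable can be sketched in Python. This is an illustration under assumptions: the detect result is taken to be a list of objects that each carry a `faceId`, the verify result an object with a `confidence` field, and `verify` is a hypothetical stand-in for the Find Person From Face action rather than a real client call.

```python
def run_verification(detected_faces, verify):
    """Mimic the For each loop that wraps the verify step.

    detected_faces: list of dicts, each with a "faceId" key (assumed shape).
    verify: callable taking a face ID and returning a dict with "confidence".
    Returns the value the string variable holds after the loop ends.
    """
    confidence_score = ""  # the string variable initialized earlier in the flow
    for face in detected_faces:
        result = verify(face["faceId"])
        # Each iteration overwrites the variable, so only one score survives.
        confidence_score = str(result["confidence"])
    return confidence_score


if __name__ == "__main__":
    # Fake verify step that returns a fixed score, for demonstration only.
    def fake_verify(face_id):
        return {"isIdentical": True, "confidence": 0.75}

    print(run_verification([{"faceId": "face-1"}], fake_verify))
```

With a single face in the image, as in the test later in this article, the variable simply holds that face's score. If the image contains several faces, the variable ends up with the score of whichever iteration writes last, so an image with one clearly visible face is the safer input for this flow.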

Add the confidence score received to the JSON payload that will be sent as the response. Configure the Response action as shown below.

Save the Logic App. The final design of the Logic App looks like the one below.

[Screenshot: Complete Logic App](resources/0028.CompletrLA.JPG)

Saving the Logic App generates a URL, which should be used to test the Logic App.

Testing The Logic App

The following images are used for testing the Logic App.

Reference Image

[Reference image](resources/2275.ReferenceImage.jpg)

Image To Be Verified

[Image to be verified](resources/7206.FaceToBeDetected.jpg)

Since the Face API connector is in Preview, the connectors only accept URLs to the images. Hence these images were stored on a server so that the testing could be done.

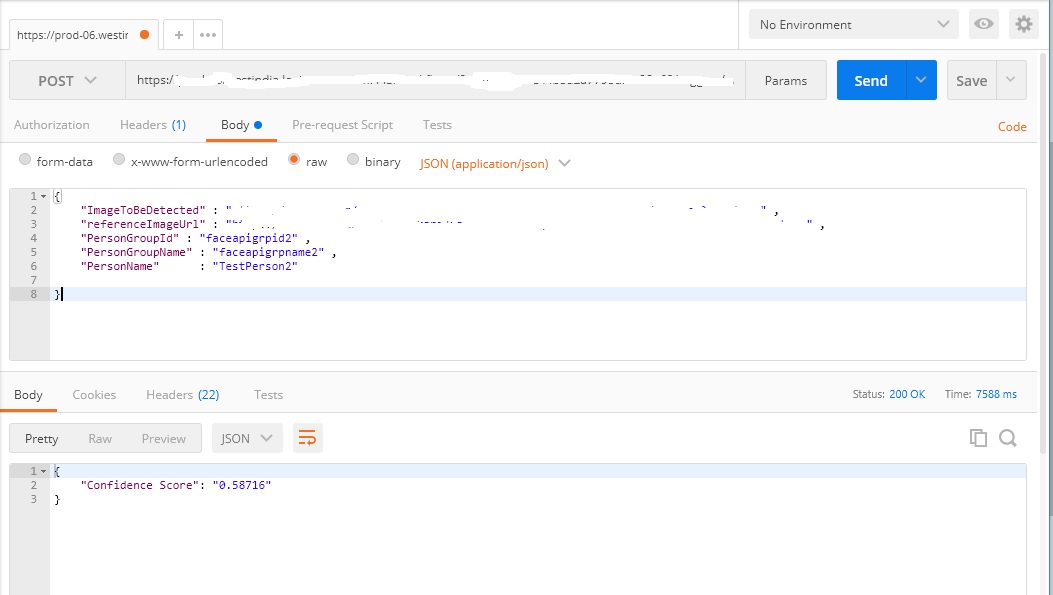

To test the Logic App, Postman can be used to call the HTTP Request URL generated after saving the Logic App.

The sample message sent to the Logic App is shown below.

    {
      "ImageToBeDetected": "url here",
      "referenceImageUrl": "url here",
      "PersonGroupId": "faceapigrpid1",
      "PersonGroupName": "faceapigrpname1",
      "PersonName": "TestPerson1"
    }

The Content-Type header for the request should be set to application/json in Postman.
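For readers who prefer a script to Postman, the same test request can be sketched with Python's standard library. The URL below is a hypothetical placeholder, not a real endpoint; it must be replaced with the URL generated when the Logic App is saved. The sketch only builds the request, and the call that would actually send it is left as a comment.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the URL generated on saving the Logic App.
LOGIC_APP_URL = "https://example.invalid/logic-app-callback-url"


def build_test_request(url, payload):
    """Build a POST request with a JSON body and the matching Content-Type."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


payload = {
    "ImageToBeDetected": "url here",
    "referenceImageUrl": "url here",
    "PersonGroupId": "faceapigrpid1",
    "PersonGroupName": "faceapigrpname1",
    "PersonName": "TestPerson1",
}
request = build_test_request(LOGIC_APP_URL, payload)

# To send it once a real URL is in place:
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```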

The screenshot of the Postman test request and response is shown below.

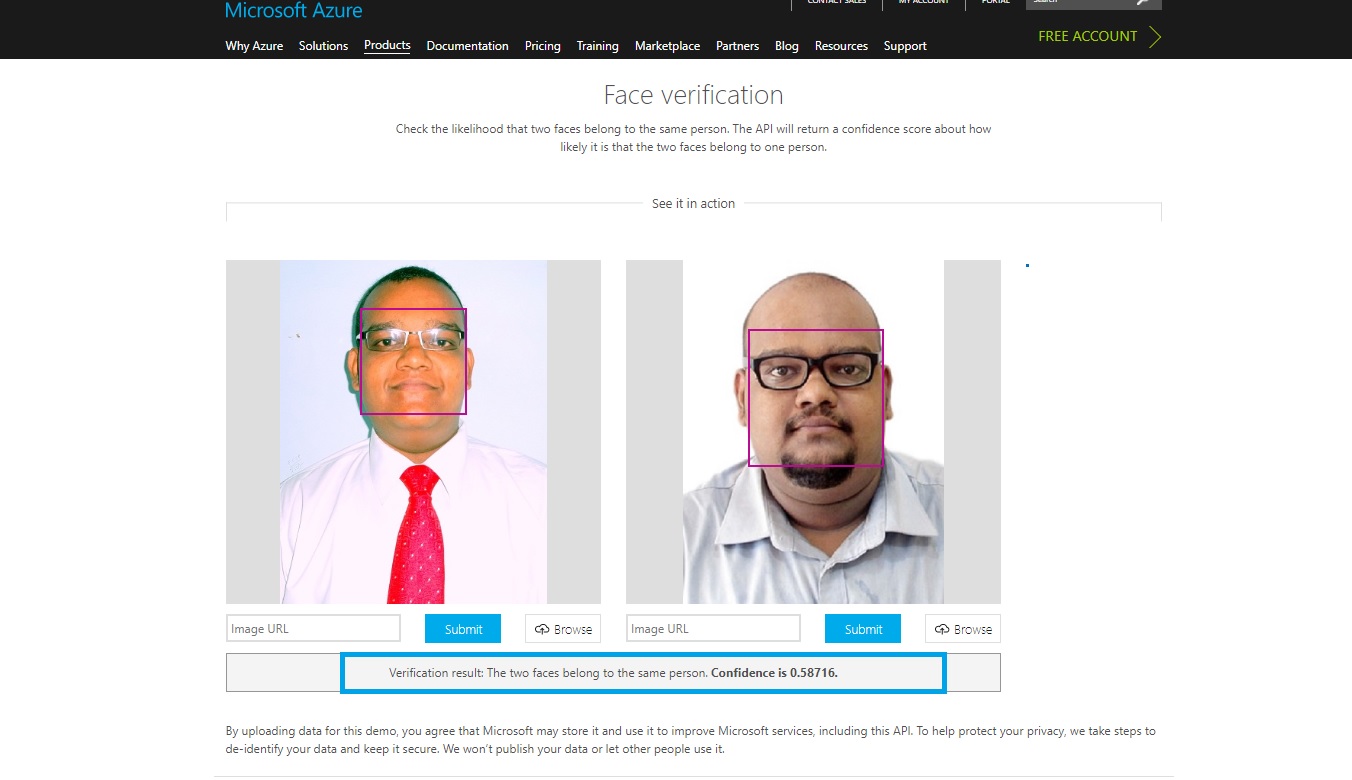

The actual results of face verification for the same images on the Azure demo page are shown below.

As visible, both tests give the same confidence value, which shows that the integration using the Logic App is successful.

Future Possible Scenarios

The Face API connector is currently in Preview, so for steps such as adding a face to a person or detecting a face, the connectors only accept an image URL. In the future, it might be possible to supply images from connectors such as the Get File Content connector, which picks up the content of a file on a local system through an on-premises data gateway.

Conclusion

Logic Apps can be used successfully to integrate the Microsoft Cognitive Services Face API and verify whether two faces belong to the same person. This capability can be extended to many persons within the same group, so that the app compares the face in the image against a variety of faces.

See Also

To read more about Logic Apps, refer to: Logic Apps

To read more about Cognitive Services, refer to: Cognitive Services