Azure Data Factory: Cost effective way of running pipelines with Integration Runtime

Background

Azure data factory v2 (referred as ADF) is an online data integration service which can create, schedule and manage your data integrations at scale.

When we speak of any cloud-based solution – the design part of it needs to be done diligently. This is not only to ensure utilize cloud scale optimally but also to ensure the scale consumption is done for a limited time and only when you need it, this can essentially make an enormous impact on your operational cost.

We will only be speaking of ADF pipeline and SSIS runtime costs to keep the article short and to the point. This article assumes that you have a basic understanding of Azure data factory and its integral components, if you are not familiar with it, then it is highly recommended that you should learn the essentials of it by visiting the documentation.

Scenario

We will create a scenario and keep it simple,

Let's assume that we have some pipelines which are scheduled for execution at a specific time during a day and once they are invoked, the pipelines then invoke SSIS packages execution in a series. Once all packages are finished executing, pipelines are said to be completed and that’s let's call it the end of the schedule of our pipelines.

With the scenario set, let's move to the next part i.e. execution.

The primary thing to consider here is that we turned on the SSIS integration runtime infrastructure of ADF right before the time when pipelines were scheduled for their execution. Assuming that the pipelines will take max up to 4 hours to complete and then we turn off the ADF integration runtime. We have to utilize the Azure automation here in order to automate this turning ON and turning OFF of the ADF's integration runtime.

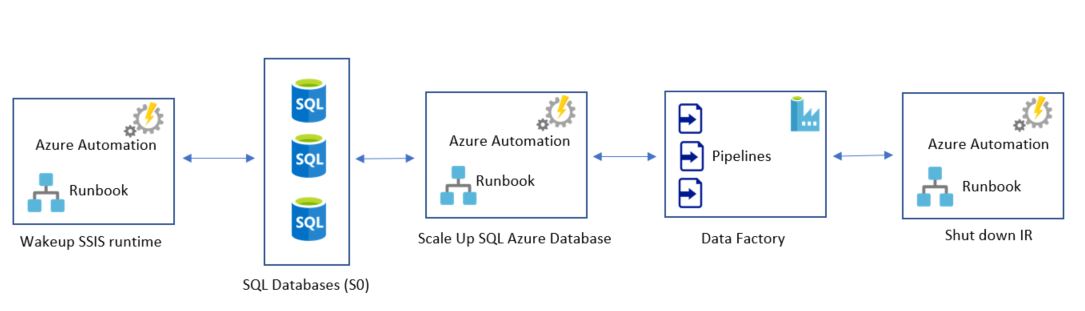

So overall initial architecture looks like this

Now the interesting bit resides in SSIS packages part – when the series of packages are executed by the pipeline, the activities inside the packages are the ones doing all the heavy work e.g. interact with some quite large tables, reading delta from large tables or merging data into some tables. The process can run from minutes to hours depending upon the data we are dealing with (e.g. reading delta from last week or last day or even last year etc.). So while doing this – we often will hit the DTU threshold of underlying SQL DBs (yes, chances are still there even when you have set up right indexes on tables and even though you have written your merge statements effectively).

Note that hitting DTU threshold does not mean that your pipelines will fail, it is just that the amount of time to complete the execution will increase and what does this mean? This means that for this entire duration of execution, your SSIS runtime will be turned ON and this essentially means it will cost us money. So what can be done for this?

At the time of writing this article, one node of SSIS runtime (1 core, 3.5 GB RAM) provisioned in North Europe data center costs you $ 0.5916 per hour, so even if we are running our pipelines daily which runs for that assumed 4 hours duration, we would be spending $71.1 per month. Remember, though this still sounds cheap and cost-effective there is a good chance to save few more bucks here for sure. But how?

Possible solutions or workarounds

Use of Elastic pools

This sounds good, however consider the scenario where you have 10 SQL databases, and all are resident of same elastic pool and data factory pipelines needs to be scheduled at same time and each database needs to have 50 DTUs at same time but you have only 100 reserved DTUs shared across all the databases so chances that you would still enter in the same situation where all DBs start to show DTU spikes and we hit the threshold. Another cons of it are that we would be reserving 100 DTUs un-necessarily for all the time even when we will not be really needing those and hence the elastic pool goes out of the picture for this scenario.

Scaling out SSIS runtime nodes

Sounds interesting again and should be a solution for a number of other scenarios but not in our case. Why? Because at the end of the day – our SSIS packages are loading and unloading data directly on the SQL Azure database tables and it is the SQL Azure database’s DTU we were running out of and not the CPU or memory of underlying node which hosts our SSIS integration services.

Solution

So Ideally, we would need a solution which can leverage the scale power of SQL Azure databases and Integration runtime together but at the same time, it should be cost effective. Hence one possible solution could be easily divided into below distinct sections

- Turn on the runtime right before pipelines start to run.

- Scale up underlying SQL Azure databases to higher tier e.g. S4

- Wait for scale operation to finish.

- Run the SSIS packages in IR.

- Scale down the SQL database to smallest tier e.g. S0

- Scan pipelines status before scheduler turns off the runtime.

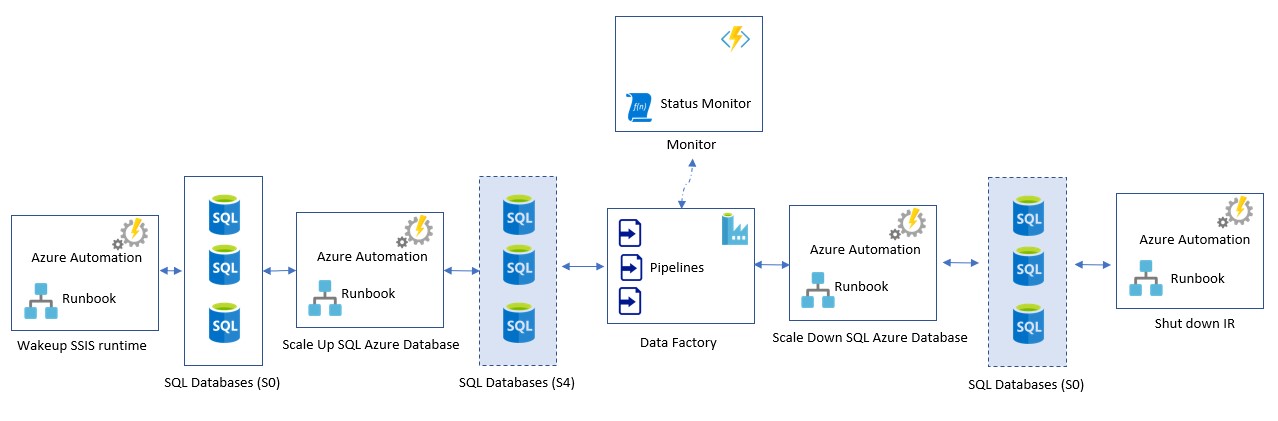

Let’s see how it can easily be visualized

1. Turn on the runtime right before pipelines start to run.

It was already in place and was achieved using Azure automation so that remains unchanged and untouched.

2. Scale up underlying SQL Azure databases to higher tier and (5) scan pipelines status before scheduler turns off the runtime.

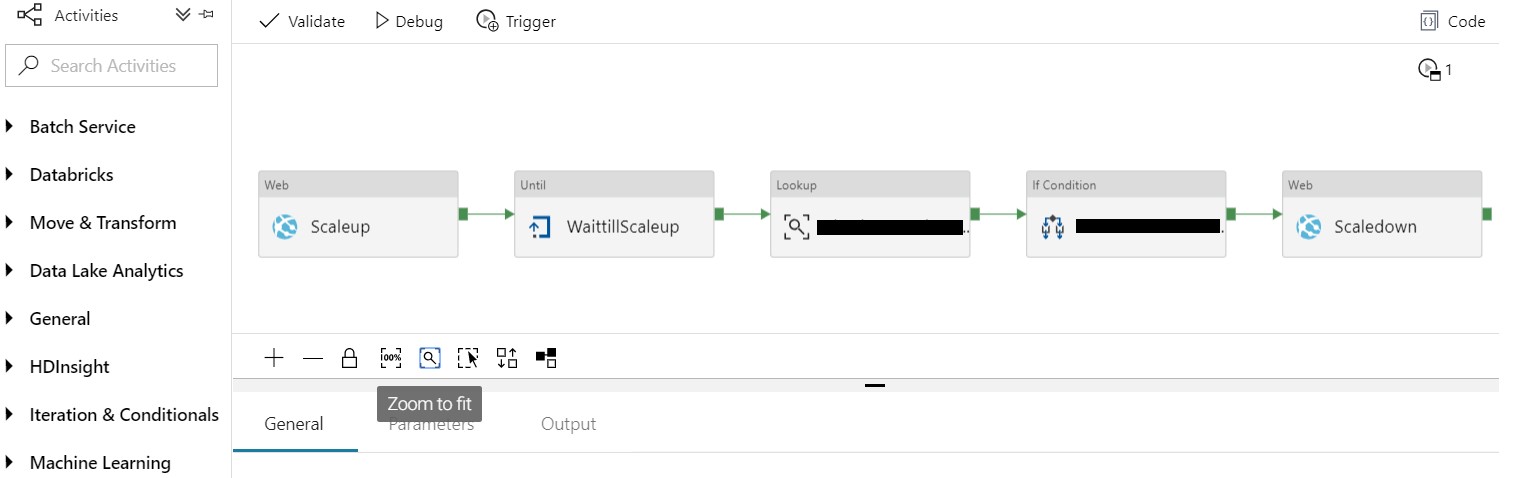

Step 2 and 5 can again be achieved using Azure automation services with a small PS workflow which can vertically scale up or down our databases. All we needed to do is to invoke this automation workflow using a Webhook which can be called from Azure data factory pipeline. Feel free to check it here on TechNet gallery.

3. Wait for scale operation to finish.

Step 3 is achieved by making use of a small Azure function to whom we can ask the current tier of the SQL Azure database and by making use of until activity in DF pipeline we wait till we get the result as the desired tier which we asked for. The azure functions can be called directly from within your data factory pipeline (Using web activity).

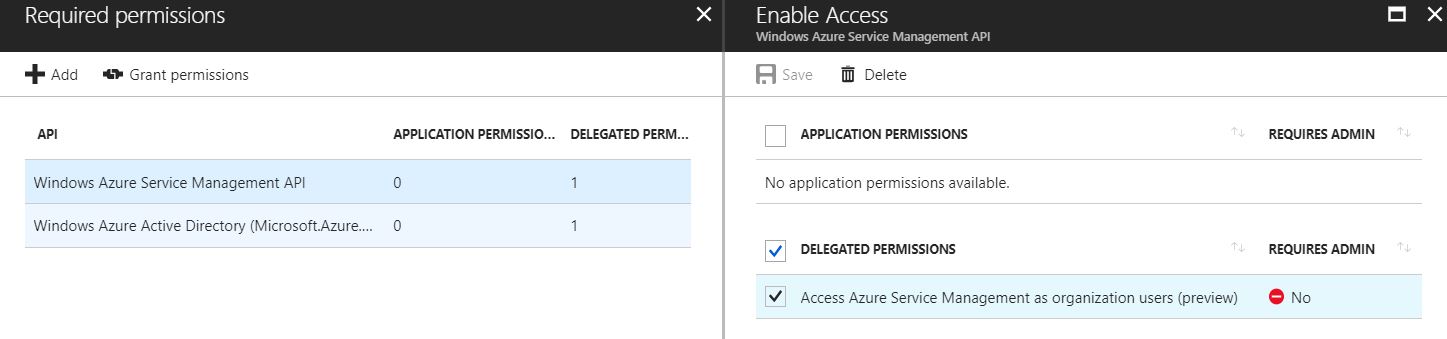

Sharing the azure functions source which we can use just for the demonstration purpose. Note that in order to make this function working - we should be creating an Azure AD app and give it access to the Windows Azure Service Management API.

Also, ensure that the Azure AD app has got access to the resource group in our subscription containing our SQL Azure databases./p>

We have used the Azure SQL management nuget to talk to management API, you can refer it https://www.nuget.org/packages/Microsoft.Azure.Management.Sql.

[FunctionName("GetSqlStatus")]

public static async Task<HttpResponseMessage> Run([HttpTrigger(AuthorizationLevel.Function, "post", Route = null)]HttpRequestMessage req, TraceWriter log)

{

string eventData = req.Content.ReadAsStringAsync().Result;

InputData inputData = JsonConvert.DeserializeObject<InputData>(eventData);

AuthenticationResult token = AuthHelper.InitializeAuthenticationResult();

SqlManagementClient sqlClient = new SqlManagementClient(new TokenCredentials(token.AccessToken));

sqlClient.SubscriptionId = "your_subscription_id";

var database = await sqlClient.Databases.GetAsync(inputData.ResourceGroupName, inputData.SqlServerName, inputData.DatabaseName);

JObject result = new JObject();

if (database.CurrentServiceObjectiveName == inputData.PerfLevel)

{

result.Add("status", "true");

}

else

{

result.Add("status", "false");

}

return req.CreateResponse(HttpStatusCode.OK, result);

}}

4. Run the SSIS packages in IR.

Step 4 – This is a core activity which can invoke the SSIS package serial execution using a stored procedure. The core command can be checked at here.

6. Scale down the SQL database to smallest tier

Step 6 - Comes the last step i.e. stopping the SSIS runtime node when you have no pipelines running. This again is achieved using Azure automation workflow which triggers itself in every 1 hour (this being minimum trigger frequency available at the time of writing this article) and scans your ADF pipeline’s run status of last 2 hours, if it does not see any running pipeline, it turns off the IR node. i.e. SSIS integration runtime. We have already shared the workflow here, so you can check it out there on TechNet gallery.

You might have already noticed that we have mostly achieved everything either using Azure automation and using Azure functions and might have figured the reason behind it too! Yes, the cost – Azure functions does offer you 10 million free executions each month and automation service to offer 500 minutes of process automation free each month.

After implementing this approach, the runtime for pipelines could get reduced significantly because the SQL Azure database leverages S4 tier which offers dedicated 200 DTUs.

And finally, let’s look at the pipeline designer and understand how we can incorporate steps above

Conclusion

Now, let’s do some basic math here and find out approximate operational cost difference (skipping data read/write costs). Note that we are going to make use of Azure cost calculator and use the prices which are shown at the time of writing this article.

Previous Model:

- SQL database S0 - $0.48 per day

- SSIS runtime uptime (4 hours) - $2.347 per day

- Per day operational cost appx $2.82, translates to $84.6 per month.

Updated Model:

- SQL database S4 for 1 hour per day – $0.40

- SQL database S0 for rest of the day (23 hours) - $0.46

- SSIS runtime for 1 hour per day - $0.59

- Azure functions – None

- Azure automation – None

- Per day operational cost approximate $1.45, translates to $43.5 per month. Numbers do indicate that the cost seems to have reduced significantly and have reduced it almost to half for each month.

Hope this helps someone who is headed on the same path of running Azure infrastructure and solutions in a cost-effective way.

References

This article was originally published here at Cost effective way of running Azure Data Factory Pipelines with Integration Run-time. This link is external to TechNet Wiki. It will open in a new window. Please feel free to improve this article by adding new missing or important content, fix spell and so on.