Azure data factory v2: Copy content of multiple blob to different SQL tables

Scenario

We have different files in a blob container and we need to copy the content to SQL table .

This is how the container looks like. We have two file which are different set of data.

Few points to consider

The number of columns which is on the blob should not increase from the initial load . The reason being on the first run it creates a table as per the initial blob , if we add more columns later , the insertion to the table will fail , complaining about the "column does not exists" . If your scenario is that the blob structure changes , then we have to use a dynamic expression for the table name so that is always unique ( may be adding a date ) .

The idea is to copy the data to SQL and the success criteria is to move the content to SQL table .

SELECT * FROM DEPT

SELECT * FROM EMP

We will be using the below activities

- GetMetadata

- Foreach

- Setvariable – optional

- CopyActivity

- Dynamic expression .

**Steps **

Use the GetMetadata activity and point to the correct blob container/folder.Select the field list -> Child Items

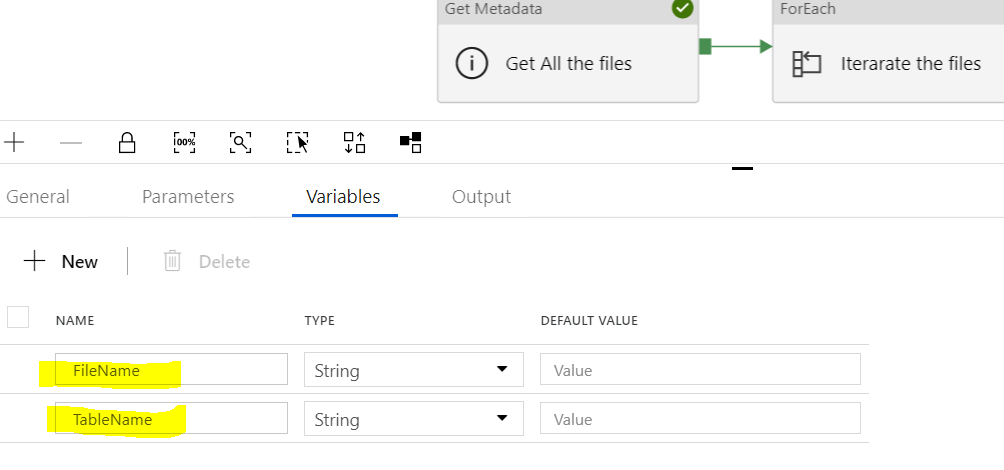

Also set the variables name which we will use later

2. Add the foreach activity on the canvas and update the setting and also set the Items property to

@activity('Get All the files').output.childItems

3.Add one setvariable activity in the foreach to capture the file name eg (emp.txt , we will use this while coping the blob )

4.Add one more SetVariable to capture the SQL table name eg if the blob name is dept.txt the table name will be dept . We will use the dynamic expression

@substring(variables('FileName'),0,indexof(variables('FileName'),'.'))

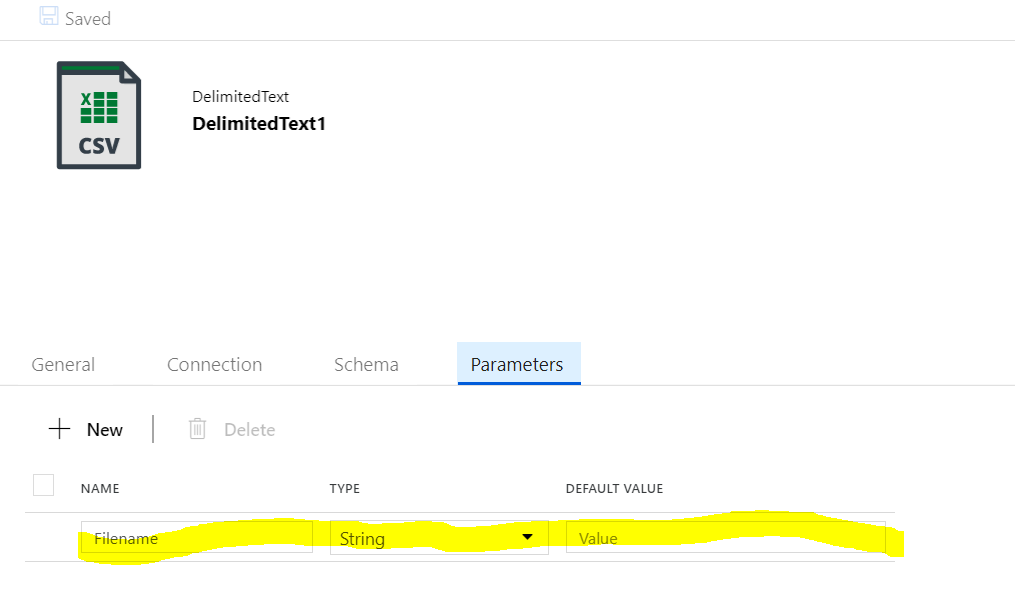

- Add the copy activity , first parameterized the Source dataset

Add the parameter in the dataset

Now set the parameter

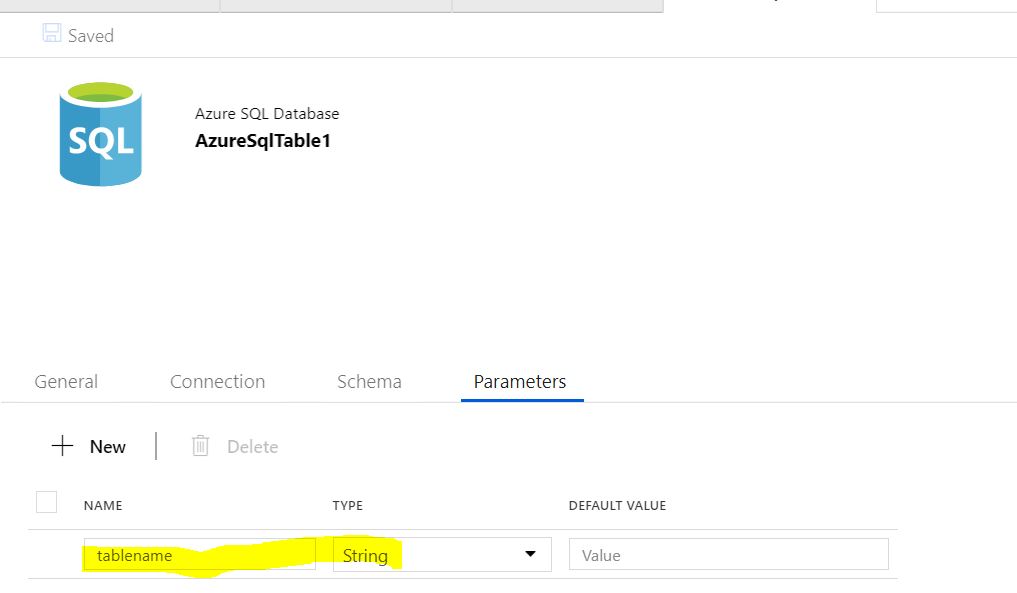

6. Lets parameterized the Sink side .

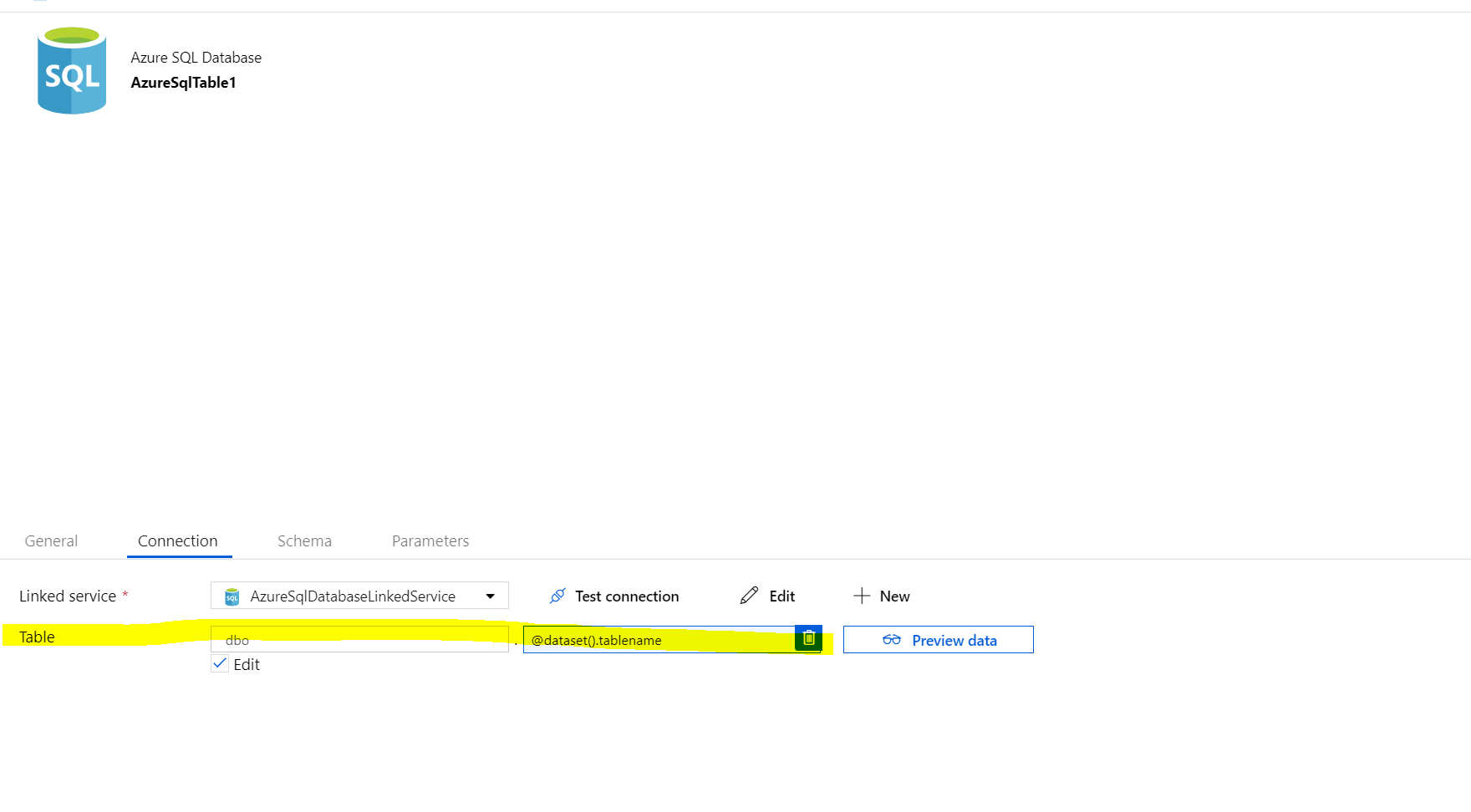

6.1 Add the parameter in the connection

7. Set the parameter of the sink dataset. @variables('TableName') and also table option to “Auto Create Table” , save the pipeline and debug the pipeline .

If all goes all you should have the data on the SQL side .

SELECT * FROM DEPT

SELECT * FROM EMP