Connect Power BI to Azure Databricks

Microsoft Power BI is a business analytics service that provides interactive visualizations with self-service business intelligence capabilities, enabling end users to create reports and dashboards by themselves without having to depend on information technology staff or database administrators.

When you use Azure Databricks as a data source with Power BI, you can bring the advantages of Azure Databricks performance and technology beyond data scientists and data engineers to all business users.

Publish to Power BI Online from Azure Databricks

When using Azure Databricks as a data source with Power BI Online, you can create Power BI datasets from tables or schemas directly from the Databricks UI.

Requirements

Your data must be in Unity Catalog, and your compute (cluster) must be Unity Catalog enabled. Hive metastore is not currently supported.

You must have a premium (premium capacity or premium per-user license) Power BI license.

You must grant the following permissions to the registered Entra ID app, Databricks Dataset Publishing Integration, that you are using to publish from Databricks to Power BI:

Content.Create

Dataset.ReadWrite.All

Workspace.Read.All

For more information, see Microsoft Entra Permissions.

Note

Entra Admin consent is typically required for these permissions, especially for workspace and dataset-level access in Power BI. See Review admin consent requests for instructions.

You must enable Read Write for the XMLA Endpoint in your Power BI capacity. Follow this link for instructions.

If users would like to edit semantic models in Power BI Service after publishing, you must enable Users can edit data models in Power BI service (preview) in the workspace settings. You can also edit the Semantic Model using Tabular Editor by making a connection using the XMLA endpoint.

If your Power BI workspace uses a private link, you must update the dataset’s datasource credentials manually in Power BI.

Publish Azure Databricks tables to a Power BI dataset

Sign in to your Databricks workspace and click

Catalog in the sidebar to open Catalog Explorer.

Catalog in the sidebar to open Catalog Explorer.Select a compute resource from the drop-down list at the top right.

Open a catalog and select the schema or tables to be published. Do not select from a hive metastore or the samples catalog.

On the upper right, click Use with BI tools for a schema or Open in a dashboard for a table.

Select Publish to Power BI workspace.

In the Connect to partner dialog, click Connect to Microsoft Entra ID to authenticate.

In the Power BI workspaces, select the Power BI workspace.

In Dataset Mode, select either DirectQuery or Import.

In Authentication Method in Power BI, select OAuth or PAT (Personal Access Token).

Databricks recommends using OAuth since it allows for fine-grained access control and user-level auditing. OAuth credentials might need to be configured on the Power BI dataset settings page under Data source credentials. If you select Personal Access Token (PAT), a PAT is created on your behalf to allow Power BI to access the semantic model.

In Dataset Name, select Publish as a new data set or Use an existing data set.

When you choose Use an existing dataset, the following is true:

- If the dataset exists, it is not overwritten. Updates only append new records in a given schema.

- If the selected table is not part of the existing dataset, it’s added.

- If the table was already in your dataset and new columns were added after the last update, those columns are automatically added.

- You can choose a different storage model for the new table to create a composite semantic model.

If you have an existing semantic model that you want update while you keep existing data connections in place, choose Use an existing dataset. If you want to create a new dataset with a new semantic model, choose Publish as new data set.

- If the dataset exists, it is not overwritten. Updates only append new records in a given schema.

Click Publish to Power BI.

In 10 to 20 seconds when the dataset is published, click Open Power BI to open your Power BI dataset in a new tab.

Features and notes

- When publishing a schema containing multiple tables, all tables with columns are published. Tables with no columns are not published.

- Comments on a table’s columns in Azure Databricks are copied to the descriptions of corresponding columns in Power BI.

- Foreign key relationships are preserved in the published dataset. However, Power BI only supports one active relationship path between any two tables. When multiple paths are present in the schema in Azure Databricks, some of the corresponding relationships in Power BI are set to inactive. You can later change which relationships are active or inactive in the data model view in Power BI.

- When using OAuth or an on-premises data gateway, credentials might need to be configured under “Data source credentials” on the Power BI dataset settings page.

- Currently, users can only publish directly to Power BI workspaces in their home tenant. Publishing in tenants where they are guest users is not supported.

Troubleshooting

| Error | Notes |

|---|---|

| “Approval required” when launching Publish to Power BI for the first time | If you see this message, you will need Entra Admin approval before you can grant permissions to this integration feature. Contact your Entra Admin to approve the request. Follow this link for instructions. |

| PowerBINotLicensedException | Ensure that you have a Power BI Premium license. |

| We couldn’t deploy this dataset. Contact a capacity administrator to make sure XMLA read/write support is enabled in the capacity settings on the Power BI Premium capacity, and then try again. For additional information, see ‘XMLA read/write support’ in the product documentation. | Contact your Power BI capacity administrator to enable Read and Write capabilities under XMLA endpoint in the capacity settings. Follow this link for instructions. |

| XMLA endpoint feature is disabled. Turn on Analyze in Excel feature in PowerBI.com to enable this feature. | Contact your Power BI capacity administrator to enable Read and Write capabilities under XMLA endpoint in the capacity settings. Follow this link for instructions. |

| CapacityNotActive | Your capacity may be paused. Contact your capacity administrator to check the capacity status. |

The database with the name of ‘<ccon>_</ccon>’ already exists in the ‘<ccon>workspace </ccon>’ _. |

Ensure that you have the permissions to create a semantic model. Then, retry Publish to Power BI to publish the semantic model with an incremented version number. |

| You cannot use Direct Lake mode together with other storage modes in the same model. Composite model does not support Direct Lake mode. Remove the unsupported tables or switch them to Direct Lake mode. | Since Publish to Power BI creates a semantic model with Import or DirectQuery mode, it cannot be used to publish to an existing dataset that uses Direct Lake mode. Follow this link to learn more. |

The ‘<euii> *</euii>’ user does not have permission to call the Discover method. |

Ensure that you are publishing to a Power BI workspace with a Premium license mode. You cannot publish to a workspace with a Pro license mode. |

Either the ‘<euii> *</euii>’ user does not have permission to create a new object in ‘***’, or the object does not exist. |

You may have the Viewer role in the Power BI workspace. Check whether you have the permissions to create a semantic model. Follow this link for more information. |

| “Failed to update data source credentials: [Microsoft][thriftextension] (14) Unexpected response from server during a HTTP connection: Unauthorized/Forbidden error response returned, but no token expired message received.” when editing data source credentials on Power BI | Check whether your Databricks workspace is publicly accessible. If your workspace is using Private Link or IP access lists, then you may need to configure a Power BI on-premises gateway. |

Connect Power BI Desktop to Azure Databricks

You can connect Power BI Desktop to your Azure Databricks clusters and Databricks SQL warehouses. You can also publish Power BI reports to the Power BI service and enable users to access the underlying Azure Databricks data using single sign-on (SSO), passing along the same Microsoft Entra ID credentials they use to access the report.

Requirements

Power BI Desktop 2.85.681.0 or above. To use data managed by Unity Catalog with Power BI, you must use Power BI Desktop 2.98.683.0 or above (October 2021 release).

Note

Power BI Desktop requires Windows. An alternative for other operating systems is to run Power BI Desktop on a physical host or a Windows-based virtual machine and then connect to it from your operating system.

If you use a version of Power BI Desktop below 2.85.681.0, you also need to install the Databricks ODBC driver in the same environment as Power BI Desktop.

- An Azure Databricks personal access token or your Microsoft Entra ID account credentials.

Note

As a security best practice when you authenticate with personal access tokens, Databricks recommends that you use personal access tokens belonging to service principals instead of workspace users. To create tokens for service principals, see Manage tokens for a service principal.

Connect Power BI Desktop to Azure Databricks using Partner Connect

You can use Partner Connect to connect to a cluster or SQL warehouse from Power BI Desktop in just a few clicks.

Make sure your Azure Databricks account, workspace, and the signed-in user meet the requirements for Partner Connect.

In the sidebar, click

Marketplace.

Marketplace.In Partner Connect integrations, click View all.

Click the Power BI tile.

In the Connect to partner dialog, for Compute, choose the name of the Azure Databricks compute resource that you want to connect.

Choose Download connection file.

Open the downloaded connection file, which starts Power BI Desktop.

In Power BI Desktop, enter your authentication credentials:

- Personal Access Token: Enter your Azure Databricks personal access token.

- Microsoft Entra ID: Click Sign in and then follow the on-screen instructions.

Click Connect.

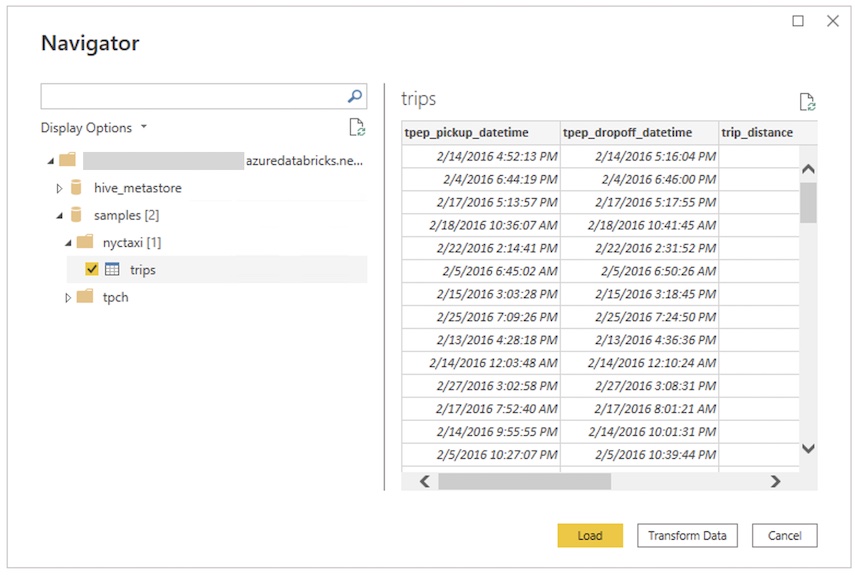

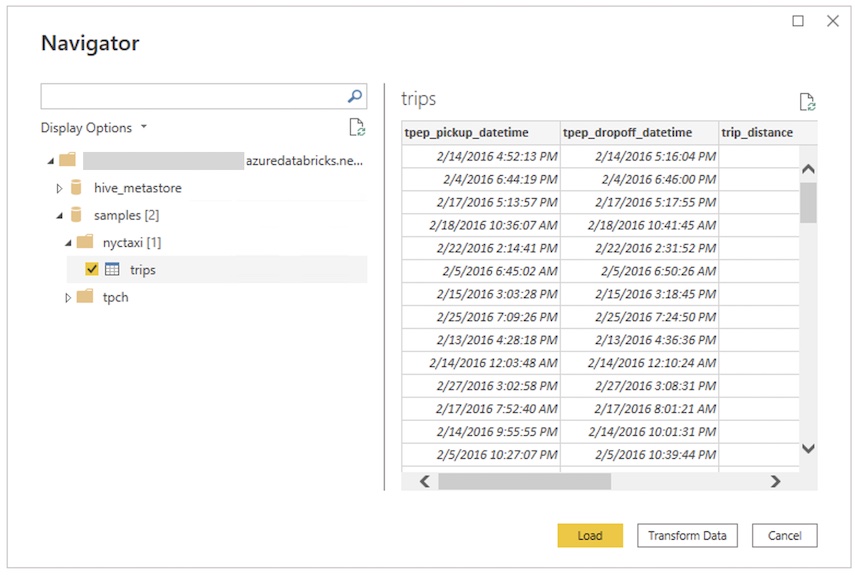

Select the Azure Databricks data to query from the Power BI Navigator.

Connect Power BI Desktop to Azure Databricks manually

Follow these instructions, depending on your chosen authentication method, to connect to a cluster or SQL warehouse with Power BI Desktop. Databricks SQL warehouses are recommended when using Power BI in DirectQuery mode.

Note

To connect faster with Power BI Desktop, use Partner Connect.

Start Power BI Desktop.

Click Get data or File > Get data.

Click Get data to get started.

Search for Databricks, then click the connector:

- Azure Databricks

Click Connect.

Enter the Server Hostname and HTTP Path.

Select your Data Connectivity mode. For information about the difference between Import and DirectQuery, see Use DirectQuery in Power BI Desktop.

Click OK.

Click your authentication method:

Personal Access Token: Enter your

Azure Databricks personal access token.

Microsoft Entra ID: Click Sign in and then follow the on-screen instructions.

Click Connect.

Select the Azure Databricks data to query from the Power BI Navigator. If Unity Catalog is enabled for your workspace, select a catalog before you select a schema and a table.

Using a custom SQL query

The Databricks connector provides the Databricks.Query data source that allows a user to provide a custom SQL query.

Follow the steps described in Connect with Power BI Desktop to create a connection, using Import as the data connectivity mode.

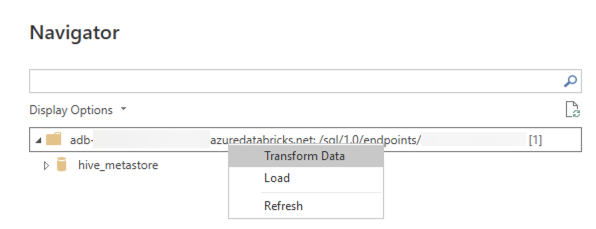

In the Navigator, right click the top-most item containing the selected host name and HTTP path and click Transform Data to open the Power Query Editor.

In the function bar, replace the function name

Databricks.CatalogswithDatabricks.Queryand apply the change. This creates a Power Query function that takes a SQL query as parameter.Enter the SQL query in the parameter field and click Invoke. This executes the query and a new table is created with the query results as its contents.

Access Azure Databricks data source using the Power BI service

When you publish a report to the Power BI service, you connect to Azure Databricks using a personal access token.

In the Power BI service, you can also enable single sign on (SSO) so that users can access reports built using DirectQuery storage mode by passing their Microsoft Entra ID credentials to Azure Databricks.

Publish your Power BI report from Power BI Desktop to the Power BI service.

Enable single sign on (SSO) access to the report and underlying data source.

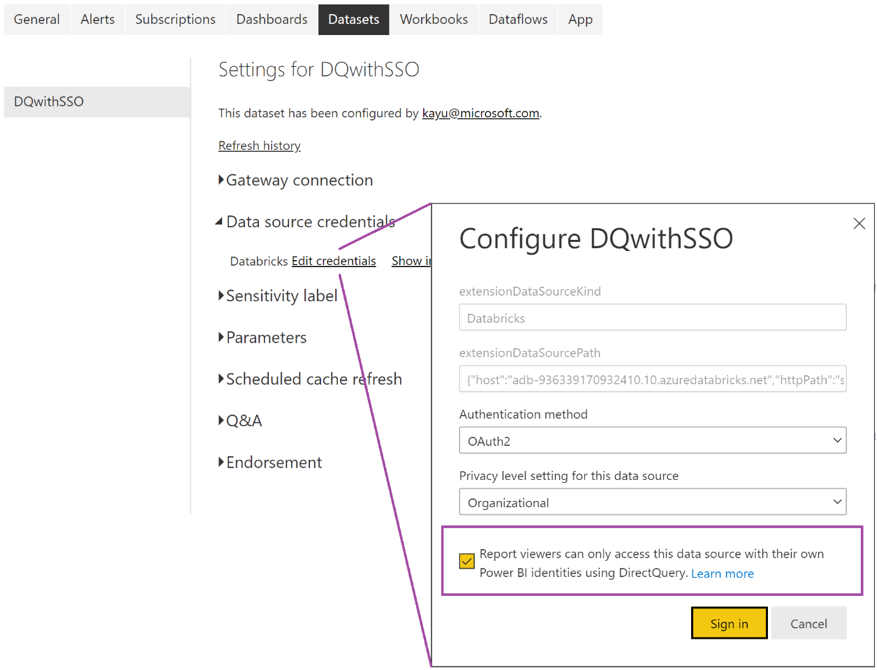

- Go to the underlying Azure Databricks dataset for the report in the Power BI service, expand Data source credentials, and click Edit credentials.

- On the configuration dialog, select Report viewers can only access this data source with their own Power BI identities using Direct Query and click Sign in.

With this option selected, access to the data source is handled using DirectQuery and managed using the Microsoft Entra ID identity of the user who is accessing the report. If you don’t select this option, only you, as the user who published the report, have access to the Azure Databricks data source.

Automated HTTP proxy detection

Power BI Desktop version 2.104.941.0 and above (May 2022 release) has built-in support for detecting Windows system-wide HTTP proxy configuration.

Power BI Desktop can automatically detect and use your Windows system-wide HTTP proxy configuration.

If the proxy server does not provide a CRL distribution point (CDP), Power BI might show the following error message:

Details: "ODBC: ERROR [HY000] [Microsoft][DriverSupport] (1200)

-The revocation status of the certificate or one of the certificates in the certificate chain is unknown."

To fix this error, complete the following steps:

Create the file

C:\Program Files\Microsoft Power BI Desktop\bin\ODBC Drivers\Simba Spark ODBC Driver\microsoft.sparkodbc.iniif it does not exist.Add the following config to your

microsoft.sparkodbc.inifile:[Driver] CheckCertRevocation=0

Power BI Delta Sharing connector

The Power BI Delta Sharing connector allows users to discover, analyze, and visualize datasets shared with them through the Delta Sharing open protocol. The protocol enables secure exchange of datasets across products and platforms by leveraging REST and cloud storage.

For connection instructions, see Power BI: Read shared data.

Limitations

- The Azure Databricks connector supports web proxy. However, automatic proxy settings defined in .pac files aren’t supported.

- In the Azure Databricks connector, the

Databricks.Querydata source is not supported in combination with DirectQuery mode. - The data that the Delta Sharing connector loads must fit into the memory of your machine. To ensure this, the connector limits the number of imported rows to the Row Limit that was set earlier.