Connect Tableau and Azure Databricks

This article shows you how to use Partner Connect to connect from Azure Databricks to Tableau Desktop and from Tableau Desktop or Tableau Cloud to Azure Databricks. This article also includes information about Tableau Server on Linux.

Note

To configure Azure Databricks sign-on from Tableau Server, see Configure Azure Databricks sign-on from Tableau Server.

When you use Azure Databricks as a data source with Tableau, you can provide powerful interactive analytics, bringing the contributions of your data scientists and data engineers to your business analysts by scaling to massive datasets.

Explore in Tableau Cloud from Azure Databricks

When you use Azure Databricks as a data source with Tableau Cloud, you can create Tableau data sources from tables or schemas directly from the Databricks UI.

Requirements

- Your data must be in Unity Catalog, and your compute (cluster) must be Unity Catalog enabled. Hive metastore is not currently supported.

Publish Azure Databricks tables to Tableau Cloud

- Sign in to your Databricks workspace and click Catalog in the sidebar to open Catalog Explorer.

- Select a compute resource from the drop-down list at the top right.

- Open a catalog and select the schema or tables to be published. Do not select from the Hive metastore or the samples catalog.

- On the upper right, click Use with BI tools for a schema or Open in a dashboard for a table.

- Select Explore in Tableau Cloud.

- Verify that the Compute and Publish data selections are the ones you want, and then click Explore in Tableau Cloud.

- After 10 to 20 seconds, a new tab opens and prompts you to sign in to your Tableau Cloud account.

- After you sign in, the Tableau page opens and prompts you to sign back in to Azure Databricks.

- After logging in to Azure Databricks, you can begin to build a dashboard in the workbook editor page.

- You can also click the Open in Tableau Cloud button to open the workbook in Tableau Cloud.

Features and notes

- You can publish either a table or a schema. When you publish a schema, you must select a table from the Tableau Data Source panel.

- Databricks enforces OAuth as the authentication mode when publishing to Tableau Cloud.

- The published data source is in draft mode and is not saved anywhere. You must save it from Tableau Cloud to make it accessible to others.

- The redirect link returned by Tableau expires after about 5 minutes.

Requirements to connect Tableau and Azure Databricks

The connection details for a compute resource or SQL warehouse, specifically the Server Hostname and HTTP Path values.

Tableau Desktop 2019.3 or above.

Databricks ODBC Driver 2.6.15 or above.

A Microsoft Entra ID (formerly Azure Active Directory) token (recommended), an Azure Databricks personal access token, or your Microsoft Entra ID account credentials.

Note

As a security best practice, when you authenticate with automated tools, systems, scripts, and apps, Databricks recommends that you use personal access tokens belonging to service principals instead of workspace users. To create tokens for service principals, see Manage tokens for a service principal.

You must be assigned one of the following Microsoft Entra ID roles:

If the admin consent workflow is configured, non-admins can request access to Tableau at sign in.

Connect Azure Databricks to Tableau Desktop using Partner Connect

You can use Partner Connect to connect a compute resource or SQL warehouse with Tableau Desktop in just a few clicks.

Make sure your Azure Databricks account, workspace, and the signed-in user all meet the requirements for Partner Connect.

In the sidebar, click Marketplace.

In Partner Connect integrations, click View all.

Click the Tableau tile.

In the Connect to partner dialog, for Compute, choose the name of the Azure Databricks compute resource that you want to connect.

Choose Download connection file.

Open the downloaded connection file, which starts Tableau Desktop.

In Tableau Desktop, enter your authentication credentials, and then click Sign In:

To use a Microsoft Entra ID token, enter token for Username and your Microsoft Entra ID token for Password.

To use an Azure Databricks personal access token, enter token for Username and your personal access token for Password.

To use your Microsoft Entra ID credentials, click Edit Connection, double-click the database in the Data tab, and then select Microsoft Entra ID in the Authentication list.

For Tableau Desktop 2021.1 and above:

- If you do not use a Microsoft Entra ID (formerly Azure Active Directory) B2B guest account or Azure Databricks on Azure Government, you can simply enter https://login.microsoftonline.com/common as the OAuth Endpoint.

- If you do use a Microsoft Entra ID B2B guest account or Azure Databricks on Azure Government, contact your administrator to obtain a dedicated Microsoft Entra ID service principal.

Note

If you aren’t an admin, a Need admin approval error displays. Ask a cloud application administrator or an application administrator to grant you permission to connect to Tableau, then try to sign in again.

If your Microsoft Entra ID account has the admin consent workflow enabled, Tableau Desktop prompts you to request access to Tableau. After a cloud application administrator or an application administrator approves the request, try to sign in again.


Connect Tableau Desktop to Azure Databricks

Follow these instructions to connect from Tableau Desktop to a compute resource or SQL warehouse.

Note

To connect faster with Tableau Desktop, use Partner Connect.

Start Tableau Desktop.

Click File > New.

On the Data tab, click Connect to Data.

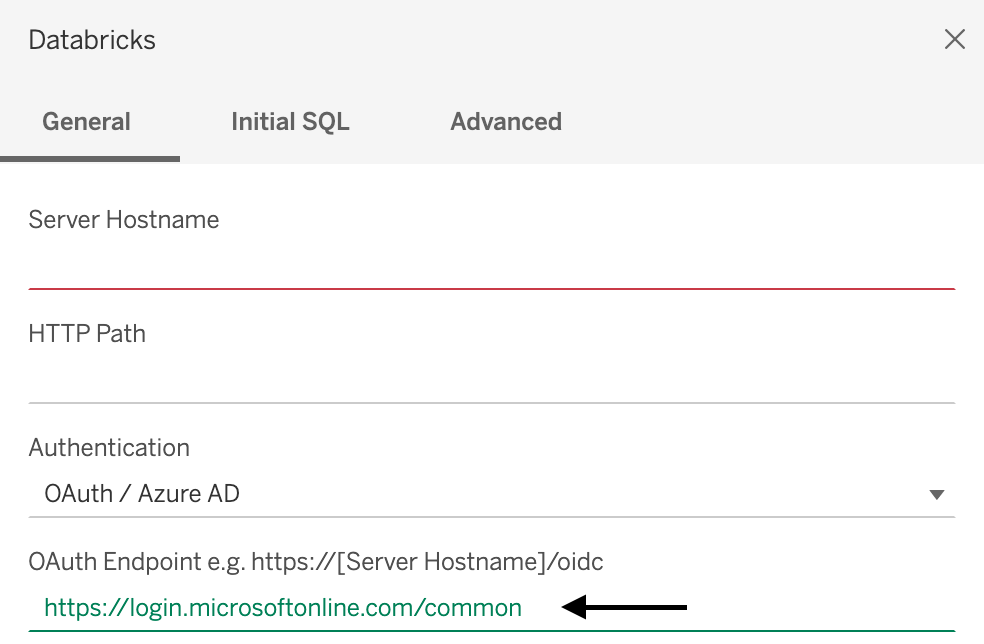

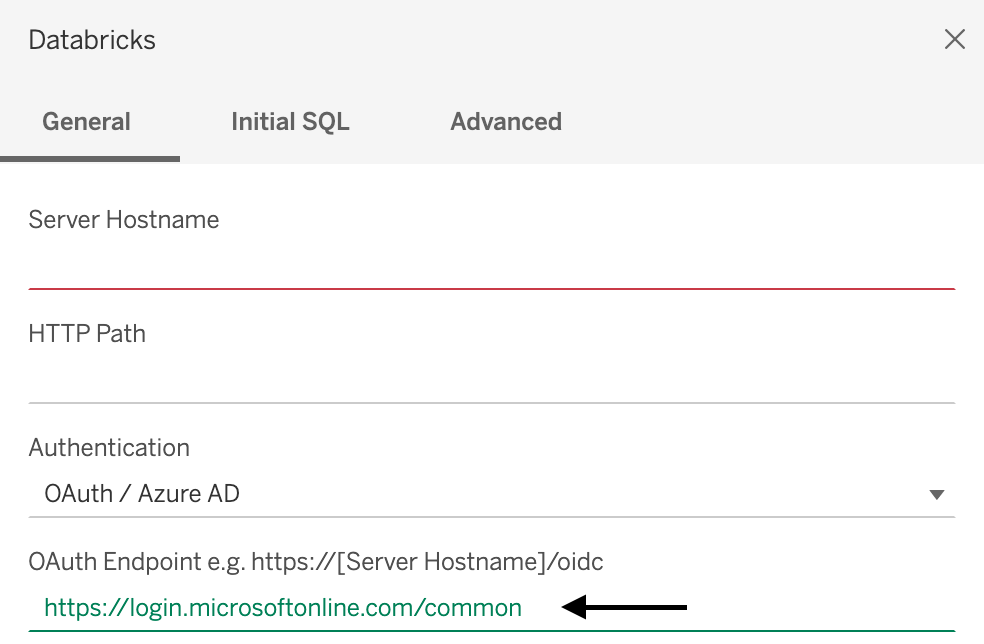

In the list of connectors, click Databricks.

Enter the Server Hostname and HTTP Path.

For Authentication, choose your authentication method, enter your authentication credentials, and then click Sign in.

To use a Microsoft Entra ID token, select Personal Access Token and enter your Microsoft Entra ID token for Password.

To use an Azure Databricks personal access token, select Personal Access Token and enter your personal access token for Password.

To use your Microsoft Entra ID credentials, select Microsoft Entra ID.

For Tableau Desktop 2021.1 and above:

- If you do not use a Microsoft Entra ID (formerly Azure Active Directory) B2B guest account or Azure Databricks on Azure Government, you can simply enter https://login.microsoftonline.com/common as the OAuth Endpoint.

- If you do use a Microsoft Entra ID B2B guest account or Azure Databricks on Azure Government, contact your administrator to obtain a dedicated Microsoft Entra ID service principal.

Note

If you aren’t an admin, a Need admin approval error displays. Ask a cloud application administrator or an application administrator to grant you permissions to connect to Tableau, then try to sign in again.

If your Microsoft Entra ID account has the admin consent workflow enabled, Tableau Desktop prompts you to request access to Tableau. After a cloud application administrator or an application administrator approves the request, try to sign in again.

If Unity Catalog is enabled for your workspace, additionally set the default catalog. In the Advanced tab, for Connection properties, add Catalog=<catalog-name>. To change the default catalog, in the Initial SQL tab, enter USE CATALOG <catalog-name>.
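Tableau builds the ODBC connection for you, but when troubleshooting it can help to see how the values from the steps above (Server Hostname, HTTP Path, a token, and an optional default catalog) fit together in a DSN-less ODBC connection string. The following Python sketch assumes personal access token authentication and the Databricks (Simba Spark) ODBC driver keys Host, Port, HTTPPath, SSL, ThriftTransport, AuthMech, UID, and PWD. The helper name and every value in the usage lines are hypothetical placeholders.

```python
from __future__ import annotations


def build_odbc_connection_string(
    driver: str,
    server_hostname: str,
    http_path: str,
    access_token: str,
    catalog: str | None = None,
) -> str:
    """Hypothetical helper: DSN-less connection string for personal access token auth."""
    params = {
        "Driver": driver,
        "Host": server_hostname,   # Server Hostname from the connection details
        "Port": "443",
        "HTTPPath": http_path,     # HTTP Path from the connection details
        "SSL": "1",
        "ThriftTransport": "2",    # HTTP transport
        "AuthMech": "3",           # user name and password
        "UID": "token",            # literal user name "token", as entered in Tableau
        "PWD": access_token,       # the personal access token
    }
    if catalog:
        # Same property you would add under Advanced > Connection properties in Tableau.
        params["Catalog"] = catalog
    # Values containing ";" would need escaping, which this sketch does not handle.
    return ";".join(f"{key}={value}" for key, value in params.items())


if __name__ == "__main__":
    print(build_odbc_connection_string(
        driver="/opt/simba/spark/lib/64/libsparkodbc_sb64.so",
        server_hostname="adb-1234567890123456.7.azuredatabricks.net",  # placeholder
        http_path="/sql/1.0/warehouses/abcdef1234567890",             # placeholder
        access_token="<personal-access-token>",
        catalog="main",                                               # placeholder
    ))
```

Microsoft Entra ID tokens are configured differently in the driver and are not covered by this sketch.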

Connect Tableau Cloud to Azure Databricks

Follow these instructions to connect to a compute resource or SQL warehouse from Tableau Cloud.

- Start a new workbook.

- On the menu bar, click Data > New Data Source.

- On the Connect to Data page, click Connectors > Databricks.

- On the Azure Databricks page, enter the Server Hostname and HTTP Path values.

- Select your authentication method and enter the information requested (if any).

- Click Sign In.

Tableau Server on Linux

Edit /etc/odbcinst.ini to include the following:

```ini
[Simba Spark ODBC Driver 64-bit]
Description=Simba Spark ODBC Driver (64-bit)
Driver=/opt/simba/spark/lib/64/libsparkodbc_sb64.so
```

Note

Tableau recommends a 64-bit processing architecture for Tableau Server on Linux.
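If you manage Tableau Server hosts with scripts, a minimal Python sketch like the following can add the driver entry above idempotently. It assumes the section name and driver path shown above and uses the standard library's configparser. Because it rewrites the whole file, comments in the existing odbcinst.ini are not preserved, so back up the file first and run with sufficient privileges. The function name is hypothetical.

```python
import configparser
from pathlib import Path

# Values copied from the odbcinst.ini entry above.
DRIVER_SECTION = "Simba Spark ODBC Driver 64-bit"
DRIVER_ENTRY = {
    "Description": "Simba Spark ODBC Driver (64-bit)",
    "Driver": "/opt/simba/spark/lib/64/libsparkodbc_sb64.so",
}


def ensure_driver_entry(ini_path: Path) -> bool:
    """Add or update the driver section; return True only if the file was rewritten."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key case (configparser lowercases keys by default)
    if ini_path.exists():
        parser.read(ini_path)

    if not parser.has_section(DRIVER_SECTION):
        parser.add_section(DRIVER_SECTION)
    section = parser[DRIVER_SECTION]
    if all(section.get(key) == value for key, value in DRIVER_ENTRY.items()):
        return False  # already configured; leave the file untouched

    for key, value in DRIVER_ENTRY.items():
        section[key] = value
    with ini_path.open("w") as handle:
        # Write key=value without spaces, matching the snippet above.
        parser.write(handle, space_around_delimiters=False)
    return True
```

For example, ensure_driver_entry(Path("/etc/odbcinst.ini")) returns True the first time it writes the entry and False on later runs.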

Publish and refresh a workbook on Tableau Cloud from Tableau Desktop

This section shows how to publish a workbook from Tableau Desktop to Tableau Cloud and keep it updated when the data source changes. You need a workbook in Tableau Desktop and a Tableau Cloud account.

- Extract the workbook’s data from Tableau Desktop: in Tableau Desktop, with the workbook that you want to publish displayed, click Data > <data-source-name> > Extract Data.

- In the Extract Data dialog box, click Extract.

- Browse to a location on your local machine where you want to save the extracted data, and then click Save.

- Publish the workbook’s data source to Tableau Cloud: in Tableau Desktop, click Server > Publish Data Source > <data-source-name>.

- If the Tableau Server Sign In dialog box displays, click the Tableau Cloud link, and follow the on-screen directions to sign in to Tableau Cloud.

- In the Publish Data Source to Tableau Cloud dialog box, next to Refresh Not Enabled, click the Edit link.

- In the flyout box that displays, for Authentication, change Refresh not enabled to Allow refresh access.

- Click anywhere outside of this flyout to hide it.

- Select Update workbook to use the published data source.

- Click Publish. The data source displays in Tableau Cloud.

- In Tableau Cloud, in the Publishing Complete dialog box, click Schedule, and follow the on-screen directions.

- Publish the workbook to Tableau Cloud: in Tableau Desktop, with the workbook you want to publish displayed, click Server > Publish Workbook.

- In the Publish Workbook to Tableau Cloud dialog box, click Publish. The workbook displays in Tableau Cloud.

Tableau Cloud checks for changes to the data source according to the schedule you set, and updates the published workbook if changes are detected.

For more information, see the following on the Tableau website:

- Publish a Data Source

- Comprehensive Steps to Publish a Workbook

- Schedule Extract Refreshes as You Publish a Workbook

Best practices and troubleshooting

The two fundamental actions to optimize Tableau queries are:

- Reduce the number of records being queried and visualized in a single chart or dashboard.

- Reduce the number of queries being sent by Tableau in a single chart or dashboard.

Deciding which to try first depends on your dashboard. If you have a number of different charts for individual users all in the same dashboard, it’s likely that Tableau is sending too many queries to Azure Databricks. If you only have a couple of charts but they take a long time to load, there are probably too many records being returned by Azure Databricks to load effectively.

Tableau performance recording, available on both Tableau Desktop and Tableau Server, can help you identify performance bottlenecks by identifying processes that cause latency when you run a particular workflow or dashboard.

Enable performance recording to debug any Tableau issue

For instance, if query execution is the problem, you know it has to do with the data engine process or the data source that you are querying. If the visual layout is performing slowly, you know that the problem is in VizQL.

If the performance recording says that the latency is in the executing query, it is likely that too much time is taken by Azure Databricks to return the results or by the ODBC/Connector overlay processing the data into SQL for VizQL. When this occurs, you should analyze what you are returning and attempt to change the analytical pattern to have a dashboard per group, segment, or article instead of trying to cram everything into one dashboard and relying on Quick Filters.

If the poor performance is caused by sorting or visual layout, the problem may be the number of marks the dashboard is trying to return. Azure Databricks can return one million records quickly, but Tableau may not be able to compute the layout and sort the results. If this is a problem, aggregate the query and drill into the lower levels. You can also try a bigger machine since Tableau is only constrained by physical resources on the machine on which it is running.

For an in-depth tutorial on the performance recorder, see Create a Performance Recording.
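As a rough illustration of the triage described above, the following Python sketch totals the time spent per event in a performance recording and maps the dominant event to the guidance in this section. The event labels and the (label, seconds) input format are assumptions for illustration, so check the labels your own recording shows. The function and mapping are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical event labels mapped to the causes discussed in this section.
GUIDANCE = {
    "Executing Query": "Azure Databricks or the ODBC/connector layer: return less per dashboard",
    "Computing Layouts": "VizQL layout: too many marks; aggregate and drill down",
    "Sorting Data": "Too many marks to sort; aggregate and drill down",
}


def dominant_bottleneck(events: list[tuple[str, float]]) -> tuple[str, str] | None:
    """Return (event label, suggested next step) for the event with the most total time."""
    totals: dict[str, float] = defaultdict(float)
    for label, seconds in events:
        totals[label] += seconds
    if not totals:
        return None
    worst = max(totals, key=lambda label: totals[label])
    return worst, GUIDANCE.get(worst, "Not covered here; inspect the recording directly")
```

Summing per label matters because the same event type usually appears many times in one recording.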

Performance on Tableau Server versus Tableau Desktop

In general, a workflow that runs on Tableau Desktop is no faster on Tableau Server. A dashboard that doesn’t execute on Tableau Desktop will not execute on Tableau Server.

Troubleshooting in Tableau Desktop is much easier, because Tableau Server has more processes to consider. If things work in Tableau Desktop but not in Tableau Server, you can safely narrow the issue down to the processes in Tableau Server that aren’t in Tableau Desktop.

Configuration

By default, the parameters from the connection URL override those in the Simba ODBC DSN. There are two ways you can customize the ODBC configurations from Tableau:

- .tds file for a single data source:

  - Follow the instructions in Save Data Sources to export the .tds file for the data source.

  - Find the property line odbc-connect-string-extras='' in the .tds file and set the parameters. For example, to enable AutoReconnect and UseNativeQuery, you can change the line to odbc-connect-string-extras='AutoReconnect=1,UseNativeQuery=1'.

  - Reload the .tds file by reconnecting the connection.

  The compute resource is optimized to use less heap memory for collecting large results, so it can serve more rows per fetch block than Simba ODBC’s default. Append RowsFetchedPerBlock=100000 to the value of the odbc-connect-string-extras property, separated from any existing parameters by a comma.

- .tdc file for all data sources:

  - If you have never created a .tdc file, you can add TableauTdcExample.tdc to the folder Documents/My Tableau Repository/Datasources.

  - Add the file to all developers’ Tableau Desktop installations, so that it works when the dashboards are shared.
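Because the odbc-connect-string-extras value is a comma-separated list of key=value pairs (as in the AutoReconnect and UseNativeQuery example above), appending a setting such as RowsFetchedPerBlock by hand is easy to get wrong. The following Python sketch merges new settings into an existing value and replaces any key that is already present. The helper name is hypothetical, and it assumes no value contains a comma.

```python
def merge_connect_string_extras(extras: str, updates: dict[str, str]) -> str:
    """Merge key=value settings into a comma-separated odbc-connect-string-extras value."""
    settings: dict[str, str] = {}
    for item in extras.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate an empty value ('') and stray commas
        key, _, value = item.partition("=")
        settings[key.strip()] = value.strip()
    settings.update(updates)  # updated keys keep their position; new keys are appended
    return ",".join(f"{key}={value}" for key, value in settings.items())


# Add the larger fetch block size to the extras from the .tds example.
print(merge_connect_string_extras(
    "AutoReconnect=1,UseNativeQuery=1", {"RowsFetchedPerBlock": "100000"}
))
# AutoReconnect=1,UseNativeQuery=1,RowsFetchedPerBlock=100000
```

Paste the result between the quotes of odbc-connect-string-extras='' in the .tds file.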

Optimize charts (worksheets)

There are a number of tactical chart optimizations that can help you improve the performance of your Tableau worksheets.

For filters that don’t change often and are not meant to be interacted with, use context filters, which speed up execution time.

Another good rule of thumb is to use if/else statements instead of case/when statements in your queries.

Tableau can push down filters into data sources, which can improve query speeds. For more information about data source push down filters, see Filtering Across Multiple Data Sources Using a Parameter and Filter Data Across Multiple Data Sources.

Try to avoid table calculations, as they scan the full dataset. For more information about table calculations, see Transform Values with Table Calculations.

Optimize dashboards

The following are some tips and troubleshooting exercises you can apply to improve your Tableau dashboard performance.

With Tableau dashboards connected to Azure Databricks, quick filters on individual dashboards that serve a number of different users, functions, or segments can be a common source of issues. You can attach quick filters to all of the charts on the dashboard. One quick filter on a dashboard with five charts causes a minimum of 10 queries to be sent to Azure Databricks. This can grow to greater numbers when more filters are added, and it can cause performance problems because Spark is not built to handle many concurrent queries starting at the same exact moment. This becomes more problematic when the Azure Databricks cluster or SQL warehouse that you are using is not large enough to handle the high volume of queries.

As a first step, we recommend that you use Tableau performance recording to troubleshoot what might be causing the issue.

If the poor performance is caused by sorting or visual layout, the problem could be the number of marks the dashboard is trying to return. Azure Databricks can return one million records quickly, but Tableau may not be able to compute the layout and sort the results. If this is a problem, aggregate the query and drill into the lower levels. You can also try a bigger machine, as Tableau is constrained only by the physical resources on the machine on which it is running.

For information about drilling down in Tableau, see Drill down into the details.

If you see many granular marks, this is often a poor analytical pattern because it doesn’t provide insight. Drilling down from higher levels of aggregation makes more sense and reduces the number of records that must be processed and visualized.

Use actions to optimize dashboards

Use Tableau actions to click a mark (for example, a state on a map) and be sent to another dashboard that filters based on the state you click. Using actions reduces the need for multiple filters on one dashboard and the number of records that must be generated. (You are setting an action to not generate records until it gets a predicate to filter on.)

For more information, see Actions and 6 Tips to Make Your Dashboards More Performant.

Caching

Caching data is a good way to improve the performance of worksheets or dashboards.

Caching in Tableau

Tableau has four layers of caching before it goes back to the data, whether that data is in a live connection or an extract:

- Tiles: If someone loads the same dashboard and nothing changes, Tableau tries to reuse the same tiles for the charts. This is similar to Google Maps tiles.

- Model: If the tiles cache can’t be used, the model cache of mathematical calculations is used to generate visualizations. Tableau Server attempts to use the same models.

- Abstract: Aggregate results of queries are stored as well. This is the third “defense” level. If a previous query returned Sum(Sales), Count(Orders), and Sum(Cost), and a later query needs only Sum(Sales), Tableau reuses that result.

- Native Cache: If the query is the same as another one, Tableau uses the same results. This is the last level of caching. If this fails, Tableau goes to the data.
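To make the abstract-cache behavior concrete, the following Python sketch is a toy model, not Tableau’s implementation: a cached aggregate result can answer a later query with the same filters if it already contains every requested measure. The class names, the single filter-string key, and the one-row results are simplifying assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class AggregateQuery:
    filters: str              # simplified: filters represented as one normalized string
    measures: frozenset[str]  # for example {"SUM(Sales)", "COUNT(Orders)"}


@dataclass
class AbstractCache:
    """Toy model: reuse a stored aggregate row whose measures cover a new query."""
    entries: list[tuple[AggregateQuery, dict[str, float]]] = field(default_factory=list)

    def store(self, query: AggregateQuery, row: dict[str, float]) -> None:
        self.entries.append((query, row))

    def lookup(self, query: AggregateQuery) -> dict[str, float] | None:
        for cached, row in self.entries:
            if cached.filters == query.filters and query.measures <= cached.measures:
                # Answer from cache by projecting only the requested measures.
                return {measure: row[measure] for measure in query.measures}
        return None  # miss: the next layer (native cache, then the data) is consulted


cache = AbstractCache()
cache.store(
    AggregateQuery("Region = 'West'", frozenset({"SUM(Sales)", "COUNT(Orders)", "SUM(Cost)"})),
    {"SUM(Sales)": 1200.0, "COUNT(Orders)": 48.0, "SUM(Cost)": 700.0},
)
print(cache.lookup(AggregateQuery("Region = 'West'", frozenset({"SUM(Sales)"}))))
# {'SUM(Sales)': 1200.0}
```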

Caching frequency in Tableau

Tableau has administrative settings for caching more or less frequently. If the server is set to Refresh Less Often, Tableau keeps data in the cache for up to 12 hours. If the server is set to Refresh More Often, Tableau returns to the data on every page refresh.

Customers who use the same dashboard repeatedly, for example, “Monday morning pipeline reports”, should be on a server set to Refresh Less Often so that the dashboards all use the same cache.
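The two settings above amount to different freshness rules for cached data. The following Python sketch models them using the numbers stated above (up to 12 hours for Refresh Less Often, no reuse across page refreshes for Refresh More Often). The enum, constant, and function names are hypothetical; the server applies these rules internally.

```python
from datetime import datetime, timedelta
from enum import Enum


class CacheMode(Enum):
    REFRESH_LESS_OFTEN = "less_often"
    REFRESH_MORE_OFTEN = "more_often"


MAX_AGE_LESS_OFTEN = timedelta(hours=12)  # "keeps data in the cache for up to 12 hours"


def can_reuse_cache(mode: CacheMode, cached_at: datetime, now: datetime) -> bool:
    """Return True if a cached result may be served instead of querying the data again."""
    if mode is CacheMode.REFRESH_MORE_OFTEN:
        return False  # every page refresh goes back to the data
    return now - cached_at <= MAX_AGE_LESS_OFTEN
```

Under Refresh Less Often, a Monday-morning report opened repeatedly within the same 12-hour window is served from the same cache entry.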

Cache warming in Tableau

In Tableau, you can warm the cache by setting up a subscription for the dashboard to send before you want the dashboard viewed. (The dashboard must be rendered to generate the subscription email image.) See Warming the Tableau Server Cache Using Subscriptions.

Tableau Desktop: Error The drivers... are not properly installed

Issue: When you try to connect Tableau Desktop to Databricks, Tableau displays an error message in the connection dialog with a link to the driver download page, where you can find driver links and installation instructions.

Cause: Your installation of Tableau Desktop is not running a supported driver.

Resolution: Download the Databricks ODBC driver version 2.6.15 or above.

See also: Error “The drivers… are not properly installed” on the Tableau website.
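To check an installed driver or Tableau Desktop version against the minimums listed in this article (ODBC driver 2.6.15, Tableau Desktop 2019.3), compare dotted version numbers numerically rather than as text, because "2.6.9" sorts after "2.6.15" as a string. The following Python sketch uses hypothetical helper names; reading the installed version (for example, from the Drivers tab of the ODBC Data Source Administrator on Windows) is left to you.

```python
MIN_ODBC_DRIVER = "2.6.15"
MIN_TABLEAU_DESKTOP = "2019.3"


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '2.6.16.1019' into (2, 6, 16, 1019)."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare dotted versions numerically, padding the shorter one with zeros."""
    have, need = parse_version(installed), parse_version(minimum)
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need


print(meets_minimum("2.6.9", MIN_ODBC_DRIVER))         # False
print(meets_minimum("2.6.16.1019", MIN_ODBC_DRIVER))   # True
print(meets_minimum("2023.1.0", MIN_TABLEAU_DESKTOP))  # True
```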

Primary / foreign key constraints

To propagate primary key (PK) and foreign key (FK) constraints from Azure Databricks to Tableau, you must understand the capabilities and limitations of both platforms regarding constraints.

Understanding Azure Databricks constraints

Azure Databricks supports primary and foreign key constraints starting from Databricks Runtime 15.2. These constraints are informational and not enforced by default, meaning they do not prevent data integrity violations but can be used to optimize queries and provide metadata about data relationships. See Declare primary key and foreign key relationships.
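Constraints are declared with SQL DDL. As a hedged sketch, the following Python helpers generate ALTER TABLE statements that you could run in a SQL editor or notebook so that Tableau has key constraints to detect. The table, column, and constraint names are hypothetical. Primary key columns must be NOT NULL, which is why the sketch sets that first; see Declare primary key and foreign key relationships for the full syntax and options.

```python
def primary_key_ddl(table: str, constraint: str, columns: list[str]) -> list[str]:
    """Statements that declare an informational primary key on a Unity Catalog table."""
    statements = [f"ALTER TABLE {table} ALTER COLUMN {col} SET NOT NULL" for col in columns]
    statements.append(
        f"ALTER TABLE {table} ADD CONSTRAINT {constraint} PRIMARY KEY ({', '.join(columns)})"
    )
    return statements


def foreign_key_ddl(table: str, constraint: str, columns: list[str],
                    parent: str, parent_columns: list[str]) -> str:
    """Statement that declares an informational foreign key referencing a parent key."""
    if len(columns) != len(parent_columns):
        raise ValueError("foreign key and referenced key need the same number of columns")
    return (
        f"ALTER TABLE {table} ADD CONSTRAINT {constraint} "
        f"FOREIGN KEY ({', '.join(columns)}) "
        f"REFERENCES {parent} ({', '.join(parent_columns)})"
    )


for stmt in primary_key_ddl("main.sales.customers", "customers_pk", ["customer_id"]):
    print(stmt)
print(foreign_key_ddl("main.sales.orders", "orders_customers_fk", ["customer_id"],
                      "main.sales.customers", ["customer_id"]))
```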

Understanding how Tableau uses constraints to create table relationships

Tableau does not directly enforce primary and foreign key constraints. Instead, Tableau uses relationships to model data connections. To work with constraints in Tableau, you must understand that Tableau’s data model offers two levels of modeling: a logical layer and a physical layer. See Tableau Data Model. The implications of this two-level data model on Azure Databricks constraints being recognized as relationships in Tableau are discussed below.

Connecting Azure Databricks to Tableau

When you connect Azure Databricks to Tableau, Tableau attempts to create relationships between tables at the physical layer. It bases these relationships on the primary and foreign key constraints defined in Azure Databricks; if no key constraints are defined, Tableau uses matching column names to auto-generate joins. At the logical layer, only single-column name matches are used to determine a relationship. At the physical layer, column name matching detects both simple (single-column) and composite (multi-column) key relationships.

If Tableau cannot determine the matching fields, you must manually specify the join relationship between the two tables at the physical layer by supplying the columns, condition, and type of constraint. To shift from the logical layer in the UI to the physical layer, double-click the table at the logical layer.
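The detection rules above can be summarized as a toy model in Python. This is not Tableau’s algorithm; it encodes the rules as this article states them, plus one labeled assumption: at the logical layer, the sketch accepts a name match only when exactly one column name is shared. An empty result corresponds to the case where you must specify the join manually at the physical layer. The function name is hypothetical.

```python
from __future__ import annotations

Pair = tuple[str, str]


def infer_relationship(
    left_columns: list[str],
    right_columns: list[str],
    layer: str,
    declared_keys: list[Pair] | None = None,
) -> list[Pair]:
    """Toy model of the rules above; an empty list means you must define the join yourself."""
    if layer not in ("logical", "physical"):
        raise ValueError("layer must be 'logical' or 'physical'")
    if layer == "physical" and declared_keys:
        return list(declared_keys)  # PK/FK constraints from Azure Databricks take priority
    right = set(right_columns)
    shared = [col for col in left_columns if col in right]
    if layer == "logical":
        # Assumption: only an unambiguous single-column name match is used.
        return [(shared[0], shared[0])] if len(shared) == 1 else []
    # Physical layer: all matching names, so composite keys are detected too.
    return [(col, col) for col in shared]
```

For example, order lines keyed on order_id and line_no in two tables yield a composite match at the physical layer but no automatic match at the logical layer in this model.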