In this section, you'll learn how to create and use native external tables in Synapse SQL pools. Native external tables have better performance when compared to external tables with TYPE=HADOOP in their external data source definition. This is because native external tables use native code to access external data.

External tables are useful when you want to control access to external data in Synapse SQL pool. External tables are also useful if you want to use tools, such as Power BI, in conjunction with Synapse SQL pool. External tables can access two types of storage:

- Public storage where users access public storage files.

- Protected storage where users access storage files using a SAS credential, a Microsoft Entra identity, or the managed identity of the Synapse workspace.
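As a rough sketch of the protected-storage case, the statements below create a database scoped credential and attach it to an external data source. The names SasCredential, WorkspaceIdentity, and ProtectedStorage, and the storage URL, are hypothetical placeholders and not objects used elsewhere in this article. The sketch also assumes the database already has a master key.

```sql
-- Hypothetical names and URL: replace them with your own values.

-- Option 1: authenticate with a shared access signature (SAS) token.
CREATE DATABASE SCOPED CREDENTIAL SasCredential
WITH IDENTITY = 'SHARED ACCESS SIGNATURE',
     SECRET = '<your SAS token>';
GO

-- Option 2: authenticate with the managed identity of the Synapse workspace.
CREATE DATABASE SCOPED CREDENTIAL WorkspaceIdentity
WITH IDENTITY = 'Managed Identity';
GO

-- Attach one of the credentials to the data source.
-- Omitting CREDENTIAL leaves access to the caller's Microsoft Entra identity
-- (or to anonymous access for public storage).
CREATE EXTERNAL DATA SOURCE ProtectedStorage
WITH (
    LOCATION = 'https://<storage-account>.blob.core.windows.net/<container>',
    CREDENTIAL = SasCredential
);
GO
```

External tables that reference ProtectedStorage would then read files with the attached credential instead of the caller's own identity.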

Note

In dedicated SQL pools you can only use native external tables with a Parquet file type, and this feature is in public preview. If you want to use generally available Parquet reader functionality in dedicated SQL pools, or you need to access CSV or ORC files, use Hadoop external tables. Native external tables are generally available in serverless SQL pools. Learn more about the differences between native and Hadoop external tables in Use external tables with Synapse SQL.

The following table lists the data formats supported:

| Data format (Native external tables) | Serverless SQL pool | Dedicated SQL pool |
|---|---|---|
| Parquet | Yes (GA) | Yes (public preview) |
| CSV | Yes | No (alternatively, use Hadoop external tables) |
| Delta Lake | Yes | No |
| Spark | Yes | No |
| Dataverse | Yes | No |
| Azure Cosmos DB data formats (JSON, BSON, etc.) | No (alternatively, create views) | No |

Prerequisites

Your first step is to create a database where the tables will be created. The database must have a master key to protect the credentials. For more information on this, see CREATE MASTER KEY (Transact-SQL). Then create the following objects that are used in this sample:

- EXTERNAL DATA SOURCE sqlondemanddemo that references the public demo storage account, EXTERNAL DATA SOURCE nyctlc that references the publicly available Azure storage account at https://azureopendatastorage.blob.core.windows.net/nyctlc/, and EXTERNAL DATA SOURCE DeltaLakeStorage that references the Delta Lake sample folder.

CREATE EXTERNAL DATA SOURCE SqlOnDemandDemo WITH (LOCATION = 'https://fabrictutorialdata.blob.core.windows.net/sampledata/Synapse');

GO

CREATE EXTERNAL DATA SOURCE nyctlc WITH (LOCATION = 'https://azureopendatastorage.blob.core.windows.net/nyctlc/');

GO

CREATE EXTERNAL DATA SOURCE DeltaLakeStorage WITH (LOCATION = 'https://fabrictutorialdata.blob.core.windows.net/sampledata/Synapse/delta-lake');

GO

- File formats QuotedCSVWithHeaderFormat, ParquetFormat, and DeltaLakeFormat that describe the CSV, Parquet, and Delta Lake file types.

CREATE EXTERNAL FILE FORMAT QuotedCsvWithHeaderFormat WITH (FORMAT_TYPE = DELIMITEDTEXT, FORMAT_OPTIONS (FIELD_TERMINATOR = ',', STRING_DELIMITER = '"', FIRST_ROW = 2));

GO

CREATE EXTERNAL FILE FORMAT ParquetFormat WITH (FORMAT_TYPE = PARQUET);

GO

CREATE EXTERNAL FILE FORMAT DeltaLakeFormat WITH (FORMAT_TYPE = DELTA);

GO

The queries in this article will be executed on your sample database and use these objects.
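The database and master key mentioned at the start of the prerequisites have to exist before the data sources and file formats are created. A minimal sketch, assuming the placeholder database name mydbname used in the later samples and a password of your own choosing:

```sql
-- mydbname is the placeholder name used in the samples; pick your own.
CREATE DATABASE mydbname;
GO

USE mydbname;
GO

-- The master key protects credentials stored in the database.
-- Replace the placeholder with a strong password.
CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<strong password>';
GO
```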

External table on a file

You can create external tables that access data on an Azure storage account that allows access to users with a Microsoft Entra identity or a SAS key. You can create external tables the same way you create regular SQL Server external tables.

The following query creates an external table that reads the population.csv file from the Synapse SQL demo Azure storage account, which is referenced through the sqlondemanddemo data source.

Note

Change the first line in the query, that is, [mydbname], so you're using the database you created.

USE [mydbname];

GO

CREATE EXTERNAL TABLE populationExternalTable

(

[country_code] VARCHAR (5) COLLATE Latin1_General_BIN2,

[country_name] VARCHAR (100) COLLATE Latin1_General_BIN2,

[year] smallint,

[population] bigint

)

WITH (

LOCATION = 'csv/population/population.csv',

DATA_SOURCE = sqlondemanddemo,

FILE_FORMAT = QuotedCSVWithHeaderFormat

);

Native CSV tables are currently available only in the serverless SQL pools.

External table on a set of files

You can create external tables that read data from a set of files placed on Azure storage:

CREATE EXTERNAL TABLE Taxi (

vendor_id VARCHAR(100) COLLATE Latin1_General_BIN2,

pickup_datetime DATETIME2,

dropoff_datetime DATETIME2,

passenger_count INT,

trip_distance FLOAT,

fare_amount FLOAT,

tip_amount FLOAT,

tolls_amount FLOAT,

total_amount FLOAT

) WITH (

LOCATION = 'yellow/puYear=*/puMonth=*/*.parquet',

DATA_SOURCE = nyctlc,

FILE_FORMAT = ParquetFormat

);

You can specify the pattern that the files must satisfy in order to be referenced by the external table. The pattern is required only for Parquet and CSV tables. If you're using Delta Lake format, you need to specify just a root folder, and the external table will automatically find the pattern.

Note

The table is created on a partitioned folder structure, but external tables can't take advantage of partition elimination. If you want better performance by skipping the files that don't satisfy some criterion (such as a specific year or month in this case), use views on external data.
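One way to get partition elimination is a view over OPENROWSET that exposes the wildcard values through the filepath() function. The following is a sketch under that assumption. The view name TaxiView is hypothetical, and the year and month values in the filter are only illustrative.

```sql
-- TaxiView is a hypothetical name; the path and data source match the Taxi table above.
CREATE VIEW TaxiView AS
SELECT
    nyc.filepath(1) AS [year],   -- value matched by the first wildcard (puYear=*)
    nyc.filepath(2) AS [month],  -- value matched by the second wildcard (puMonth=*)
    nyc.*
FROM OPENROWSET(
        BULK 'yellow/puYear=*/puMonth=*/*.parquet',
        DATA_SOURCE = 'nyctlc',
        FORMAT = 'PARQUET'
    ) AS nyc;
GO

-- Filtering on the filepath-based columns lets the pool skip folders
-- that don't match the predicate.
SELECT COUNT(*) AS trip_count
FROM TaxiView
WHERE [year] = 2017 AND [month] = 1;
```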

External table on appendable files

The files that are referenced by an external table shouldn't be changed while the query is running. In a long-running query, the SQL pool could retry reads, read parts of the files, or even read a file multiple times. Changes to the file content would cause wrong results. Therefore, the SQL pool fails the query if it detects that the modification time of any file changed during query execution.

In some scenarios, you might want to create a table on the files that are constantly appended. To avoid the query failures due to constantly appended files, you can specify that the external table should ignore potentially inconsistent reads using the TABLE_OPTIONS setting.

CREATE EXTERNAL TABLE populationExternalTable

(

[country_code] VARCHAR (5) COLLATE Latin1_General_BIN2,

[country_name] VARCHAR (100) COLLATE Latin1_General_BIN2,

[year] smallint,

[population] bigint

)

WITH (

LOCATION = 'csv/population/population.csv',

DATA_SOURCE = sqlondemanddemo,

FILE_FORMAT = QuotedCSVWithHeaderFormat,

TABLE_OPTIONS = N'{"READ_OPTIONS":["ALLOW_INCONSISTENT_READS"]}'

);

The ALLOW_INCONSISTENT_READS read option disables the file modification time check during the query lifecycle and reads whatever is available in the files that are referenced by the external table. In appendable files, the existing content isn't updated, and only new rows are added. Therefore, the probability of wrong results is lower than with updateable files. This option might enable you to read frequently appended files without handling the errors.

This option is available only in the external tables created on CSV file format.
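The definition above reuses the name populationExternalTable from the earlier sample, so the CREATE statement fails if that table still exists. A sketch of dropping the earlier definition first, using the sys.external_tables catalog view:

```sql
-- Drop the earlier definition, if present, before re-creating the table
-- with TABLE_OPTIONS. Dropping an external table removes only its metadata;
-- the underlying files stay in storage.
IF EXISTS (SELECT 1 FROM sys.external_tables WHERE name = 'populationExternalTable')
    DROP EXTERNAL TABLE populationExternalTable;
GO
```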

Note

As the option name implies, the creator of the table accepts the risk that the results might not be consistent. With appendable files, you might get incorrect results if you force multiple reads of the underlying files by self-joining the table. In most "classic" queries, the external table just ignores rows that are appended while the query is running.
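To illustrate the self-join risk, the following sketch references the population table twice in one query. The year-over-year calculation is only an example. The concern is that each reference might be served by a separate read of the file, so rows appended between the two reads could appear on one side of the join and not the other.

```sql
-- Illustrative self-join: both aliases point at the same appendable file.
SELECT
    cur.country_name,
    cur.[year],
    cur.population - prev.population AS population_change
FROM populationExternalTable AS cur
JOIN populationExternalTable AS prev
    ON prev.country_code = cur.country_code
   AND prev.[year] = cur.[year] - 1;
```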

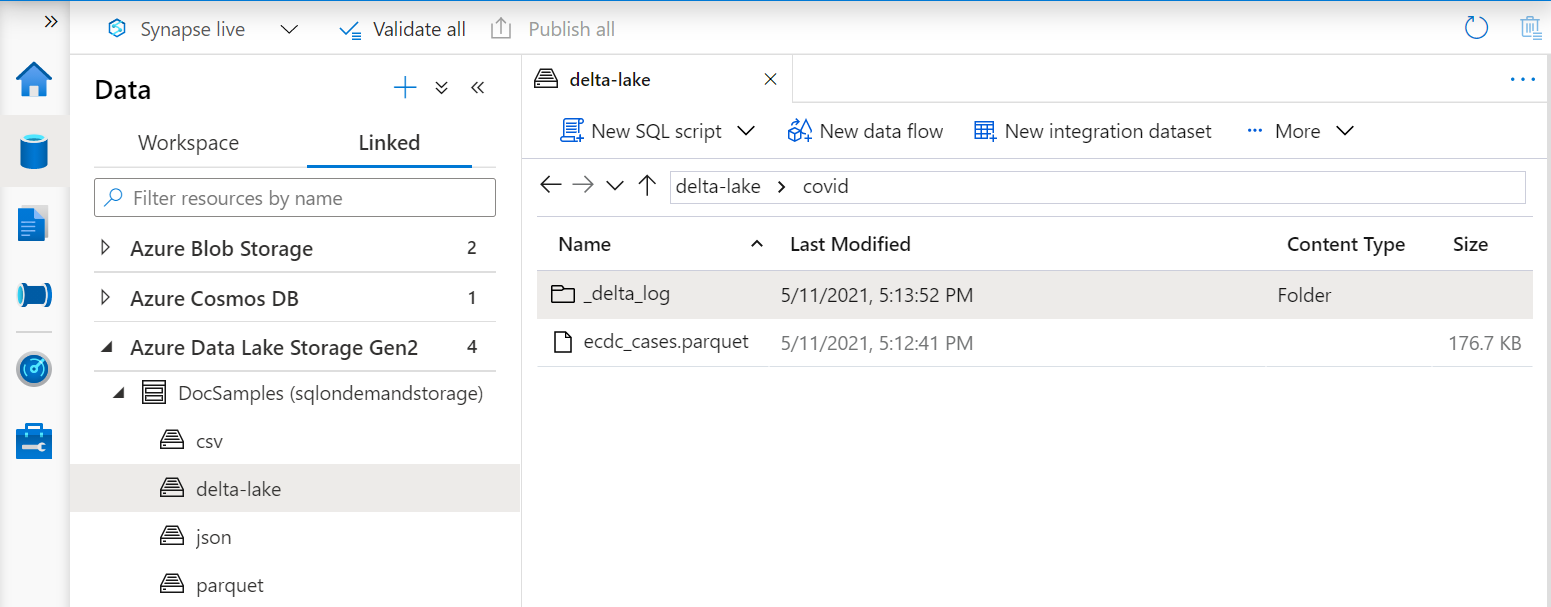

Delta Lake external table

External tables can be created on top of a Delta Lake folder. The only difference between external tables created on a single file or a file set and external tables created on the Delta Lake format is that a Delta Lake external table must reference a folder containing the Delta Lake structure.

An example of a table definition created on a Delta Lake folder is:

CREATE EXTERNAL TABLE Covid (

date_rep date,

cases int,

geo_id varchar(6)

) WITH (

LOCATION = 'covid', --> the root folder containing the Delta Lake files

data_source = DeltaLakeStorage,

FILE_FORMAT = DeltaLakeFormat

);

Delta Lake external tables can't be created on a partitioned folder. Review the other known issues on the Synapse serverless SQL pool self-help page.

Delta tables on partitioned folders

External tables in serverless SQL pools don't support partitioning on Delta Lake format. Use Delta partitioned views instead of tables if you have partitioned Delta Lake data sets.

Important

Don't create external tables on partitioned Delta Lake folders, even if they appear to work in some cases. Using unsupported features such as external tables on partitioned Delta folders might cause issues or instability in the serverless pool. Azure support won't be able to resolve an issue that involves tables on partitioned folders. You would be asked to transition to Delta partitioned views and rewrite your code to use only supported features before issue resolution can proceed.
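A Delta partitioned view can be sketched with OPENROWSET and the DELTA format, as shown below. The view name PartitionedDeltaView and the folder placeholder are assumptions. Substitute the root folder of your own partitioned Delta Lake data set, relative to the data source location.

```sql
-- PartitionedDeltaView and the folder placeholder are hypothetical.
CREATE VIEW PartitionedDeltaView AS
SELECT *
FROM OPENROWSET(
        BULK '<partitioned-delta-folder>',   -- root folder of the Delta Lake data set
        DATA_SOURCE = 'DeltaLakeStorage',
        FORMAT = 'DELTA'
    ) AS delta_rows;
GO
```

Queries against such a view can then filter on the partitioning columns of the Delta Lake data set.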

Use an external table

You can use external tables in your queries the same way you use them in SQL Server queries.

The following query demonstrates this using the population external table created in a previous section. It returns country/region names with their population in 2019 in descending order.

Note

Change the first line in the query, that is, [mydbname], so you're using the database you created.

USE [mydbname];

GO

SELECT

country_name, population

FROM populationExternalTable

WHERE

[year] = 2019

ORDER BY

[population] DESC;

Performance of this query might vary depending on region. Your workspace might not be placed in the same region as the Azure storage accounts used in these samples. For production workloads, place your Synapse workspace and Azure storage in the same region.
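The other external tables defined in this article can be queried the same way. As a small sketch, the statements below preview a few rows from the Taxi and Covid tables. TOP keeps the result small, and without an ORDER BY clause the rows returned are arbitrary.

```sql
-- Preview rows from the Parquet-based Taxi table.
SELECT TOP 10
    vendor_id, pickup_datetime, passenger_count, trip_distance, total_amount
FROM Taxi;

-- Preview rows from the Delta Lake-based Covid table.
SELECT TOP 10
    date_rep, cases, geo_id
FROM Covid;
```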