ALM adoption maturity model

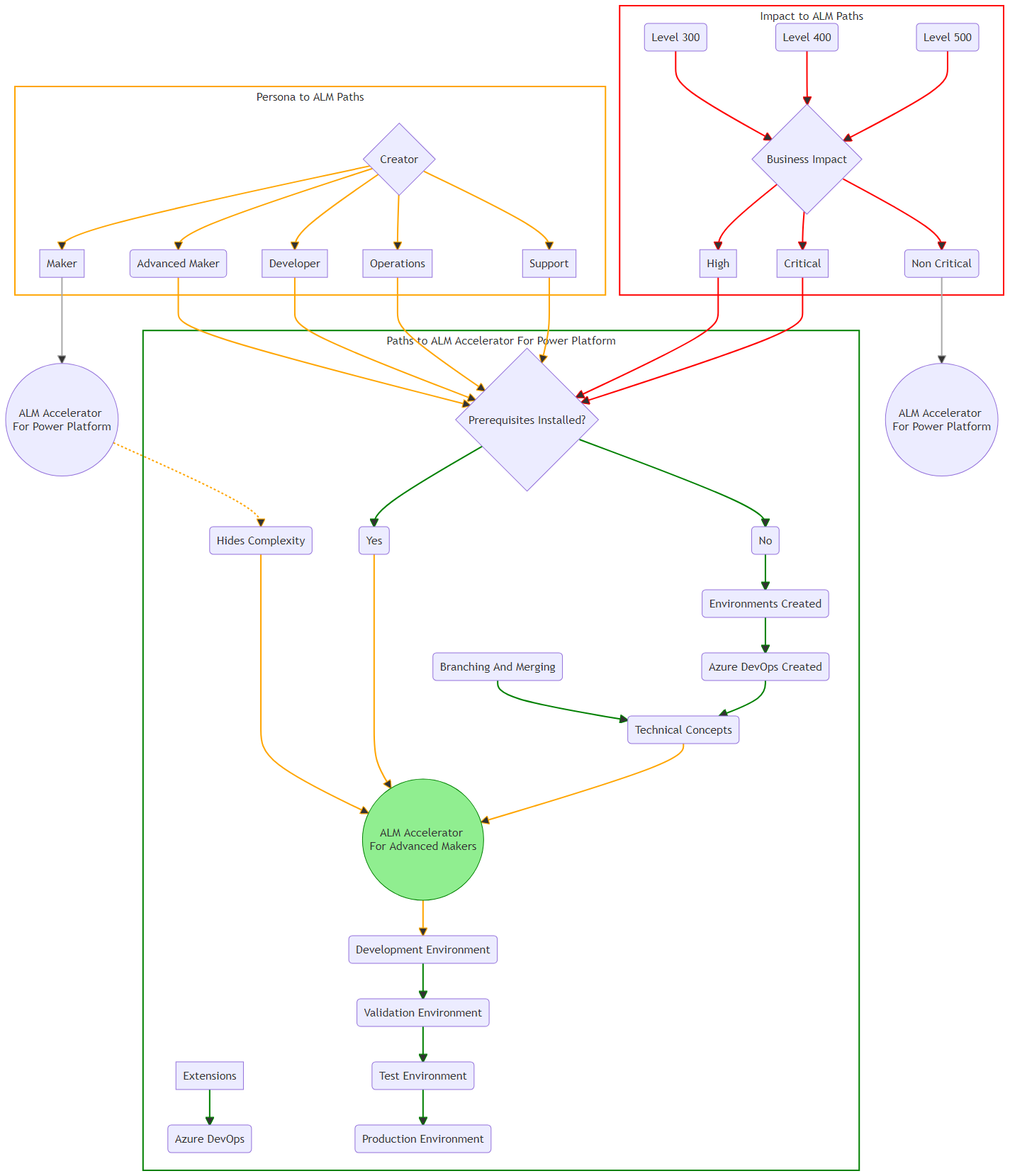

Use the levels and capabilities in the Power Platform adoption maturity model to evaluate your organization's usage of application lifecycle management (ALM) and how the ALM Accelerator for Power Platform can help.

Quick start

The ALM Accelerator is a good match when you can verify that the following considerations apply to your organization.

What is the impact of the solution?

- Are the applications classified as critical or high business impact?

- Who is using the application?

- Is it a productivity application used by everyone in your organization?

- Is it used by senior leadership to make business-impact decisions?

- Does it serve external users, such as partners and customers who rely on the solution as part of an external process?

- Are there specific compliance and auditing needs?

- How important is tracking and auditing who is using the application?

- Does the solution have compliance and auditing requirements?

Do you have the prerequisites in place?

- Do you have a defined environment strategy for development, validation, test, and production?

- Do you use Azure DevOps Services or can you integrate with Azure DevOps Services for source control and build pipelines?

- Do you have license prerequisites in place?

- Do you have Basic or above Azure DevOps licenses for makers?

- Do you have Per App or Per User Power Apps licenses to access the ALM Accelerator maker solution?

Are you looking to move to Level 300 - Defined or beyond?

Do you have an environment strategy in place?

- What is the request strategy for environments?

- Do you have a process to create development, test, and production environments?

- Is the process to request environments automated?

Do you understand and have you implemented source control concepts?

- Are branching and merging understood?

- Are pull requests used to review and merge changes?

- Are build pipelines used to integrate and deploy between validation, test, and production environments?

Are fusion development teams engaged to include low-code and pro-code teams?

Are your professional development teams familiar with branching and merging strategies and able to assist makers?

Do your pro-code and operations teams manage Azure Pipelines?

Are you a pro-code team creating components in JavaScript?

Are you integrating with OpenAPI-enabled Web APIs?

Are you using or planning to create plug-ins to extend business processes in Microsoft Dataverse?

Do you have a support plan?

- Who is supporting the application and solution?

- Do you have a formal support team to manage issues with the solution?

Leading questions

If the following leading questions reveal gaps, consider what proactive steps you can take to help grow the maturity of your people, processes, and technology to move toward advanced maker integration inside your organization.

Alignment

Is innovation driven by business areas, from the bottom up?

Is there a common vision between IT and business?

Is there a dedicated Power Platform product owner?

Is there an established Center of Excellence team?

Is Power Platform a key part of your digital transformation strategy?

Impact

Is Power Platform targeting low-complexity scenarios?

Is there limited reuse of common components and services?

Do applications allow bottom-up and top-down innovation?

Do applications focus on increased delivery efficiency, supporting rapidly changing business needs?

Are there organization-wide initiatives to deliver large-scale integrated apps?

Strategy

Is your Power Platform strategy defined?

Is there a demand management process in place?

Is there a defined understanding of the role of Power Platform in your organization's IT portfolio?

Are business plans shared across departments?

Are vision and strategy understood by all?

Do enterprise architecture decisions include Power Platform capabilities?

Business value and business strategy viability

What business outcomes will this solution realize?

What is the expected time frame?

What do you do well today?

What do you want to do better?

What do you want to do differently?

Technological viability

Which steps are manual and which are automated?

How measurable are the qualitative and quantitative outcomes?

What dashboard and reporting capabilities let stakeholders visualize the data, drill into it, and track the actions taken on it?

How available are analytics?

How frequently are analytics updated?

How frequently are changes required?

What is the technical debt that needs to be accounted for?

What are the security implications?

Financial viability

What is the economic value added?

Does this address the current market model or is a new model being developed?

What is the time horizon for implementation?

What investment model is required?

Business impact

Critical

The system severely affects production, operations, deployment deadlines, or profitability. Multiple users or services are affected.

Initial response time is less than 60 minutes with 24x7 access.

Issues demand an immediate response and require 24x7 operation, every day.

High

The system has moderate business impact and can be dealt with during business hours. Multiple users, single users, or customers are affected.

Initial response time is one hour, four hours, or next-day business hours, with support available 24x7.

Noncritical

The system has minimal business impact. The issue is important but doesn't significantly affect service or productivity. Acceptable workarounds are considered.

Initial response time is four to eight hours or greater, with business hours access and support.
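
The three business impact tiers above can be captured as a small lookup table so that a support process refers to one shared definition. The following Python sketch is illustrative only: the names `Impact`, `SupportProfile`, `SUPPORT_PROFILES`, and `classify` are hypothetical, and the two-flag triage rule in `classify` is a simplifying assumption, not part of the ALM Accelerator or the maturity model. The response times and coverage values are copied from the tier descriptions.

```python
from dataclasses import dataclass
from enum import Enum


class Impact(Enum):
    CRITICAL = "critical"
    HIGH = "high"
    NONCRITICAL = "noncritical"


@dataclass(frozen=True)
class SupportProfile:
    initial_response: str  # wording taken from the tier descriptions
    coverage: str          # when support is available


# Hypothetical lookup table mirroring the three tiers described above.
SUPPORT_PROFILES = {
    Impact.CRITICAL: SupportProfile("less than 60 minutes", "24x7"),
    Impact.HIGH: SupportProfile(
        "one hour, four hours, or next-day business hours", "24x7"
    ),
    Impact.NONCRITICAL: SupportProfile(
        "four to eight hours or greater", "business hours"
    ),
}


def classify(severe_effect: bool, moderate_effect: bool) -> Impact:
    """Assumed triage rule: severe beats moderate, anything else is noncritical."""
    if severe_effect:
        return Impact.CRITICAL
    if moderate_effect:
        return Impact.HIGH
    return Impact.NONCRITICAL


if __name__ == "__main__":
    level = classify(severe_effect=False, moderate_effect=True)
    profile = SUPPORT_PROFILES[level]
    print(level.value, profile.initial_response, profile.coverage)
```

In practice, the inputs to a rule like `classify` would come from the risk profile your organization defines for each application and solution.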

Administration and governance

Who can create environments?

What data loss prevention (DLP) policies are in place?

Do Power Platform Service Admin roles exist to administer Power Platform tenants and environments?

Are tenants and environments isolated from each other?

Is there monitoring in place?

- Has the CoE Starter Kit – Core been installed?

- Does license, capacity, and consumption monitoring inform decision making?

- Has the CoE Starter Kit – Governance Module been adopted to gain compliance insights and archive resources?

- Does app usage data help identify business-critical apps and makers?

Are custom environments used for specific use cases and ALM scenarios?

Support

Are apps created by makers supported by a help desk or dedicated team?

Has an application and solution risk profile been defined that details what level of support will be received?

Is there an ongoing continuous improvement plan for the application?

Are there clearly defined roles and responsibilities for the solution?

Do the roles and responsibilities include ownership to build and operate the solution?

Nurture citizen developers (makers)

Do you have a training and upskilling program for your makers to help them learn key concepts to grow your pool of makers?

Do you have an internal champions community established?

Have you adopted the CoE Starter Kit – Nurture module?

Do you have show-and-tell sessions to demonstrate advanced maker concepts?

Do you have an adoption campaign to demonstrate how fusion development processes work?

Do you have a career path option for makers?

Have you built a community of mentors to share advanced maker concepts and best practices?

Do you have a common development strategy and goals for citizen and professional developers?

Automation

Do you have environment and DLP connector policy requests that are automated?

Do admins and makers communicate about processes and compliance? Is this communication automated?

Fusion teams

Do you have standard libraries, custom connectors, and components to be consumed by makers?

Do you need fusion teams to manage source control and the app lifecycle, for example, build, verification, test, and production?

Do you have cross-functional teams that plan and execute work jointly, including makers, testers, and operational teams?

Do you have the common development strategy and goals that citizen and pro developers need for new projects?
