Reviewed by: Steven Green, Peng Song, Xiaochen Wu, Kun Cheng, Sanjay Mishra

Introduction

Database migration from SQL Server to Azure SQL Database is a process that many organizations must implement as they move to the Azure public cloud. This article is a guide that describes one specific implementation path for the migration process, one that has been commonly used by Microsoft customers. To aid others in the same task, we present lessons learned, recommendations, examples, caveats, potential issues, solutions, and workarounds. A sample PowerShell script that automates some migration steps is available as well.

This article is long, compared to a typical blog post. We intentionally include a lot of conceptual and background material to help the reader understand the entire flow of the migration process. Optimally, this article should be read during migration planning, rather than during implementation or troubleshooting. The target audience is Architects, Database Engineers, DBAs, and Developers who are considering or implementing database migration from SQL Server to Azure SQL Database.

As a prerequisite, we recommend reviewing Azure SQL Database documentation on the topic of migration. That provides a broad overview of multiple migration paths and tools that are available. In the current article, rather than trying to encompass the breadth of possible migration approaches discussed in documentation, we concentrate on an in-depth review of one of the most common ways to migrate, namely on using bacpac files and the DacFx framework.

In practice, a migration project will likely include multiple SQL Server databases. For simplicity, we will consider a single database migration in this guide. Unless noted differently, all migration steps are equally applicable to migrations of multiple databases.

At a high level, the migration process consists of the following activities:

1. Ensure the application is compatible with Azure SQL Database.

2. Validate migration process functionality, and minimize required application downtime.

3. Ensure adequate database performance, once migrated to Azure SQL Database.

4. Operationalize migrated database. Establish standard operating procedures for monitoring, troubleshooting, change management, support, etc.

These activities are not in a strict order; they overlap and blend with each other. They also tend to be iterative. For example, to minimize application downtime during actual migration, it will likely be necessary to repeat step 2 multiple times, to tune the process optimally for the specific application and database being migrated.

In this article, we will concentrate on the first two activities, i.e. on ensuring compatibility, and on the actual migration steps. Future articles are planned on the topics of performance and operations for the migrated database.

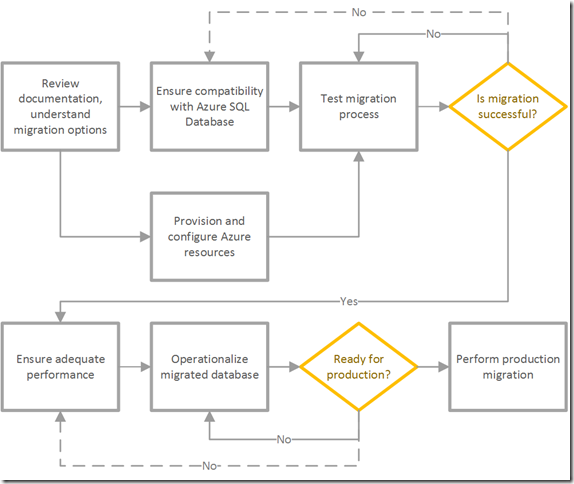

Figure 1 is a diagram describing the primary phases of the migration process.

Figure 1

The rest of this article describes the first four phases in detail.

Database Compatibility Considerations

There are three main areas to consider to ensure application and database compatibility with Azure SQL Database:

1. Feature compatibility. If the application uses any SQL Server features that are not available in Azure SQL Database, then this is an area that will likely require the most investment in terms of application re-engineering, which would be a prerequisite for migration to Azure SQL Database.

2. T-SQL language compatibility. In Azure SQL Database V12, this is usually not a major obstacle. Most of the T-SQL language constructs supported in SQL Server are also supported in Azure SQL Database. For details, see Azure SQL Database Transact-SQL differences. Nevertheless, this still requires attention; the specifics of validating language compatibility are described later in this article.

3. Behavior compatibility. While built on a common codebase, Azure SQL Database behaves differently from SQL Server in several important areas, e.g. high availability, backup, connectivity, security, resource governance, and the default concurrency model. Most importantly, the application must implement robust retry logic to work reliably once migrated to the cloud.

The only fully reliable way to guarantee that the migration process is sound, and the database workload is compatible with Azure SQL Database, is comprehensive and thorough testing of both the migration process and the application in a test environment. That is a standing recommendation; however, many compatibility issues can and should be addressed ahead of time, before any migrations occur.

The preferred way to determine the extent to which a given SQL Server database is compatible with Azure SQL Database, in terms of T-SQL language and features, is to use SQL Server Data Tools (SSDT). In a nutshell, the approach is to create a Visual Studio database project for the database to be migrated, set the target platform for the project to “Microsoft Azure SQL Database V12”, and make changes to database schema and code to fix build errors until the project builds successfully. A successful build would indicate that the project is compatible with the target platform in terms of language and features (though not necessarily in terms of behavior and performance). For a detailed walkthrough, see Migrate a SQL Server Database to Azure SQL Database Using SQL Server Data Tools for Visual Studio. It is important to install the latest version of SSDT, to validate the project against the current surface area of Azure SQL Database, which expands frequently.

Once the database project is created, make sure to set the database compatibility level option (under project Properties, Project Settings, Database Settings, Miscellaneous) to whichever compatibility level the migrated database will have once it is migrated. Supported features and language constructs depend on both the platform (Azure SQL Database V12), and the selected compatibility level. In general, the latest compatibility level (130 as of this writing) is recommended to enable many recent improvements in the database engine. However, if the source database currently runs under a lower compatibility level, then a possibility of query performance regressions under a higher compatibility level exists. For additional details and guidance on choosing the right compatibility level for your database, see Improved Query Performance with Compatibility Level 130 in Azure SQL Database.
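As a minimal sketch, the compatibility level can be checked on the source database, and set on a database, with T-SQL along the following lines. The database name ImportAll is a hypothetical placeholder, and level 130 is used only because it is the level named above; it requires an engine version that supports it.

```sql
-- Check the compatibility level of the database you are connected to.
SELECT name, compatibility_level
FROM sys.databases
WHERE name = DB_NAME();

-- Set the compatibility level (ImportAll is a hypothetical database name).
ALTER DATABASE ImportAll SET COMPATIBILITY_LEVEL = 130;
```

If the source database runs under a lower level, testing the workload under both levels before choosing is one way to look for the query performance regressions mentioned above.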

Another database level option that should be considered is Read-Committed Snapshot Isolation (RCSI). In Azure SQL Database, this is the default for new databases, while in SQL Server, the default transaction isolation level continues to be Read-Committed. Most applications experience improved concurrency with RCSI; however, it comes at a cost of increased tempdb utilization. Also, there are some application patterns, e.g. certain queue implementations, that require Read-Committed transaction isolation (at least at the statement level with the READCOMMITTEDLOCK table hint). RCSI can be enabled and disabled at the database level as needed, and can also be set in the SSDT project settings (under project Properties, Project Settings, Database Settings, Operational, Read committed snapshot). In the context of migration, we generally recommend using the default RCSI enabled option for migrated databases; however, if there is any application functionality dependent on Read-Committed, then code may have to be modified to use the READCOMMITTEDLOCK hint, or, if such modification is not feasible, the RCSI option would have to be disabled at the database level.
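The following sketch shows how the RCSI setting might be inspected and changed, and what a statement-level READCOMMITTEDLOCK hint looks like. The database name ImportAll and the table dbo.MessageQueue with its columns are hypothetical, introduced only for illustration.

```sql
-- Check whether RCSI is enabled for the database you are connected to.
SELECT name, is_read_committed_snapshot_on
FROM sys.databases
WHERE name = DB_NAME();

-- Enable RCSI at the database level (ImportAll is a hypothetical name).
-- Use OFF instead of ON to disable it.
ALTER DATABASE ImportAll SET READ_COMMITTED_SNAPSHOT ON;

-- Keep locking Read-Committed behavior for a single table reference,
-- even when RCSI is enabled (dbo.MessageQueue is a hypothetical table).
SELECT TOP (1) MessageId, Payload
FROM dbo.MessageQueue WITH (READCOMMITTEDLOCK)
ORDER BY MessageId;
```

Using the hint keeps the change local to the statements that depend on locking reads, while the rest of the workload still benefits from RCSI.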

In addition to verifying database compatibility with Azure SQL Database, SSDT provides another advantage – it ensures the validity of object references in database schema and code. For example, if the database to be migrated has any objects that reference non-existing objects, e.g. views that reference dropped tables, or any three-part references (which are not supported in Azure SQL Database, unless referring to tempdb), then they will be identified via build errors. This is important, because bacpac creation later in the migration process may fail if any broken references exist. Since SSDT database projects and bacpacs use the same DacFx framework internally, this is a good way to discover potential problems in advance. While not directly related to migration, an important side benefit of using SSDT is detecting and removing any invalid objects and code in the source database.
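As a rough pre-check before building the SSDT project, a query along these lines can list candidate cross-database and unresolved references in the source database. It is only a sketch and not a substitute for the SSDT build: the catalog view does not cover every kind of reference, and a NULL referenced_id can also appear for references that are resolved only at run time.

```sql
-- Run in the source SQL Server database.
SELECT
    OBJECT_SCHEMA_NAME(d.referencing_id) AS referencing_schema,
    OBJECT_NAME(d.referencing_id)        AS referencing_object,
    d.referenced_server_name,
    d.referenced_database_name,
    d.referenced_schema_name,
    d.referenced_entity_name
FROM sys.sql_expression_dependencies AS d
WHERE d.referenced_database_name IS NOT NULL  -- three-part (or four-part) names
   OR d.referenced_id IS NULL                 -- candidates for broken references
ORDER BY referencing_schema, referencing_object;
```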

Once the changes to make the database compatible with Azure SQL Database have been made in the SSDT project, they will also have to be made in the actual SQL Server database to be migrated. This can be done by using the “publish” functionality in SSDT, or using any other approach that may be more optimal for a given change, in the context of a particular application and database. For example, if the SSDT publish script rebuilds a large table to implement a column data type change, then a more optimal approach may be to use the online alter column functionality available in SQL Server 2016.

It should be noted that in recent versions of DacFx, the SqlPackage tool that creates the bacpac became smarter in its handling of Azure SQL Database import/export scenarios. For example, it will no longer block bacpac export if filegroups other than PRIMARY are present in the source database. Since Azure SQL Database does not support any database filegroups other than PRIMARY, all references to such filegroups present in the bacpac will be automatically replaced with references to the PRIMARY filegroup when SqlPackage imports a bacpac. Nevertheless, filegroups other than PRIMARY are still treated as build errors in an SSDT project when the target platform is set to “Microsoft Azure SQL Database V12”.
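To see in advance which filegroups would trigger these build errors, a query such as the following can be run in the source database. It is a sketch under the assumption that listing filegroups and the indexes or heaps placed directly on them is enough; partitioned objects are placed on a partition scheme rather than a filegroup and will not appear in the join.

```sql
-- Run in the source SQL Server database.
SELECT
    fg.name                         AS filegroup_name,
    OBJECT_SCHEMA_NAME(i.object_id) AS schema_name,
    OBJECT_NAME(i.object_id)        AS object_name,
    i.name                          AS index_name  -- NULL for heaps
FROM sys.filegroups AS fg
LEFT JOIN sys.indexes AS i
    ON i.data_space_id = fg.data_space_id
WHERE fg.name <> N'PRIMARY'
ORDER BY fg.name, schema_name, object_name;
```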

Therefore, even though getting the database project to build in SSDT guarantees that bacpac export will not be blocked, it may, in some cases, entail more work than what is actually needed to successfully migrate the database. If you find that the effort to get the SSDT project to build successfully is overly large, then you may attempt to migrate the database as is. However, if export fails due to compatibility issues, then they would have to be addressed in the source database prior to attempting the actual migration.

SSDT will discover SQL Server features and T-SQL language constructs in the database schema and code that are incompatible with Azure SQL Database. It will not, however, discover them in queries that are embedded or generated in the application code, because they are not a part of the database project. Also, SSDT will not do anything to address the behavior compatibility of the database, e.g. the extent to which the differences between SQL Server and Azure SQL Database in the connectivity, high availability, and resource governance behavior could affect the application. For these two reasons in particular, comprehensive testing against the migrated database is recommended to address all compatibility concerns, prior to production migration.

Database Size Considerations

Currently, the maximum database size in Azure SQL Database is limited to 1 TB. However, for the purposes of database migration, several other size limits must be considered.

1. Disk space requirements on the machine where the bacpac is created need to be considered when dealing with large databases. During bacpac export, DacFx temporarily writes table contents to the directory referenced in the %TEMP% user environment variable, as well as the directory used by .Net Isolated Storage. These typically both reside on the C drive. Free disk space that will be required on this drive is roughly equal to double the size of the largest table in the source database. If free space on C is insufficient for these temporary allocations and for the bacpac itself, then the bacpac can be created on a different drive with sufficient space. Another workaround is to change the user %TEMP% environment variable in the machine System Properties dialog to point to a directory on a drive with more free space.

2. The same size considerations exist for import operations as well, if the import operation is done using SqlPackage on an Azure VM, as opposed to using the Import/Export Service. Using an Azure VM may be needed for network security reasons, as described in the Import the Bacpac section later in the article.

3. Size limits also exist in the Import/Export Service. Currently, the size of the bacpac file, plus double the size of the largest table, should not exceed 400 GB.

4. The maximum size of a block blob in Azure Blob Storage is limited to slightly more than 195 GB. Since Import/Export Service only supports block blobs, this limits bacpac size that can be imported by the service. For larger bacpac files, a workaround would be to use an Azure VM to import the bacpac.

We should note that the data in the bacpac file is compressed, therefore the actual source database size may be larger than some of these limits. For example, if a 500 GB source database compresses to a 190 GB bacpac, then the block blob limit in the list above (#4) will not prevent the use of Import/Export Service.
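Because several of the limits above depend on the size of the largest table, it can help to measure it in the source database first. The query below is a minimal sketch that reports reserved space per table; the figures describe space used inside the database, so they only approximate the temporary disk space DacFx will need.

```sql
-- Run in the source SQL Server database.
SELECT TOP (5)
    OBJECT_SCHEMA_NAME(ps.object_id)            AS schema_name,
    OBJECT_NAME(ps.object_id)                   AS table_name,
    SUM(ps.reserved_page_count) * 8 / 1048576.0 AS reserved_gb  -- 8 KB pages
FROM sys.dm_db_partition_stats AS ps
JOIN sys.tables AS t
    ON t.object_id = ps.object_id
GROUP BY ps.object_id
ORDER BY reserved_gb DESC;
```

Doubling the largest reserved_gb value gives a first estimate of the free space needed on the drive that holds %TEMP%, per item 1 above.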

It is worth mentioning explicitly that the amount of downtime required to create, upload, and import a bacpac of a large database may be prohibitively large in the context of an actual production application migration. In those cases, the migration approach using transactional replication, which significantly reduces the necessary downtime at the cost of added complexity, may be a feasible solution.

Service Tier Considerations

Each database in Azure SQL Database is assigned a service tier, or service objective (sometimes also known as service level objective, or SLO). Examples are S0, P2, etc. This defines the amount of resources allocated to the database, the features that are available, and, no less importantly, the cost of service. It is not surprising that customers migrating to Azure SQL Database want to know the service tier that is optimal for the database being migrated, i.e. the one that maximizes performance and available features, and minimizes cost.

However, our experience shows that answering this question definitively before the workload is tested against the migrated database is often challenging, and may not be feasible at all. A tool that is often mentioned in this context is Azure SQL Database DTU Calculator. The tool works by using a Performance Monitor log for a SQL Server machine, collected while the application workload is running. The tool analyzes several performance counters, and predicts DTU consumption for the migrated database. However, while the tool is helpful (if used correctly), we consider the results it provides only an initial approximation of the optimal service tier at best, for the following reasons:

1. The database engines being compared are usually different, e.g. an older version of SQL Server running on-premises, and Azure SQL Database. Resource utilization by the same workload on different database engines may be different.

2. Resource governance models are different. Resource consumption of a typical SQL Server workload is not constrained by anything other than the platform (hardware) limits; Azure SQL Database, on the other hand, explicitly limits available resources in each service tier using its own implementation of the resource governance model. This may create unexpected resource bottlenecks for the workload running against the migrated database.

3. The infrastructure platforms are usually different. This includes the hardware, the hypervisors, and the operating systems. CPU core speeds, cache sizes, generations, etc. may differ, storage type is often different (spinning media vs. SSD), and hypervisor type and configuration are likely different as well.

4. Database internal structures get modified as a by-product of migration, e.g. indexes are rebuilt and statistics are updated. This is an additional factor affecting performance and resource consumption post-migration.

5. For many real-life migrated applications, workload patterns in the cloud are different from what they were on-premises. The application may get utilized more or less, and application features may change as a part of migration. In that case, resource consumption will be different as well.

Therefore, accurately predicting required Azure SQL Database service tier in advance, based on SQL Server performance data, tends to be a futile undertaking. Instead, we recommend first selecting the Edition (Basic, Standard, Premium) based on service features that are required, taking an initial guess at the service tier, and then iteratively testing a representative application workload against Azure SQL Database. As long as the workload is indeed representative, the database can be scaled up or down from the initially selected service tier, to right-size it according to the workload, all prior to the production migration.

If this kind of iterative testing and scaling approach is not feasible for any reason, e.g. if the representative workload is not available, then a reasonable approach is to select a relatively high service tier (or a relatively high elastic pool size, if migrating multiple databases directly into an elastic pool) at first, monitor resource consumption and application performance, and then gradually scale down until the service tier or elastic pool size is appropriate for the workload (taking into consideration possible workload spikes), and the cost is acceptable.
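One way to support this test-and-scale loop is to look at recent resource consumption in the migrated database and then change the service objective. The sketch below assumes a hypothetical database named ImportAll and uses P1 purely as an example target; the 30-minute window is an arbitrary choice.

```sql
-- Run in the migrated database: peak resource use over the last 30 minutes,
-- expressed as a percentage of the current service tier limits.
SELECT
    MAX(avg_cpu_percent)       AS max_cpu_percent,
    MAX(avg_data_io_percent)   AS max_data_io_percent,
    MAX(avg_log_write_percent) AS max_log_write_percent
FROM sys.dm_db_resource_stats
WHERE end_time > DATEADD(MINUTE, -30, GETUTCDATE());

-- Scale the database to a different service objective
-- (ImportAll and P1 are illustrative values only).
ALTER DATABASE ImportAll MODIFY (EDITION = 'Premium', SERVICE_OBJECTIVE = 'P1');
```

Values that stay close to 100 percent while the representative workload runs suggest scaling up; consistently low values suggest there is room to scale down.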

Finally, we should note that Azure SQL Database alleviates these concerns by providing the ability to easily and relatively quickly change database service tier over the lifetime of the application, so that the importance of selecting the “exactly right” service tier at the outset is greatly reduced.

Database Migration

Once the database is deemed compatible with Azure SQL Database, migration testing can start. Note that here we intentionally focus on the database migration only; the scope of the overall application migration to Azure is much larger, and is mostly beyond the scope of this article.

At a high level, migration steps are as follows:

1. Provision Azure resources; create logical server(s) and server level objects, and configure Azure SQL Database features. These preliminary steps should be done well in advance of the actual migration.

2. Declare the start of application downtime.

3. Export a bacpac of the source database. This bacpac contains all database scoped objects and data to be migrated.

4. Upload the bacpac to Azure Blob Storage, or to an Azure VM.

5. Start and monitor a bacpac import operation.

6. Once the import operation completes successfully, verify objects and data in the migrated database.

7. Grant database access.

8. Verify application functionality, as well as database management and monitoring functionality.

9. Declare the end of application downtime.

If there are multiple databases to be migrated, then steps 3-8 may be executed concurrently for all databases, to minimize application downtime.

Let’s discuss each step in detail.

Provision and Configure Azure Resources

The activities to provision and configure Azure resources can and should be done well in advance of the actual migration, to ensure proper configuration, and to avoid spending time on these tasks during application downtime. A large part of these activities is configuring application resources and deploying application code, which is a core task in the overall application migration process. Here we concentrate on provisioning and configuration of database resources specifically.

Develop a Naming Convention

The naming convention will govern the names given to resource groups, logical servers, logins, firewall rules, etc. Keep in mind that for some resource types, e.g. for Azure SQL Database servers and Azure Storage accounts, names must be globally unique. While many adequate naming conventions are possible, one that is commonly used is where separate name segments identify the organization (i.e. the Azure tenant), the Azure region, the application, the environment, etc., and include a numeric resource identifier for disambiguation. For example, for a fictitious organization named Wide World Importers, migrating an application named Import All, one of the production Azure SQL Database server names may be wwi-ia-pd-041. Many other conventions can be used, as long as they provide name clarity, consistency, and uniqueness.

For different Azure resource types, limits on the length of the name, its case sensitivity, allowed characters, etc. may be significantly different. For example, the name of an Azure SQL Database server is limited to 63 characters, while the name of an Azure IaaS Virtual Machine is limited to 15 characters. To simplify the naming convention, it may be tempting to adopt the most restrictive set of rules for all Azure resource types. However, that would negatively affect clarity and readability, and in extreme cases could force you into violating the naming convention just to make a name sufficiently descriptive. Therefore, our recommendation is to prioritize name clarity and descriptiveness over using the same rigid naming scheme for different resource types. Instead, consider using customized naming conventions for different resource types, to accommodate Azure name limits while ensuring that names are sufficiently descriptive.

Develop a Resource Grouping Strategy

Each Azure resource belongs to a resource group. A resource group is treated as a unit for provisioning and de-provisioning purposes. A good rule of thumb for grouping resources is to ask the question: “Would all resources in this group be created or deleted at the same time and as a part of the same task?” If yes, then the grouping strategy is probably sound. The extremes to be avoided are using a single group for all resources, or a separate group for each resource.

Provision and Configure Azure SQL Database Logical Servers

Azure SQL Database servers act as logical containers for databases. Unlike a traditional SQL Server instance, a logical Azure SQL Database server is not associated with a pool of resources (CPU, memory, storage, network) that is shared among all databases on the server. Resources are allocated to each individual database, regardless of its placement on any specific logical server.

That said, some limits at the logical server level do exist: one is a quota on the total number of Database Transaction Units (DTUs) for all databases hosted on the same logical server (45,000 DTUs); another is the hard limit on the total number of databases per logical server (5,000 databases). As of this writing, the recommended maximum on the number of databases per server is in the 1000-2000 range. As with many limits, this is subject to change over time, and is highly dependent on the workload.

The reason for considering limits at the server level is that operations such as login processing, server firewall rule processing, and querying of server-level DMVs use resources allocated to the master database. Just like resources allocated to user databases, master database resources are subject to limits and governance. For highly intensive workloads, particularly those with a high rate of connections, these limits could reduce scalability. Recently, an improvement has been made to mitigate this problem by caching logins and firewall rules in user databases, so this has become less of a concern. For the uncommon workloads where this still causes a scalability issue, the dependency on the master database can be reduced further by eliminating server firewall rules and logins altogether, and instead using database-level firewall rules and database authentication (i.e. users with password and/or external provider users). That also reduces the need for configuration at the server level, making databases more portable.
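As an illustration of database authentication, a user with password can be created directly in the user database, with no server login involved. The user name, password, and role choices below are hypothetical.

```sql
-- Run in the user database, not in master.
-- AppUser1 and its password are illustrative values only.
CREATE USER AppUser1 WITH PASSWORD = 'SecretPwd3-';

-- Grant access through database role membership.
ALTER ROLE db_datareader ADD MEMBER AppUser1;
ALTER ROLE db_datawriter ADD MEMBER AppUser1;
```

Because such a user exists only inside the database, the application connection string must name that database explicitly.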

Since Azure SQL Database servers are logical, and limits at the server level that we described above are not a concern for the vast majority of customer workloads, it may be advantageous to host multiple databases on the same logical server, in order to simplify environment configuration and improve manageability. In other words, just because two databases have been hosted on two different SQL Server instances, does not necessarily mean that they must be migrated to two different logical servers in Azure SQL Database. If the databases are related, i.e. used by the same application, consider hosting them on the same logical server.

Once a logical server is provisioned, some of the server level settings such as Firewall, Azure Active Directory admin (if used, see the section on server logins later in the article), and Auditing & Threat Detection can be configured. Note that Firewall and Auditing & Threat Detection can also be configured for each individual database later, if required. If Auditing & Threat Detection is configured at the server level, this configuration will be used for all databases on this server.

Configure Access Control and Network Security

This includes Azure Role-Based Access Control (RBAC), network security (firewall), and authentication (SQL authentication and/or Azure Active Directory authentication).

The principle of least privilege should be the guiding rule. To the extent possible, Azure SQL Database firewall rules should restrict inbound traffic to well-known sources, e.g. the application, management, and monitoring endpoints only. If any exceptions become necessary, e.g. for ad-hoc troubleshooting, they should be made just-in-time, and removed promptly once no longer needed.

If multiple databases are hosted on the same logical server, consider whether the firewall rules should be the same for all of them. If not, create firewall rules at the database level, rather than at the server level. As noted earlier, this also makes the database more portable, and reduces its configuration and performance dependencies on the logical server.
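Database-level firewall rules are managed with system stored procedures executed in the user database. The sketch below uses a hypothetical rule name and an IP range from a documentation-only address block.

```sql
-- Run in the user database.
-- Rule name and IP addresses are illustrative values only.
EXECUTE sp_set_database_firewall_rule
    @name = N'ImportAllAppServers',
    @start_ip_address = '203.0.113.10',
    @end_ip_address = '203.0.113.20';

-- Review the rules currently defined for this database.
SELECT name, start_ip_address, end_ip_address
FROM sys.database_firewall_rules;

-- Remove a rule that is no longer needed, e.g. a temporary exception.
EXECUTE sp_delete_database_firewall_rule @name = N'ImportAllAppServers';
```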

If using RBAC, assign principals to logical servers, and grant them membership in either built-in RBAC roles or custom RBAC roles. This will restrict access to resource management operations at the Azure Resource Management API level, to control the operations allowed for Azure Active Directory users and groups in Azure Portal and Azure PowerShell.

Note that RBAC controls Azure resource management operations only, and not authorization within the database. In Azure SQL Database, that is controlled via database role membership, and GRANT/DENY statements, just like in SQL Server.

Create Server Logins

A single SQL authentication login acting as a server administrator is always created as a part of server provisioning. That login is conceptually similar to the sa login in SQL Server. As a security best practice, we recommend restricting the use of this login as much as possible. Avoid using it for routine administration work, let alone for application connections. Instead, use dedicated logins for each functional role, e.g. application, DBA, monitoring tool, reporting tool, data reader, etc. Depending on the purpose of each login, it may require access to the master database, which is granted by creating a user in the master database for the login, and making that user a member of built-in roles in master (e.g. dbmanager, loginmanager) as needed.
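For example, a dedicated DBA login that needs to create and manage databases could be set up roughly as follows. The login name and password are hypothetical, and the role shown is only one of the built-in roles in master.

```sql
-- Run while connected to the master database of the logical server.
-- DbaLogin1 and its password are illustrative values only.
CREATE LOGIN DbaLogin1 WITH PASSWORD = 'SecretPwd2-';

-- Give the login a user in master, then add it to a built-in role.
CREATE USER DbaLogin1 FOR LOGIN DbaLogin1;
ALTER ROLE dbmanager ADD MEMBER DbaLogin1;
```

Access to individual user databases is granted separately, by connecting to each database and creating a user for the login there.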

We should mention that the logins mentioned so far are SQL authentication logins, and as such, are subject to known limitations and drawbacks of SQL authentication. Among them are the overhead of managing a separate set of credentials specifically for database authentication, the need to store passwords in application configuration assets, an increased likelihood of unintentional sharing and disclosure of credentials, etc.

From this perspective, Azure Active Directory (AAD) authentication is a better authentication mechanism, addressing these concerns for Azure SQL Database similar to the way Windows Authentication does it for SQL Server. With AAD authentication, a single Azure Active Directory group can be designated as a server administrator, to grant all members of this group the same privileges that are held by the SQL authentication server administrator. Furthermore, Azure Active Directory users and groups can be associated with users in individual databases, to grant all AAD group members required access in a specific database.
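Associating an AAD group with a database user might look like the following sketch. The group name ImportAllAppUsers is hypothetical, and the statements assume the connection itself was made by an AAD principal with sufficient permissions, since SQL authentication connections cannot create AAD-based users.

```sql
-- Run in the user database, connected as an Azure Active Directory principal.
-- ImportAllAppUsers is a hypothetical AAD group name.
CREATE USER [ImportAllAppUsers] FROM EXTERNAL PROVIDER;

-- Members of the group receive access through the group's database user.
ALTER ROLE db_datareader ADD MEMBER [ImportAllAppUsers];
```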

There is an important consideration for SQL authentication logins used in the context of Geo-replication. If server level SQL authentication is used, then the same logins must be created on all logical servers that may host geo-replicas of a given database. “The same” here means that the name, the security identifier (SID), and the password of the logins must be identical, to be able to fail over from a primary geo-replica to a secondary geo-replica without making any other database or application changes. If the login SID on the server hosting the secondary geo-replica is different, then database access for that login will be lost after failover.

Making logins the same on multiple servers requires explicitly specifying the SID parameter when logins are created. To determine the SID value to use, first create a login without specifying the SID parameter, as in the following example:

CREATE LOGIN AppLogin1 WITH PASSWORD = 'SecretPwd1-';

Then, query the sys.sql_logins catalog view in the context of the master database, restricting the result set by login name:

SELECT sid
FROM sys.sql_logins
WHERE name = 'AppLogin1';

This returns:

sid
------------------------------------------------------------------
0x01060000000000640000000000000000C6D0091C76F7144F98172D155AD531D3

Now, to create the same login on any other server, use the following statement:

CREATE LOGIN AppLogin1 WITH PASSWORD = 'SecretPwd1-', SID = 0x01060000000000640000000000000000C6D0091C76F7144F98172D155AD531D3;

If not explicitly specified, SID values are generated during login creation. For any new login, the SID value can be initially obtained by creating the login without specifying the SID as in the previous example, and then used explicitly in the CREATE LOGIN statements for other servers. This is useful when creating similar servers in multiple environments, to make the login creation process identical for all servers. Note that SID values must be unique per server; therefore, for each new login, its SID value must first be generated by creating the login without specifying the SID. SID values are structured, and must be generated by Azure SQL Database; using an arbitrary 32-byte value as the SID is unlikely to work.
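To confirm that database users will still map to their logins after a failover, the SIDs stored in the database can be compared with the login SIDs on each logical server. The query below is a sketch of the database side of that comparison; the login side is the sys.sql_logins query shown earlier, run in master on each server.

```sql
-- Run in the user database.
SELECT name, sid, authentication_type_desc
FROM sys.database_principals
WHERE type = 'S'                             -- SQL users
  AND authentication_type_desc = 'INSTANCE'; -- users mapped to server logins
```

Each sid returned here should match the sid of the corresponding login on every server that may host a geo-replica.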

Create an Empty Database

This step is optional, because the import operation later in the process will create the database, if a database with the same name does not exist on the target logical server. Nevertheless, it may be advantageous to do this now, in order to configure database level settings in advance of data migration, and thus reduce application downtime.

A key point here is that the pre-created database must remain empty until the start of the import operation. If the import operation detects any user objects in the target database, it will fail, in order to prevent potential data loss.

The settings that can be enabled in advance include Geo-replication, Auditing & Threat Detection, and Transparent Data Encryption (TDE). If business continuity and/or compliance requirements mandate the use of these features, then this approach ensures that they are enabled before any data is migrated to the cloud. A tradeoff between compliance and performance is that enabling TDE in advance of the import operation will slow down the import to some extent.

The service objective of the empty database can be set relatively low to reduce cost in the interim period prior to migration. The service objective can be scaled up later, immediately before or as a part of the import operation, depending on the import method used.

Note that Azure Portal will not allow you to specify the name of an existing database when starting the import operation. If you pre-created an empty database, you will need to use PowerShell to import a bacpac into that database.

Create a Storage Account for Bacpacs

This Azure Blob Storage account will be used to upload and store the bacpac(s) to be imported. Create the account, and create a container for bacpac blobs, making sure to use private access type for the container. Make sure to create the account in the same region where the migrated databases will be hosted, to avoid slower cross-region data transfer during import.

If migrating multiple databases concurrently, use a locally-redundant storage account to maximize ingress bandwidth, and thus avoid a bottleneck during concurrent upload of many bacpacs. Multiple storage accounts may be used if the ingress bandwidth of a single account is exceeded while uploading many bacpacs concurrently.

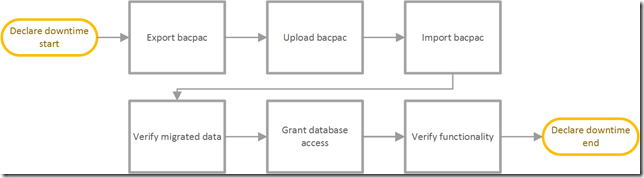

Migrate the Database

Once the preliminary activities described in the previous sections are complete, we are ready to declare application downtime, and start actual database migration. Figure 2 outlines the core activities to be performed.

Figure 2

Export the Bacpac

In this step, we will create a bacpac file for the database to be migrated. SQL Server Management Studio (SSMS) provides a UI for creating bacpacs (under the Database context menu, Tasks, Export Data-tier Application). However, using the SqlPackage tool from the command line, or as a command in a script, provides additional flexibility, and is preferred in most migrations.

The SqlPackage tool is a part of the DacFx framework, which needs to be installed on the machine where the bacpac will be created. Note that it is not required to create the bacpac on the same machine where the source database is hosted, though connectivity to the SQL Server instance hosting the source database is required. It is recommended to use the most current release of the DacFx framework. New releases of DacFx are announced on the SSDT team blog.

The DacFx framework is available in x86 and x64 versions. It is recommended to install both. SSMS, being a 32-bit application, will use the x86 version, which imposes a limitation on the amount of memory the bacpac export process can use. You may notice this limitation if the following error occurs when exporting or importing the bacpac: “Exception of type 'System.OutOfMemoryException' was thrown.” This is one of the reasons to use the command prompt (either cmd or PowerShell), where the 64-bit version of SqlPackage can be used.

A sample command to create a bacpac is:

SqlPackage /Action:Export /SourceServerName:SampleSQLServer.sample.net,1433 /SourceDatabaseName:SampleDatabase /TargetFile:"F:\Temp\SampleDatabase.bacpac"

By default, the current version of the DacFx framework is installed in C:\Program Files\Microsoft SQL Server\130\DAC\bin, assuming the x64 version. The x86 version is in a similar location under C:\Program Files (x86). These paths are not added to the system PATH environment variable; therefore, we need to either execute SqlPackage commands from one of these directories, or use a fully qualified path.
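When the export is driven from a script, the fully qualified path and the command arguments can be assembled in one place. The following is a minimal Python sketch, not part of the sample migration script; the function names are hypothetical, and the install directory is the default x64 location mentioned above, which may differ on your machine.

```python
import subprocess
from pathlib import PureWindowsPath

# Default x64 DacFx location quoted in the text; adjust if installed elsewhere.
SQLPACKAGE_DIR = PureWindowsPath(r"C:\Program Files\Microsoft SQL Server\130\DAC\bin")


def build_export_command(server, database, target_file, sqlpackage_dir=SQLPACKAGE_DIR):
    """Return the SqlPackage export command as an argument list.

    An argument list (rather than a single string) avoids quoting
    problems with paths that contain spaces.
    """
    exe = str(PureWindowsPath(sqlpackage_dir) / "SqlPackage.exe")
    return [
        exe,
        "/Action:Export",
        f"/SourceServerName:{server}",
        f"/SourceDatabaseName:{database}",
        f"/TargetFile:{target_file}",
    ]


def run_export(command):
    """Run the export; raises CalledProcessError on a non-zero exit code."""
    subprocess.run(command, check=True)


# Same values as the sample command above; nothing is executed here.
command = build_export_command(
    "SampleSQLServer.sample.net,1433",
    "SampleDatabase",
    r"F:\Temp\SampleDatabase.bacpac",
)
print(command)
```

Calling run_export(command) on a machine with DacFx installed would start the export. Failing on a non-zero exit code matters here, because a later upload step should not proceed with a missing or partial bacpac.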

A very important point to make is that unlike a native SQL Server backup, a bacpac is not guaranteed to contain transactionally consistent data. If the source database is modified while the export process is running, then data in the bacpac can be transactionally and referentially inconsistent, resulting in failed bacpac import and potential data loss later in the migration process. To prevent this, and to ensure transactional consistency and referential integrity of the migrated database, all write access to the source database must be stopped before the export operation starts, e.g. by making the database read-only.

An important note from SqlPackage documentation, with emphasis added: “Validation for the Export action ensures Windows Azure SQL Database compatibility for the complete targeted database even if a subset of tables is specified for the export.” In other words, the database model of the entire database must be consistent (no broken references) and compatible with Azure SQL Database, in order to create the bacpac. Using an SSDT database project to model the database, as described in an earlier section, helps ensure this.

As a practical matter for many actual database migrations, objects in the source database may need to be modified, sometimes significantly, to enable successful bacpac export and import. For example, unused objects may need to be dropped, broken references fixed, code incompatible with Azure SQL Database rewritten, etc. Some of these changes may be trivial, while others may be quite involved.

Recall that at this point in the migration process, we are in the middle of application downtime. We need to minimize the time taken to create the bacpac. Internally, a bacpac is a ZIP archive that contains schema metadata (represented in XML), and data (in native BCP format). During bacpac creation, all this data is extracted from the source database, and compressed in a single bacpac file. Compression is a CPU intensive operation. To make it fast, it is important to use a machine with plenty of CPU capacity. For this reason, it is often best to use a machine other than the source SQL server for bacpac creation, to avoid CPU contention between data extraction threads in SQL Server and data compression threads in SqlPackage. We recommend monitoring CPU utilization during bacpac export. If it is close to 100%, then using a machine with more CPU capacity could speed up the export. The assumption here is that network bandwidth between the two machines is sufficiently high (e.g. they are on the same LAN), and therefore we are not creating a different (network) bottleneck for data extraction by using separate machines.

One rather obvious, but at the same time often overlooked way to shorten the duration of bacpac creation, as well as the duration of the following phases of the migration process (upload, import, verification), is to remove any data in the database that does not need to be migrated. This includes data that is past its required retention period, temporary copies of tables that are no longer needed, data no longer used by the application, etc.

Upload the Bacpac

Once the bacpac is created successfully, we need to upload it to Azure Blob Storage.

One obvious prerequisite is that connectivity to Azure Blob Storage must be available from the on-premises network. Today, unintentional or malicious data exfiltration is a major concern for many organizations. Therefore, multiple barriers for outbound data transfers may exist, by design. But in our scenario, intentional data exfiltration (with respect to the on-premises network) is actually a required step in the migration process. In many environments, this is a challenge that will require advance cooperation and coordination within the IT organization to resolve.

For similar reasons, accessing outside network resources directly from database servers is not allowed in many environments. An acceptable compromise may be to allow temporary outbound access from a different dedicated machine on the internal network, which is also allowed to connect to the database server to extract data. This is yet another reason, besides performance considerations, to create and upload the bacpac on a machine other than the source database server.

Assuming that a reliable connection to Azure Blob Storage is available, our goal is to make the bacpac upload as fast as possible, given available network bandwidth, in order to minimize application downtime. A solution that has worked well for many customers is AzCopy, a tool for working with Azure Blob Storage that supports multi-threaded network transfers. Multi-threading provides a major increase in transfer speed. In fact, on networks with limited outbound bandwidth, it may be necessary to reduce the number of threads, to avoid saturating the network link and starving other on-premises systems of network bandwidth during bacpac upload.

Here is an example of the AzCopy command that uploads all bacpac files found in the F:\Temp\bacpacs directory to the specified storage account:

AzCopy /Source:"F:\Temp\bacpacs" /Dest:"https://exampleaccount.blob.core.windows.net/bacpacs" /DestKey:storageaccountkey /Pattern:*.bacpac

By default, AzCopy is installed in C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy. This path is not added to the system PATH environment variable; therefore, we need to either execute the AzCopy command from this directory, or use a fully qualified path.
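To illustrate how the number of concurrent transfers could be capped on a constrained network link, here is a minimal Python sketch that assembles the same AzCopy command with an optional concurrency limit. It assumes the classic Windows AzCopy used in this article, whose /NC option sets the number of concurrent operations; the function name and the example value of 4 are hypothetical, and the option should be confirmed against the AzCopy version you have installed.

```python
# Default install location quoted in the text; adjust if installed elsewhere.
AZCOPY_EXE = r"C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy\AzCopy.exe"


def build_upload_command(source_dir, container_url, storage_key, concurrency=None):
    """Return the AzCopy upload command as an argument list.

    concurrency: optional cap on concurrent operations, passed as /NC
    (assumed to be supported by the installed classic AzCopy version).
    """
    args = [
        AZCOPY_EXE,
        f"/Source:{source_dir}",
        f"/Dest:{container_url}",
        f"/DestKey:{storage_key}",
        "/Pattern:*.bacpac",
    ]
    if concurrency is not None:
        if concurrency < 1:
            raise ValueError("concurrency must be a positive integer")
        args.append(f"/NC:{concurrency}")
    return args


# Same placeholder values as the sample command above, limited to 4 operations.
upload_command = build_upload_command(
    r"F:\Temp\bacpacs",
    "https://exampleaccount.blob.core.windows.net/bacpacs",
    "storageaccountkey",
    concurrency=4,
)
print(upload_command)
```

A reasonable starting point is to leave the limit unset, observe the outbound link during a test upload, and only then lower the value if other on-premises systems are affected.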

Note that Import/Export service supports block blobs only. Since Premium storage does not support block blobs, a Standard storage account must be used to upload the bacpac.

Import the Bacpac

Once the bacpac is uploaded to Azure Blob Storage, we can start the import operation. This can be done interactively from Azure Portal, or using the New-AzureRmSqlDatabaseImport PowerShell cmdlet (using the older Start-AzureSqlDatabaseImport cmdlet is not recommended). With either option, the service objective of the target database is a required parameter. Note however, that if you pre-created an empty database and used a lower service objective at that time, New-AzureRmSqlDatabaseImport cmdlet will not automatically change the service objective as a part of import, as the older Start-AzureSqlDatabaseImport cmdlet would do. Therefore, you may need to scale up the existing database explicitly prior to starting the import operation.

The import operation is typically performed by the Azure SQL Database Import/Export Service. Similar to the bacpac export done earlier, the service also uses the DacFx framework to import the uploaded bacpac into the target database.

The IP address space used for outbound connections from the Import/Export Service infrastructure to the target logical server is not documented, and is subject to change at any time. Therefore, given that connections to the target Azure SQL Database server are gated by server firewall, the only fully reliable way to ensure that the Import/Export service will be able to connect is to enable the firewall rule that allows access from all Azure services (or, equivalently, from the 0.0.0.0 IP address). Obviously, opening the firewall to a large IP address space is a network security risk. Security conscious organizations will want to mitigate this risk by disabling this firewall rule as soon as the import operation completes successfully. For our migration scenario, there is an additional mitigating factor: during the import, the database is only accessible via the server administrator login and the AAD administrator group, if the latter is provisioned.

For organizations finding the security risk of temporarily opening the firewall to the entire Azure IP address space unacceptably high, a workaround would be to provision an Azure IaaS VM in the same region where the target database will reside, install DacFx framework on that machine, and execute the import process using SqlPackage from that machine. In this case, the firewall only needs to be opened to the public IP address of this machine.

Similar to previous steps and for the same reasons, we need to minimize the amount of time the import operation takes. During bacpac import, data is bulk loaded (using ADO.NET SqlBulkCopy class) into the target database. Usually, multiple tables are loaded concurrently. Recall that for each Azure SQL Database service objective, the amount of available resources is limited. One of these limitations is on the volume of transaction log writes. Since all bulk load threads write to the transaction log of the target database, log write throttling may be encountered during the import operation, and is in fact the primary factor gating import speed for most migrations. Therefore, a common way to speed up the import operation is to temporarily increase the service objective to the point where transaction log writes are no longer throttled. For example, if the target service objective for the database is S2, and there is a requirement to minimize import time, then it will probably make sense to import into a P2 or higher database, and then scale down to S2 once import completes.

A simple way to see whether transaction log writes, or any other governed resource, are being throttled is to examine the output of the sys.dm_db_resource_stats DMV during the import operation. Here is an example of a query that does this. The query needs to be executed in the context of the database being imported:

SELECT *
FROM sys.dm_db_resource_stats
ORDER BY end_time DESC;

If transaction log writes are currently being throttled, then the values in the avg_log_write_percent column for the top rows in the result set will be close to or equal to 100%. This is an indication that a higher service objective can reduce import time. Note that similar resource utilization data is also available in Azure Portal, and in the sys.resource_stats DMV. However, that data is averaged over five-minute intervals, as opposed to 15-second intervals in sys.dm_db_resource_stats. Therefore, spikes in resource utilization that may indicate throttling will be less noticeable in the portal.
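If the query output is collected by a monitoring script, the check described above can be automated. The following is a minimal Python sketch that assumes the rows have already been fetched, most recent first, into dictionaries keyed by column name. The function name, the 95% threshold, and the choice of four samples (roughly the last minute) are assumptions for illustration, and the sample rows are made up.

```python
def log_writes_throttled(rows, threshold=95.0, samples=4):
    """Return True if the most recent samples all show log writes near 100%.

    rows: sequence of dicts ordered by end_time descending, as returned
          by the sys.dm_db_resource_stats query above.
    threshold: percentage treated as "close to 100%" (an assumption).
    samples: number of most recent 15-second samples to inspect.
    """
    recent = rows[:samples]
    if not recent:
        return False
    return all(float(row["avg_log_write_percent"]) >= threshold for row in recent)


# Illustrative data only: four recent samples near 100%, one older idle sample.
sample_rows = [
    {"end_time": "2017-01-01 10:01:00", "avg_log_write_percent": 100.0},
    {"end_time": "2017-01-01 10:00:45", "avg_log_write_percent": 99.2},
    {"end_time": "2017-01-01 10:00:30", "avg_log_write_percent": 98.7},
    {"end_time": "2017-01-01 10:00:15", "avg_log_write_percent": 100.0},
    {"end_time": "2017-01-01 10:00:00", "avg_log_write_percent": 12.0},
]
print(log_writes_throttled(sample_rows))
```

Requiring several consecutive samples, rather than a single one, avoids reacting to a brief spike that does not materially affect import duration.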

For larger databases, the import operation can take a long time, and should be monitored. If using Azure Portal, use the Import/Export history tile under the Operations section on the SQL server Overview blade, and examine the operations in progress. Completion percentage and current operation status are displayed.

If using PowerShell, the Get-AzureRmSqlDatabaseImportExportStatus cmdlet will return an object that describes the status of the import operation, including completion percentage. This cmdlet requires an OperationStatusLink parameter value (i.e. the identifier for the import operation), which is a field of the object returned by the New-AzureRmSqlDatabaseImport cmdlet when it starts a new import operation.

Once the import operation completes successfully, the server firewall rule that allowed access from all Azure services can be disabled, and bacpacs uploaded to the storage account can be deleted, to reduce data exposure and exfiltration concerns.

Verify Migrated Data

As with any database migration, before allowing the application to use the database, we should verify, to the extent it is practical and reasonable, that all objects and data in the source database have been successfully migrated. As noted earlier, this is particularly important for a bacpac migration, which does not natively guarantee that included data is transactionally and referentially consistent.

A simple verification mechanism for database metadata is to compare the sets of all user objects in the source and target databases, ensuring that they are the same. Similarly, for each user table, a basic data verification approach is to compare row counts between the source and target databases.

Here is a sample query to return a set of all user objects in a database:

SELECT s.name AS [schema_name],
       o.name AS [object_name],
       o.type_desc AS [object_type_desc]
FROM sys.objects AS o
INNER JOIN sys.schemas AS s
ON o.schema_id = s.schema_id
WHERE s.name <> 'sys'
      AND
      o.is_ms_shipped = 0
      AND
      o.type NOT IN ('IT','S')
;

Here is a sample query to return the row count for each user table in a database:

SELECT s.name AS [schema_name],
       t.name AS table_name,
       SUM(p.rows) AS row_count
FROM sys.partitions AS p
INNER JOIN sys.indexes AS i
ON p.object_id = i.object_id
   AND
   p.index_id = i.index_id
INNER JOIN sys.tables AS t
ON p.object_id = t.object_id
INNER JOIN sys.schemas AS s
ON t.schema_id = s.schema_id
WHERE s.name <> 'sys'
      AND
      t.is_ms_shipped = 0
      AND
      t.type NOT IN ('IT','S')
      AND
      i.type_desc IN ('HEAP','CLUSTERED','CLUSTERED COLUMNSTORE')
GROUP BY s.name, t.name
;

These are quick, but by no means comprehensive verification methods, particularly for verifying full fidelity of migrated data. Either a data compare tool (e.g. SSDT), or the tablediff utility, may be used for a more thorough, but also more time-consuming data comparison between the source and target databases.
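When many databases are migrated, comparing the output of the row count query by eye does not scale. The following is a minimal Python sketch of an automated comparison. It assumes the query has been run against both the source and the target database, and that each result set is available as (schema_name, table_name, row_count) tuples; the function name and the sample data are hypothetical.

```python
def compare_row_counts(source_rows, target_rows):
    """Compare per-table row counts from the source and target databases.

    Each argument is an iterable of (schema_name, table_name, row_count)
    tuples. Names are compared exactly, so case differences are reported
    as differences even if the database collation is case-insensitive.
    """
    source = {(schema, table): int(count) for schema, table, count in source_rows}
    target = {(schema, table): int(count) for schema, table, count in target_rows}

    return {
        # Tables present in the source but absent from the target.
        "missing_in_target": sorted(source.keys() - target.keys()),
        # Tables present in the target but absent from the source.
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        # Tables present in both, with (table, source count, target count).
        "row_count_mismatch": sorted(
            (key, source[key], target[key])
            for key in source.keys() & target.keys()
            if source[key] != target[key]
        ),
    }


# Illustrative data only.
source_counts = [("dbo", "Orders", 100), ("dbo", "Customers", 20), ("sales", "Archive", 5)]
target_counts = [("dbo", "Orders", 100), ("dbo", "Customers", 19), ("dbo", "Extra", 1)]

report = compare_row_counts(source_counts, target_counts)
for category, items in report.items():
    print(category, items)
```

A migration script could treat any non-empty list in the report as a verification failure. The same set-difference approach applies to the output of the user objects query, using (schema_name, object_name, object_type_desc) tuples as keys.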

Grant Database Access

Once the migrated database is successfully verified, we can grant database access to any server logins that were created previously, or create database authentication principals (users with passwords, or external provider users if using AAD authentication). This step should be implemented with an idempotent script that creates database users for server logins (and/or creates database users with passwords, and/or creates database users from external provider), and grants them database role membership and explicit object permissions as needed.
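As an illustration of what idempotent means here, the following minimal Python sketch generates T-SQL that creates a database user for a server login and adds it to database roles only if that has not been done already, so the generated script can be rerun safely. The helper names are hypothetical; the db_datareader role is used only as an example, and explicit object permissions are not covered. Review the generated statements before executing them.

```python
def quote_ident(name):
    """Bracket-quote a T-SQL identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value):
    """Quote a value as a T-SQL Unicode string literal."""
    return "N'" + value.replace("'", "''") + "'"


def grant_access_sql(login, user, roles):
    """Return an idempotent T-SQL script granting database access to a login.

    The script creates the user only if it does not exist, and adds it to
    each role only if it is not already a member.
    """
    statements = [
        f"IF NOT EXISTS (SELECT 1 FROM sys.database_principals WHERE name = {quote_literal(user)})\n"
        f"    CREATE USER {quote_ident(user)} FOR LOGIN {quote_ident(login)};"
    ]
    for role in roles:
        statements.append(
            f"IF IS_ROLEMEMBER({quote_literal(role)}, {quote_literal(user)}) = 0\n"
            f"    ALTER ROLE {quote_ident(role)} ADD MEMBER {quote_ident(user)};"
        )
    return "\n".join(statements)


# AppLogin1 is the sample login created earlier in this article.
script = grant_access_sql("AppLogin1", "AppLogin1", ["db_datareader"])
print(script)
```

The generated script is meant to be executed in the context of the migrated database, after the corresponding login exists on the logical server.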

Verify Application Functionality

At this point, the database is successfully migrated. However, we should recall that database migration is just a part of the overall application migration to Azure. We still need to confirm that the application functions as expected, that the monitoring tools work, and that the database can be managed successfully. Only once these steps are successfully completed can we declare the application migration successful, and declare the end of migration downtime.

Conclusion

In this article, we went over an implementation of the SQL Server to Azure SQL Database migration that many customers have used as a part of moving applications to the Azure public cloud. We described the preliminary steps needed to prepare the migration, and then focused on the specific implementation details of actual migration. This article will be helpful to organizations considering or actively working on database migration to Azure SQL Database.

Appendix A. Sample Migration Script

A sample PowerShell script that implements the bacpac upload and import steps is available. The script (optionally) uploads all bacpac files found in the specified directory to an Azure Storage account using AzCopy, and then starts concurrent import operations, one for each bacpac found in the specified Azure Blob Storage container. The script continues to run for as long as any of the started import operations are in progress, and periodically outputs the status of all in-progress or failed operations.

Prior to completion, the script throws an exception if any import operations have failed, or if there are any source bacpacs without a matching database on the target logical server.

The latest version of the script can be found in this GitHub repo.

Comments

- Anonymous

October 27, 2016

Articles like this are like gold. I do wonder MS compares SQL Databases on the same virtual server or across different virtual servers that need to return identical results for both the objects and the row counts in an automated fashion. The queries in SSMS as one-offs are easy but with multiple databases, its a different case and need heavy-lifting automation.

- Anonymous

April 11, 2017

A bit chatty for my taste, and fails to provide sufficient detail on the actual import step, I do not see in the current portal where one specifies the import. Is the Powershell script the only documented way? Can SQLPackage do it? Frustrating.

- Anonymous

April 20, 2017

Importing from portal is certainly supported. On the details blade for a SQL server, there is the Import database button at the top. SqlPackage can be used as well. Here is a sample command:

SqlPackage /Action:Import /TargetServerName:SampleSQLServer.sample.net,1433 /TargetUser:CloudSA /TargetPassword:secret /SourceFile:"F:\Temp\SampleDatabase.bacpac"

- Anonymous