Windows Azure Storage Client Library 2.0 Tables Deep Dive

This blog post serves as an overview of the table implementation in the recently released Windows Azure Storage Client for .Net and the Windows Runtime. In addition to the legacy implementation shipped in versions 1.x that is based on DataServiceContext, we have also provided a more streamlined implementation that is optimized for common NoSQL scenarios.

Note, if you are migrating an existing application from a previous release of the SDK please see the overview and migration guide posts.

New Table implementation

The new table implementation is provided in the Microsoft.WindowsAzure.Storage.Table namespace. There are three key areas we emphasized in the design of the new table implementation: usability, extensibility, and performance. The basic scenarios are simple and “just work”; in addition, we have also provided two distinct extension points to allow developers to customize the client behaviors to their specific scenario. We have also maintained a degree of consistency with the other storage clients (Blob and Queue) so that moving between them feels seamless.

With the addition of this new implementation, users effectively have three different patterns to choose from when programming against table storage. A high-level summary of each and a brief description of the benefits they offer are provided below.

- Table Service Layer via TableEntity – This approach offers significant performance and latency improvements over WCF Data Services, but still offers the ability to define POCO objects in a similar fashion without having to write serialization / deserialization logic. The optional EntityResolver makes it easy to work with heterogeneous entity types returned via queries without any additional client objects or overhead. Users can also customize the serialization behavior of their entities by overriding the ReadEntity or WriteEntity methods. The Table Service layer does not currently expose an IQueryable, meaning that queries need to be manually constructed (helper functions are exposed via static methods on the TableQuery class, see below for more). For an example see the NoSQL scenario below.

- Table Service Layer via DynamicTableEntity – This approach is provided to allow users direct access to a dictionary of key-value pairs. This is particularly useful for more advanced scenarios such as defining entities whose property names are dictated at runtime, entities with a large number of properties, server-side projections, and bulk updates of heterogeneous data. Since DynamicTableEntity implements the ITableEntity interface, all results, including projections, can be persisted back to the server. For an example see the Heterogeneous Update scenario below.

- WCF Data Services – Similar to the legacy 1.7x implementation, this approach exposes an IQueryable, allowing users to construct complex queries via LINQ. Because it utilizes greater system resources, this approach is recommended for users with existing code assets and for queries that are not latency sensitive. The WCF Data Services based implementation has been migrated to the Microsoft.WindowsAzure.Storage.Table.DataServices namespace. For additional details see the DataServices section below.

Note, a similar table implementation using WCF Data Services is not provided in the recently released Windows 8 library due to limitations when projecting to various supported languages.

Dependencies

The new table implementation utilizes the ODataLib components to provide the over-the-wire protocol implementation. These libraries are available via NuGet (see the Resources section below). Additionally, to maintain compatibility with previous versions of the SDK, the client library has a dependency on System.Data.Services.Client.dll, which is part of the .Net platform. Please note that the current WCF Data Services standalone installer contains version 5.0.0 assemblies; referencing these assemblies will result in a runtime failure.

You can resolve these dependencies as shown below:

NuGet

To install Windows Azure Storage, run the following command in the Package Manager Console.

PM> Install-Package WindowsAzure.Storage

This will automatically resolve any needed dependencies and add them to your project.

Windows Azure SDK for .NET - October 2012 release

- Install the SDK (https://www.windowsazure.com/en-us/develop/net/ click on the “install the SDK” button)

- Create a project and add a reference to %Program Files%\Microsoft SDKs\Windows Azure\.NET SDK\2012-10\ref\Microsoft.WindowsAzure.Storage.dll

- In Visual Studio go to Tools > Library Package Manager > Package Manager Console and execute the following command.

PM> Install-Package Microsoft.Data.OData -Version 5.0.2

Performance

The new table implementation has shown significant performance improvements over the updated DataServices implementation and the previous versions of the SDK. Depending on the operation, latencies have improved by between 25% and 75%, while system resource utilization has also decreased significantly. Queries alone are over twice as fast and consume far less memory. We will have more details in a future Performance blog.

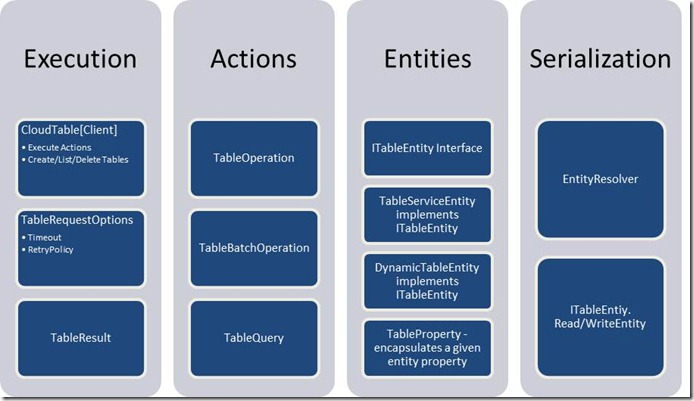

Object Model

A diagram of the table object model is provided below. The core flow of the client is that a user defines an action (TableOperation, TableBatchOperation, or TableQuery) over entities in the Table service and executes these actions via the CloudTableClient. For usability, these classes provide static factory methods to assist in the definition of actions.

For example, the code below inserts a single entity:

CloudTable table = tableClient.GetTableReference([TableName]);

table.Execute(TableOperation.Insert(entity));

Execution

CloudTableClient

Similar to the other Azure Storage clients, the table client provides a logical service client, CloudTableClient, which is responsible for service wide operations and enables execution of other operations. The CloudTableClient class can update the Storage Analytics settings for the Table service, list all tables in the account, and can create references to client side CloudTable objects, among other operations.

CloudTable

A CloudTable object is used to perform operations directly on a given table (Create, Delete, SetPermissions, etc.), and is also used to execute entity operations against the given table.

TableRequestOptions

The TableRequestOptions class defines additional parameters which govern how a given operation is executed, specifically the timeouts and RetryPolicy that are applied to each request. The CloudTableClient provides default timeouts and RetryPolicy settings; TableRequestOptions can override them for a particular operation.

TableResult

The TableResult class encapsulates the result of a single TableOperation. This object includes the HTTP status code, the ETag, and a weakly typed reference to the associated entity. For TableBatchOperations, the CloudTable.ExecuteBatch method will return a collection of TableResults whose order corresponds with the order of the TableBatchOperation. For example, the first element returned in the resulting collection will correspond to the first operation defined in the TableBatchOperation.

Actions

TableOperation

The TableOperation class encapsulates a single operation to be performed against a table. Static factory methods are provided to create a TableOperation that will perform an Insert, Delete, Merge, Replace, Retrieve, InsertOrReplace, or InsertOrMerge operation on the given entity. TableOperations can be reused so long as the associated entity is updated. As an example, a client wishing to use table storage as a heartbeat mechanism could define a merge operation on an entity and execute it periodically to update the entity state on the server.

Sample – Inserting an Entity into a Table

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

// Create the table client.

CloudTableClient tableClient = storageAccount.CreateCloudTableClient();

CloudTable peopleTable = tableClient.GetTableReference("people");

peopleTable.CreateIfNotExists();

// Create a new customer entity.

CustomerEntity customer1 = new CustomerEntity("Harp", "Walter");

customer1.Email = "Walter@contoso.com";

customer1.PhoneNumber = "425-555-0101";

// Create an operation to add the new customer to the people table.

TableOperation insertCustomer1 = TableOperation.Insert(customer1);

// Submit the operation to the table service.

peopleTable.Execute(insertCustomer1);

TableBatchOperation

The TableBatchOperation class represents multiple TableOperation objects which are executed as a single atomic action within the table service. There are a few restrictions on batch operations that should be noted:

- You can perform batch updates, deletes, inserts, merge and replace operations.

- A batch operation can have a retrieve operation, if it is the only operation in the batch.

- A single batch operation can include up to 100 table operations.

- All entities in a single batch operation must have the same partition key.

- A batch operation is limited to a 4MB data payload.

The CloudTable.ExecuteBatch method takes a TableBatchOperation as input and returns an IList of TableResults that correspond in order to the entries in the batch. For example, the result of a merge operation that is first in the batch will be the first entry in the returned IList of TableResults. In the case of an error, the server may return a numerical ID as part of the error message that corresponds to the sequence number of the failed operation in the batch; if the failure is not associated with a specific command (for example, ServerBusy), -1 is returned. TableBatchOperations, or Entity Group Transactions, are executed atomically: either all operations succeed or, if any individual operation causes an error, the entire batch fails.
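The restrictions above mean that a client writing an arbitrary set of entities has to group them before submitting. The sketch below shows one possible way to plan batches in plain C#: group by partition key, then split each group into chunks of at most 100 items. It is not part of the storage client library; `BatchPlanner`, `Plan`, and `partitionKeySelector` are hypothetical names introduced for illustration, and the 4MB payload limit is not checked.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not part of the storage client library.
public static class BatchPlanner
{
    // Maximum number of operations allowed in a single batch.
    public const int MaxBatchSize = 100;

    // Groups items by partition key, then splits each group into chunks
    // of at most MaxBatchSize items. Each returned list could be turned
    // into one TableBatchOperation. The 4MB payload limit is NOT checked.
    public static List<List<T>> Plan<T>(IEnumerable<T> items, Func<T, string> partitionKeySelector)
    {
        var batches = new List<List<T>>();
        foreach (IGrouping<string, T> group in items.GroupBy(partitionKeySelector))
        {
            List<T> current = null;
            foreach (T item in group)
            {
                // Start a new chunk for each group and whenever the chunk is full.
                if (current == null || current.Count == MaxBatchSize)
                {
                    current = new List<T>();
                    batches.Add(current);
                }
                current.Add(item);
            }
        }
        return batches;
    }
}
```

Each inner list can then be added to a fresh TableBatchOperation (for example with batchOperation.Insert, as in the sample below) and submitted with CloudTable.ExecuteBatch. Keeping the planning step separate from execution makes it easy to test without a storage account.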

Sample – Insert two entities in a single atomic Batch Operation

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

// Create the table client.

CloudTableClient tableClient = storageAccount.CreateCloudTableClient();

CloudTable peopleTable = tableClient.GetTableReference("people");

peopleTable.CreateIfNotExists();

// Define a batch operation.

TableBatchOperation batchOperation = new TableBatchOperation();

// Create a customer entity and add to the table

CustomerEntity customer = new CustomerEntity("Smith", "Jeff");

customer.Email = "Jeff@contoso.com";

customer.PhoneNumber = "425-555-0104";

batchOperation.Insert(customer);

// Create another customer entity and add to the table

CustomerEntity customer2 = new CustomerEntity("Smith", "Ben");

customer2.Email = "Ben@contoso.com";

customer2.PhoneNumber = "425-555-0102";

batchOperation.Insert(customer2);

// Submit the operation to the table service.

peopleTable.ExecuteBatch(batchOperation);

TableQuery

The TableQuery class is a lightweight query mechanism used to define queries to be executed against the table service. See “Querying” below.

Entities

ITableEntity interface

The ITableEntity interface is used to define an object that can be serialized and deserialized with the table client. It contains the PartitionKey, RowKey, Timestamp, and ETag properties, as well as methods to read and write the entity. This interface is implemented by the TableEntity and DynamicTableEntity entity types that are included in the library; a client may implement this interface directly to persist different types of objects or objects from 3rd-party libraries. By implementing the ITableEntity.ReadEntity and ITableEntity.WriteEntity methods, a client may customize the serialization logic for a given entity type.

TableEntity

The TableEntity class is an implementation of the ITableEntity interface and contains the RowKey, PartitionKey, and Timestamp properties. The default serialization logic TableEntity uses is based off of reflection where all public properties of a supported type that define both get and set are serialized. This will be discussed in greater detail in the extension points section below. This class is not sealed and may be extended to add additional properties to an entity type.

Sample – Define a POCO that extends TableEntity

// This class defines one additional property of integer type, since it derives from

// TableEntity it will be automatically serialized and deserialized.

public class SampleEntity : TableEntity

{

public int SampleProperty { get; set; }

}

DynamicTableEntity

The DynamicTableEntity class allows clients to update heterogeneous entity types without the need to define base classes or special types. The DynamicTableEntity class defines the required properties for RowKey, PartitionKey, Timestamp, and ETag; all other properties are stored in an IDictionary. Aside from the convenience of not having to define concrete POCO types, this can also provide increased performance by not having to perform serialization or deserialization tasks.

Sample – Retrieve a single property on a collection of heterogeneous entities

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

// Define the query to retrieve the entities, notice in this case we

// only need to retrieve the Count property.

TableQuery query = new TableQuery().Select(new string[] { "Count" });

// Note the TableQuery is actually executed when we iterate over the

// results. Also, this sample uses the DynamicTableEntity to avoid

// having to worry about various types, as well as avoiding any

// serialization processing.

foreach (DynamicTableEntity entity in myTable.ExecuteQuery(query))

{

// Users should always assume property is not there in case another client removed it.

EntityProperty countProp;

if (!entity.Properties.TryGetValue("Count", out countProp))

{

throw new ArgumentNullException("Invalid entity, Count property not found!");

}

// Display Count property, however you could modify it here and persist it back to the service.

Console.WriteLine(countProp.Int32Value);

}

Note: an ExecuteQuery equivalent is not provided in the Windows Runtime library in keeping with best practice for the platform. Instead use the ExecuteQuerySegmentedAsync method to execute the query in a segmented fashion.

EntityProperty

The EntityProperty class encapsulates a single property of an entity for the purposes of serialization and deserialization. The only time the client has to work directly with EntityProperties is when using DynamicTableEntity or implementing the TableEntity.ReadEntity and TableEntity.WriteEntity methods.

The samples below show two approaches that can be used to update a player’s score property. The first approach uses DynamicTableEntity to avoid having to declare a client side object and updates the property directly, whereas the second will deserialize the entity into a POCO and update that object directly.

Sample – Update of entity property using EntityProperty

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

// Retrieve entity

TableResult res = gamerTable.Execute(TableOperation.Retrieve("Smith", "Jeff"));

DynamicTableEntity player = (DynamicTableEntity)res.Result;

// Retrieve Score property

EntityProperty scoreProp;

// Users should always assume property is not there in case another client removed it.

if (!player.Properties.TryGetValue("Score", out scoreProp))

{

throw new ArgumentNullException("Invalid entity, Score property not found!");

}

scoreProp.Int32Value += 1;

// Store the updated score

gamerTable.Execute(TableOperation.Merge(player));

Sample – Update of entity property using POCO

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

public class GamerEntity : TableEntity

{

public int Score { get; set; }

}

// Retrieve entity

TableResult res = gamerTable.Execute(TableOperation.Retrieve<GamerEntity>("Smith", "Jeff"));

GamerEntity player = (GamerEntity)res.Result;

// Update Score

player.Score += 1;

// Store the updated score

gamerTable.Execute(TableOperation.Merge(player));

EntityResolver

The EntityResolver delegate allows client-side projection and processing for each entity during serialization and deserialization. This is designed to provide custom client side projections, query-specific filtering, and so forth. This enables key scenarios such as deserializing a collection of heterogeneous entities from a single query.

Sample – Use EntityResolver to perform client side projection

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

// Define the query to retrieve the entities, notice in this case we only need

// to retrieve the Email property.

TableQuery<TableEntity> query = new TableQuery<TableEntity>().Select(new string[] { "Email" });

// Define an EntityResolver to mutate the entity payload upon retrieval.

// In this case we will simply return a string representing the customer's Email address.

EntityResolver<string> resolver = (pk, rk, ts, props, etag) => props.ContainsKey("Email") ? props["Email"].StringValue : null;

// Display the results of the query, note that the query now returns

// strings instead of entity types since this is the type of EntityResolver we created.

foreach (string projectedString in gamerTable.ExecuteQuery(query, resolver, null /* RequestOptions */, null /* OperationContext */))

{

Console.WriteLine(projectedString);

}

Querying

There are two query constructs in the table client: a retrieve TableOperation, which addresses a single unique entity, and a TableQuery, which is a standard query mechanism used against multiple entities in a table. Both querying constructs need to be used in conjunction with either a class type that implements the ITableEntity interface or with an EntityResolver, which will provide custom deserialization logic.

Retrieve

A retrieve operation is a query which addresses a single entity in the table by specifying both its PartitionKey and RowKey. This is exposed via TableOperation.Retrieve and TableBatchOperation.Retrieve and executed like a typical operation via the CloudTable.

Sample – Retrieve a single entity

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

// Create the table client.

CloudTableClient tableClient = storageAccount.CreateCloudTableClient();

CloudTable peopleTable = tableClient.GetTableReference("people");

// Retrieve the entity with partition key of "Smith" and row key of "Jeff"

TableOperation retrieveJeffSmith = TableOperation.Retrieve<CustomerEntity>("Smith", "Jeff");

// Retrieve entity

CustomerEntity specificEntity = (CustomerEntity)peopleTable.Execute(retrieveJeffSmith).Result;

TableQuery

TableQuery is a lightweight object that represents a query for a given set of entities and encapsulates all query operators currently supported by the Windows Azure Table service. Note, for this release we have not provided an IQueryable implementation, so developers who are migrating applications to the 2.0 release and wish to leverage the new table implementation will need to reconstruct their queries using the provided syntax. The code below produces a query to take the top 5 results which have a RowKey greater than or equal to 5.

Sample – Query top 5 entities with RowKey greater than or equal to 5

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

TableQuery<TableEntity> query = new TableQuery<TableEntity>().Where(TableQuery.GenerateFilterCondition("RowKey", QueryComparisons.GreaterThanOrEqual, "5")).Take(5);

In order to provide support for JavaScript in the Windows Runtime, the TableQuery can be used via the concrete type TableQuery, or in its generic form TableQuery<EntityType> where the results will be deserialized to the given type. When specifying an entity type to deserialize entities to, the EntityType must implement the ITableEntity interface and provide a parameterless constructor.

The TableQuery object provides methods for take, select, and where. Static methods such as GenerateFilterCondition, GenerateFilterConditionFor*, and CombineFilters are provided to construct filter strings. Some examples of constructing filters over various types are shown below:

// 1. Filter on String

TableQuery.GenerateFilterCondition("Prop", QueryComparisons.GreaterThan, "foo");

// 2. Filter on GUID

TableQuery.GenerateFilterConditionForGuid("Prop", QueryComparisons.Equal, new Guid());

// 3. Filter on Long

TableQuery.GenerateFilterConditionForLong("Prop", QueryComparisons.GreaterThan, 50L);

// 4. Filter on Double

TableQuery.GenerateFilterConditionForDouble("Prop", QueryComparisons.GreaterThan, 50.50);

// 5. Filter on Integer

TableQuery.GenerateFilterConditionForInt("Prop", QueryComparisons.GreaterThan, 50);

// 6. Filter on Date

TableQuery.GenerateFilterConditionForDate("Prop", QueryComparisons.LessThan, DateTime.Now);

// 7. Filter on Boolean

TableQuery.GenerateFilterConditionForBool("Prop", QueryComparisons.Equal, true);

// 8. Filter on Binary

TableQuery.GenerateFilterConditionForBinary("Prop", QueryComparisons.Equal, new byte[] { 0x01, 0x02, 0x03 });

Sample – Query all entities with a PartitionKey equal to "samplePK" and a RowKey greater than or equal to "5" and less than "10"

string pkFilter = TableQuery.GenerateFilterCondition("PartitionKey", QueryComparisons.Equal, "samplePK");

string rkLowerFilter = TableQuery.GenerateFilterCondition("RowKey", QueryComparisons.GreaterThanOrEqual, "5");

string rkUpperFilter = TableQuery.GenerateFilterCondition("RowKey", QueryComparisons.LessThan, "10");

// Note CombineFilters has the effect of "([Expression1]) Operator ([Expression2])", so passing in a complex expression will result in a logical grouping.

string combinedRowKeyFilter = TableQuery.CombineFilters(rkLowerFilter, TableOperators.And, rkUpperFilter);

string combinedFilter = TableQuery.CombineFilters(pkFilter, TableOperators.And, combinedRowKeyFilter);

// OR, equivalently, build the same filter string directly:

combinedFilter = string.Format("({0}) {1} ({2}) {3} ({4})", pkFilter, TableOperators.And, rkLowerFilter, TableOperators.And, rkUpperFilter);

TableQuery<SampleEntity> query = new TableQuery<SampleEntity>().Where(combinedFilter);

Note: There is no logical expression tree provided in the current release, and as a result repeated calls to the fluent methods on TableQuery overwrite the relevant aspect of the query.

Note that the TableOperators and QueryComparisons classes define string constants for all supported operators and comparisons:

TableOperators

- And

- Or

- Not

QueryComparisons

- Equal

- NotEqual

- GreaterThan

- GreaterThanOrEqual

- LessThan

- LessThanOrEqual
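To make the grouping effect of CombineFilters described earlier more concrete, the sketch below mimics the shape of the resulting filter strings in plain C#. `FilterSketch`, `Condition`, and `Combine` are hypothetical stand-ins written for illustration, not the library's implementation, and the library's actual output may differ in details. The operator strings "eq", "ge", "lt", and "and" are the OData forms of Equal, GreaterThanOrEqual, LessThan, and And.

```csharp
using System;

// Hypothetical stand-ins that mimic the shape of the filter strings;
// they are not the storage client library's implementation.
public static class FilterSketch
{
    // Builds "<property> <op> '<value>'" for a string-typed property.
    public static string Condition(string property, string op, string value)
    {
        // OData string literals escape a single quote by doubling it.
        return string.Format("{0} {1} '{2}'", property, op, value.Replace("'", "''"));
    }

    // Wraps both operands in parentheses, so a complex operand
    // becomes a logical group in the combined expression.
    public static string Combine(string left, string op, string right)
    {
        return string.Format("({0}) {1} ({2})", left, op, right);
    }

    public static string BuildSampleFilter()
    {
        string pk = Condition("PartitionKey", "eq", "samplePK");
        string rkLower = Condition("RowKey", "ge", "5");
        string rkUpper = Condition("RowKey", "lt", "8");
        // Returns:
        // (PartitionKey eq 'samplePK') and ((RowKey ge '5') and (RowKey lt '8'))
        return Combine(pk, "and", Combine(rkLower, "and", rkUpper));
    }
}
```

Because PartitionKey and RowKey are strings, range comparisons on them are lexical rather than numeric, which is why the bounds in this sketch are single characters.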

Scenarios

NoSQL

A common pattern in a NoSQL datastore is to store related entities with different schemas in the same table. In the sample below, we will persist a group of heterogeneous shapes that make up a given drawing. In our case, the PartitionKey for our entities will be a drawing name, which allows us to retrieve and alter a set of shapes together in an atomic manner. The challenge becomes how to work with these heterogeneous entities on the client side in an efficient and usable manner.

The table client provides an EntityResolver delegate which allows client side logic to execute during deserialization. In the scenario detailed above, let’s use a base entity class named ShapeEntity which extends TableEntity. This base shape type will define all properties common to a given shape, such as its color fields and its X and Y coordinates in the drawing.

public class ShapeEntity : TableEntity

{

public virtual string ShapeType { get; set; }

public double PosX { get; set; }

public double PosY { get; set; }

public int ColorA { get; set; }

public int ColorR { get; set; }

public int ColorG { get; set; }

public int ColorB { get; set; }

}

Now we can define some shape types that derive from the base ShapeEntity class. In the sample below we define a rectangle which will have a Width and Height property. Note, this child class also overrides ShapeType for serialization purposes. For brevity’s sake the Line and Ellipse entities are omitted here; however, you can imagine representing other types of shapes, such as triangles and trapezoids, in different child entity types.

public class RectangleEntity : ShapeEntity

{

public double Width { get; set; }

public double Height { get; set; }

public override string ShapeType

{

get { return "Rectangle"; }

set {/* no op */}

}

}

Now we can define a query to load all of the shapes associated with our drawing and an EntityResolver that will resolve each entity to the correct child class. Note that in this example, aside from setting the core properties PartitionKey, RowKey, Timestamp, and ETag, we did not have to write any custom deserialization logic and instead rely on the built-in deserialization logic provided by TableEntity.ReadEntity.

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

TableQuery<ShapeEntity> drawingQuery = new TableQuery<ShapeEntity>().Where(TableQuery.GenerateFilterCondition("PartitionKey", QueryComparisons.Equal, "DrawingName"));

EntityResolver<ShapeEntity> shapeResolver = (pk, rk, ts, props, etag) =>

{

ShapeEntity resolvedEntity = null;

string shapeType = props["ShapeType"].StringValue;

if (shapeType == "Rectangle") { resolvedEntity = new RectangleEntity(); }

else if (shapeType == "Ellipse") { resolvedEntity = new EllipseEntity(); }

else if (shapeType == "Line") { resolvedEntity = new LineEntity(); }

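// Throw if an unknown shape is detected; otherwise the assignments below would dereference null.

if (resolvedEntity == null) { throw new InvalidOperationException("Unknown shape type: " + shapeType); }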

resolvedEntity.PartitionKey = pk;

resolvedEntity.RowKey = rk;

resolvedEntity.Timestamp = ts;

resolvedEntity.ETag = etag;

resolvedEntity.ReadEntity(props, null);

return resolvedEntity;

};

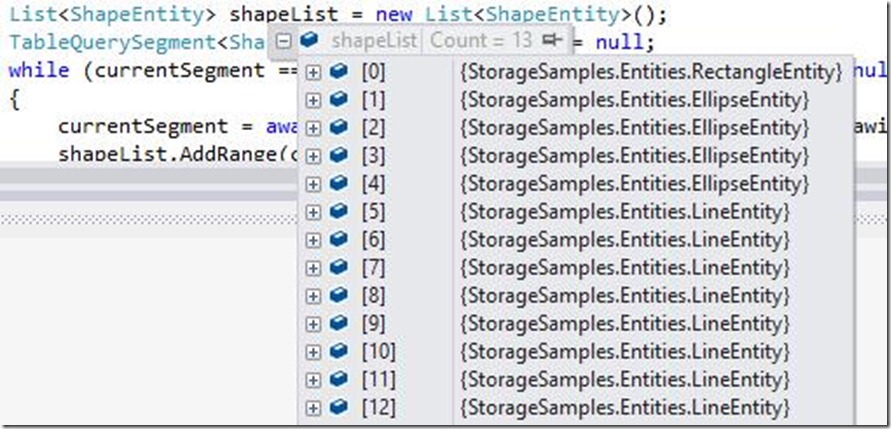

Now we can execute this query in a segmented Asynchronous manner in order to keep our UI fast and fluid. The code below is written using the Async Methods exposed by the client library for Windows Runtime.

List<ShapeEntity> shapeList = new List<ShapeEntity>();

TableQuerySegment<ShapeEntity> currentSegment = null;

while (currentSegment == null || currentSegment.ContinuationToken != null)

{

currentSegment = await drawingTable.ExecuteQuerySegmentedAsync(

drawingQuery,

shapeResolver,

currentSegment != null ? currentSegment.ContinuationToken : null);

shapeList.AddRange(currentSegment.Results);

}

Once we execute this we can see the resulting collection of ShapeEntities contains shapes of various entity types.

Heterogeneous update

In some cases it may be required to update entities regardless of their type or other properties. Let’s say we have a table named “employees”. This table contains entity types for developers, secretaries, contractors, and so forth. The example below shows how to query all entities in a given partition (in our example the state the employee works in is used as the PartitionKey) and update their salaries regardless of job position. Since we are using merge, the only property that is going to be updated is the Salary property, and all other information regarding the employee will remain unchanged.

// You will need the following using statements

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Table;

TableQuery query = new TableQuery().Where("PartitionKey eq 'Washington'").Select(new string[] { "Salary" });

// Note: for brevity's sake this sample assumes there are 100 or fewer employees; the client should ensure batches are kept to 100 operations or fewer.

TableBatchOperation mergeBatch = new TableBatchOperation();

foreach (DynamicTableEntity ent in employeeTable.ExecuteQuery(query))

{

EntityProperty salaryProp;

// Check to see if salary property is present

if (ent.Properties.TryGetValue("Salary", out salaryProp))

{

if (salaryProp.DoubleValue < 50000)

{

// Give a 10% raise

salaryProp.DoubleValue *= 1.1;

}

else if (salaryProp.DoubleValue < 100000)

{

// Give a 5% raise

salaryProp.DoubleValue *= 1.05;

}

mergeBatch.Merge(ent);

}

else {

throw new ArgumentNullException("Entity does not contain Salary!");

}

}

// Execute batch to save changes back to the table service

employeeTable.ExecuteBatch(mergeBatch);

Persisting 3rd party objects

In some cases we may need to persist objects exposed by 3rd party libraries, or those which do not fit the requirements of a TableEntity and cannot be modified to do so. In such cases, the recommended best practice is to encapsulate the 3rd party object in a new client object that implements the ITableEntity interface, and provide the custom serialization logic needed to persist the object to the table service via ITableEntity.ReadEntity and ITableEntity.WriteEntity.

Continuation Tokens

Continuation Tokens can be returned in the segmented execution of a query. One key improvement to the [Blob|Table|Queue]ContinuationTokens in this release is that all properties are now publicly settable and a public default constructor is provided. This, in addition to the IXmlSerializable implementation, allows clients to easily persist a continuation token.

DataServices

The legacy table service implementation has been migrated to the Microsoft.WindowsAzure.Storage.Table.DataServices namespace and updated to support new features in the 2.0 release such as OperationContext, end to end timeouts, and asynchronous cancellation. In addition to the new features, there have been some breaking changes introduced in this release; for a full list please reference the tables section of the Breaking Changes blog post.

Developing in Windows Runtime

A key driver in this release was expanding platform support, specifically targeting the upcoming releases of Windows 8, Windows RT, and Windows Server 2012. As such, we are releasing the following two Windows Runtime components to support Windows Runtime as Community Technology Preview (CTP):

- Microsoft.WindowsAzure.Storage.winmd – A fully projectable storage client that supports JavaScript, C++, C#, and VB. This library contains all core objects as well as support for Blobs, Queues, and a base Tables implementation consumable by JavaScript.

- Microsoft.WindowsAzure.Storage.Table.dll – A table extension library that provides generic query support and strongly typed entities. This is used by non-JavaScript applications to provide strongly typed entities as well as reflection-based serialization of POCO objects.

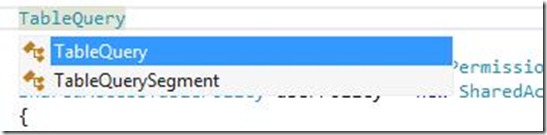

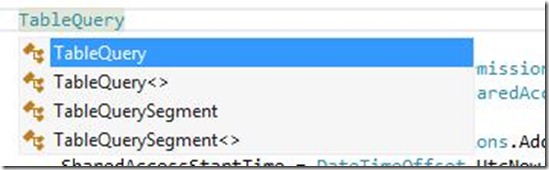

The images below illustrate the Intellisense experience when defining a TableQuery in an application that just reference the core storage component and when the table extension library is used. EntityResolver, and the TableEntity object are also absent in the core storage component, instead all operations are based off of the DynamicTableEntity type.

Intellisense when defining a TableQuery referencing only the core storage component

Intellisense when defining a TableQuery with the table extension library

While most table constructs are the same, you will notice that when developing for the Windows Runtime all synchronous methods are absent which is in keeping with the specified best practice. As such, the equivalent of the desktop method CloudTable.ExecuteQuery which would handle continuation for the user has been removed. Instead developers are supposed to handle this segmented execution in their application and utilize the provided ExecuteQuerySegmentedAsync methods in order to keep their apps fast and fluid.
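The segmented execution pattern described above can be factored into a small reusable loop. The sketch below is plain C# and does not call the storage client library: `Segment<T>`, `SegmentedReader`, `ReadAllAsync`, and `FakeSource` are hypothetical names, and a string stands in for the real continuation token type. In an application, the delegate passed to `ReadAllAsync` would wrap a call such as ExecuteQuerySegmentedAsync.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical stand-in for a query segment: one page of results plus
// a token that is null when there are no more pages.
public class Segment<T>
{
    public List<T> Results { get; set; }
    public string ContinuationToken { get; set; }
}

public static class SegmentedReader
{
    // Repeatedly calls fetchSegment, passing the previous token, until
    // the returned token is null. The first call receives a null token.
    public static async Task<List<T>> ReadAllAsync<T>(Func<string, Task<Segment<T>>> fetchSegment)
    {
        var all = new List<T>();
        string token = null;
        do
        {
            Segment<T> segment = await fetchSegment(token);
            all.AddRange(segment.Results);
            token = segment.ContinuationToken;
        } while (token != null);
        return all;
    }

    // Fake in-memory source that serves the list in pages of pageSize,
    // using the next start index as the token. Purely for illustration.
    public static Func<string, Task<Segment<int>>> FakeSource(List<int> source, int pageSize)
    {
        return token =>
        {
            int start = token == null ? 0 : int.Parse(token);
            int next = start + pageSize;
            return Task.FromResult(new Segment<int>
            {
                Results = source.Skip(start).Take(pageSize).ToList(),
                ContinuationToken = next < source.Count ? next.ToString() : null
            });
        };
    }
}
```

A UI application may prefer to process each segment as it arrives rather than accumulating everything first; the loop structure stays the same, with the AddRange call replaced by whatever per-page work is needed.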

Summary

This blog post has provided an in-depth overview of developing applications that leverage the Windows Azure Table service via the new Storage Client libraries for .Net and the Windows Runtime. Additionally, we have discussed some specific differences to be aware of when migrating existing applications from a previous 1.x release of the SDK.

Joe Giardino

Serdar Ozler

Veena Udayabhanu

Justin Yu

Windows Azure Storage

Resources

Get the Windows Azure SDK for .Net

- Download the Windows Azure SDK

- Windows Azure SDK for .Net source code on GitHub

- How to use the Table Storage Service

- nuget – Windows Azure Storage

- nuget - Microsoft.Data.Edm

- nuget - Microsoft.Data.OData

- nuget - System.Spatial

Comments

Anonymous

November 06, 2012

Hello, We're currently migrating our code base to the new Storage Client library. Everything is going well so far, however during our regression testing we found something important - any property defined as nullable (ex: int?, DateTime?) in our POCO object is not getting deserialized and always stays NULL. This was working previously using the old DataServices library and is very important for us. Is this a known problem, and is there a work-around? Gabriel

Anonymous

November 06, 2012

Regarding my previous comment with nullable values, I looked at the source code and it seems that TableEntity.ReadEntity only handles nullable DateTime values. The rest is not handled.

Anonymous

November 08, 2012

You are correct, both DateTime and DateTimeOffset are currently supported for nullable types. Unfortunately some primitive types are not currently supported. This issue has been fixed and is currently in testing / signing. Please check github / nuget mid next week for the updated binaries. Joe

Anonymous

November 13, 2012

I've just downloaded v2.0.1.0 from nuget today which was released on Monday and I have the same problem as Gabriel Michaud. My int? is always null even if it has a value. Do you have an updated fix date? The new library is unusable until this is fixed.

Anonymous

November 15, 2012

How do we use TPL Dataflow with Azure Tables? Specifically I want to process data as continuation tokens are given to me... rather than wait for the last entity to arrive. Will the TPL "async" Task<T> come to the regular Storage Client in .NET?

Anonymous

November 20, 2012

Is it possible to query based on generics for a TableQuery<T>().Where(...); Like so?

    var exQuery = new TableQuery<T>().Where(TableQuery.GenerateFilterCondition("PartitionKey", QueryComparisons.Equal, partitionKey));

Anonymous

November 20, 2012

@Joey - Please make sure T is defined as "where T : TableEntity, new()" in your method. That will enable you to use T as a generic argument in TableQuery.

Anonymous

November 30, 2012

I figured out how to make TPL work with Azure Storage... I posted it here github.com/makerofthings7/AzureStorageExtensions

Anonymous

January 07, 2013

TableOperation has a 'Merge' option but no 'Update' option, am I missing something here or is there no way to perform a conditional update based on the ETag with this new Library and 'CloudTable' stuff?

Anonymous

February 04, 2013

I have a table that has millions of thousands of entities. I wanted to retrieve items by PartitionKey and limit the number of items to 5. However, using Take(5) doesn't work. Here's my code. Am I missing something?

    public void GetData(string connectionString, string tableName)
    {
        var cloudStorageAccount = CloudStorageAccount.Parse(connectionString);
        var cloudTableClient = cloudStorageAccount.CreateCloudTableClient();
        var cloudTable = cloudTableClient.GetTableReference(tableName);
        var filter = TableQuery.GenerateFilterCondition("PartitionKey", QueryComparisons.Equal, "Test_Service:20121016");
        var query = new TableQuery<CustomTableEntity>().Where(filter).Take(5);
        foreach (var item in cloudTable.ExecuteQuery<CustomTableEntity>(query))
        {
            Trace.TraceInformation(string.Format("{0} - {1} - {2}", item.PartitionKey, item.RowKey, item.Timestamp));
        }
    }

    public class CustomTableEntity : TableEntity { }

This code returns all data where the PartitionKey is "Test_Service:20121016" and doesn't give me the Top 5 or First 5 items.

Anonymous

February 11, 2013

@Arvin There was an issue regarding the correct handling of takecount that was resolved in 2.0.4. You can get the source on github (github.com/.../azure-sdk-for-net) or the assembly via nuget (www.nuget.org/.../WindowsAzure.Storage).

Anonymous

February 11, 2013

@Roy Herrod The nomenclature is slightly different from WCF Data Services, which abstracted away much of the protocol and underlying implementation. When you "update" an entity you can either merge the payload with the entity on the server or replace it completely. For example, if the entity on the server contains properties A, B, C, and you were to MERGE an entity with properties C', D, E, F, the resulting entity would contain A, B, C', D, E, F. If you were to do the same operation with REPLACE, the resulting entity would contain only C', D, E, F. To accomplish these semantics via WCF Data Services you would have used:

Merge

    ...
    ctx.UpdateObject(mergeEntity);
    ctx.SaveChangesWithRetries();
    ...

Replace

    ...
    ctx.UpdateObject(replaceEntity);
    ctx.SaveChangesWithRetries(SaveChangesOptions.ReplaceOnUpdate);
    ...

As far as the conditional aspect, both the Merge and Replace TableOperations will send the If-Match header and are therefore conditional. To perform an operation regardless of state you can utilize the InsertOrMerge or InsertOrReplace operations, which will not send the etag. This "Upsert" semantic will perform the action even if the entity does not exist on the server or is an older version. Hope this helps, Joe

Anonymous

February 22, 2013

What is the 2.0 equivalent of the following? context.IgnoreMissingProperties = true/false In other words, how do I accomplish this using the 2.0 client libraries.

Anonymous

February 23, 2013

@Scott Ignore missing properties simply will continue if a given property is not found on the entity. This is the default behavior of the new TSL API (i.e. similar to IgnoreMissingProperties=true in WCF Data Services). In many cases the deserialization is controlled by the user or is consumed by a non-POCO type (for example DynamicTableEntity or using an EntityResolver). If your requirement is to assert for a given property not found on a POCO type (i.e. IgnoreMissingProperties = false) then you can accomplish this by overriding the ITableEntity.ReadEntity method. Joe

Anonymous

February 26, 2013

Thanks Joe...That was exactly what I needed! That also explains why Partition/RowKey are no longer virtual - in TableEntity. Custom (de)serialization of everything can now be handled by overriding (Read/Write)Entity.

Anonymous

August 19, 2014

Hi, I'm not sure what you mean when you are describing the TableEntity and you say "This class is sealed and may be extended to add additional properties to an entity type." If the class can be extended (which it can), it is not sealed in the reserved C# keyword sense of the word.

Anonymous

August 20, 2014

@Nigel, TableEntity class is NOT sealed. Post was missing the 'NOT' and has been fixed. Thanks for bringing it to our attention.

Anonymous

December 10, 2014

We still don't have PowerShell cmdlets for managing (reading, writing) data in Azure Storage tables. Is there a PowerShell version of this C# code that we can use? I am not a C# developer. I know PowerShell, as I work in operations and perform system admin tasks through PowerShell.