What is Semantic Kernel?

Semantic Kernel is an open-source SDK that lets you easily build agents that can call your existing code. Because the SDK is highly extensible, you can use it with models from OpenAI, Azure OpenAI, Hugging Face, and more! By combining your existing C#, Python, and Java code with these models, you can build agents that answer questions and automate processes.

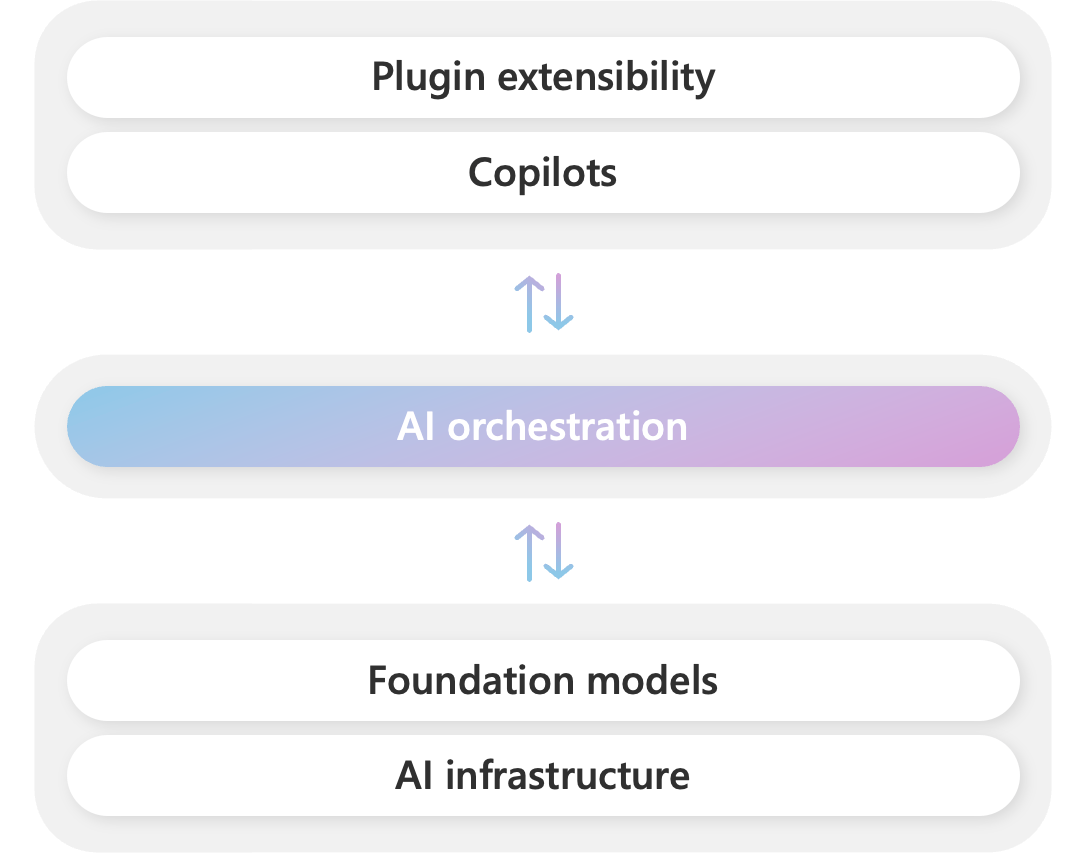

Semantic Kernel is at the center of the agent stack

During Kevin Scott's talk "The era of the AI Copilot," he demonstrated how Microsoft powers its Copilot system with a stack consisting of AI models and plugins. At the center of this stack is an AI orchestration layer that allows Microsoft to combine AI models and plugins to create brand-new experiences for users.

To help developers build their own Copilot experiences on top of AI plugins, we have released Semantic Kernel, a lightweight open-source SDK that allows you to orchestrate plugins (i.e., your existing code) with AI. With Semantic Kernel, you can leverage the same AI orchestration patterns that power Microsoft's Copilots in your own apps.

Tip

If you are interested in seeing a sample of the copilot stack in action (with Semantic Kernel at the center of it), check out Project Miyagi. Project Miyagi reimagines the design, development, and deployment of intelligent applications on top of Azure with all of the latest AI services and tools.

Why use an SDK like Semantic Kernel?

Today's AI models can easily generate messages and images for users. While this is helpful when building a simple chat app, it is not enough to build fully automated AI agents that can automate business processes and empower users to achieve more. To do so, you would need a framework that can take the responses from these models and use them to call existing code to actually do something productive.

With Semantic Kernel, we've done just that. We've created an SDK that lets you describe your existing code to AI models so that the models can request calls to it. Semantic Kernel then does the heavy lifting of translating the model's response into an actual call to your code.
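As a rough illustration of the second half of that job, the sketch below turns a model's request into a method call using plain .NET reflection. It is not how Semantic Kernel is implemented: `ToyToolCall`, `ToyLight`, and `ToyDispatcher` are hypothetical names, and the request shape (a function name plus one string argument) is a simplification of the structured function-call messages real models return.

```csharp
using System;
using System.Globalization;
using System.Reflection;

// Hypothetical stand-in for the "please call this function" request a model sends back.
public record ToyToolCall(string FunctionName, string? Argument);

// Hypothetical plain class playing the role of your existing code.
public class ToyLight
{
    public bool IsOn { get; private set; }

    public string GetState() => IsOn ? "on" : "off";

    public string ChangeState(bool newState)
    {
        IsOn = newState;
        return GetState();
    }
}

public static class ToyDispatcher
{
    // Looks up the public instance method named in the request and invokes it.
    // Supports methods with zero or one parameter to keep the sketch short;
    // the string argument is converted to the parameter's declared type.
    public static object? Dispatch(object target, ToyToolCall call)
    {
        MethodInfo method = target.GetType().GetMethod(
                call.FunctionName, BindingFlags.Public | BindingFlags.Instance)
            ?? throw new InvalidOperationException($"Unknown function '{call.FunctionName}'.");

        ParameterInfo[] parameters = method.GetParameters();
        object?[] args = parameters.Length == 0
            ? Array.Empty<object?>()
            : new object?[]
            {
                Convert.ChangeType(call.Argument, parameters[0].ParameterType, CultureInfo.InvariantCulture)
            };

        return method.Invoke(target, args);
    }
}
```

For example, `ToyDispatcher.Dispatch(new ToyLight(), new ToyToolCall("ChangeState", "true"))` returns `"on"`. Unlike this sketch, which will invoke any public instance method, a real framework should only expose methods you have explicitly marked for the AI; in Semantic Kernel that marking is the `[KernelFunction]` attribute.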

To see how this works, let's build a simple AI agent that can turn on and off a lightbulb.

How many agents does it take to change a lightbulb?

Let's say you wanted an AI agent to be able to turn on and off a lightbulb. In a real business scenario, you may want the AI to perform more complex tasks, like sending emails and updating databases, but even in those scenarios, you would still follow the same steps.

First, you need code that can change the state of the lightbulb. This is fairly simple to do with a few lines of C# code. Below we create our LightPlugin class that has two methods, GetState and ChangeState.

```csharp
using System;
using System.ComponentModel;
using Microsoft.SemanticKernel;

public class LightPlugin
{
    public bool IsOn { get; set; } = false;

#pragma warning disable CA1024 // Use properties where appropriate
    [KernelFunction]
    [Description("Gets the state of the light.")]
    public string GetState() => IsOn ? "on" : "off";
#pragma warning restore CA1024 // Use properties where appropriate

    [KernelFunction]
    [Description("Changes the state of the light.")]
    public string ChangeState(bool newState)
    {
        this.IsOn = newState;
        var state = GetState();

        // Print the state to the console
        Console.WriteLine($"[Light is now {state}]");

        return state;
    }
}
```

Notice that we've added a few attributes to the methods, [KernelFunction] and [Description]. Whenever you want an AI to call your code, you need to first describe it to the AI so it knows how to actually use it. In this case, we've described two functions, GetState and ChangeState, so the AI can request that they be called.
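Semantic Kernel reads these attributes for you when it builds the function descriptions it sends to the model. The sketch below imitates that step with reflection so you can see what information is available. `ToyFunctionAttribute` is a hypothetical stand-in for `[KernelFunction]`, and the one-line text format is invented for readability; the real SDK sends the model a structured description (typically a JSON schema) in whatever format the model provider expects.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Linq;
using System.Reflection;

// Hypothetical marker attribute standing in for Semantic Kernel's [KernelFunction].
[AttributeUsage(AttributeTargets.Method)]
public sealed class ToyFunctionAttribute : Attribute { }

public class DescribedLight
{
    public bool IsOn { get; set; }

    [ToyFunction, Description("Gets the state of the light.")]
    public string GetState() => IsOn ? "on" : "off";

    [ToyFunction, Description("Changes the state of the light.")]
    public string ChangeState(bool newState)
    {
        IsOn = newState;
        return GetState();
    }
}

public static class ToyManifest
{
    // Builds one line per method marked with [ToyFunction], sorted by name:
    // the method name, its parameters with their .NET type names, and its [Description].
    public static List<string> Describe(Type type) =>
        type.GetMethods(BindingFlags.Public | BindingFlags.Instance)
            .Where(m => m.GetCustomAttribute<ToyFunctionAttribute>() is not null)
            .OrderBy(m => m.Name, StringComparer.Ordinal)
            .Select(m =>
            {
                string parameters = string.Join(", ",
                    m.GetParameters().Select(p => $"{p.Name}: {p.ParameterType.Name}"));
                string description = m.GetCustomAttribute<DescriptionAttribute>()?.Description ?? "";
                return $"{m.Name}({parameters}): {description}";
            })
            .ToList();
}
```

Calling `ToyManifest.Describe(typeof(DescribedLight))` yields `ChangeState(newState: Boolean): Changes the state of the light.` followed by `GetState(): Gets the state of the light.`. The descriptions, together with the names and parameter types, are what the model relies on when deciding which function to request, which is why clear, specific descriptions matter.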

Now that we have our code, we need to provide it to the AI. This is where Semantic Kernel comes in. With Semantic Kernel, we can create a single Kernel object that has all the information necessary to orchestrate our code with AI. To do so, we'll create a new Kernel object and pass it our LightPlugin class and the model we want to use:

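```csharp
using Microsoft.SemanticKernel;

// Placeholder values: for Azure OpenAI, the first argument is your model's deployment name.
string deploymentName = "<deployment-name>", endpoint = "<endpoint>", apiKey = "<api-key>";

var builder = Kernel.CreateBuilder()
    .AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);
builder.Plugins.AddFromType<LightPlugin>();
Kernel kernel = builder.Build();
```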

Now that we have a kernel, we can use it to create an agent that will call our code whenever it's prompted to do so. Let's simulate a back-and-forth chat with a while loop:

```csharp
// Add these directives at the top of the file, alongside the earlier ones.
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;
using static System.Console;

// Create chat history
var history = new ChatHistory();

// Get chat completion service
var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();

// Enable auto function calling (the settings don't change between turns)
OpenAIPromptExecutionSettings openAIPromptExecutionSettings = new()
{
    ToolCallBehavior = ToolCallBehavior.AutoInvokeKernelFunctions
};

// Start the conversation
Write("User > ");
string? userInput;
while ((userInput = ReadLine()) != null)
{
    // Add user input
    history.AddUserMessage(userInput);

    // Get the response from the AI
    var result = await chatCompletionService.GetChatMessageContentAsync(
        history,
        executionSettings: openAIPromptExecutionSettings,
        kernel: kernel);

    // Print the results
    WriteLine("Assistant > " + result);

    // Add the message from the agent to the chat history
    history.AddMessage(result.Role, result.Content ?? string.Empty);

    // Get user input again
    Write("User > ");
}
```

After running these few lines of code, you should be able to have a conversation with your AI agent:

User > Hello

Assistant > Hello! How can I assist you today?

User > Can you turn on the lights

[Light is now on]

Assistant > I have turned on the lights for you.
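The `[Light is now on]` line appears because `ToolCallBehavior.AutoInvokeKernelFunctions` lets the SDK act on the model's function-call requests by itself. Roughly, when the model replies with a request instead of text, the function is invoked, its result is added to the chat history, and the model is asked again until it produces a text answer. The sketch below simulates that loop with a scripted fake model. `IToyModel`, `ScriptedModel`, `ToyReply`, and `AutoInvokeAgent` are hypothetical names, and the real loop inside Semantic Kernel's connectors works with structured messages and handles details this sketch omits.

```csharp
using System;
using System.Collections.Generic;

// A reply from the hypothetical model: either a function call request or final text.
public record ToyReply(string? FunctionToCall, bool? Argument, string? Text);

public interface IToyModel
{
    ToyReply Complete(IReadOnlyList<string> history);
}

// Scripted fake model: requests ChangeState(true), then answers in text
// once it sees a function result at the end of the history.
public class ScriptedModel : IToyModel
{
    public ToyReply Complete(IReadOnlyList<string> history) =>
        history[history.Count - 1].StartsWith("Function result:", StringComparison.Ordinal)
            ? new ToyReply(null, null, "I have turned on the lights for you.")
            : new ToyReply("ChangeState", true, null);
}

public class AutoInvokeAgent
{
    private readonly IToyModel _model;
    private readonly List<string> _history = new();

    public AutoInvokeAgent(IToyModel model) => _model = model;

    public bool LightIsOn { get; private set; }
    public IReadOnlyList<string> History => _history;

    // Runs each requested function and feeds its result back to the model,
    // stopping when the model replies with text. (Real implementations also
    // record the model's request message itself; omitted here for brevity.)
    public string Ask(string userMessage, int maxRounds = 5)
    {
        _history.Add($"User: {userMessage}");
        for (int round = 0; round < maxRounds; round++)
        {
            ToyReply reply = _model.Complete(_history);
            if (reply.Text is not null)
            {
                _history.Add($"Assistant: {reply.Text}");
                return reply.Text;
            }

            string result = reply.FunctionToCall switch
            {
                "ChangeState" => ChangeState(reply.Argument ?? false),
                "GetState" => LightIsOn ? "on" : "off",
                _ => $"Unknown function {reply.FunctionToCall}"
            };
            _history.Add($"Function result: {result}");
        }
        throw new InvalidOperationException("Model did not produce a final answer.");
    }

    private string ChangeState(bool newState)
    {
        LightIsOn = newState;
        return LightIsOn ? "on" : "off";
    }
}
```

With `ScriptedModel`, `new AutoInvokeAgent(new ScriptedModel()).Ask("Can you turn on the lights")` returns `"I have turned on the lights for you."` after one function round-trip. The `maxRounds` cap is a safeguard in this sketch against a model that keeps requesting functions without ever answering.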

While this is just a simple example of how you can use Semantic Kernel, it shows the power of the SDK and how easy it makes automating tasks. To recreate a similar experience with other AI SDKs, you would likely need to write twice as much code.

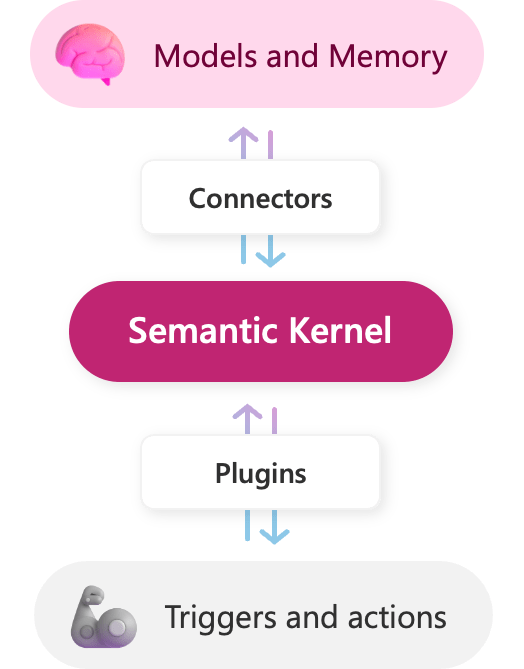

Semantic Kernel makes AI development extensible

Semantic Kernel has been engineered to make it easy to add your existing code to your AI agents with plugins. With plugins, you can give your agents the ability to interact with the real world by calling your existing apps and services. In this way, plugins are like the "arms and hands" of your AI app.

Additionally, Semantic Kernel's interfaces allow it to flexibly integrate any AI service. This is done with a set of connectors that make it easy to add memories and AI models. In this way, Semantic Kernel is able to add a simulated "brain" to your app that you can easily swap out as newer and better AI models become available.

Because of the extensibility Semantic Kernel provides with connectors and plugins, you can use it to orchestrate nearly any of your existing code without being locked into a specific AI model provider. For example, if you built a bunch of plugins for OpenAI's ChatGPT, you could use Semantic Kernel to orchestrate them with models from other providers like Azure or Hugging Face.
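The idea behind connectors is ordinary interface-based design: your app talks to an abstraction, and the concrete AI service behind it can change. In the chat loop earlier, that abstraction is `IChatCompletionService`. The toy sketch below shows the pattern with a hypothetical `IToyChatModel` interface and two fake "providers" that only transform the prompt; real connectors would call a remote model instead.

```csharp
using System;

// Hypothetical abstraction playing the role a connector interface plays:
// app code depends on this, not on any specific provider.
public interface IToyChatModel
{
    string Name { get; }
    string Reply(string prompt);
}

// Two fake providers; a real connector would send the prompt to a hosted model.
public class EchoModelA : IToyChatModel
{
    public string Name => "model-a";
    public string Reply(string prompt) => $"[A] {prompt}";
}

public class EchoModelB : IToyChatModel
{
    public string Name => "model-b";
    public string Reply(string prompt) => $"[B] {prompt.ToUpperInvariant()}";
}

// App logic only knows the interface, so the model is swapped by passing
// a different implementation to the constructor.
public class ToyAssistant
{
    private readonly IToyChatModel _model;

    public ToyAssistant(IToyChatModel model) => _model = model;

    public string Ask(string question) => $"{_model.Name}: {_model.Reply(question)}";
}
```

Swapping `new EchoModelA()` for `new EchoModelB()` changes the model without touching `ToyAssistant`. In Semantic Kernel, the equivalent move is registering a different connector on the kernel builder, for example `AddOpenAIChatCompletion` instead of `AddAzureOpenAIChatCompletion`, while code that asks the kernel for an `IChatCompletionService` stays the same.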

As a developer, you can use the different components of Semantic Kernel separately. For example, if you just need an abstraction over OpenAI and Azure OpenAI services, you could just use the SDK to run handcrafted prompts, but the real power of Semantic Kernel comes from combining these components together.

Get started using the Semantic Kernel SDK

Now that you know what Semantic Kernel is, follow the get started link to try it out. Within minutes you can create prompts and chain them with out-of-the-box plugins and native code. Soon afterwards, you can give your apps memories with embeddings and summon even more power from external APIs.