Auto-Vectorizer in Visual Studio 2012 – How Much Faster?

If you’ve not read previous posts in this series about auto-vectorization, you may want to begin at the beginning.

This post explains how to measure the benefits of auto-vectorization – how much it speeds up your code. (To find out whether any particular loop was successfully vectorized, please see the earlier post called Did-It-Work?)

For this post, let’s use the following small example:

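```cpp
#include <chrono>
#include <cmath>
#include <iostream>
using namespace std;

// Minimal stand-in for the Timer class from Simon's post (an assumption,
// not his implementation): Elapsed() returns milliseconds between Start() and Stop().
class Timer {
    chrono::steady_clock::time_point start_, stop_;
public:
    void Start() { start_ = chrono::steady_clock::now(); }
    void Stop()  { stop_ = chrono::steady_clock::now(); }
    double Elapsed() const {
        return chrono::duration<double, milli>(stop_ - start_).count();
    }
};

const int N = 1000000;
float a[N], b[N];

int main() {
    const int REPS = 500;
    Timer t;
    t.Start();                                       // start the clock
    for (int reps = 1; reps <= REPS; ++reps) {
        for (int n = 0; n < N; ++n) a[n] += sin(b[n]) * cos(b[n]);
    }
    t.Stop();                                        // stop the clock
    cout << "Time in millisecs = " << t.Elapsed() / (double)REPS << endl;
    cin.get();                                       // pause
}
```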

As you can see, the inner loop steps through array a, adding to each element the product sin*cos of the corresponding element of array b. The arrays a and b are each dimensioned to hold a million elements. We repeat the measurement REPS times and report the average elapsed time.

For details of the Timer object, see Simon’s post on High-resolution timer for C++.

In order to experiment, you can disable the auto-vectorizer by including the following pragma just before the sin*cos loop:

```cpp
#pragma loop(no_vector)
```
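To make the placement concrete, here is a sketch of the sin*cos loop with the pragma directly in front of it, pulled out into a function. The function name SinCosNoVector and the smaller N are illustrative choices, not from the post.

```cpp
#include <cassert>
#include <cmath>

const int N = 1000;   // smaller than the post's N, just to keep the sketch light
float a[N], b[N];

// The sin*cos loop with auto-vectorization switched off for this loop only.
// #pragma loop(no_vector) is a Visual C++ pragma (VS2012 and later) that
// applies to the loop immediately following it; other compilers will
// typically ignore it, perhaps with an "unknown pragma" warning.
void SinCosNoVector() {
#pragma loop(no_vector)
    for (int n = 0; n < N; ++n) a[n] += std::sin(b[n]) * std::cos(b[n]);
}
```

Only the inner loop is affected; any other loops in the program remain candidates for auto-vectorization.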

Here are the results, in milliseconds, measured on my office PC, for three cases:

| Debug | Release, no vectorization | Release, auto-vectorized |
|---|---|---|
| 38 | 13.6 | 6.3 |

Notice how performance improves by 2.8X in going from a debug (non-optimized) run to a release (optimized-for-speed) run, and by a further 2.2X when we allow auto-vectorization to kick in.
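Each speedup is simply the ratio of the slower time to the faster one, using the numbers in the table. Because the ratios multiply, the overall gain from a debug build to an auto-vectorized release build comes to about 6X:

$$
\frac{38}{13.6} \approx 2.8, \qquad
\frac{13.6}{6.3} \approx 2.2, \qquad
\frac{38}{6.3} = \frac{38}{13.6} \times \frac{13.6}{6.3} \approx 6.0
$$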

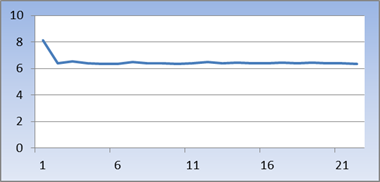

We should ask ourselves whether 500 repetitions are enough to get a reliable average. Here is a graph showing the time, in milliseconds, taken by each of the first 20 or so reps. As you can see, the very first run is slower (because our arrays are not yet in the cache; we say the cache is “cold”). But it settles down quickly to a consistent result. (Not very scientific, I agree, but plausible.)
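If you want to draw a similar graph yourself, one approach (a sketch, not the code used to produce the graph) is to time every repetition separately and keep the results. The function name TimeEachRep is hypothetical, and std::chrono::steady_clock stands in for the post's Timer class.

```cpp
#include <cassert>
#include <chrono>
#include <cmath>
#include <vector>

const int N = 1000000;
float a[N], b[N];

// Times each repetition separately (rather than only the total) so that the
// "cold" first run and the later steady-state runs can be plotted.
std::vector<double> TimeEachRep(int reps) {
    std::vector<double> millis;
    millis.reserve(reps);
    for (int r = 0; r < reps; ++r) {
        auto start = std::chrono::steady_clock::now();
        for (int n = 0; n < N; ++n) a[n] += std::sin(b[n]) * std::cos(b[n]);
        auto stop = std::chrono::steady_clock::now();
        millis.push_back(std::chrono::duration<double, std::milli>(stop - start).count());
    }
    return millis;
}
```

Print the returned values after all the timing is done, so that the console IO is not itself measured, and plot them; the first entry is typically the slowest.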

That’s all we really need in order to experiment: just surround the loop of interest with t.Start() and t.Stop(), then grab the elapsed time for that loop using t.Elapsed(). Include enough repetitions to get a meaningful average.

You need to be very careful in measuring the performance of programs, especially if you are going to discuss the results with anyone other than your cat. There’s a good chance you will end up in a fist fight.

Anyhow, partly to forestall similar bitter arguments in the comments on this blog post about the effectiveness of auto-vectorization, and partly because it’s actually useful to know, the rest of this post discusses issues in measuring performance. None of it is essential, or even specific to auto-vectorization, so feel free to skip it.

Measuring Performance - Overview

First, note that we are interested only in the performance of compute-bound loops. The programs we measure in this blog do not include any IO to disk, console, screen, network, or anything else. Make sure that any IO used to report results (typically to the console) lies outside the timed loop.

Next, before changing things to improve speed, you must profile your code! This will help you avoid wasting effort on optimizing code paths whose contribution to overall performance is negligible.

[NitPick: does this recall the maxim “premature optimization is the root of all evil”? Yes, and this article examines the amusing confusion over its origin: Knuth or Hoare.]

There are lots of profilers available, ranging in price from $0 up to hundreds of dollars. Visual Studio provides profiling under the ANALYZE menu. You can experiment with this feature using the free Visual Studio Ultimate 2012 RC download.

Measuring Performance - Tips

Here, then, are some factors to keep you awake at night:

1. What do you want to measure? Do you want the “steady-state” result, obtained by running the program again and again? Or do you want the “cold start” result, obtained by running the program once?

   - If “steady-state”, then choose enough repetitions. See the example graph above: set REPS big enough that the curve flattens out.

   - If “cold start”, you should still measure multiple times, but you need to ‘pollute’ the cache between runs.

2. Be aware that your PC may increase the clock frequency ‘when the going gets tough’; see, for example, this article comparing the AMD and Intel approaches.

3. Similarly, laptops often support dynamic frequency scaling (sometimes called “CPU throttling”).

   To see effects 2 or 3 actually happening, click Start, then type perfmon /res to open a Resource Monitor window, and watch the little box titled “% Maximum Frequency” as your program runs.

4. Be aware that your PC is doing lots of other things that steal cycles while your program runs: network IO, anti-virus scans, registry queries, and so on. At any time, it may have 70+ processes loaded. As a revealing experiment, you might investigate the excellent Procmon tool. (On my PC it registers a continuous “background noise” of some 3,000 events per second!)

5. Be aware that the operating system may well reschedule your test across different cores (with each such migration incurring cache misses). You might want to investigate affinitizing your program to just one core.

6. If you are running an old PC or an old version of Windows, be aware of potential timing problems due to limited resolution, counter overflow, cross-core synchronization, BIOS bugs, hardware gotchas, etc. Here is one example, and another.

7. Consider whether to filter the results, for example to discard outliers. Similarly, consider whether to calculate the geometric mean rather than a simple average.

8. Beware that the compiler will apply its razor-sharp logic to your code: if it sees no use of a particular calculation, it may skip generating the corresponding machine code! (This optimization is called “Dead Code Elimination”, commonly abbreviated DCE.) If your program suddenly starts to run incredibly fast, DCE may be the cause.

9. Beware floating-point, in particular de-normals and rounding modes. While running a test program for this blog series, I accidentally generated de-normals, and performance plunged over a cliff edge. If interested, check this link for a historical perspective.

10. Finally, beware of trying to explain the reasons for your results, good or bad. Debate will range over dozens of topics, relevant or not: instruction selection, L1/L2/L3 caches, register allocation, out-of-order execution, multiple issue, pipeline bubbles, speculative execution, branch prediction, etc.
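For the “cold start” case described above, one way to ‘pollute’ the cache between runs is to stream through a buffer larger than your CPU’s last-level cache. This is a minimal sketch: PolluteCache is a hypothetical helper, and both the 64 MiB default and the 64-byte stride (a typical cache-line size) are assumptions to adjust for your own machine.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: walk a buffer assumed to be bigger than the
// last-level cache, evicting whatever the previous run left behind.
long long PolluteCache(std::size_t bytes = 64u * 1024u * 1024u) {
    std::vector<char> junk(bytes, 1);                  // filling the buffer touches every line
    long long sum = 0;
    for (std::size_t i = 0; i < junk.size(); i += 64)  // read one byte per assumed 64-byte line
        sum += junk[i];
    return sum;   // return the sum so the compiler cannot discard the reads
}
```

Call it between timed runs, outside the t.Start()/t.Stop() pair, so that its own cost is not measured.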
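For the tip on filtering results, here is a sketch of a simple arithmetic mean, a geometric mean, and one possible outlier filter that drops the slowest samples (such as a cold first run). The function names are hypothetical, and dropping the slowest k samples is just one of many possible filtering policies.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Arithmetic mean of the samples (v must be non-empty).
double Mean(const std::vector<double>& v) {
    double sum = 0.0;
    for (double x : v) sum += x;
    return sum / v.size();
}

// Geometric mean: exp of the mean of the logs (v must be non-empty, all samples > 0).
double GeoMean(const std::vector<double>& v) {
    double logSum = 0.0;
    for (double x : v) logSum += std::log(x);
    return std::exp(logSum / v.size());
}

// One simple outlier filter: sort the samples and drop the slowest dropCount of them.
std::vector<double> DropSlowest(std::vector<double> v, std::size_t dropCount) {
    std::sort(v.begin(), v.end());
    v.resize(v.size() > dropCount ? v.size() - dropCount : 0);
    return v;
}
```

Whichever filter you choose, report it alongside your numbers; otherwise the fist fight mentioned earlier becomes more likely.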
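To illustrate the Dead Code Elimination trap: in the first function below the result is never used, so an optimizing compiler is allowed to remove the loop entirely, and timing it would measure nothing. A common remedy is to make the result observable, for example by returning it and printing it after t.Stop(). The function names and the array size are illustrative only.

```cpp
#include <cassert>

const int N = 1000;   // small size, for illustration only
float b[N];

// Risky to time: `sum` is never used, so the optimizer may delete the loop.
void SumOfSquaresDiscarded() {
    float sum = 0.0f;
    for (int n = 0; n < N; ++n) sum += b[n] * b[n];
    (void)sum;   // silences "unused variable" warnings; it is not a real use
}

// Safer to time: the result escapes to the caller, who should print or
// otherwise use it (outside the timed region) so the work is kept.
float SumOfSquaresUsed() {
    float sum = 0.0f;
    for (int n = 0; n < N; ++n) sum += b[n] * b[n];
    return sum;
}
```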
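For the floating-point tip, a quick check for de-normals (subnormals) in your input data before timing can save a lot of head-scratching. This sketch uses the standard std::fpclassify from <cmath>; the helper names IsDenormal and CountDenormals are hypothetical.

```cpp
#include <cassert>
#include <cfloat>
#include <cmath>

// True if x is a de-normal: non-zero but smaller in magnitude than FLT_MIN,
// the smallest normal float. Arithmetic on such values can be dramatically
// slower on many x86 CPUs.
bool IsDenormal(float x) { return std::fpclassify(x) == FP_SUBNORMAL; }

// Count de-normals in an array, e.g. to check inputs before starting the clock.
int CountDenormals(const float* p, int count) {
    int total = 0;
    for (int i = 0; i < count; ++i)
        if (IsDenormal(p[i])) ++total;
    return total;
}
```

Running CountDenormals over the input arrays (such as b) before calling t.Start() is a cheap sanity check.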

Good luck!

Comments

Anonymous

September 10, 2012

Jim, I ran the tests on Intel i7 quad-core CPU (i7-940XM) laptop and my results are 156-47-13.6 ms. I have initialized b[n] to the unorm range. I checked for de-normal to ensure I did not generate any. Why could my results be so much slower than yours? I thought that my laptop is as fast as you could build them (for a 2-year old one)...

Anonymous

September 11, 2012

@Alan: My results (which I just re-checked) were for an x64 build. If I rerun on my same PC, but for a Win32 build, all runs (debug, optimized-no-vector and optimized) go significantly slower. If you are building Win32, that's the likely reason. [Other side-effects to investigate: if you specify /fp:fast for 32-bit mode, we allow ourselves to use faster versions of the 32-bit trig libraries] [In passing, the next version of the compiler will likely not vectorize this inner loop. There's an alternate, better optimization, that yields a speedup of around 5x]

Anonymous

September 11, 2012

Right!! Man, VS11 does not show Solution Platform by default. Aahh!! Now I am getting closer to your numbers. Very valuable lesson on paying attention and the difference between 32-bit and 64-bit optimizations!

64-bit: 40.7 19.9 5.5

What is wrong with the following loop?

info C5002: loop not vectorized due to reason '1200'

```cpp
for (int i = 0; i < N; ++i) {
    b[i] = static_cast<double>(rand()) / (RAND_MAX + 1.0); // * (1.0 - 0.5) + 0.5;
}
```

Anonymous

September 11, 2012

@Alan: The rand() function is "side-effecting". Put another way, rand() is not pure. Put another way, the result is not solely determined by its input arguments - indeed, it doesn't have any! Specifically, the result of any call to rand() depends upon the value returned (and stored internally) from the previous call. This equates to a "loop-carried dependency". Thus, reason code 1200 (discussed some, in the "Cookbook" post)

Anonymous

October 19, 2012

Does auto-vectorization work in all cases where it could be applied, or are prerequisites like being encapsulated in a loop apply? I'm writing a pseudo-analog modular synthetic for Windows 8. I've chosen pure C for the synthetic kernel to avoid the call overhead in .NET and apply SIMD intrinsics where it makes sense (since we don't have that in .NET). Auto-vectorization sounds like a better idea, so that I don't need to go to Macro-ville for enabling the ARM build. Thing is, I loop though a linked list repeatedly, calling a computation function just once each time. The loop's around the linked list walking function. Would my code still profit from auto-vectorization or do I need to do it manually?

Anonymous

October 19, 2012

Synthesizer* Damn autocorrect.

Anonymous

May 30, 2014

In the Auto-Vectorization Cookbook I read that it will only work with int. Does this mean unsigned ints won't be optimized this way?