Deploy Storage Spaces Direct on Windows Server

This topic provides step-by-step instructions to deploy Storage Spaces Direct on Windows Server. To deploy Storage Spaces Direct as part of Azure Local, see About Azure Local.

Tip

Looking to acquire hyperconverged infrastructure? Microsoft recommends purchasing a validated hardware/software Azure Local solution from our partners. These solutions are designed, assembled, and validated against our reference architecture to ensure compatibility and reliability, so you get up and running quickly. To browse a catalog of hardware/software solutions that work with Azure Local, see the Azure Local Catalog.

Tip

Evaluate Storage Spaces Direct without hardware

Before you start

Review the Storage Spaces Direct hardware requirements, and skim this document to familiarize yourself with the overall approach and the important notes associated with some steps.

Gather the following information:

Deployment option. Storage Spaces Direct supports two deployment options: hyperconverged and converged, also known as disaggregated. Familiarize yourself with the advantages of each to decide which is right for you. Steps 1-3 below apply to both deployment options. Step 4 is only needed for a converged deployment.

Server names. Get familiar with your organization's naming policies for computers, files, paths, and other resources. You'll need to provision several servers, each with a unique name.

Domain name. Get familiar with your organization's policies for domain naming and domain joining. You'll be joining the servers to your domain, and you'll need to specify the domain name.

RDMA networking. There are two types of RDMA protocols: iWARP and RoCE. Note which one your network adapters use, and if it's RoCE, also note the version (v1 or v2). For RoCE, also note the model of your top-of-rack switch.

VLAN ID. Note the VLAN ID to be used for the management OS network adapters on the servers, if any. You should be able to obtain this from your network administrator.

Step 1: Deploy Windows Server

Step 1.1: Install the operating system

The first step is to install Windows Server on every server that will be in the cluster. Storage Spaces Direct requires Windows Server Datacenter Edition. You can use the Server Core installation option or Server with Desktop Experience.

When you install Windows Server by using the Setup wizard, you can choose between Windows Server (referring to Server Core) and Windows Server (Server with Desktop Experience), which is the equivalent of the Full installation option available in Windows Server 2012 R2. If you don't choose, you get the Server Core installation option. For more info, see Install Server Core.

Step 1.2: Connect to the servers

This guide focuses on the Server Core installation option and on deploying and managing remotely from a separate management system, which must have:

- A version of Windows Server or Windows 10 at least as new as the servers it's managing, and with the latest updates

- Network connectivity to the servers it's managing

- Membership in the same domain or a fully trusted domain

- Remote Server Administration Tools (RSAT) and PowerShell modules for Hyper-V and Failover Clustering. RSAT tools and PowerShell modules are available on Windows Server and can be installed without installing other features. You can also install the Remote Server Administration Tools on a Windows 10 management PC.

On the management system, install the Failover Cluster and Hyper-V management tools. You can do this by using the Add Roles and Features wizard.

Enter a PowerShell session by using the server name or IP address of the node you want to connect to. You'll be prompted for a password when you run this command; enter the administrator password you specified when setting up Windows.

Enter-PSSession -ComputerName <myComputerName> -Credential LocalHost\Administrator

If you need to do this multiple times, here's an example of doing the same thing in a way that is more useful in scripts:

$myServer1 = "myServer-1"
$user = "$myServer1\Administrator"
Enter-PSSession -ComputerName $myServer1 -Credential $user

Tip

If you're deploying remotely from a management system, you might get an error such as "WinRM cannot process the request." To fix this, use Windows PowerShell to add each server to the Trusted Hosts list on your management computer:

Set-Item WSMAN:\Localhost\Client\TrustedHosts -Value Server01 -Force

Note: The Trusted Hosts list supports wildcards, such as Server*.

To view your Trusted Hosts list, type:

Get-Item WSMAN:\Localhost\Client\TrustedHosts

To empty the list, type:

Clear-Item WSMAN:\Localhost\Client\TrustedHosts
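Because the list accepts wildcards, it can be handy to check offline whether a given server name is already covered before you connect. The following is a minimal sketch, not part of WinRM: the helper name Test-TrustedHostMatch is hypothetical, and it approximates WinRM's matching with PowerShell's case-insensitive -like operator, which is an assumption.

```powershell
# Hypothetical helper: approximate whether a server name is covered by a TrustedHosts value.
# Assumption: entries are comma-separated and '*' wildcards behave like PowerShell's -like.
function Test-TrustedHostMatch {
    param(
        [Parameter(Mandatory)] [string] $TrustedHosts,  # e.g. the Value of WSMan:\localhost\Client\TrustedHosts
        [Parameter(Mandatory)] [string] $ComputerName
    )
    foreach ($entry in $TrustedHosts -split ',') {
        $pattern = $entry.Trim()
        # Skip empty entries; -like is case-insensitive
        if ($pattern -and $ComputerName -like $pattern) { return $true }
    }
    return $false
}

Test-TrustedHostMatch -TrustedHosts 'Server*,Mgmt01' -ComputerName 'Server03'   # True
Test-TrustedHostMatch -TrustedHosts 'Server*,Mgmt01' -ComputerName 'Node01'     # False
```

On a management computer you could pass it (Get-Item WSMAN:\Localhost\Client\TrustedHosts).Value. Keep in mind that Set-Item with -Value replaces the whole list; the WSMan provider also accepts -Concatenate to append an entry instead.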

Step 1.3: Join the domain and add domain accounts

So far you've configured the individual servers with the local administrator account, <ComputerName>\Administrator.

To manage Storage Spaces Direct, you'll need to join the servers to a domain and use an Active Directory Domain Services domain account that is in the Administrators group on every server.

From the management system, open a PowerShell console with Administrator privileges. Use Enter-PSSession to connect to each server, and run the following cmdlet, substituting your own computer name, domain name, and domain credentials:

Add-Computer -NewName "Server01" -DomainName "contoso.com" -Credential "CONTOSO\User" -Restart -Force

If your storage administrator account isn't a member of the Domain Admins group, add your storage administrator account to the local Administrators group on each node, or better yet, add the group you use for storage administrators. You can use the following command (or write a Windows PowerShell function to do so; for more info, see Use PowerShell to Add Domain Users to a Local Group):

Net localgroup Administrators <Domain\Account> /add

Step 1.4: Install roles and features

The next step is to install server roles on every server. You can do this by using Windows Admin Center, Server Manager, or PowerShell. Here are the roles to install:

- Failover Clustering

- Hyper-V

- File Server (if you want to host any file shares, such as for a converged deployment)

- Data-Center-Bridging (if you're using RoCEv2 instead of iWARP network adapters)

- RSAT-Clustering-PowerShell

- Hyper-V-PowerShell
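The conditions in the list above (Data-Center-Bridging only for RoCE, File Server only when the cluster hosts file shares) can be captured in a small helper so the feature list matches your deployment. This is a sketch under assumptions: Get-S2DFeatureList and its -RdmaType and -HostFileShares parameters are hypothetical names, not a Microsoft cmdlet.

```powershell
# Hypothetical helper: build the Install-WindowsFeature list from two deployment choices.
function Get-S2DFeatureList {
    param(
        [ValidateSet('iWARP', 'RoCE')] [string] $RdmaType = 'iWARP',
        [switch] $HostFileShares   # for example, a converged (disaggregated) deployment
    )
    # Features needed in every case, per the list above
    $features = @('Failover-Clustering', 'Hyper-V', 'RSAT-Clustering-PowerShell', 'Hyper-V-PowerShell')
    # RoCE adapters rely on Data Center Bridging; iWARP adapters don't need it here
    if ($RdmaType -eq 'RoCE') { $features += 'Data-Center-Bridging' }
    if ($HostFileShares) { $features += 'FS-FileServer' }
    return $features
}

Get-S2DFeatureList -RdmaType RoCE -HostFileShares
```

For example, $FeatureList = Get-S2DFeatureList -RdmaType RoCE -HostFileShares could replace the hard-coded $FeatureList in the multi-server script.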

To install via PowerShell, use the Install-WindowsFeature cmdlet. You can use it on a single server like this:

Install-WindowsFeature -Name "Hyper-V", "Failover-Clustering", "Data-Center-Bridging", "RSAT-Clustering-PowerShell", "Hyper-V-PowerShell", "FS-FileServer"

To run the command on all servers in the cluster at the same time, use this little script, modifying the list of variables at the beginning of the script to fit your environment.

# Fill in these variables with your values
$ServerList = "Server01", "Server02", "Server03", "Server04"
# Drop "Data-Center-Bridging" if you use iWARP, and "FS-FileServer" if you won't host file shares
$FeatureList = "Hyper-V", "Failover-Clustering", "Data-Center-Bridging", "RSAT-Clustering-PowerShell", "Hyper-V-PowerShell", "FS-FileServer"

# This part runs the Install-WindowsFeature cmdlet on all servers in $ServerList, passing the list of features into the scriptblock with the "Using" scope modifier so you don't have to hard-code them here.
Invoke-Command -ComputerName $ServerList -ScriptBlock {
    Install-WindowsFeature -Name $Using:FeatureList
}

Step 2: Configure the network

If you're deploying Storage Spaces Direct inside virtual machines, skip this section.

Storage Spaces Direct requires high-bandwidth, low-latency networking between servers in the cluster. At least 10 GbE networking is required, and remote direct memory access (RDMA) is recommended. You can use either iWARP or RoCE as long as it has the Windows Server logo that matches your operating system version, but iWARP is usually easier to set up.

Important

Depending on your networking equipment, and especially with RoCE v2, some configuration of the top-of-rack switch may be required. Correct switch configuration is important to ensure the reliability and performance of Storage Spaces Direct.

Windows Server 2016 introduced switch-embedded teaming (SET) within the Hyper-V virtual switch. This allows the same physical NIC ports to be used for all network traffic while using RDMA, reducing the number of physical NIC ports required. Switch-embedded teaming is recommended for Storage Spaces Direct.

Switched or switchless node interconnects

- Switched: Network switches must be properly configured to handle the bandwidth and networking type. If you're using RDMA that implements the RoCE protocol, network device and switch configuration is even more important.

- Switchless: Nodes can be interconnected by using direct connections, avoiding the need for a switch. Every node must have a direct connection to every other node in the cluster.
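With the switchless option, the requirement that every node connects directly to every other node grows quickly with cluster size. A minimal sketch of the cabling arithmetic, assuming exactly one direct link per pair of nodes (double the figures if you cable each pair redundantly); Get-SwitchlessLinkCount is a hypothetical name:

```powershell
# Hypothetical helper: full-mesh cabling for a switchless topology,
# assuming one direct link between every pair of nodes.
function Get-SwitchlessLinkCount {
    # Storage Spaces Direct clusters have at most 16 servers
    param([Parameter(Mandatory)] [ValidateRange(2, 16)] [int] $NodeCount)
    [pscustomobject]@{
        Nodes        = $NodeCount
        PortsPerNode = $NodeCount - 1                        # one port toward each other node
        TotalLinks   = $NodeCount * ($NodeCount - 1) / 2     # each link joins two nodes
    }
}

2..4 | ForEach-Object { Get-SwitchlessLinkCount -NodeCount $_ }
```

For 2, 3, and 4 nodes this gives 1, 3, and 6 links, with 1, 2, and 3 ports per node, which is why switchless designs are usually limited to small clusters.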

For guidance on networking for Storage Spaces Direct, see the Windows Server 2016 and 2019 RDMA Deployment Guide.

Step 3: Configure Storage Spaces Direct

The following steps are done on a management system that is the same version as the servers being configured. Don't run them remotely through a PowerShell session; instead, run them in a local PowerShell session on the management system, with administrative permissions.

Step 3.1: Clean drives

Before you enable Storage Spaces Direct, ensure your drives are empty: no old partitions or other data. Run the following script, substituting your computer names, to remove all old partitions and other data.

Important

This script will permanently remove any data on any drives other than the operating system boot drive!

# Fill in these variables with your values
$ServerList = "Server01", "Server02", "Server03", "Server04"

foreach ($server in $ServerList) {
    Invoke-Command -ComputerName $server -ScriptBlock {
        # Check for the Azure Temporary Storage volume
        $azTempVolume = Get-Volume -FriendlyName "Temporary Storage" -ErrorAction SilentlyContinue
        If ($azTempVolume) {
            $azTempDrive = (Get-Partition -DriveLetter $azTempVolume.DriveLetter).DiskNumber
        }

        # Clear and reset the disks
        $disks = Get-Disk | Where-Object {
            ($null -ne $_.Number -and $_.Number -ne $azTempDrive -and !$_.IsBoot -and !$_.IsSystem -and $_.PartitionStyle -ne "RAW")
        }
        $disks | Format-Table Number, FriendlyName, OperationalStatus
        If ($disks) {
            Write-Host "This action will permanently remove any data on any drives other than the operating system boot drive!`nReset disks? (Y/N)"
            $response = Read-Host
            if ($response.ToLower() -ne "y") { exit }
            $disks | ForEach-Object {
                $_ | Set-Disk -IsOffline:$false
                $_ | Set-Disk -IsReadOnly:$false
                $_ | Clear-Disk -RemoveData -RemoveOEM -Confirm:$false -Verbose
                $_ | Set-Disk -IsReadOnly:$true
                $_ | Set-Disk -IsOffline:$true
            }
            #Get-PhysicalDisk | Reset-PhysicalDisk
        }

        Get-Disk | Where-Object {
            ($null -ne $_.Number -and $_.Number -ne $azTempDrive -and !$_.IsBoot -and !$_.IsSystem -and $_.PartitionStyle -eq "RAW")
        } | Group-Object -NoElement -Property FriendlyName
    }
}

The output will look like this, where Count is the number of drives of each model in each server:

Count Name               PSComputerName
----- ----               --------------
4     ATA SSDSC2BA800G4n Server01
10    ATA ST4000NM0033   Server01
4     ATA SSDSC2BA800G4n Server02
10    ATA ST4000NM0033   Server02
4     ATA SSDSC2BA800G4n Server03
10    ATA ST4000NM0033   Server03
4     ATA SSDSC2BA800G4n Server04
10    ATA ST4000NM0033   Server04
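A mismatched drive count on one server is easy to miss in output like the sample above. This hypothetical helper (not part of any Microsoft module) groups rows by PSComputerName, builds a model/count signature per server, and reports servers whose signature differs from the most common one. The sample data is made up for illustration.

```powershell
# Hypothetical helper: flag servers whose drive inventory differs from the majority,
# using rows shaped like the output above (Count, Name, PSComputerName).
function Find-InventoryMismatch {
    param([Parameter(Mandatory)] [object[]] $Rows)

    # One signature per server, e.g. "ATA SSDSC2BA800G4n=4;ATA ST4000NM0033=10"
    $signatures = foreach ($serverGroup in ($Rows | Group-Object -Property PSComputerName)) {
        $parts = foreach ($row in ($serverGroup.Group | Sort-Object -Property Name)) {
            "$($row.Name)=$($row.Count)"
        }
        [pscustomobject]@{ Server = $serverGroup.Name; Signature = ($parts -join ';') }
    }

    # Treat the most common signature as the expected configuration
    $expected = ($signatures | Group-Object -Property Signature |
        Sort-Object -Property Count -Descending | Select-Object -First 1).Name

    # Return only the servers that deviate from it
    $signatures | Where-Object { $_.Signature -ne $expected }
}

# Illustrative data: Server02 reports one HDD fewer than the others
$sample = @(
    [pscustomobject]@{ Count = 4;  Name = 'ATA SSDSC2BA800G4n'; PSComputerName = 'Server01' }
    [pscustomobject]@{ Count = 10; Name = 'ATA ST4000NM0033';   PSComputerName = 'Server01' }
    [pscustomobject]@{ Count = 4;  Name = 'ATA SSDSC2BA800G4n'; PSComputerName = 'Server02' }
    [pscustomobject]@{ Count = 9;  Name = 'ATA ST4000NM0033';   PSComputerName = 'Server02' }
    [pscustomobject]@{ Count = 4;  Name = 'ATA SSDSC2BA800G4n'; PSComputerName = 'Server03' }
    [pscustomobject]@{ Count = 10; Name = 'ATA ST4000NM0033';   PSComputerName = 'Server03' }
)
Find-InventoryMismatch -Rows $sample   # lists Server02
```

To use it with the cleanup script, you could capture that script's output in a variable (for example $inventory) and pass it as -Rows.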

Step 3.2: Validate the cluster

In this step, you'll run the cluster validation tool to ensure that the server nodes are configured correctly to create a cluster using Storage Spaces Direct. When cluster validation (Test-Cluster) is run before the cluster is created, it runs tests that verify that the configuration appears suitable to function successfully as a failover cluster. The example directly below uses the -Include parameter followed by specific test categories. This ensures that the Storage Spaces Direct-specific tests are included in the validation.

Use the following PowerShell command to validate a set of servers for use as a Storage Spaces Direct cluster.

Test-Cluster -Node <MachineName1, MachineName2, MachineName3, MachineName4> -Include "Storage Spaces Direct", "Inventory", "Network", "System Configuration"

Step 3.3: Create the cluster

In this step, you'll create a cluster with the nodes that you validated in the preceding step by using the following PowerShell cmdlet.

When creating the cluster, you'll get a warning that states: "There were issues while creating the clustered role that may prevent it from starting. For more information, view the report file below." You can safely ignore this warning. It's caused by no disks being available for the cluster quorum. We recommend configuring a file share witness or cloud witness after creating the cluster.

Note

If the servers are using static IP addresses, modify the following command to reflect the static IP address by adding the following parameter and specifying the IP address: -StaticAddress <X.X.X.X>. In the following command, replace the ClusterName placeholder with a NetBIOS name that is unique and 15 characters or less.

New-Cluster -Name <ClusterName> -Node <MachineName1,MachineName2,MachineName3,MachineName4> -NoStorage
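The note above sets two constraints you can check before running New-Cluster: a NetBIOS cluster name of 15 characters or less and, optionally, a static IPv4 address. A minimal sketch follows; Test-ClusterParameters is a hypothetical helper, its character rule (letters, digits, hyphens) is a simplified assumption rather than the complete naming rules, and uniqueness can't be checked offline, so it isn't covered.

```powershell
# Hypothetical helper: pre-check values you plan to pass to New-Cluster.
function Test-ClusterParameters {
    param(
        [Parameter(Mandatory)] [string] $ClusterName,
        [string] $StaticAddress
    )
    $problems = @()
    if ($ClusterName.Length -gt 15) {
        $problems += "Name '$ClusterName' is longer than 15 characters."
    }
    # Simplified assumption: only letters, digits and hyphens are accepted here
    if ($ClusterName -notmatch '^[A-Za-z0-9-]+$') {
        $problems += "Name '$ClusterName' has characters other than letters, digits, or '-'."
    }
    if ($StaticAddress) {
        $ip = $null
        # Require dotted-quad form first, because IPAddress.TryParse also accepts shorthand forms
        $isDottedQuad = $StaticAddress -match '^\d{1,3}(\.\d{1,3}){3}$'
        if (-not ($isDottedQuad -and [System.Net.IPAddress]::TryParse($StaticAddress, [ref]$ip))) {
            $problems += "'$StaticAddress' is not a valid IPv4 address."
        }
    }
    # The leading comma keeps an empty or one-item result as an array
    return ,$problems
}

Test-ClusterParameters -ClusterName 'S2D-Cluster01' -StaticAddress '192.168.1.50'   # no problems (empty result)
Test-ClusterParameters -ClusterName 'ThisNameIsTooLong01'                           # one problem: name too long
```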

After the cluster is created, it can take time for the DNS entry for the cluster name to be replicated. The time depends on the environment and the DNS replication configuration. If resolving the cluster name isn't successful, in most cases you can succeed by using the machine name of a node that is an active member of the cluster instead of the cluster name.

Step 3.4: Configure a cluster witness

We recommend that you configure a witness for the cluster, so that clusters with three or more servers can withstand two servers failing or being offline. A two-server deployment requires a cluster witness; otherwise, either server going offline causes the other to become unavailable as well. With these systems, you can use a file share as a witness or use a cloud witness.
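The witness recommendation above follows from majority voting. As a rough illustration only, this hypothetical helper models each node and the witness as one vote and assumes the failures happen at the same time. It deliberately ignores dynamic quorum, which adjusts votes as nodes go down one at a time, so real clusters can do better than this model predicts.

```powershell
# Hypothetical helper: simplified majority-vote arithmetic (static votes, simultaneous failures).
function Test-QuorumSurvives {
    param(
        [Parameter(Mandatory)] [int] $NodeCount,
        [Parameter(Mandatory)] [int] $FailedNodes,
        [switch] $HasWitness
    )
    $witnessVotes = if ($HasWitness) { 1 } else { 0 }
    $totalVotes = $NodeCount + $witnessVotes
    $remainingVotes = $totalVotes - $FailedNodes
    # Quorum requires strictly more than half of all votes
    return ($remainingVotes * 2) -gt $totalVotes
}

Test-QuorumSurvives -NodeCount 2 -FailedNodes 1               # False
Test-QuorumSurvives -NodeCount 2 -FailedNodes 1 -HasWitness   # True
Test-QuorumSurvives -NodeCount 4 -FailedNodes 2               # False
Test-QuorumSurvives -NodeCount 4 -FailedNodes 2 -HasWitness   # True
```

The four calls mirror the two cases in the text: in this model, a two-server cluster loses quorum when one server fails unless a witness is present, and a four-server cluster needs the witness to survive two simultaneous failures.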

For more information, see the documentation on configuring a file share witness and a cloud witness.

Step 3.5: Enable Storage Spaces Direct

After creating the cluster, use the Enable-ClusterStorageSpacesDirect PowerShell cmdlet, which puts the storage system into Storage Spaces Direct mode and automatically does the following:

Creates a pool: Creates a single large pool with a name like "S2D on Cluster1".

Configures the Storage Spaces Direct caches: If more than one media (drive) type is available for Storage Spaces Direct to use, it enables the fastest ones as cache devices (read and write in most cases).

Creates tiers: Creates two tiers as default tiers. One is called "Capacity" and the other is called "Performance". The cmdlet analyzes the devices and configures each tier with the mix of device types and resiliency.
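The cache rule above can be expressed as a small lookup. This is a sketch under stated assumptions: only NVMe, SSD, and HDD media are considered, the fastest type present becomes the cache, and no cache is configured automatically when all drives share one type. Get-S2DCacheMediaType is a hypothetical name; Enable-ClusterStorageSpacesDirect makes this decision for you.

```powershell
# Hypothetical helper: which media type would be claimed for cache, fastest first.
function Get-S2DCacheMediaType {
    param([Parameter(Mandatory)] [string[]] $MediaTypes)
    $speedOrder = @('NVMe', 'SSD', 'HDD')   # assumption: only these three types are present
    $present = $speedOrder | Where-Object { $MediaTypes -contains $_ }
    # A single media type means no automatic cache
    if (@($present).Count -lt 2) { return $null }
    return @($present)[0]
}

Get-S2DCacheMediaType -MediaTypes 'SSD', 'HDD'           # SSD
Get-S2DCacheMediaType -MediaTypes 'HDD', 'NVMe', 'SSD'   # NVMe
```

For a server whose drives appear to be SSDs plus HDDs, like the sample inventory in step 3.1, this model picks the SSDs as cache.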

From the management system, in a PowerShell command window opened with Administrator privileges, run the following command. The cluster name is the name of the cluster that you created in the previous steps. If you run this command locally on one of the nodes, the -CimSession parameter isn't necessary.

Enable-ClusterStorageSpacesDirect -CimSession <ClusterName>

You can also use a node name instead of the cluster name in the command above to enable Storage Spaces Direct. Using the node name may be more reliable because of DNS replication delays that can occur with the newly created cluster name.

When this command finishes, which may take several minutes, the system is ready for volumes to be created.

Step 3.6: Create volumes

We recommend using the New-Volume cmdlet because it provides the fastest and most straightforward experience. This single cmdlet automatically creates the virtual disk, partitions and formats it, creates the volume with a matching name, and adds it to cluster shared volumes, all in one easy step.

For more information, see Creating volumes in Storage Spaces Direct.
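As an illustration of the single New-Volume call described above, here is a sketch that builds a parameter set for splatting. New-Volume and its -FriendlyName, -FileSystem, -StoragePoolFriendlyName, and -Size parameters exist in the Storage module; the helper name, the volume name and size, and the S2D* pool wildcard (chosen to match the pool name created in step 3.5) are assumptions for this example.

```powershell
# Hypothetical helper: build New-Volume parameters for a Storage Spaces Direct volume.
function New-S2DVolumeParameters {
    param(
        [Parameter(Mandatory)] [string] $Name,
        [Parameter(Mandatory)] [uint64] $SizeBytes
    )
    @{
        FriendlyName            = $Name
        FileSystem              = 'CSVFS_ReFS'   # formats the volume as a cluster shared volume
        StoragePoolFriendlyName = 'S2D*'         # assumption: matches a pool named like "S2D on Cluster1"
        Size                    = $SizeBytes
    }
}

$params = New-S2DVolumeParameters -Name 'Volume1' -SizeBytes 1TB
# On a cluster node (not run here): New-Volume @params
```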

Step 3.7: Optionally enable the CSV cache

You can optionally enable the cluster shared volume (CSV) cache to use system memory (RAM) as a write-through block-level cache of read operations that aren't already cached by the Windows cache manager. This can improve performance for applications such as Hyper-V. The CSV cache can boost the performance of read requests and is also useful for Scale-Out File Server scenarios.

Enabling the CSV cache reduces the amount of memory available to run VMs on a hyperconverged cluster, so you'll have to balance storage performance with the memory available to VMs.
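To make the trade-off above concrete, here is a rough per-server memory budget. Assumptions, stated plainly: the CSV cache size is reserved on every server (the text below describes the size as per server), HostReserveGB is a hypothetical allowance for the host OS, and the numbers are illustrative rather than sizing guidance.

```powershell
# Hypothetical helper: memory left for VMs after the CSV cache and a host reserve.
function Get-VmMemoryBudget {
    param(
        [Parameter(Mandatory)] [int] $RamGBPerServer,
        [Parameter(Mandatory)] [int] $CsvCacheMB,        # same unit as BlockCacheSize (MB)
        [int] $HostReserveGB = 4,                        # hypothetical host OS allowance
        [int] $ServerCount = 1
    )
    $perServerGB = $RamGBPerServer - $HostReserveGB - ($CsvCacheMB / 1024)
    [pscustomobject]@{
        PerServerGB = $perServerGB
        ClusterGB   = $perServerGB * $ServerCount
    }
}

Get-VmMemoryBudget -RamGBPerServer 256 -CsvCacheMB 2048 -ServerCount 4   # PerServerGB 250, ClusterGB 1000
```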

To set the size of the CSV cache, open a PowerShell session on the management system with an account that has administrator permissions on the storage cluster, and then use this script, changing the $ClusterName and $CSVCacheSize variables as appropriate (this example sets a 2 GB CSV cache per server):

$ClusterName = "StorageSpacesDirect1"
$CSVCacheSize = 2048 # Size in MB

Write-Output "Setting the CSV cache..."
(Get-Cluster $ClusterName).BlockCacheSize = $CSVCacheSize

$CSVCurrentCacheSize = (Get-Cluster $ClusterName).BlockCacheSize
Write-Output "$ClusterName CSV cache size: $CSVCurrentCacheSize MB"

For more info, see Using the CSV in-memory read cache.

Step 3.8: Deploy virtual machines for hyperconverged deployments

If you're deploying a hyperconverged cluster, the last step is to provision virtual machines on the Storage Spaces Direct cluster.

The virtual machine's files should be stored in the system's CSV namespace (example: c:\ClusterStorage\Volume1), just like clustered VMs on failover clusters.
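A quick pre-flight check can confirm that the paths you plan to use for VM files sit in the CSV namespace mentioned above. This is a hypothetical helper that assumes the default C:\ClusterStorage\ root; it only inspects the path string and doesn't confirm that the folder is actually on a CSV.

```powershell
# Hypothetical helper: does a path fall under the CSV namespace root?
function Test-CsvPath {
    param([Parameter(Mandatory)] [string] $Path)
    # -like is case-insensitive; the pattern requires a volume folder plus at least one more element
    return $Path -like 'C:\ClusterStorage\*\*'
}

Test-CsvPath -Path 'C:\ClusterStorage\Volume1\VMs\VM01\VM01.vhdx'   # True
Test-CsvPath -Path 'D:\VMs\VM01\VM01.vhdx'                          # False
```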

You can use in-box tools or other tools, such as System Center Virtual Machine Manager, to manage the storage and virtual machines.

Step 4: Deploy Scale-Out File Server for converged solutions

If you're deploying a converged solution, the next step is to create a Scale-Out File Server instance and set up some file shares. If you're deploying a hyperconverged cluster, you're finished and don't need this section.

Step 4.1: Create the Scale-Out File Server role

The next step in setting up the cluster services for your file server is to create the clustered file server role, which is when you create the Scale-Out File Server instance on which your continuously available file shares are hosted.

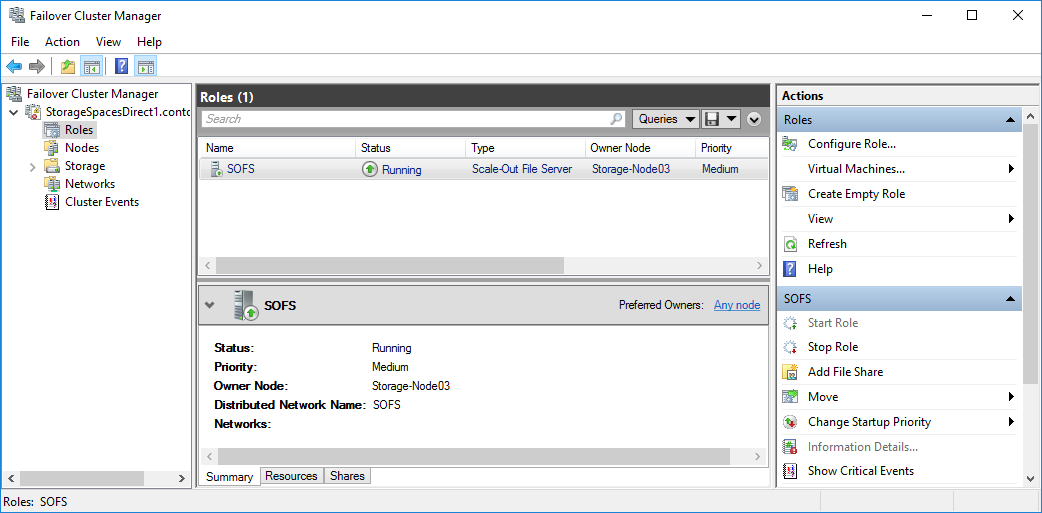

To create a Scale-Out File Server role by using Failover Cluster Manager

In Failover Cluster Manager, select the cluster, go to Roles, and then click Configure Role....

The High Availability Wizard appears. On the Select Role page, click File Server.

On the File Server Type page, click Scale-Out File Server for application data. On the Client Access Point page, type a name for the Scale-Out File Server.

Verify that the role was set up successfully by going to Roles and confirming that the Status column shows Running next to the clustered file server role you created, as shown in Figure 1.

Figure 1: Failover Cluster Manager showing the Scale-Out File Server with the Running status

Note

After creating the clustered role, there might be some network propagation delays that could prevent you from creating file shares on it for a few minutes, or potentially longer.

To create a Scale-Out File Server role by using Windows PowerShell

In a Windows PowerShell session that's connected to the file server cluster, enter the following command to create the Scale-Out File Server role, replacing SOFS with the name you want for the role and FSCLUSTER with the name of your cluster:

Add-ClusterScaleOutFileServerRole -Name SOFS -Cluster FSCLUSTER

Note

After creating the clustered role, there might be some network propagation delays that could prevent you from creating file shares on it for a few minutes, or potentially longer. If the SOFS role fails immediately and won't start, it might be because the cluster's computer object doesn't have permission to create a computer account for the SOFS role. For help with that, see this blog post: Scale-Out File Server Role Fails To Start With Event IDs 1205, 1069, and 1194.

Step 4.2: Create file shares

After you've created your virtual disks and added them to CSVs, it's time to create file shares on them: one file share per CSV per virtual disk. System Center Virtual Machine Manager (VMM) is probably the handiest way to do this because it handles permissions for you, but if you don't have it in your environment, you can use Windows PowerShell to partially automate the deployment.

Use the scripts included in the SMB Share Configuration for Hyper-V Workloads script, which partially automate the process of creating groups and shares. They're written for Hyper-V workloads, so if you're deploying other workloads, you might have to modify the settings or perform additional steps after you create the shares. For example, if you're using Microsoft SQL Server, the SQL Server service account must be granted full control on the share and the file system.

Note

You'll have to update the group membership when you add cluster nodes, unless you use System Center Virtual Machine Manager to create your shares.

To create file shares by using PowerShell scripts, do the following:

Download the scripts included in SMB Share Configuration for Hyper-V Workloads to one of the nodes of the file server cluster.

Open a Windows PowerShell session with Domain Administrator credentials on the management system, and then use the following script to create an Active Directory group for the Hyper-V computer objects, changing the values of the variables as appropriate for your environment:

# Replace the values of these variables
$HyperVClusterName = "Compute01"
$HyperVObjectADGroupSamName = "Hyper-VServerComputerAccounts" <#No spaces#>
$ScriptFolder = "C:\Scripts\SetupSMBSharesWithHyperV"

# Start of script itself
CD $ScriptFolder
.\ADGroupSetup.ps1 -HyperVObjectADGroupSamName $HyperVObjectADGroupSamName -HyperVClusterName $HyperVClusterName

Open a Windows PowerShell session with Administrator credentials on one of the storage nodes, and then use the following script to create shares for each CSV and grant administrative permissions for the shares to the Domain Admins group and the compute cluster.

# Replace the values of these variables
$StorageClusterName = "StorageSpacesDirect1"
$HyperVObjectADGroupSamName = "Hyper-VServerComputerAccounts" <#No spaces#>
$SOFSName = "SOFS"
$SharePrefix = "Share"
$ScriptFolder = "C:\Scripts\SetupSMBSharesWithHyperV"

# Start of the script itself
CD $ScriptFolder
Get-ClusterSharedVolume -Cluster $StorageClusterName | ForEach-Object {
    # Volume number derived from a path such as C:\ClusterStorage\Volume1
    $VolumeNumber = $_.SharedVolumeInfo.FriendlyVolumeName.TrimStart("C:\ClusterStorage\Volume")
    $ShareName = $SharePrefix + $VolumeNumber
    Write-Host "Creating share $ShareName on $($_.Name) on Volume: $($_.SharedVolumeInfo.FriendlyVolumeName)"
    .\FileShareSetup.ps1 -HyperVClusterName $StorageClusterName -CSVVolumeNumber $VolumeNumber -ScaleOutFSName $SOFSName -ShareName $ShareName -HyperVObjectADGroupSamName $HyperVObjectADGroupSamName
}
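The script above derives the volume number by calling TrimStart with "C:\ClusterStorage\Volume". In .NET, TrimStart treats that argument as a set of characters to strip, not as a prefix, so it returns the expected number only for paths of the form C:\ClusterStorage\VolumeN; a volume folder with another name would be trimmed character by character. The following is an alternative sketch, not part of the downloadable scripts: Get-ShareNameFromCsvPath is a hypothetical helper that uses a regular expression and fails loudly on unexpected paths.

```powershell
# Hypothetical helper: derive a share name from a CSV path such as C:\ClusterStorage\Volume3.
function Get-ShareNameFromCsvPath {
    param(
        [Parameter(Mandatory)] [string] $CsvPath,
        [string] $SharePrefix = 'Share'
    )
    # Capture the trailing digits after "\Volume" (case-insensitive)
    if ($CsvPath -match '\\Volume(\d+)$') {
        return $SharePrefix + $Matches[1]
    }
    throw "Unexpected CSV path format: $CsvPath"
}

Get-ShareNameFromCsvPath -CsvPath 'C:\ClusterStorage\Volume3'   # Share3
```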

Step 4.3: Enable Kerberos constrained delegation

To set up Kerberos constrained delegation for remote scenario management and increased Live Migration security, use the KCDSetup.ps1 script included in SMB Share Configuration for Hyper-V Workloads from one of the storage cluster nodes. Here's a little wrapper for the script:

$HyperVClusterName = "Compute01"
$ScaleOutFSName = "SOFS"
$ScriptFolder = "C:\Scripts\SetupSMBSharesWithHyperV"

CD $ScriptFolder
.\KCDSetup.ps1 -HyperVClusterName $HyperVClusterName -ScaleOutFSName $ScaleOutFSName -EnableLM