Overview of Encoding in Media Foundation

This topic is an overview of the file encoding APIs provided in Microsoft Media Foundation.

Terminology

Encoding is a general term that covers several distinct processes:

- Encoding an audio or video stream into a compressed format; for example, encoding a video stream as H.264 video.

- Multiplexing ("muxing") one or more streams into a single byte stream. Typically, the incoming streams are encoded first. This step might involve packetizing the encoded streams.

- Writing a multiplexed byte stream to a file, such as an MP4 or Advanced Systems Format (ASF) file. Alternatively, the multiplexed stream can be sent over the network.

The following diagram shows these three processes:

Variations of this process include transcoding and remuxing:

- Transcoding means decoding an existing file, re-encoding the streams, and re-multiplexing the encoded streams. Transcoding might be done to convert a file from one encoding type to another; for example, to convert H.264 video to Windows Media Video (WMV). It can also be done to change the encoded bit rate, the video frame size, the frame rate, or other format parameters.

- Remultiplexing, or remuxing, means demultiplexing a file and re-multiplexing the streams, with no decode or encode step. This might be done to change how the audio and video packets are multiplexed, to remove a stream, or to combine streams from two different source files.

- Transrating is a special case of transcoding in which the bit rate is changed without changing the compression type. For example, you might convert a high-bit-rate file to a lower bit rate. A typical use of transrating is synchronizing media content from a PC to a portable device. If the device does not support a high bit rate, the file might be transrated before it is copied to the device.

The following block diagram shows the transcoding process.

The following block diagram shows the remuxing process.

This documentation sometimes uses the term encoding to include both transcoding and remuxing. When it is important to distinguish between them, the documentation will note the difference.

See also: Media Foundation: Essential Concepts.

Media Foundation Encoding Architecture

At the lowest layer of the Media Foundation architecture, the following types of component are used for encoding:

- For transcoding, Media Sources are used to demultiplex the source file.

- For the encoding process, Media Foundation Transforms are used to decode and encode streams.

- For the multiplexing process, Media Sinks are used to multiplex the streams and write the multiplexed stream to a file or network.

The following diagram shows the data flow between these components in a transcoding scenario:

Most applications will not use these components directly. Instead, an application will use higher-level APIs that manage these lower-level components. Media Foundation provides two higher-level APIs for encoding:

-

The Media Session provides an end-to-end pipeline that moves data from the media source, through the codecs, and finally to the media sink. The application controls the Media Session and receives status events from the Media Session.

-

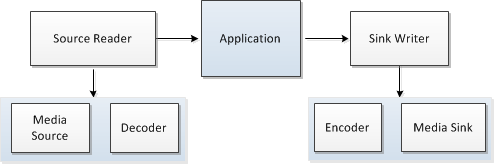

Source Reader plus Sink Writer

-

The Source Reader wraps the media source and optionally the decoders. It delivers either encoded or decoded samples the application. The Sink Writer wraps the media sink and optionally the encoders. The application passes samples to the Sink Writer.

The following diagram shows the Media Session:

The Transcode API (the blue shaded box) is a set of APIs, introduced in Windows 7, that make it easier to configure the Media Session for encoding.

The next diagram shows the Source Reader and Sink Writer:

Related topics