在 Analytics Platform System (PDW) 中配置 PolyBase 以访问 Hadoop 中的外部数据

本文介绍如何在 Analytics Platform System (PDW) 或 APS 设备上使用 PolyBase 查询 Hadoop 中的外部数据。

先决条件

PolyBase 支持两个 Hadoop 提供程序:Hortonworks 数据平台 (HDP) 和 Cloudera 分布式 Hadoop (CDH)。 Hadoop 遵循其新版本的“Major.Minor.Version”模式,且主要和次要版本中支持的所有版本均受支持。 支持以下 Hadoop 提供程序:

- Linux/Windows Server 上的 Hortonworks HDP 1.3

- Linux 上的 Hortonworks HDP 2.1 - 2.6

- Linux 上的 Hortonworks HDP 3.0 - 3.1

- Windows Server 上的 Hortonworks HDP 2.1 - 2.3

- Linux 上的 Cloudera CDH 4.3

- Linux 上的 Cloudera CDH 5.1 - 5.5、5.9 - 5.13、5.15 和 5.16

配置 Hadoop 连接

首先,配置 APS 以使用特定的 Hadoop 提供程序。

使用“hadoop connectivity”运行 sp_configure 并为提供程序设置适当的值。 若要为提供程序查找值,请参阅 PolyBase 连接配置。

-- Values map to various external data sources. -- Example: value 7 stands for Hortonworks HDP 2.1 to 2.6 and 3.0 - 3.1 on Linux, -- 2.1 to 2.3 on Windows Server, and Azure Blob Storage sp_configure @configname = 'hadoop connectivity', @configvalue = 7; GO RECONFIGURE GO使用 Appliance Configuration Manager 上的“服务状态”页重启 APS 区域。

启用下推计算

若要提高查询性能,请对 Hadoop 群集启用下推计算:

打开与 APS PDW 控制节点的远程桌面连接。

在控制节点上查找文件

yarn-site.xml。 通常,路径为C:\Program Files\Microsoft SQL Server Parallel Data Warehouse\100\Hadoop\conf\。对于 Hadoop 计算机,在 Hadoop 配置目录中查找类似文件。 在文件中,查找并复制配置密钥

yarn.application.classpath的值。在控件节点上的

yarn.site.xml文件中,找到yarn.application.classpath属性。 将 Hadoop 计算机的值粘贴到值元素中。对于所有 CDH 5.X 版本,都需要将

mapreduce.application.classpath配置参数添加到yarn.site.xml文件的末尾或添加到mapred-site.xml文件中。 HortonWorks 在yarn.application.classpath配置中包括了这些配置。 有关示例,请参阅 PolyBase 配置。

CDH 5.X 群集默认值的示例 XML 文件

Yarn-site.xml 与 yarn.application.classpath 和 mapreduce.application.classpath 配置。

<?xml version="1.0" encoding="utf-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>yarn.resourcemanager.connect.max-wait.ms</name>

<value>40000</value>

</property>

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>30000</value>

</property>

<!-- Applications' Configuration-->

<property>

<description>CLASSPATH for YARN applications. A comma-separated list of CLASSPATH entries</description>

<!-- Please set this value to the correct yarn.application.classpath that matches your server side configuration -->

<!-- For example: $HADOOP_CONF_DIR,$HADOOP_COMMON_HOME/share/hadoop/common/*,$HADOOP_COMMON_HOME/share/hadoop/common/lib/*,$HADOOP_HDFS_HOME/share/hadoop/hdfs/*,$HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*,$HADOOP_YARN_HOME/share/hadoop/yarn/*,$HADOOP_YARN_HOME/share/hadoop/yarn/lib/* -->

<name>yarn.application.classpath</name>

<value>$HADOOP_CLIENT_CONF_DIR,$HADOOP_CONF_DIR,$HADOOP_COMMON_HOME/*,$HADOOP_COMMON_HOME/lib/*,$HADOOP_HDFS_HOME/*,$HADOOP_HDFS_HOME/lib/*,$HADOOP_YARN_HOME/*,$HADOOP_YARN_HOME/lib/,$HADOOP_MAPRED_HOME/*,$HADOOP_MAPRED_HOME/lib/*,$MR2_CLASSPATH*</value>

</property>

<!-- kerberos security information, PLEASE FILL THESE IN ACCORDING TO HADOOP CLUSTER CONFIG

<property>

<name>yarn.resourcemanager.principal</name>

<value></value>

</property>

-->

</configuration>

如果选择将两个配置设置拆分为 mapred-site.xml 和 yarn-site.xml,则这两个文件将如下所示:

对于 yarn-site.xml:

<?xml version="1.0" encoding="utf-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>yarn.resourcemanager.connect.max-wait.ms</name>

<value>40000</value>

</property>

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>30000</value>

</property>

<!-- Applications' Configuration-->

<property>

<description>CLASSPATH for YARN applications. A comma-separated list of CLASSPATH entries</description>

<!-- Please set this value to the correct yarn.application.classpath that matches your server side configuration -->

<!-- For example: $HADOOP_CONF_DIR,$HADOOP_COMMON_HOME/share/hadoop/common/*,$HADOOP_COMMON_HOME/share/hadoop/common/lib/*,$HADOOP_HDFS_HOME/share/hadoop/hdfs/*,$HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*,$HADOOP_YARN_HOME/share/hadoop/yarn/*,$HADOOP_YARN_HOME/share/hadoop/yarn/lib/* -->

<name>yarn.application.classpath</name>

<value>$HADOOP_CLIENT_CONF_DIR,$HADOOP_CONF_DIR,$HADOOP_COMMON_HOME/*,$HADOOP_COMMON_HOME/lib/*,$HADOOP_HDFS_HOME/*,$HADOOP_HDFS_HOME/lib/*,$HADOOP_YARN_HOME/*,$HADOOP_YARN_HOME/lib/*</value>

</property>

<!-- kerberos security information, PLEASE FILL THESE IN ACCORDING TO HADOOP CLUSTER CONFIG

<property>

<name>yarn.resourcemanager.principal</name>

<value></value>

</property>

-->

</configuration>

对于 mapred-site.xml:

请注意属性 mapreduce.application.classpath。 在 CDH 5.x 中,你会发现遵守 Ambari 中的相同命名约定的配置值。

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration xmlns:xi="http://www.w3.org/2001/XInclude">

<property>

<name>mapred.min.split.size</name>

<value>1073741824</value>

</property>

<property>

<name>mapreduce.app-submission.cross-platform</name>

<value>true</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>$HADOOP_MAPRED_HOME/*,$HADOOP_MAPRED_HOME/lib/*,$MR2_CLASSPATH</value>

</property>

<!--kerberos security information, PLEASE FILL THESE IN ACCORDING TO HADOOP CLUSTER CONFIG

<property>

<name>mapreduce.jobhistory.principal</name>

<value></value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value></value>

</property>

-->

</configuration>

HDP 3.X 群集默认值的示例 XML 文件

对于 yarn-site.xml:

<?xml version="1.0" encoding="utf-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>yarn.resourcemanager.connect.max-wait.ms</name>

<value>40000</value>

</property>

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>30000</value>

</property>

<!-- Applications' Configuration-->

<property>

<description>CLASSPATH for YARN applications. A comma-separated list of CLASSPATH entries</description>

<!-- Please set this value to the correct yarn.application.classpath that matches your server side configuration -->

<!-- For example: $HADOOP_CONF_DIR,$HADOOP_COMMON_HOME/share/hadoop/common/*,$HADOOP_COMMON_HOME/share/hadoop/common/lib/*,$HADOOP_HDFS_HOME/share/hadoop/hdfs/*,$HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*,$HADOOP_YARN_HOME/share/hadoop/yarn/*,$HADOOP_YARN_HOME/share/hadoop/yarn/lib/* -->

<name>yarn.application.classpath</name>

<value>$HADOOP_CONF_DIR,/usr/hdp/3.1.0.0-78/hadoop/*,/usr/hdp/3.1.0.0-78/hadoop/lib/*,/usr/hdp/current/hadoop-hdfs-client/*,/usr/hdp/current/hadoop-hdfs-client/lib/*,/usr/hdp/current/hadoop-yarn-client/*,/usr/hdp/current/hadoop-yarn-client/lib/*,/usr/hdp/3.1.0.0-78/hadoop-mapreduce/*,/usr/hdp/3.1.0.0-78/hadoop-yarn/*,/usr/hdp/3.1.0.0-78/hadoop-yarn/lib/*,/usr/hdp/3.1.0.0-78/hadoop-mapreduce/lib/*,/usr/hdp/share/hadoop/common/*,/usr/hdp/share/hadoop/common/lib/*,/usr/hdp/share/hadoop/tools/lib/*</value>

</property>

<!-- kerberos security information, PLEASE FILL THESE IN ACCORDING TO HADOOP CLUSTER CONFIG

<property>

<name>yarn.resourcemanager.principal</name>

<value></value>

</property>

-->

</configuration>

配置外部表

若要查询 Hadoop 数据源中的数据,必须定义外部表以在 Transact-SQL 查询中使用。 以下步骤介绍如何配置外部表。

在数据库上创建主密钥。 这是加密凭据密钥所必需的。

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'S0me!nfo';为受 Kerberos 保护的 Hadoop 群集创建数据库范围凭据。

-- IDENTITY: the Kerberos user name. -- SECRET: the Kerberos password CREATE DATABASE SCOPED CREDENTIAL HadoopUser1 WITH IDENTITY = '<hadoop_user_name>', Secret = '<hadoop_password>';使用 CREATE EXTERNAL DATA SOURCE 创建外部数据源。

-- LOCATION (Required) : Hadoop Name Node IP address and port. -- RESOURCE MANAGER LOCATION (Optional): Hadoop Resource Manager location to enable pushdown computation. -- CREDENTIAL (Optional): the database scoped credential, created above. CREATE EXTERNAL DATA SOURCE MyHadoopCluster WITH ( TYPE = HADOOP, LOCATION ='hdfs://10.xxx.xx.xxx:xxxx', RESOURCE_MANAGER_LOCATION = '10.xxx.xx.xxx:xxxx', CREDENTIAL = HadoopUser1 );使用 CREATE EXTERNAL FILE FORMAT 创建外部文件格式。

-- FORMAT TYPE: Type of format in Hadoop (DELIMITEDTEXT, RCFILE, ORC, PARQUET). CREATE EXTERNAL FILE FORMAT TextFileFormat WITH ( FORMAT_TYPE = DELIMITEDTEXT, FORMAT_OPTIONS (FIELD_TERMINATOR ='|', USE_TYPE_DEFAULT = TRUE)使用 CREATE EXTERNAL TABLE 创建指向存储在 Hadoop 中的数据的外部表。 在此示例中,外部数据包含汽车传感器数据。

-- LOCATION: path to file or directory that contains the data (relative to HDFS root). CREATE EXTERNAL TABLE [dbo].[CarSensor_Data] ( [SensorKey] int NOT NULL, [CustomerKey] int NOT NULL, [GeographyKey] int NULL, [Speed] float NOT NULL, [YearMeasured] int NOT NULL ) WITH (LOCATION='/Demo/', DATA_SOURCE = MyHadoopCluster, FILE_FORMAT = TextFileFormat );在外部表上创建统计信息。

CREATE STATISTICS StatsForSensors on CarSensor_Data(CustomerKey, Speed)

PolyBase Queries

PolyBase 适用于三个函数:

- 对外部表的即席查询。

- 导入数据。

- 导出数据。

下面的查询提供了虚构汽车传感器数据示例。

即席查询

下面的即席查询联接与 Hadoop 数据的关系。 它选择驾驶速度超过 35 mph 的客户,将 APS 中存储的结构化客户数据与 Hadoop 中存储的汽车传感器数据相联接。

SELECT DISTINCT Insured_Customers.FirstName,Insured_Customers.LastName,

Insured_Customers. YearlyIncome, CarSensor_Data.Speed

FROM Insured_Customers, CarSensor_Data

WHERE Insured_Customers.CustomerKey = CarSensor_Data.CustomerKey and CarSensor_Data.Speed > 35

ORDER BY CarSensor_Data.Speed DESC

OPTION (FORCE EXTERNALPUSHDOWN); -- or OPTION (DISABLE EXTERNALPUSHDOWN)

导入数据

下面的查询将外部数据导入 APS。 此示例将快速驾驶员数据导入 APS 以进一步深入分析。 为提高性能,它利用 APS 中的列存储技术。

CREATE TABLE Fast_Customers

WITH

(CLUSTERED COLUMNSTORE INDEX, DISTRIBUTION = HASH (CustomerKey))

AS

SELECT DISTINCT

Insured_Customers.CustomerKey, Insured_Customers.FirstName, Insured_Customers.LastName,

Insured_Customers.YearlyIncome, Insured_Customers.MaritalStatus

from Insured_Customers INNER JOIN

(

SELECT * FROM CarSensor_Data where Speed > 35

) AS SensorD

ON Insured_Customers.CustomerKey = SensorD.CustomerKey

导出数据

以下查询将数据从 APS 导出到 Hadoop。 它可用于将关系数据存档到 Hadoop,同时仍能够对其进行查询。

-- Export data: Move old data to Hadoop while keeping it query-able via an external table.

CREATE EXTERNAL TABLE [dbo].[FastCustomers2009]

WITH (

LOCATION='/archive/customer/2009',

DATA_SOURCE = HadoopHDP2,

FILE_FORMAT = TextFileFormat

)

AS

SELECT T.* FROM Insured_Customers T1 JOIN CarSensor_Data T2

ON (T1.CustomerKey = T2.CustomerKey)

WHERE T2.YearMeasured = 2009 and T2.Speed > 40;

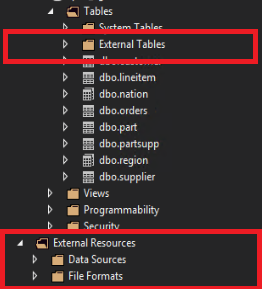

查看 SSMS 中的 PolyBase 对象

在 SQL Server Data Tools 中,外部表在单独的文件夹“外部表”中显示。 外部数据源和外部文件格式位于“外部资源” 下的子文件夹中。

相关内容

- 有关 Hadoop 安全设置,请参阅配置 Hadoop 安全性。

- 有关 PolyBase 的详细信息,请参阅什么是 PolyBase?。