Tutorial: Detect liveness in faces

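Face liveness detection determines whether a face in an input video stream is real (live) or fake (spoofed). It is an important building block in a biometric authentication system: it prevents imposters from gaining access by impersonating another person with a photograph, a video, a mask, or other means.

The goal of liveness detection is to ensure that the system is interacting with a physically present, live person at the time of authentication. These systems are becoming increasingly important with the rise of digital finance, remote access control, and online identity verification processes.

The Azure AI Face liveness detection solution successfully defends against a variety of spoof types, including paper printouts, 2D/3D masks, and spoof presentations on phones and laptops. Liveness detection is an active area of research, with continuous improvements being made to counteract increasingly sophisticated spoofing attacks. Improvements are rolled out to the client and service components over time as the overall solution becomes more robust against new types of attacks.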

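Important

The Face client SDKs for liveness are a gated feature. You must request access to the liveness feature by filling out the Face Recognition intake form. When your Azure subscription is granted access, you can download the Face liveness SDK.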

Introduction

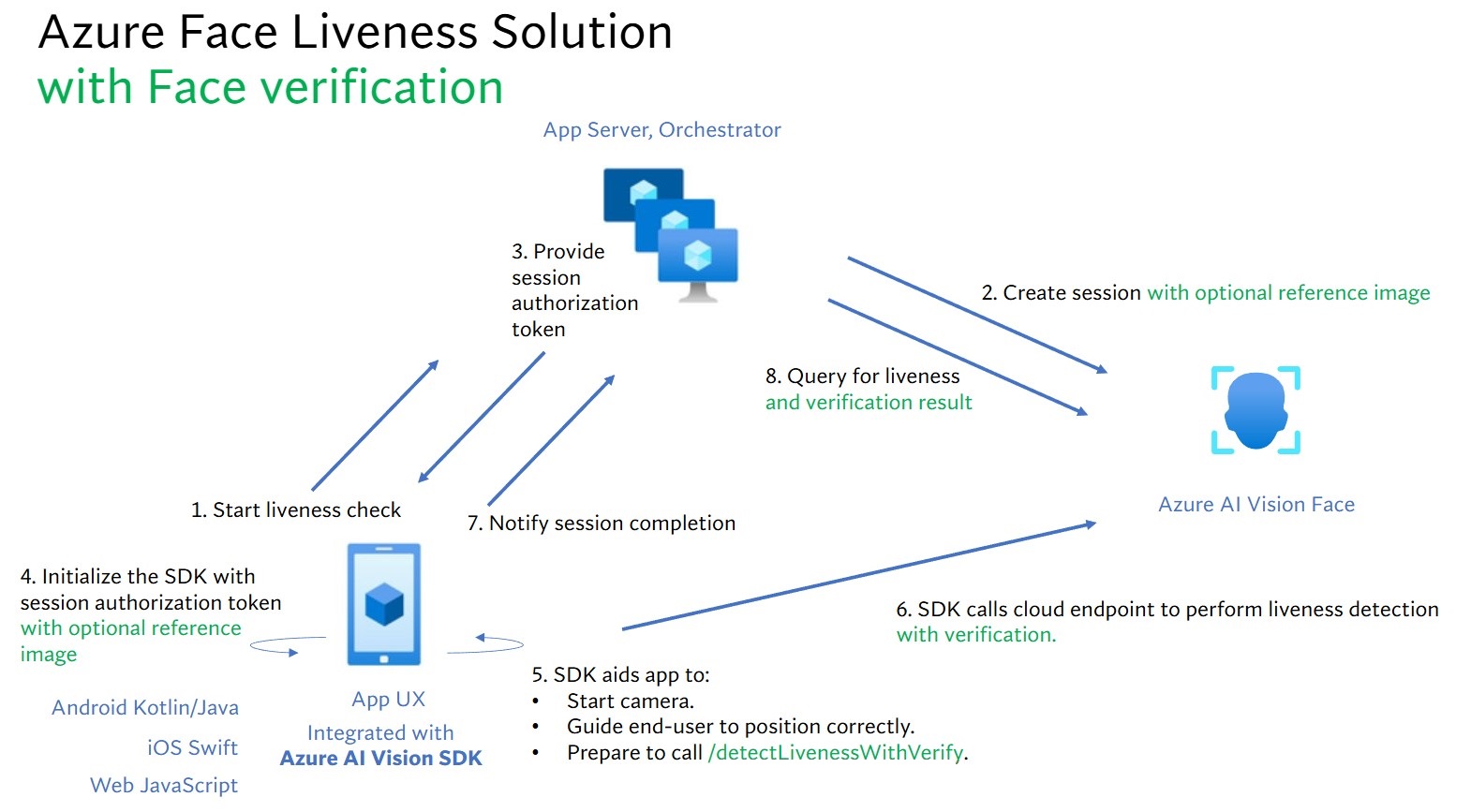

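Integrating the liveness solution involves two distinct components: a frontend mobile/web application and an app server/orchestrator.

Frontend application: The frontend application receives authorization from the app server to initiate liveness detection. Its primary objective is to activate the camera and guide end users accurately through the liveness detection process.

App server: The app server acts as a backend server that creates liveness detection sessions and obtains an authorization token from the Face service for a particular session. This token authorizes the frontend application to perform liveness detection. The app server's objectives are to manage the sessions, grant authorization to the frontend application, and view the results of the liveness detection process.

Additionally, face verification can be combined with liveness detection to verify that the person is the specific person you designated. The following table describes the liveness detection features: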

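| Feature | Description |
| --- | --- |
| Liveness detection | Determines whether an input is real or fake. Only the app server has the authority to start the liveness check and query the result. |
| Liveness detection with face verification | Determines whether an input is real or fake and verifies the identity of the person based on a reference image you provide. Either the app server or the frontend application can provide the reference image. Only the app server has the authority to initialize the liveness check and query the result. |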

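This tutorial demonstrates how to operate a frontend application and an app server to perform liveness detection, and liveness detection with face verification, across various language SDKs.

Prerequisites

Azure subscription - Create one for free

Your Azure account must have the Cognitive Services Contributor role assigned. To get this role assigned to your account, follow the steps in the Assign roles documentation, or contact your administrator.

Once you have your Azure subscription, create a Face resource in the Azure portal to get your key and endpoint. After it deploys, select Go to resource.

You need the key and endpoint from the resource you create to connect your application to the Face service.

You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Access to the Azure AI Vision Face client SDK for mobile (iOS and Android) and web. To get started, you need to apply for the Face Recognition Limited Access features to get access to the SDK. For more information, see the Face Limited Access page.

We provide SDKs in different languages for frontend applications and app servers. See the following instructions to set up your frontend application and app server.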

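Download the SDK for the frontend application

Once you have access to the SDK, follow the instructions in the azure-ai-vision-sdk GitHub repository to integrate the UI and the code into your native mobile application. The liveness SDK supports Java/Kotlin for Android mobile applications, Swift for iOS mobile applications, and JavaScript for web applications.

Once you've added the code to your application, the SDK handles starting the camera, guiding the end user to adjust their position, composing the liveness payload, and calling the Azure AI Face cloud service to process the liveness payload.

Download the Azure AI Face client library for the app server

The app server/orchestrator is responsible for controlling the lifecycle of a liveness session. The app server must create a session before liveness detection can be performed; it can then query the result and delete the session when the liveness check is finished. We offer a library in various languages to make it easy to implement your app server. Install the package for the language you use.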

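Create environment variables

In this example, write your credentials to environment variables on the local machine that runs the application.

Go to the Azure portal. If the resource you created in the Prerequisites section deployed successfully, select Go to resource under Next steps. You can find your key and endpoint under Resource Management on the Keys and Endpoint page. Your resource key isn't the same as your Azure subscription ID.

To set the environment variables for your key and endpoint, open a console window and follow the instructions for your operating system and development environment.

To set the FACE_APIKEY environment variable, replace <your_key> with one of the keys for your resource.

To set the FACE_ENDPOINT environment variable, replace <your_endpoint> with the endpoint for your resource.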

setx FACE_APIKEY <your_key>

setx FACE_ENDPOINT <your_endpoint>

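After you add the environment variables with setx, you might need to restart any running programs that read them, including the console window.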

export FACE_APIKEY=<your_key>

export FACE_ENDPOINT=<your_endpoint>

After you add the environment variables with export, run source ~/.bashrc from your console window to make the changes effective.
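As a minimal sketch of how an app server might read these two variables, the following Python snippet loads FACE_ENDPOINT and FACE_APIKEY and fails early when either one is missing. The names FaceConfig and load_face_config are hypothetical helpers introduced here for illustration; they are not part of any Azure SDK.

```python
import os
from typing import Mapping, NamedTuple


class FaceConfig(NamedTuple):
    """Hypothetical container for the two settings used in this tutorial."""
    endpoint: str
    api_key: str


def load_face_config(env: Mapping[str, str] = os.environ) -> FaceConfig:
    """Read FACE_ENDPOINT and FACE_APIKEY, raising if either is unset or empty."""
    missing = [name for name in ("FACE_ENDPOINT", "FACE_APIKEY") if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    # Strip a trailing slash so request paths can be appended consistently.
    return FaceConfig(endpoint=env["FACE_ENDPOINT"].rstrip("/"), api_key=env["FACE_APIKEY"])
```

Passing the environment as a parameter keeps the helper easy to test: call load_face_config() in the app server, or pass a plain dictionary in a unit test.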

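The high-level steps involved in liveness orchestration are as follows:

The frontend application starts the liveness check and notifies the app server.

The app server creates a new liveness session with the Azure AI Face service. The service creates the liveness session and responds with a session authorization token. For more information about each request parameter involved in creating a liveness session, see the Liveness Create Session Operation.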

var endpoint = new Uri(System.Environment.GetEnvironmentVariable("FACE_ENDPOINT"));

var credential = new AzureKeyCredential(System.Environment.GetEnvironmentVariable("FACE_APIKEY"));

var sessionClient = new FaceSessionClient(endpoint, credential);

var createContent = new CreateLivenessSessionContent(LivenessOperationMode.Passive)

{

DeviceCorrelationId = "723d6d03-ef33-40a8-9682-23a1feb7bccd",

SendResultsToClient = false,

};

var createResponse = await sessionClient.CreateLivenessSessionAsync(createContent);

var sessionId = createResponse.Value.SessionId;

Console.WriteLine($"Session created.");

Console.WriteLine($"Session id: {sessionId}");

Console.WriteLine($"Auth token: {createResponse.Value.AuthToken}");

String endpoint = System.getenv("FACE_ENDPOINT");

String accountKey = System.getenv("FACE_APIKEY");

FaceSessionClient sessionClient = new FaceSessionClientBuilder()

.endpoint(endpoint)

.credential(new AzureKeyCredential(accountKey))

.buildClient();

CreateLivenessSessionContent parameters = new CreateLivenessSessionContent(LivenessOperationMode.PASSIVE)

.setDeviceCorrelationId("723d6d03-ef33-40a8-9682-23a1feb7bccd")

.setSendResultsToClient(false);

CreateLivenessSessionResult creationResult = sessionClient.createLivenessSession(parameters);

System.out.println("Session created.");

System.out.println("Session id: " + creationResult.getSessionId());

System.out.println("Auth token: " + creationResult.getAuthToken());

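import os

from azure.core.credentials import AzureKeyCredential

from azure.ai.vision.face.aio import FaceSessionClient

from azure.ai.vision.face.models import CreateLivenessSessionContent, LivenessOperationMode

# The calls below use await, so run them inside an async function (for example, through asyncio.run).

endpoint = os.environ["FACE_ENDPOINT"]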

key = os.environ["FACE_APIKEY"]

face_session_client = FaceSessionClient(endpoint=endpoint, credential=AzureKeyCredential(key))

created_session = await face_session_client.create_liveness_session(

CreateLivenessSessionContent(

liveness_operation_mode=LivenessOperationMode.PASSIVE,

device_correlation_id="723d6d03-ef33-40a8-9682-23a1feb7bccd",

send_results_to_client=False,

)

)

print("Session created.")

print(f"Session id: {created_session.session_id}")

print(f"Auth token: {created_session.auth_token}")

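import { AzureKeyCredential } from '@azure/core-auth';

import createFaceClient, { isUnexpected } from '@azure-rest/ai-vision-face';

const endpoint = process.env['FACE_ENDPOINT'];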

const apikey = process.env['FACE_APIKEY'];

const credential = new AzureKeyCredential(apikey);

const client = createFaceClient(endpoint, credential);

const createLivenessSessionResponse = await client.path('/detectLiveness/singleModal/sessions').post({

body: {

livenessOperationMode: 'Passive',

deviceCorrelationId: '723d6d03-ef33-40a8-9682-23a1feb7bccd',

sendResultsToClient: false,

},

});

if (isUnexpected(createLivenessSessionResponse)) {

throw new Error(createLivenessSessionResponse.body.error.message);

}

console.log('Session created.');

console.log(`Session ID: ${createLivenessSessionResponse.body.sessionId}`);

console.log(`Auth token: ${createLivenessSessionResponse.body.authToken}`);

curl --request POST --location "%FACE_ENDPOINT%/face/v1.1-preview.1/detectliveness/singlemodal/sessions" ^

--header "Ocp-Apim-Subscription-Key: %FACE_APIKEY%" ^

--header "Content-Type: application/json" ^

--data ^

"{ ^

""livenessOperationMode"": ""passive"", ^

""deviceCorrelationId"": ""723d6d03-ef33-40a8-9682-23a1feb7bccd"", ^

""sendResultsToClient"": false ^

}"

curl --request POST --location "${FACE_ENDPOINT}/face/v1.1-preview.1/detectliveness/singlemodal/sessions" \

--header "Ocp-Apim-Subscription-Key: ${FACE_APIKEY}" \

--header "Content-Type: application/json" \

--data \

'{

"livenessOperationMode": "passive",

"deviceCorrelationId": "723d6d03-ef33-40a8-9682-23a1feb7bccd",

"sendResultsToClient": false

}'

An example of the response body:

{

"sessionId": "a6e7193e-b638-42e9-903f-eaf60d2b40a5",

"authToken": "<session-authorization-token>"

}
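To make the shape of the create-session call concrete, here is a sketch in Python that builds the same REST request as the curl samples using only the standard library, without sending it. The helper name build_create_session_request is hypothetical; the path, header names, and body fields are taken from the samples above, and sendResultsToClient is sent as a JSON boolean on the assumption that the service expects one.

```python
import json
import urllib.request

# Path copied from the curl samples in this tutorial.
SESSIONS_PATH = "/face/v1.1-preview.1/detectliveness/singlemodal/sessions"


def build_create_session_request(
    endpoint: str, api_key: str, device_correlation_id: str
) -> urllib.request.Request:
    """Build (but do not send) the POST request that creates a liveness session."""
    body = {
        "livenessOperationMode": "passive",
        "deviceCorrelationId": device_correlation_id,
        "sendResultsToClient": False,
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + SESSIONS_PATH,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, pass the request to urllib.request.urlopen and parse the JSON reply, which should contain sessionId and authToken as in the example response. Only authToken is meant to travel to the frontend application; the resource key stays on the app server.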

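The app server returns the session authorization token to the frontend application.

The frontend application provides the session authorization token during initialization of the Azure AI Vision SDK.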

mServiceOptions?.setTokenCredential(com.azure.android.core.credential.TokenCredential { _, callback ->

callback.onSuccess(com.azure.android.core.credential.AccessToken("<INSERT_TOKEN_HERE>", org.threeten.bp.OffsetDateTime.MAX))

})

serviceOptions?.authorizationToken = "<INSERT_TOKEN_HERE>"

azureAIVisionFaceAnalyzer.token = "<INSERT_TOKEN_HERE>"

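The SDK then starts the camera, guides the user to position themselves correctly, and prepares the payload to call the liveness detection service endpoint.

The SDK calls the Azure AI Vision Face service to perform liveness detection. Once the service responds, the SDK notifies the frontend application that the liveness check is complete.

The frontend application relays the completion of the liveness check to the app server.

The app server can now query the Azure AI Vision Face service for the liveness detection result.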

var getResultResponse = await sessionClient.GetLivenessSessionResultAsync(sessionId);

var sessionResult = getResultResponse.Value;

Console.WriteLine($"Session id: {sessionResult.Id}");

Console.WriteLine($"Session status: {sessionResult.Status}");

Console.WriteLine($"Liveness detection request id: {sessionResult.Result?.RequestId}");

Console.WriteLine($"Liveness detection received datetime: {sessionResult.Result?.ReceivedDateTime}");

Console.WriteLine($"Liveness detection decision: {sessionResult.Result?.Response.Body.LivenessDecision}");

Console.WriteLine($"Session created datetime: {sessionResult.CreatedDateTime}");

Console.WriteLine($"Auth token TTL (seconds): {sessionResult.AuthTokenTimeToLiveInSeconds}");

Console.WriteLine($"Session expired: {sessionResult.SessionExpired}");

Console.WriteLine($"Device correlation id: {sessionResult.DeviceCorrelationId}");

LivenessSession sessionResult = sessionClient.getLivenessSessionResult(creationResult.getSessionId());

System.out.println("Session id: " + sessionResult.getId());

System.out.println("Session status: " + sessionResult.getStatus());

System.out.println("Liveness detection request id: " + sessionResult.getResult().getRequestId());

System.out.println("Liveness detection received datetime: " + sessionResult.getResult().getReceivedDateTime());

System.out.println("Liveness detection decision: " + sessionResult.getResult().getResponse().getBody().getLivenessDecision());

System.out.println("Session created datetime: " + sessionResult.getCreatedDateTime());

System.out.println("Auth token TTL (seconds): " + sessionResult.getAuthTokenTimeToLiveInSeconds());

System.out.println("Session expired: " + sessionResult.isSessionExpired());

System.out.println("Device correlation id: " + sessionResult.getDeviceCorrelationId());

liveness_result = await face_session_client.get_liveness_session_result(

created_session.session_id

)

print(f"Session id: {liveness_result.id}")

print(f"Session status: {liveness_result.status}")

print(f"Liveness detection request id: {liveness_result.result.request_id}")

print(f"Liveness detection received datetime: {liveness_result.result.received_date_time}")

print(f"Liveness detection decision: {liveness_result.result.response.body.liveness_decision}")

print(f"Session created datetime: {liveness_result.created_date_time}")

print(f"Auth token TTL (seconds): {liveness_result.auth_token_time_to_live_in_seconds}")

print(f"Session expired: {liveness_result.session_expired}")

print(f"Device correlation id: {liveness_result.device_correlation_id}")

const getLivenessSessionResultResponse = await client.path('/detectLiveness/singleModal/sessions/{sessionId}', createLivenessSessionResponse.body.sessionId).get();

if (isUnexpected(getLivenessSessionResultResponse)) {

throw new Error(getLivenessSessionResultResponse.body.error.message);

}

console.log(`Session id: ${getLivenessSessionResultResponse.body.id}`);

console.log(`Session status: ${getLivenessSessionResultResponse.body.status}`);

console.log(`Liveness detection request id: ${getLivenessSessionResultResponse.body.result?.requestId}`);

console.log(`Liveness detection received datetime: ${getLivenessSessionResultResponse.body.result?.receivedDateTime}`);

console.log(`Liveness detection decision: ${getLivenessSessionResultResponse.body.result?.response.body.livenessDecision}`);

console.log(`Session created datetime: ${getLivenessSessionResultResponse.body.createdDateTime}`);

console.log(`Auth token TTL (seconds): ${getLivenessSessionResultResponse.body.authTokenTimeToLiveInSeconds}`);

console.log(`Session expired: ${getLivenessSessionResultResponse.body.sessionExpired}`);

console.log(`Device correlation id: ${getLivenessSessionResultResponse.body.deviceCorrelationId}`);

curl --request GET --location "%FACE_ENDPOINT%/face/v1.1-preview.1/detectliveness/singlemodal/sessions/<session-id>" ^

--header "Ocp-Apim-Subscription-Key: %FACE_APIKEY%"

curl --request GET --location "${FACE_ENDPOINT}/face/v1.1-preview.1/detectliveness/singlemodal/sessions/<session-id>" \

--header "Ocp-Apim-Subscription-Key: ${FACE_APIKEY}"

An example of the response body:

{

"status": "ResultAvailable",

"result": {

"id": 1,

"sessionId": "a3dc62a3-49d5-45a1-886c-36e7df97499a",

"requestId": "cb2b47dc-b2dd-49e8-bdf9-9b854c7ba843",

"receivedDateTime": "2023-10-31T16:50:15.6311565+00:00",

"request": {

"url": "/face/v1.1-preview.1/detectliveness/singlemodal",

"method": "POST",

"contentLength": 352568,

"contentType": "multipart/form-data; boundary=--------------------------482763481579020783621915",

"userAgent": ""

},

"response": {

"body": {

"livenessDecision": "realface",

"target": {

"faceRectangle": {

"top": 59,

"left": 121,

"width": 409,

"height": 395

},

"fileName": "content.bin",

"timeOffsetWithinFile": 0,

"imageType": "Color"

},

"modelVersionUsed": "2022-10-15-preview.04"

},

"statusCode": 200,

"latencyInMilliseconds": 1098

},

"digest": "537F5CFCD8D0A7C7C909C1E0F0906BF27375C8E1B5B58A6914991C101E0B6BFC"

},

"id": "a3dc62a3-49d5-45a1-886c-36e7df97499a",

"createdDateTime": "2023-10-31T16:49:33.6534925+00:00",

"authTokenTimeToLiveInSeconds": 600,

"deviceCorrelationId": "723d6d03-ef33-40a8-9682-23a1feb7bccd",

"sessionExpired": false

}
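The decision is nested several levels deep in the session result, so a small accessor can be useful on the app server. The following Python sketch reads the JSON shape shown in the example response. The function names are hypothetical, and the only status and decision values it relies on are the ones that appear in the example ("ResultAvailable" and "realface"); anything else is treated as "no positive decision".

```python
from typing import Any, Mapping, Optional


def extract_liveness_decision(session: Mapping[str, Any]) -> Optional[str]:
    """Return result.response.body.livenessDecision, or None if it is not available."""
    if session.get("status") != "ResultAvailable":
        return None
    result = session.get("result") or {}
    response = result.get("response") or {}
    # Only trust the body when the recorded liveness call itself succeeded.
    if response.get("statusCode") != 200:
        return None
    return (response.get("body") or {}).get("livenessDecision")


def is_real_face(session: Mapping[str, Any]) -> bool:
    """True only when the service explicitly reported a real face."""
    return extract_liveness_decision(session) == "realface"
```

Treating every missing or unexpected value as a failure keeps the check conservative: access is granted only on an explicit positive decision.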

The app server can delete the session once you no longer need to query its result.

await sessionClient.DeleteLivenessSessionAsync(sessionId);

Console.WriteLine($"The session {sessionId} is deleted.");

sessionClient.deleteLivenessSession(creationResult.getSessionId());

System.out.println("The session " + creationResult.getSessionId() + " is deleted.");

await face_session_client.delete_liveness_session(

created_session.session_id

)

print(f"The session {created_session.session_id} is deleted.")

await face_session_client.close()

const deleteLivenessSessionResponse = await client.path('/detectLiveness/singleModal/sessions/{sessionId}', createLivenessSessionResponse.body.sessionId).delete();

if (isUnexpected(deleteLivenessSessionResponse)) {

throw new Error(deleteLivenessSessionResponse.body.error.message);

}

console.log(`The session ${createLivenessSessionResponse.body.sessionId} is deleted.`);

curl --request DELETE --location "%FACE_ENDPOINT%/face/v1.1-preview.1/detectliveness/singlemodal/sessions/<session-id>" ^

--header "Ocp-Apim-Subscription-Key: %FACE_APIKEY%"

curl --request DELETE --location "${FACE_ENDPOINT}/face/v1.1-preview.1/detectliveness/singlemodal/sessions/<session-id>" \

--header "Ocp-Apim-Subscription-Key: ${FACE_APIKEY}"
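The query and delete calls differ only in the HTTP method, which the following Python sketch makes explicit. It builds the request with the standard library and does not send it. The helper name build_session_request is hypothetical; the path and header come from the curl samples above.

```python
import urllib.request
from urllib.parse import quote

# Path copied from the curl samples in this tutorial.
SESSIONS_PATH = "/face/v1.1-preview.1/detectliveness/singlemodal/sessions"


def build_session_request(
    endpoint: str, api_key: str, session_id: str, method: str
) -> urllib.request.Request:
    """Build a GET (query result) or DELETE (remove session) request for one session."""
    if method not in ("GET", "DELETE"):
        raise ValueError("method must be GET or DELETE")
    # Percent-encode the session id so it cannot alter the URL path.
    url = f"{endpoint.rstrip('/')}{SESSIONS_PATH}/{quote(session_id, safe='')}"
    return urllib.request.Request(
        url=url,
        headers={"Ocp-Apim-Subscription-Key": api_key},
        method=method,
    )
```

A typical app server would issue the GET after the frontend reports completion, store the decision, and then issue the DELETE for the same session ID.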

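Combining face verification with liveness detection enables biometric verification of a particular person of interest, with the added guarantee that the person is physically present in front of the system.

There are two parts to integrating liveness with verification:

Select a good reference image.

Set up the orchestration of liveness with verification.

Select a reference image

Use the following tips to ensure that your input images give the most accurate recognition results.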

Technical requirements

The supported input image formats are JPEG, PNG, GIF (the first frame), and BMP.

The image file size should be no larger than 6 MB.

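You can use the qualityForRecognition attribute in the face detection operation, with an applicable detection model, as a general guideline of whether an image is likely of sufficient quality to attempt face recognition. Only "high" quality images are recommended for person enrollment, and quality of at least "medium" is recommended for identification scenarios.

Composition requirements

The photo is clear and sharp, not blurry, pixelated, distorted, or damaged.

The photo is not altered to remove face blemishes or change the face's appearance.

The photo must be in an RGB color supported format (JPEG, PNG, WEBP, BMP). The recommended face size is 200 pixels x 200 pixels. Face sizes larger than 200 pixels x 200 pixels don't result in better AI quality, and the image must be no larger than 6 MB.

The user is not wearing glasses, masks, hats, headphones, head coverings, or face coverings. The face should be free of any obstructions.

Facial jewelry is allowed provided that it doesn't hide the face.

Only one face should be visible in the photo.

The face should be in a neutral front-facing pose with both eyes open and the mouth closed, with no extreme facial expressions or head tilt.

The face should be free of any shadows or red eyes. Retake the photo if either of these occurs.

The background should be uniform and plain, free of any shadows.

The face should be centered within the image and fill at least 50% of the image.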
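Some of the technical requirements can be checked before a reference image is ever uploaded. The following Python sketch inspects raw image bytes for two of them: a supported container format (JPEG, PNG, GIF, or BMP, detected from the well-known file signatures) and a size that does not exceed 6 MB. The function names are hypothetical, and treating 6 MB as 6 x 1024 x 1024 bytes is an assumption, since the text does not say how the limit is counted. Composition requirements such as pose, lighting, and occlusion are not covered.

```python
from typing import List, Optional

# Assumption: 1 MB is counted as 1,048,576 bytes.
MAX_IMAGE_BYTES = 6 * 1024 * 1024

# Leading bytes ("magic numbers") of each supported container format.
_SIGNATURES = {
    "JPEG": (b"\xff\xd8\xff",),
    "PNG": (b"\x89PNG\r\n\x1a\n",),
    "GIF": (b"GIF87a", b"GIF89a"),
    "BMP": (b"BM",),
}


def detect_image_format(data: bytes) -> Optional[str]:
    """Return the format name whose signature matches the start of data, if any."""
    for name, prefixes in _SIGNATURES.items():
        if data.startswith(prefixes):
            return name
    return None


def check_reference_image(data: bytes) -> List[str]:
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    if detect_image_format(data) is None:
        problems.append("unsupported format (expected JPEG, PNG, GIF, or BMP)")
    if len(data) > MAX_IMAGE_BYTES:
        problems.append("file is larger than 6 MB")
    return problems
```

For example, check_reference_image(open("test.png", "rb").read()) returns an empty list for a small PNG file. Passing these checks says nothing about recognition quality, which the service reports through qualityForRecognition.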

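Set up the orchestration of liveness with verification

The high-level steps involved in liveness with verification orchestration are as follows:

Provide the verification reference image by either of the following two methods:

The app server provides the reference image when creating the liveness session. For more information about each request parameter involved in creating a liveness session with verification, see the Liveness With Verify Create Session Operation.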

var endpoint = new Uri(System.Environment.GetEnvironmentVariable("FACE_ENDPOINT"));

var credential = new AzureKeyCredential(System.Environment.GetEnvironmentVariable("FACE_APIKEY"));

var sessionClient = new FaceSessionClient(endpoint, credential);

var createContent = new CreateLivenessWithVerifySessionContent(LivenessOperationMode.Passive)

{

DeviceCorrelationId = "723d6d03-ef33-40a8-9682-23a1feb7bccd"

};

using var fileStream = new FileStream("test.png", FileMode.Open, FileAccess.Read);

var createResponse = await sessionClient.CreateLivenessWithVerifySessionAsync(createContent, fileStream);

var sessionId = createResponse.Value.SessionId;

Console.WriteLine("Session created.");

Console.WriteLine($"Session id: {sessionId}");

Console.WriteLine($"Auth token: {createResponse.Value.AuthToken}");

Console.WriteLine("The reference image:");

Console.WriteLine($" Face rectangle: {createResponse.Value.VerifyImage.FaceRectangle.Top}, {createResponse.Value.VerifyImage.FaceRectangle.Left}, {createResponse.Value.VerifyImage.FaceRectangle.Width}, {createResponse.Value.VerifyImage.FaceRectangle.Height}");

Console.WriteLine($" The quality for recognition: {createResponse.Value.VerifyImage.QualityForRecognition}");

String endpoint = System.getenv("FACE_ENDPOINT");

String accountKey = System.getenv("FACE_APIKEY");

FaceSessionClient sessionClient = new FaceSessionClientBuilder()

.endpoint(endpoint)

.credential(new AzureKeyCredential(accountKey))

.buildClient();

CreateLivenessWithVerifySessionContent parameters = new CreateLivenessWithVerifySessionContent(LivenessOperationMode.PASSIVE)

.setDeviceCorrelationId("723d6d03-ef33-40a8-9682-23a1feb7bccd")

.setSendResultsToClient(false);

Path path = Paths.get("test.png");

BinaryData data = BinaryData.fromFile(path);

CreateLivenessWithVerifySessionResult creationResult = sessionClient.createLivenessWithVerifySession(parameters, data);

System.out.println("Session created.");

System.out.println("Session id: " + creationResult.getSessionId());

System.out.println("Auth token: " + creationResult.getAuthToken());

System.out.println("The reference image:");

System.out.println(" Face rectangle: " + creationResult.getVerifyImage().getFaceRectangle().getTop() + " " + creationResult.getVerifyImage().getFaceRectangle().getLeft() + " " + creationResult.getVerifyImage().getFaceRectangle().getWidth() + " " + creationResult.getVerifyImage().getFaceRectangle().getHeight());

System.out.println(" The quality for recognition: " + creationResult.getVerifyImage().getQualityForRecognition());

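import os

from azure.core.credentials import AzureKeyCredential

from azure.ai.vision.face.aio import FaceSessionClient

from azure.ai.vision.face.models import CreateLivenessWithVerifySessionContent, LivenessOperationMode

# The calls below use await, so run them inside an async function (for example, through asyncio.run).

endpoint = os.environ["FACE_ENDPOINT"]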

key = os.environ["FACE_APIKEY"]

face_session_client = FaceSessionClient(endpoint=endpoint, credential=AzureKeyCredential(key))

reference_image_path = "test.png"

with open(reference_image_path, "rb") as fd:

    reference_image_content = fd.read()

created_session = await face_session_client.create_liveness_with_verify_session(

CreateLivenessWithVerifySessionContent(

liveness_operation_mode=LivenessOperationMode.PASSIVE,

device_correlation_id="723d6d03-ef33-40a8-9682-23a1feb7bccd",

),

verify_image=reference_image_content,

)

print("Session created.")

print(f"Session id: {created_session.session_id}")

print(f"Auth token: {created_session.auth_token}")

print("The reference image:")

print(f" Face rectangle: {created_session.verify_image.face_rectangle}")

print(f" The quality for recognition: {created_session.verify_image.quality_for_recognition}")

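import { readFileSync } from 'fs';

import { AzureKeyCredential } from '@azure/core-auth';

import createFaceClient, { isUnexpected } from '@azure-rest/ai-vision-face';

const endpoint = process.env['FACE_ENDPOINT'];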

const apikey = process.env['FACE_APIKEY'];

const credential = new AzureKeyCredential(apikey);

const client = createFaceClient(endpoint, credential);

const createLivenessSessionResponse = await client.path('/detectLivenessWithVerify/singleModal/sessions').post({

contentType: 'multipart/form-data',

body: [

{

name: 'VerifyImage',

// Note that this utilizes Node.js API.

// In browser environment, please use file input or drag and drop to read files.

body: readFileSync('test.png'),

},

{

name: 'Parameters',

body: {

livenessOperationMode: 'Passive',

deviceCorrelationId: '723d6d03-ef33-40a8-9682-23a1feb7bccd',

},

},

],

});

if (isUnexpected(createLivenessSessionResponse)) {

throw new Error(createLivenessSessionResponse.body.error.message);

}

console.log('Session created:');

console.log(`Session ID: ${createLivenessSessionResponse.body.sessionId}`);

console.log(`Auth token: ${createLivenessSessionResponse.body.authToken}`);

console.log('The reference image:');

console.log(` Face rectangle: ${JSON.stringify(createLivenessSessionResponse.body.verifyImage.faceRectangle)}`);

console.log(` The quality for recognition: ${createLivenessSessionResponse.body.verifyImage.qualityForRecognition}`);

curl --request POST --location "%FACE_ENDPOINT%/face/v1.1-preview.1/detectlivenesswithverify/singlemodal/sessions" ^

--header "Ocp-Apim-Subscription-Key: %FACE_APIKEY%" ^

--form "Parameters=""{\\\""livenessOperationMode\\\"": \\\""passive\\\"", \\\""deviceCorrelationId\\\"": \\\""723d6d03-ef33-40a8-9682-23a1feb7bccd\\\""}""" ^

--form "VerifyImage=@""test.png"""

curl --request POST --location "${FACE_ENDPOINT}/face/v1.1-preview.1/detectlivenesswithverify/singlemodal/sessions" \

--header "Ocp-Apim-Subscription-Key: ${FACE_APIKEY}" \

--form 'Parameters="{

\"livenessOperationMode\": \"passive\",

\"deviceCorrelationId\": \"723d6d03-ef33-40a8-9682-23a1feb7bccd\"

}"' \

--form 'VerifyImage=@"test.png"'

An example of the response body:

{

"verifyImage": {

"faceRectangle": {

"top": 506,

"left": 51,

"width": 680,

"height": 475

},

"qualityForRecognition": "high"

},

"sessionId": "3847ffd3-4657-4e6c-870c-8e20de52f567",

"authToken": "<session-authorization-token>"

}
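Because the create-session response already reports the quality of the reference image, the app server can reject a weak image before the user starts the liveness check. The Python sketch below compares verifyImage.qualityForRecognition against a minimum level. The function name is hypothetical, and the value "low" is assumed to be the level below "medium"; only "medium" and "high" are named in this tutorial.

```python
from typing import Any, Mapping

# Ordering of quality levels; "low" is an assumed third level.
_QUALITY_RANK = {"low": 0, "medium": 1, "high": 2}


def reference_image_meets_quality(
    create_response: Mapping[str, Any], minimum: str = "medium"
) -> bool:
    """Check verifyImage.qualityForRecognition against a minimum quality level."""
    if minimum not in _QUALITY_RANK:
        raise ValueError(f"unknown quality level: {minimum!r}")
    verify_image = create_response.get("verifyImage") or {}
    quality = str(verify_image.get("qualityForRecognition", "")).lower()
    # Unknown or missing values rank below every known level.
    return _QUALITY_RANK.get(quality, -1) >= _QUALITY_RANK[minimum]
```

With the example response above, reference_image_meets_quality(response, "high") is true. If the check fails, one option is to delete the session and ask for a better reference image.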

The frontend application provides the reference image when initializing the SDK. This scenario isn't supported in the web solution.

val singleFaceImageSource = VisionSource.fromFile("/path/to/image.jpg")

mFaceAnalysisOptions?.setRecognitionMode(RecognitionMode.valueOfVerifyingMatchToFaceInSingleFaceImage(singleFaceImageSource))

if let path = Bundle.main.path(forResource: "<IMAGE_RESOURCE_NAME>", ofType: "<IMAGE_RESOURCE_TYPE>"),

let image = UIImage(contentsOfFile: path),

let singleFaceImageSource = try? VisionSource(uiImage: image) {

try methodOptions.setRecognitionMode(.verifyMatchToFaceIn(singleFaceImage: singleFaceImageSource))

}

The app server can now query for the verification result in addition to the liveness result.

var getResultResponse = await sessionClient.GetLivenessWithVerifySessionResultAsync(sessionId);

var sessionResult = getResultResponse.Value;

Console.WriteLine($"Session id: {sessionResult.Id}");

Console.WriteLine($"Session status: {sessionResult.Status}");

Console.WriteLine($"Liveness detection request id: {sessionResult.Result?.RequestId}");

Console.WriteLine($"Liveness detection received datetime: {sessionResult.Result?.ReceivedDateTime}");

Console.WriteLine($"Liveness detection decision: {sessionResult.Result?.Response.Body.LivenessDecision}");

Console.WriteLine($"Verification result: {sessionResult.Result?.Response.Body.VerifyResult.IsIdentical}");

Console.WriteLine($"Verification confidence: {sessionResult.Result?.Response.Body.VerifyResult.MatchConfidence}");

Console.WriteLine($"Session created datetime: {sessionResult.CreatedDateTime}");

Console.WriteLine($"Auth token TTL (seconds): {sessionResult.AuthTokenTimeToLiveInSeconds}");

Console.WriteLine($"Session expired: {sessionResult.SessionExpired}");

Console.WriteLine($"Device correlation id: {sessionResult.DeviceCorrelationId}");

LivenessWithVerifySession sessionResult = sessionClient.getLivenessWithVerifySessionResult(creationResult.getSessionId());

System.out.println("Session id: " + sessionResult.getId());

System.out.println("Session status: " + sessionResult.getStatus());

System.out.println("Liveness detection request id: " + sessionResult.getResult().getRequestId());

System.out.println("Liveness detection received datetime: " + sessionResult.getResult().getReceivedDateTime());

System.out.println("Liveness detection decision: " + sessionResult.getResult().getResponse().getBody().getLivenessDecision());

System.out.println("Verification result: " + sessionResult.getResult().getResponse().getBody().getVerifyResult().isIdentical());

System.out.println("Verification confidence: " + sessionResult.getResult().getResponse().getBody().getVerifyResult().getMatchConfidence());

System.out.println("Session created datetime: " + sessionResult.getCreatedDateTime());

System.out.println("Auth token TTL (seconds): " + sessionResult.getAuthTokenTimeToLiveInSeconds());

System.out.println("Session expired: " + sessionResult.isSessionExpired());

System.out.println("Device correlation id: " + sessionResult.getDeviceCorrelationId());

liveness_result = await face_session_client.get_liveness_with_verify_session_result(

created_session.session_id

)

print(f"Session id: {liveness_result.id}")

print(f"Session status: {liveness_result.status}")

print(f"Liveness detection request id: {liveness_result.result.request_id}")

print(f"Liveness detection received datetime: {liveness_result.result.received_date_time}")

print(f"Liveness detection decision: {liveness_result.result.response.body.liveness_decision}")

print(f"Verification result: {liveness_result.result.response.body.verify_result.is_identical}")

print(f"Verification confidence: {liveness_result.result.response.body.verify_result.match_confidence}")

print(f"Session created datetime: {liveness_result.created_date_time}")

print(f"Auth token TTL (seconds): {liveness_result.auth_token_time_to_live_in_seconds}")

print(f"Session expired: {liveness_result.session_expired}")

print(f"Device correlation id: {liveness_result.device_correlation_id}")

const getLivenessSessionResultResponse = await client.path('/detectLivenessWithVerify/singleModal/sessions/{sessionId}', createLivenessSessionResponse.body.sessionId).get();

if (isUnexpected(getLivenessSessionResultResponse)) {

throw new Error(getLivenessSessionResultResponse.body.error.message);

}

console.log(`Session id: ${getLivenessSessionResultResponse.body.id}`);

console.log(`Session status: ${getLivenessSessionResultResponse.body.status}`);

console.log(`Liveness detection request id: ${getLivenessSessionResultResponse.body.result?.requestId}`);

console.log(`Liveness detection received datetime: ${getLivenessSessionResultResponse.body.result?.receivedDateTime}`);

console.log(`Liveness detection decision: ${getLivenessSessionResultResponse.body.result?.response.body.livenessDecision}`);

console.log(`Verification result: ${getLivenessSessionResultResponse.body.result?.response.body.verifyResult.isIdentical}`);

console.log(`Verification confidence: ${getLivenessSessionResultResponse.body.result?.response.body.verifyResult.matchConfidence}`);

console.log(`Session created datetime: ${getLivenessSessionResultResponse.body.createdDateTime}`);

console.log(`Auth token TTL (seconds): ${getLivenessSessionResultResponse.body.authTokenTimeToLiveInSeconds}`);

console.log(`Session expired: ${getLivenessSessionResultResponse.body.sessionExpired}`);

console.log(`Device correlation id: ${getLivenessSessionResultResponse.body.deviceCorrelationId}`);

curl --request GET --location "%FACE_ENDPOINT%/face/v1.1-preview.1/detectlivenesswithverify/singlemodal/sessions/<session-id>" ^

--header "Ocp-Apim-Subscription-Key: %FACE_APIKEY%"

curl --request GET --location "${FACE_ENDPOINT}/face/v1.1-preview.1/detectlivenesswithverify/singlemodal/sessions/<session-id>" \

--header "Ocp-Apim-Subscription-Key: ${FACE_APIKEY}"

An example of the response body:

{

"status": "ResultAvailable",

"result": {

"id": 1,

"sessionId": "3847ffd3-4657-4e6c-870c-8e20de52f567",

"requestId": "f71b855f-5bba-48f3-a441-5dbce35df291",

"receivedDateTime": "2023-10-31T17:03:51.5859307+00:00",

"request": {

"url": "/face/v1.1-preview.1/detectlivenesswithverify/singlemodal",

"method": "POST",

"contentLength": 352568,

"contentType": "multipart/form-data; boundary=--------------------------590588908656854647226496",

"userAgent": ""

},

"response": {

"body": {

"livenessDecision": "realface",

"target": {

"faceRectangle": {

"top": 59,

"left": 121,

"width": 409,

"height": 395

},

"fileName": "content.bin",

"timeOffsetWithinFile": 0,

"imageType": "Color"

},

"modelVersionUsed": "2022-10-15-preview.04",

"verifyResult": {

"matchConfidence": 0.9304124,

"isIdentical": true

}

},

"statusCode": 200,

"latencyInMilliseconds": 1306

},

"digest": "2B39F2E0EFDFDBFB9B079908498A583545EBED38D8ACA800FF0B8E770799F3BF"

},

"id": "3847ffd3-4657-4e6c-870c-8e20de52f567",

"createdDateTime": "2023-10-31T16:58:19.8942961+00:00",

"authTokenTimeToLiveInSeconds": 600,

"deviceCorrelationId": "723d6d03-ef33-40a8-9682-23a1feb7bccd",

"sessionExpired": true

}
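With verification enabled, the app server has two signals to combine: the liveness decision and the verification result. The following Python sketch reads both from the JSON shape shown in the example response and grants access only when both are positive. VerificationOutcome, summarize_verification, and should_grant_access are hypothetical names, and requiring both signals is one possible policy rather than a rule stated by the service.

```python
from typing import Any, Mapping, NamedTuple, Optional


class VerificationOutcome(NamedTuple):
    """Hypothetical summary of a liveness-with-verify session result."""
    is_live: bool
    is_identical: bool
    match_confidence: Optional[float]


def summarize_verification(session: Mapping[str, Any]) -> VerificationOutcome:
    """Extract the liveness decision and verifyResult fields from a session result."""
    result = session.get("result") or {}
    body = (result.get("response") or {}).get("body") or {}
    verify = body.get("verifyResult") or {}
    return VerificationOutcome(
        is_live=body.get("livenessDecision") == "realface",
        is_identical=verify.get("isIdentical") is True,
        match_confidence=verify.get("matchConfidence"),
    )


def should_grant_access(outcome: VerificationOutcome) -> bool:
    """Assumed policy: require a real face and a match with the reference image."""
    return outcome.is_live and outcome.is_identical
```

Keeping matchConfidence in the summary lets an application log it or apply its own stricter threshold on top of isIdentical.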

The app server can delete the session once you no longer need to query its result.

await sessionClient.DeleteLivenessWithVerifySessionAsync(sessionId);

Console.WriteLine($"The session {sessionId} is deleted.");

sessionClient.deleteLivenessWithVerifySession(creationResult.getSessionId());

System.out.println("The session " + creationResult.getSessionId() + " is deleted.");

await face_session_client.delete_liveness_with_verify_session(

created_session.session_id

)

print(f"The session {created_session.session_id} is deleted.")

await face_session_client.close()

const deleteLivenessSessionResponse = await client.path('/detectLivenessWithVerify/singleModal/sessions/{sessionId}', createLivenessSessionResponse.body.sessionId).delete();

if (isUnexpected(deleteLivenessSessionResponse)) {

throw new Error(deleteLivenessSessionResponse.body.error.message);

}

console.log(`The session ${createLivenessSessionResponse.body.sessionId} is deleted.`);

curl --request DELETE --location "%FACE_ENDPOINT%/face/v1.1-preview.1/detectlivenesswithverify/singlemodal/sessions/<session-id>" ^

--header "Ocp-Apim-Subscription-Key: %FACE_APIKEY%"

curl --request DELETE --location "${FACE_ENDPOINT}/face/v1.1-preview.1/detectlivenesswithverify/singlemodal/sessions/<session-id>" \

--header "Ocp-Apim-Subscription-Key: ${FACE_APIKEY}"

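Clean up resources

If you want to clean up and remove an Azure AI services subscription, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Related content

To learn about other options in the liveness APIs, see the Azure AI Vision SDK reference.

To learn more about the features available to orchestrate the liveness solution, see the Session REST API reference.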