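How to recognize intents with custom entity pattern matching

The Azure AI services Speech SDK has a built-in feature that provides intent recognition through simple language pattern matching. An intent is an action the user wants to perform: close a window, mark a checkbox, insert some text, and so on.

In this guide, you use the Speech SDK to develop a console application that derives intents from speech utterances spoken through your device's microphone. You learn how to:

- Create a Visual Studio project that references the Speech SDK NuGet package

- Create a speech configuration and get an intent recognizer

- Add intents and patterns through the Speech SDK API

- Add custom entities through the Speech SDK API

- Use asynchronous, event-driven continuous recognition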

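When to use pattern matching

Use pattern matching if:

- You only want to match strictly what the user said. These patterns are stricter than conversational language understanding (CLU).

- You don't have access to a CLU model, but still want intents.

For more information, see the pattern matching overview.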

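Prerequisites

Be sure you have the following items before you begin this guide:

- An Azure AI services resource or a unified Speech resource

- Visual Studio 2019 (any edition).

Create a project

Create a new C# console application project in Visual Studio 2019 and install the Speech SDK.

Start with some boilerplate code

Let's open Program.cs and add some code that works as a skeleton for our project.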

using System;

using System.Threading.Tasks;

using Microsoft.CognitiveServices.Speech;

using Microsoft.CognitiveServices.Speech.Intent;

namespace helloworld

{

class Program

{

static void Main(string[] args)

{

IntentPatternMatchingWithMicrophoneAsync().Wait();

}

private static async Task IntentPatternMatchingWithMicrophoneAsync()

{

var config = SpeechConfig.FromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");

}

}

}

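Create a speech configuration

Before you can initialize an IntentRecognizer object, you need to create a configuration that uses the key and Azure region of your Azure AI services prediction resource.

- Replace "YOUR_SUBSCRIPTION_KEY" with your Azure AI services prediction key.

- Replace "YOUR_SUBSCRIPTION_REGION" with your Azure AI services resource region.

This sample uses the FromSubscription() method to build the SpeechConfig. For a full list of available methods, see the SpeechConfig class.

Initialize an IntentRecognizer

Now create an IntentRecognizer. Insert this code right below your speech configuration.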

using (var recognizer = new IntentRecognizer(config))

{

}

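Add some intents

You need to associate some patterns with a PatternMatchingModel and apply the model to the IntentRecognizer.

We start by creating a PatternMatchingModel and adding a few intents to it.

Note

We can add multiple patterns to a PatternMatchingIntent.

Insert this code inside the using block: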

// Creates a Pattern Matching model and adds specific intents from your model. The

// Id is used to identify this model from others in the collection.

var model = new PatternMatchingModel("YourPatternMatchingModelId");

// Creates a pattern that uses groups of optional words. "[Go | Take me]" will match either "Go", "Take me", or "".

var patternWithOptionalWords = "[Go | Take me] to [floor|level] {floorName}";

// Creates a pattern that uses an optional entity and group that could be used to tie commands together.

var patternWithOptionalEntity = "Go to parking [{parkingLevel}]";

// You can also have multiple entities of the same name in a single pattern by appending a unique identifier

// to distinguish between the instances. For example:

var patternWithTwoOfTheSameEntity = "Go to floor {floorName:1} [and then go to floor {floorName:2}]";

// NOTE: Both floorName:1 and floorName:2 are tied to the same list of entries. The identifier can be a string

// and is separated from the entity name by a ':'

// Creates the pattern matching intents and adds them to the model

model.Intents.Add(new PatternMatchingIntent("ChangeFloors", patternWithOptionalWords, patternWithOptionalEntity, patternWithTwoOfTheSameEntity));

model.Intents.Add(new PatternMatchingIntent("DoorControl", "{action} the doors", "{action} doors", "{action} the door", "{action} door"));

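Add some custom entities

To take full advantage of the pattern matcher, you can customize your entities. We make "floorName" a list of the available floors. We also make "parkingLevel" an integer entity.

Insert this code below your intents: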

// Creates the "floorName" entity and set it to type list.

// Adds acceptable values. NOTE the default entity type is Any and so we do not need

// to declare the "action" entity.

model.Entities.Add(PatternMatchingEntity.CreateListEntity("floorName", EntityMatchMode.Strict, "ground floor", "lobby", "1st", "first", "one", "1", "2nd", "second", "two", "2"));

// Creates the "parkingLevel" entity as a pre-built integer

model.Entities.Add(PatternMatchingEntity.CreateIntegerEntity("parkingLevel"));

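Apply the model to the recognizer

Now the model must be applied to the IntentRecognizer. Multiple models can be used at once, so the API takes a collection of models.

Insert this code below your entities: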

var modelCollection = new LanguageUnderstandingModelCollection();

modelCollection.Add(model);

recognizer.ApplyLanguageModels(modelCollection);

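Recognize an intent

From the IntentRecognizer object, you call the RecognizeOnceAsync() method. This method asks the Speech service to recognize speech in a single phrase and to stop recognizing speech once the phrase is identified.

Insert this code after applying the language models: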

Console.WriteLine("Say something...");

var result = await recognizer.RecognizeOnceAsync();

Display the recognition results (or errors)

When the Speech service returns the recognition result, we print the result.

Insert this code below var result = await recognizer.RecognizeOnceAsync();:

if (result.Reason == ResultReason.RecognizedIntent)

{

Console.WriteLine($"RECOGNIZED: Text={result.Text}");

Console.WriteLine($" Intent Id={result.IntentId}.");

var entities = result.Entities;

switch (result.IntentId)

{

case "ChangeFloors":

if (entities.TryGetValue("floorName", out string floorName))

{

Console.WriteLine($" FloorName={floorName}");

}

if (entities.TryGetValue("floorName:1", out floorName))

{

Console.WriteLine($" FloorName:1={floorName}");

}

if (entities.TryGetValue("floorName:2", out floorName))

{

Console.WriteLine($" FloorName:2={floorName}");

}

if (entities.TryGetValue("parkingLevel", out string parkingLevel))

{

Console.WriteLine($" ParkingLevel={parkingLevel}");

}

break;

case "DoorControl":

if (entities.TryGetValue("action", out string action))

{

Console.WriteLine($" Action={action}");

}

break;

}

}

else if (result.Reason == ResultReason.RecognizedSpeech)

{

Console.WriteLine($"RECOGNIZED: Text={result.Text}");

Console.WriteLine($" Intent not recognized.");

}

else if (result.Reason == ResultReason.NoMatch)

{

Console.WriteLine($"NOMATCH: Speech could not be recognized.");

}

else if (result.Reason == ResultReason.Canceled)

{

var cancellation = CancellationDetails.FromResult(result);

Console.WriteLine($"CANCELED: Reason={cancellation.Reason}");

if (cancellation.Reason == CancellationReason.Error)

{

Console.WriteLine($"CANCELED: ErrorCode={cancellation.ErrorCode}");

Console.WriteLine($"CANCELED: ErrorDetails={cancellation.ErrorDetails}");

Console.WriteLine($"CANCELED: Did you set the speech resource key and region values?");

}

}

Check your code

At this point, your code should look like this:

using System;

using System.Threading.Tasks;

using Microsoft.CognitiveServices.Speech;

using Microsoft.CognitiveServices.Speech.Intent;

namespace helloworld

{

class Program

{

static void Main(string[] args)

{

IntentPatternMatchingWithMicrophoneAsync().Wait();

}

private static async Task IntentPatternMatchingWithMicrophoneAsync()

{

var config = SpeechConfig.FromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");

using (var recognizer = new IntentRecognizer(config))

{

// Creates a Pattern Matching model and adds specific intents from your model. The

// Id is used to identify this model from others in the collection.

var model = new PatternMatchingModel("YourPatternMatchingModelId");

// Creates a pattern that uses groups of optional words. "[Go | Take me]" will match either "Go", "Take me", or "".

var patternWithOptionalWords = "[Go | Take me] to [floor|level] {floorName}";

// Creates a pattern that uses an optional entity and group that could be used to tie commands together.

var patternWithOptionalEntity = "Go to parking [{parkingLevel}]";

// You can also have multiple entities of the same name in a single pattern by appending a unique identifier

// to distinguish between the instances. For example:

var patternWithTwoOfTheSameEntity = "Go to floor {floorName:1} [and then go to floor {floorName:2}]";

// NOTE: Both floorName:1 and floorName:2 are tied to the same list of entries. The identifier can be a string

// and is separated from the entity name by a ':'

// Adds some intents to look for specific patterns.

model.Intents.Add(new PatternMatchingIntent("ChangeFloors", patternWithOptionalWords, patternWithOptionalEntity, patternWithTwoOfTheSameEntity));

model.Intents.Add(new PatternMatchingIntent("DoorControl", "{action} the doors", "{action} doors", "{action} the door", "{action} door"));

// Creates the "floorName" entity and set it to type list.

// Adds acceptable values. NOTE the default entity type is Any and so we do not need

// to declare the "action" entity.

model.Entities.Add(PatternMatchingEntity.CreateListEntity("floorName", EntityMatchMode.Strict, "ground floor", "lobby", "1st", "first", "one", "1", "2nd", "second", "two", "2"));

// Creates the "parkingLevel" entity as a pre-built integer

model.Entities.Add(PatternMatchingEntity.CreateIntegerEntity("parkingLevel"));

var modelCollection = new LanguageUnderstandingModelCollection();

modelCollection.Add(model);

recognizer.ApplyLanguageModels(modelCollection);

Console.WriteLine("Say something...");

var result = await recognizer.RecognizeOnceAsync();

if (result.Reason == ResultReason.RecognizedIntent)

{

Console.WriteLine($"RECOGNIZED: Text={result.Text}");

Console.WriteLine($" Intent Id={result.IntentId}.");

var entities = result.Entities;

switch (result.IntentId)

{

case "ChangeFloors":

if (entities.TryGetValue("floorName", out string floorName))

{

Console.WriteLine($" FloorName={floorName}");

}

if (entities.TryGetValue("floorName:1", out floorName))

{

Console.WriteLine($" FloorName:1={floorName}");

}

if (entities.TryGetValue("floorName:2", out floorName))

{

Console.WriteLine($" FloorName:2={floorName}");

}

if (entities.TryGetValue("parkingLevel", out string parkingLevel))

{

Console.WriteLine($" ParkingLevel={parkingLevel}");

}

break;

case "DoorControl":

if (entities.TryGetValue("action", out string action))

{

Console.WriteLine($" Action={action}");

}

break;

}

}

else if (result.Reason == ResultReason.RecognizedSpeech)

{

Console.WriteLine($"RECOGNIZED: Text={result.Text}");

Console.WriteLine($" Intent not recognized.");

}

else if (result.Reason == ResultReason.NoMatch)

{

Console.WriteLine($"NOMATCH: Speech could not be recognized.");

}

else if (result.Reason == ResultReason.Canceled)

{

var cancellation = CancellationDetails.FromResult(result);

Console.WriteLine($"CANCELED: Reason={cancellation.Reason}");

if (cancellation.Reason == CancellationReason.Error)

{

Console.WriteLine($"CANCELED: ErrorCode={cancellation.ErrorCode}");

Console.WriteLine($"CANCELED: ErrorDetails={cancellation.ErrorDetails}");

Console.WriteLine($"CANCELED: Did you set the speech resource key and region values?");

}

}

}

}

}

}

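Build and run your app

Now you're ready to build your app and test our speech recognition using the Speech service.

- Compile the code - From the Visual Studio menu bar, choose Build > Build Solution.

- Start your app - From the menu bar, choose Debug > Start Debugging, or press F5.

- Start recognition - The app prompts you to say something. The default language is English. Your speech is sent to the Speech service, transcribed as text, and rendered in the console.

For example, if you say "Take me to floor 2", this should be the output: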

Say something...

RECOGNIZED: Text=Take me to floor 2.

Intent Id=ChangeFloors.

FloorName=2

As another example, if you say "Take me to floor 7", this should be the output:

Say something...

RECOGNIZED: Text=Take me to floor 7.

Intent not recognized.

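No intent was recognized because 7 isn't in the list of valid values for floorName.

Create a project

Create a new C++ console application project in Visual Studio 2019 and install the Speech SDK.

Start with some boilerplate code

Let's open helloworld.cpp and add some code that works as a skeleton for our project.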

#include <iostream>

#include <speechapi_cxx.h>

using namespace Microsoft::CognitiveServices::Speech;

using namespace Microsoft::CognitiveServices::Speech::Intent;

int main()

{

std::cout << "Hello World!\n";

auto config = SpeechConfig::FromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");

}

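Create a speech configuration

Before you can initialize an IntentRecognizer object, you need to create a configuration that uses the key and Azure region of your Azure AI services prediction resource.

- Replace "YOUR_SUBSCRIPTION_KEY" with your Azure AI services prediction key.

- Replace "YOUR_SUBSCRIPTION_REGION" with your Azure AI services resource region.

This sample uses the FromSubscription() method to build the SpeechConfig. For a full list of available methods, see the SpeechConfig class.

Initialize an IntentRecognizer

Now create an IntentRecognizer. Insert this code right below your speech configuration.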

auto intentRecognizer = IntentRecognizer::FromConfig(config);

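Add some intents

You need to associate some patterns with a PatternMatchingModel and apply the model to the IntentRecognizer.

We start by creating a PatternMatchingModel and adding a few intents to it. A PatternMatchingIntent is a struct, so we just use the inline syntax.

Note

We can add multiple patterns to a PatternMatchingIntent.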

auto model = PatternMatchingModel::FromId("myNewModel");

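// push_back takes a single argument, so each intent is one braced initializer.

// This assumes PatternMatchingIntent is an aggregate of { phrases, id }, in that order.

model->Intents.push_back({{"Take me to floor {floorName}.", "Go to floor {floorName}."}, "ChangeFloors"});

model->Intents.push_back({{"{action} the door."}, "OpenCloseDoor"});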

Add some custom entities

To take full advantage of the pattern matcher, you can customize your entities. We make "floorName" a list of the available floors.

model->Entities.push_back({ "floorName" , Intent::EntityType::List, Intent::EntityMatchMode::Strict, {"one", "1", "two", "2", "lobby", "ground floor"} });

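Apply the model to the recognizer

Now the model must be applied to the IntentRecognizer. Multiple models can be used at once, so the API takes a collection of models.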

std::vector<std::shared_ptr<LanguageUnderstandingModel>> collection;

collection.push_back(model);

intentRecognizer->ApplyLanguageModels(collection);

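Recognize an intent

From the IntentRecognizer object, you call the RecognizeOnceAsync() method. This method asks the Speech service to recognize speech in a single phrase and to stop recognizing speech once the phrase is identified. For simplicity, we wait for the returned future to complete.

Insert this code after applying the language models: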

std::cout << "Say something ..." << std::endl;

auto result = intentRecognizer->RecognizeOnceAsync().get();

Display the recognition results (or errors)

When the Speech service returns the recognition result, we print the result.

Insert this code below auto result = intentRecognizer->RecognizeOnceAsync().get();:

switch (result->Reason)

{

case ResultReason::RecognizedSpeech:

std::cout << "RECOGNIZED: Text = " << result->Text.c_str() << std::endl;

std::cout << "NO INTENT RECOGNIZED!" << std::endl;

break;

case ResultReason::RecognizedIntent:

{

// The braces scope `entities` to this case so that later case labels don't jump past its initialization.

std::cout << "RECOGNIZED: Text = " << result->Text.c_str() << std::endl;

std::cout << " Intent Id = " << result->IntentId.c_str() << std::endl;

auto entities = result->GetEntities();

if (entities.find("floorName") != entities.end())

{

std::cout << " Floor name: = " << entities["floorName"].c_str() << std::endl;

}

if (entities.find("action") != entities.end())

{

std::cout << " Action: = " << entities["action"].c_str() << std::endl;

}

break;

}

case ResultReason::NoMatch:

{

auto noMatch = NoMatchDetails::FromResult(result);

switch (noMatch->Reason)

{

case NoMatchReason::NotRecognized:

std::cout << "NOMATCH: Speech was detected, but not recognized." << std::endl;

break;

case NoMatchReason::InitialSilenceTimeout:

std::cout << "NOMATCH: The start of the audio stream contains only silence, and the service timed out waiting for speech." << std::endl;

break;

case NoMatchReason::InitialBabbleTimeout:

std::cout << "NOMATCH: The start of the audio stream contains only noise, and the service timed out waiting for speech." << std::endl;

break;

case NoMatchReason::KeywordNotRecognized:

std::cout << "NOMATCH: Keyword not recognized" << std::endl;

break;

}

break;

}

case ResultReason::Canceled:

{

auto cancellation = CancellationDetails::FromResult(result);

if (!cancellation->ErrorDetails.empty())

{

std::cout << "CANCELED: ErrorDetails=" << cancellation->ErrorDetails.c_str() << std::endl;

std::cout << "CANCELED: Did you set the speech resource key and region values?" << std::endl;

}

break;

}

default:

break;

}

Check your code

At this point, your code should look like this:

#include <iostream>

#include <speechapi_cxx.h>

using namespace Microsoft::CognitiveServices::Speech;

using namespace Microsoft::CognitiveServices::Speech::Intent;

int main()

{

auto config = SpeechConfig::FromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");

auto intentRecognizer = IntentRecognizer::FromConfig(config);

auto model = PatternMatchingModel::FromId("myNewModel");

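// push_back takes a single argument, so each intent is one braced initializer.

// This assumes PatternMatchingIntent is an aggregate of { phrases, id }, in that order.

model->Intents.push_back({{"Take me to floor {floorName}.", "Go to floor {floorName}."}, "ChangeFloors"});

model->Intents.push_back({{"{action} the door."}, "OpenCloseDoor"});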

model->Entities.push_back({ "floorName" , Intent::EntityType::List, Intent::EntityMatchMode::Strict, {"one", "1", "two", "2", "lobby", "ground floor"} });

std::vector<std::shared_ptr<LanguageUnderstandingModel>> collection;

collection.push_back(model);

intentRecognizer->ApplyLanguageModels(collection);

std::cout << "Say something ..." << std::endl;

auto result = intentRecognizer->RecognizeOnceAsync().get();

switch (result->Reason)

{

case ResultReason::RecognizedSpeech:

std::cout << "RECOGNIZED: Text = " << result->Text.c_str() << std::endl;

std::cout << "NO INTENT RECOGNIZED!" << std::endl;

break;

case ResultReason::RecognizedIntent:

{

// The braces scope `entities` to this case so that later case labels don't jump past its initialization.

std::cout << "RECOGNIZED: Text = " << result->Text.c_str() << std::endl;

std::cout << " Intent Id = " << result->IntentId.c_str() << std::endl;

auto entities = result->GetEntities();

if (entities.find("floorName") != entities.end())

{

std::cout << " Floor name: = " << entities["floorName"].c_str() << std::endl;

}

if (entities.find("action") != entities.end())

{

std::cout << " Action: = " << entities["action"].c_str() << std::endl;

}

break;

}

case ResultReason::NoMatch:

{

auto noMatch = NoMatchDetails::FromResult(result);

switch (noMatch->Reason)

{

case NoMatchReason::NotRecognized:

std::cout << "NOMATCH: Speech was detected, but not recognized." << std::endl;

break;

case NoMatchReason::InitialSilenceTimeout:

std::cout << "NOMATCH: The start of the audio stream contains only silence, and the service timed out waiting for speech." << std::endl;

break;

case NoMatchReason::InitialBabbleTimeout:

std::cout << "NOMATCH: The start of the audio stream contains only noise, and the service timed out waiting for speech." << std::endl;

break;

case NoMatchReason::KeywordNotRecognized:

std::cout << "NOMATCH: Keyword not recognized." << std::endl;

break;

}

break;

}

case ResultReason::Canceled:

{

auto cancellation = CancellationDetails::FromResult(result);

if (!cancellation->ErrorDetails.empty())

{

std::cout << "CANCELED: ErrorDetails=" << cancellation->ErrorDetails.c_str() << std::endl;

std::cout << "CANCELED: Did you set the speech resource key and region values?" << std::endl;

}

break;

}

default:

break;

}

}

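Build and run your app

Now you're ready to build your app and test our speech recognition using the Speech service.

- Compile the code - From the Visual Studio menu bar, choose Build > Build Solution.

- Start your app - From the menu bar, choose Debug > Start Debugging, or press F5.

- Start recognition - The app prompts you to say something. The default language is English. Your speech is sent to the Speech service, transcribed as text, and rendered in the console.

For example, if you say "Take me to floor 2", this should be the output: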

Say something ...

RECOGNIZED: Text = Take me to floor 2.

Intent Id = ChangeFloors

Floor name: = 2

As another example, if you say "Take me to floor 7", this should be the output:

Say something ...

RECOGNIZED: Text = Take me to floor 7.

NO INTENT RECOGNIZED!

The intent ID is empty because 7 isn't in our list.

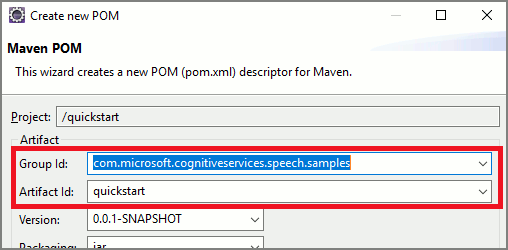

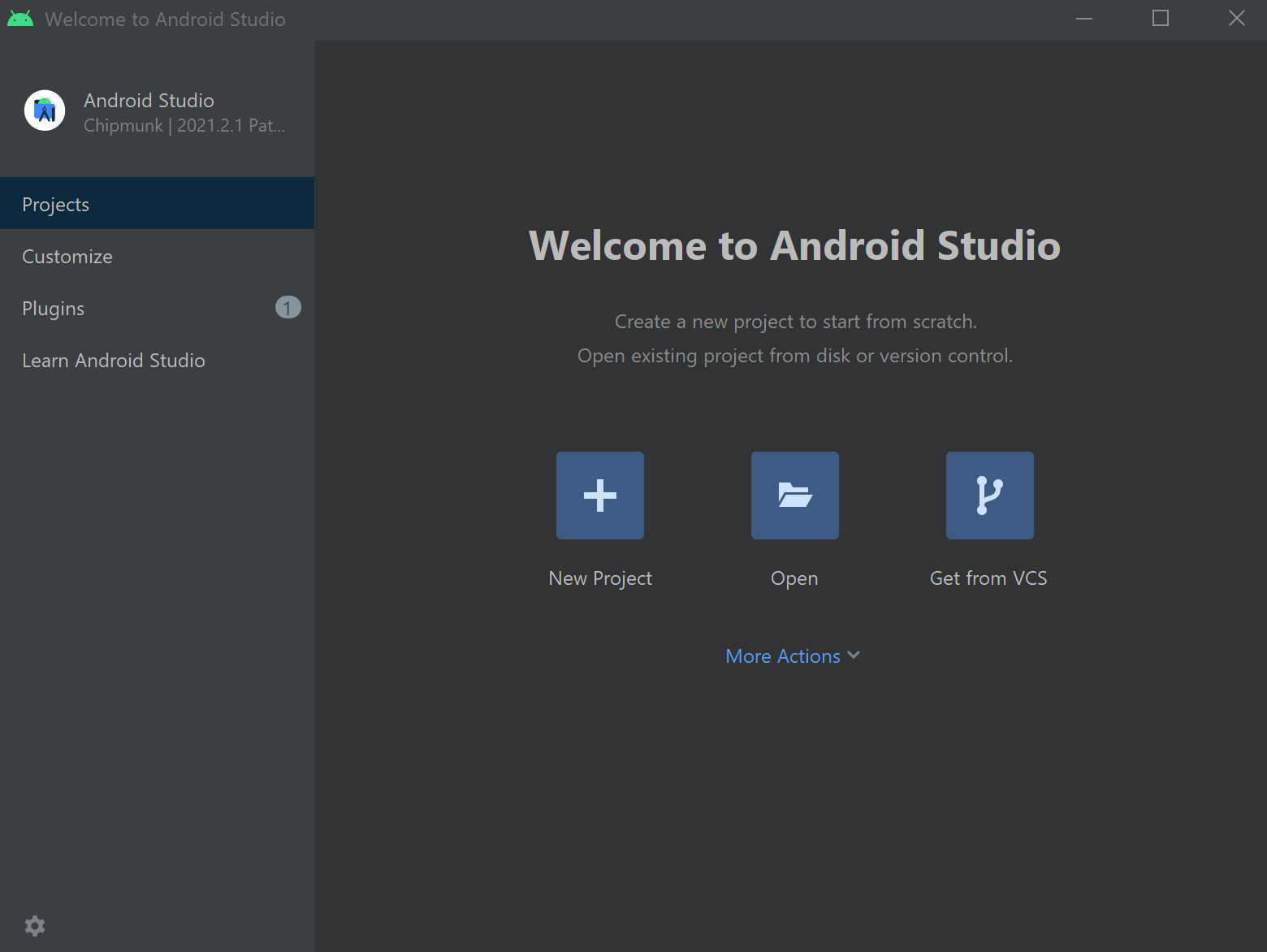

In this quickstart, you install the Speech SDK for Java.

Platform requirements


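The Speech SDK for Java is compatible with Windows, Linux, and macOS.

On Windows, you must use the 64-bit target architecture. Windows 10 or later is required.

Install the Microsoft Visual C++ Redistributable for Visual Studio 2015, 2017, 2019, and 2022 for your platform. Installing this package for the first time might require a restart.

The Speech SDK for Java doesn't support Windows on ARM64.

Install a Java Development Kit such as Azul Zulu OpenJDK. The Microsoft Build of OpenJDK or your preferred JDK should also work.

Install the Speech SDK for Java

Some of the instructions use a specific SDK version such as 1.24.2. To check the latest version, search our GitHub repository.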


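This guide shows how to install the Speech SDK for Java on the Java Runtime.

Supported operating systems

The Speech SDK for Java package is available for these operating systems:

- Windows: 64-bit only.

- Mac: macOS X version 10.14 or later.

- Linux: See the supported Linux distributions and target architectures.

Follow these steps to install the Speech SDK for Java using Apache Maven:

Install Apache Maven.

Open a command prompt where you want the new project, and create a new pom.xml file.

Copy the following XML content into pom.xml: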

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.microsoft.cognitiveservices.speech.samples</groupId>
    <artifactId>quickstart-eclipse</artifactId>
    <version>1.0.0-SNAPSHOT</version>
    <build>
        <sourceDirectory>src</sourceDirectory>
        <plugins>
            <plugin>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.7.0</version>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
    <dependencies>
        <dependency>
            <groupId>com.microsoft.cognitiveservices.speech</groupId>
            <artifactId>client-sdk</artifactId>
            <version>1.37.0</version>
        </dependency>
    </dependencies>
</project>

Run the following Maven command to install the Speech SDK and dependencies.

mvn clean dependency:copy-dependencies

Start with some boilerplate code

Open Main.java from the src directory and replace the contents of the file with the following:

import java.util.ArrayList;

import java.util.Dictionary;

import java.util.concurrent.ExecutionException;

import com.microsoft.cognitiveservices.speech.*;

import com.microsoft.cognitiveservices.speech.intent.*;

public class Main {

public static void main(String[] args) throws InterruptedException, ExecutionException {

IntentPatternMatchingWithMicrophone();

}

public static void IntentPatternMatchingWithMicrophone() throws InterruptedException, ExecutionException {

SpeechConfig config = SpeechConfig.fromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");

}

}

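Create a speech configuration

Before you can initialize an IntentRecognizer object, you need to create a configuration that uses the key and Azure region of your Azure AI services prediction resource.

- Replace "YOUR_SUBSCRIPTION_KEY" with your Azure AI services prediction key.

- Replace "YOUR_SUBSCRIPTION_REGION" with your Azure AI services resource region.

This sample uses the fromSubscription() method to build the SpeechConfig. For a full list of available methods, see the SpeechConfig class.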
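
Hardcoding a key in source code makes it easy to leak. One common alternative, sketched here and not part of the original walkthrough, is to read both values from environment variables and pass them to fromSubscription() afterwards. The variable names SPEECH_KEY and SPEECH_REGION and the SpeechSettings helper are assumptions chosen for illustration.

```java
import java.util.Map;

// Hypothetical helper (not part of the Speech SDK) that validates required settings.
final class SpeechSettings {
    // Returns the trimmed value for `name`, or throws if it is missing or blank.
    static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing required setting: " + name);
        }
        return value.trim();
    }

    public static void main(String[] args) {
        Map<String, String> env = System.getenv();
        try {
            String key = require(env, "SPEECH_KEY");       // assumed variable name
            String region = require(env, "SPEECH_REGION"); // assumed variable name
            // These two strings would then replace the placeholders in fromSubscription(...).
            System.out.println("Region: " + region + ", key length: " + key.length());
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Failing early with a clear message is usually easier to diagnose than a canceled recognition result later on.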

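Initialize an IntentRecognizer

Now create an IntentRecognizer. Insert this code right below your speech configuration. We do this in a try-with-resources block to take advantage of the AutoCloseable interface.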

try (IntentRecognizer recognizer = new IntentRecognizer(config)) {

}

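Add some intents

You need to associate some patterns with a PatternMatchingModel and apply the model to the IntentRecognizer.

We start by creating a PatternMatchingModel and adding a few intents to it.

Note

We can add multiple patterns to a PatternMatchingIntent.

Insert this code inside the try block: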

// Creates a Pattern Matching model and adds specific intents from your model. The

// Id is used to identify this model from others in the collection.

PatternMatchingModel model = new PatternMatchingModel("YourPatternMatchingModelId");

// Creates a pattern that uses groups of optional words. "[Go | Take me]" will match either "Go", "Take me", or "".

String patternWithOptionalWords = "[Go | Take me] to [floor|level] {floorName}";

// Creates a pattern that uses an optional entity and group that could be used to tie commands together.

String patternWithOptionalEntity = "Go to parking [{parkingLevel}]";

// You can also have multiple entities of the same name in a single pattern by appending a unique identifier

// to distinguish between the instances. For example:

String patternWithTwoOfTheSameEntity = "Go to floor {floorName:1} [and then go to floor {floorName:2}]";

// NOTE: Both floorName:1 and floorName:2 are tied to the same list of entries. The identifier can be a string

// and is separated from the entity name by a ':'

// Creates the pattern matching intents and adds them to the model

model.getIntents().put(new PatternMatchingIntent("ChangeFloors", patternWithOptionalWords, patternWithOptionalEntity, patternWithTwoOfTheSameEntity));

model.getIntents().put(new PatternMatchingIntent("DoorControl", "{action} the doors", "{action} doors", "{action} the door", "{action} door"));
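
To build intuition for the pattern syntax used above, the toy matcher below translates a pattern into a regular expression: square brackets become an optional group, | separates alternatives, and each {entity} becomes a capture. This is only an illustrative sketch, not how the Speech SDK implements matching. The ToyPattern class is hypothetical, it assumes well-formed patterns whose literal words consist of letters and digits, and it doesn't restrict entity values.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical toy matcher; it mimics the pattern syntax only and is not the SDK's algorithm.
final class ToyPattern {
    private final List<String> entityNames = new ArrayList<>();
    private final Pattern regex;

    ToyPattern(String pattern) {
        StringBuilder sb = new StringBuilder("^");
        int i = 0;
        while (i < pattern.length()) {
            char c = pattern.charAt(i);
            if (c == '[') {                              // start of an optional group
                sb.append("(?:");
                i++;
            } else if (c == ']') {                       // end of an optional group
                sb.append(")?");
                i++;
            } else if (c == '|') {                       // alternative inside a group
                sb.append('|');
                i++;
            } else if (c == '{') {                       // entity placeholder such as {floorName:1}
                int end = pattern.indexOf('}', i);       // assumes the closing brace exists
                entityNames.add(pattern.substring(i + 1, end));
                sb.append("\\s*(.+?)");
                i = end + 1;
            } else if (Character.isLetterOrDigit(c)) {   // literal word
                int start = i;
                while (i < pattern.length() && Character.isLetterOrDigit(pattern.charAt(i))) {
                    i++;
                }
                sb.append("\\s*\\b").append(Pattern.quote(pattern.substring(start, i))).append("\\b");
            } else {                                     // whitespace and punctuation are skipped
                i++;
            }
        }
        sb.append("\\s*[.?!]?$");                        // tolerate one trailing punctuation mark
        regex = Pattern.compile(sb.toString(), Pattern.CASE_INSENSITIVE);
    }

    // Returns the captured entities, or null when the utterance doesn't match.
    Map<String, String> match(String utterance) {
        Matcher m = regex.matcher(utterance.trim());
        if (!m.find()) {
            return null;
        }
        Map<String, String> entities = new LinkedHashMap<>();
        for (int g = 0; g < entityNames.size(); g++) {
            String value = m.group(g + 1);
            if (value != null) {
                entities.put(entityNames.get(g), value);
            }
        }
        return entities;
    }

    public static void main(String[] args) {
        ToyPattern p = new ToyPattern("[Go | Take me] to [floor|level] {floorName}");
        System.out.println(p.match("Take me to floor 2.")); // {floorName=2}
        System.out.println(p.match("Open the door"));       // null
    }
}
```

Because every bracketed group is optional, the same pattern also accepts shorter utterances such as "to level 2".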

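Add some custom entities

To take full advantage of the pattern matcher, you can customize your entities. We make "floorName" a list of the available floors. We also make "parkingLevel" an integer entity.

Insert this code below your intents: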

// Creates the "floorName" entity and set it to type list.

// Adds acceptable values. NOTE the default entity type is Any and so we do not need

// to declare the "action" entity.

model.getEntities().put(PatternMatchingEntity.CreateListEntity("floorName", PatternMatchingEntity.EntityMatchMode.Strict, "ground floor", "lobby", "1st", "first", "one", "1", "2nd", "second", "two", "2"));

// Creates the "parkingLevel" entity as a pre-built integer

model.getEntities().put(PatternMatchingEntity.CreateIntegerEntity("parkingLevel"));
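
The effect of a strict list entity can be pictured as a membership check: a captured value counts only if it appears in the list. The sketch below illustrates that idea with a hypothetical StrictListEntity class. It is not the SDK's implementation, and the case-insensitive comparison is an assumption made for this example.

```java
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Hypothetical stand-in for a list entity in strict mode (not an SDK type).
final class StrictListEntity {
    private final Set<String> allowed = new HashSet<>();

    StrictListEntity(String... phrases) {
        for (String phrase : phrases) {
            allowed.add(normalize(phrase));
        }
    }

    // Assumption: values are compared after trimming and lowercasing.
    private static String normalize(String text) {
        return text.trim().toLowerCase(Locale.ROOT);
    }

    // A candidate is accepted only when it is one of the listed phrases.
    boolean accepts(String candidate) {
        return allowed.contains(normalize(candidate));
    }

    public static void main(String[] args) {
        StrictListEntity floorName = new StrictListEntity(
            "ground floor", "lobby", "1st", "first", "one", "1", "2nd", "second", "two", "2");
        System.out.println(floorName.accepts("2")); // true
        System.out.println(floorName.accepts("7")); // false
    }
}
```

Under this reading, an utterance that fits a pattern but carries an unlisted value, such as floor 7, doesn't produce the intent.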

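Apply the model to the recognizer

Now the model must be applied to the IntentRecognizer. Multiple models can be used at once, so the API takes a collection of models.

Insert this code below your entities: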

ArrayList<LanguageUnderstandingModel> modelCollection = new ArrayList<LanguageUnderstandingModel>();

modelCollection.add(model);

recognizer.applyLanguageModels(modelCollection);

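Recognize an intent

From the IntentRecognizer object, you call the recognizeOnceAsync() method. This method asks the Speech service to recognize speech in a single phrase and to stop recognizing speech once the phrase is identified.

Insert this code after applying the language models: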

System.out.println("Say something...");

IntentRecognitionResult result = recognizer.recognizeOnceAsync().get();
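
Calling get() on the returned future blocks the current thread until the result is available, which is why the surrounding methods declare InterruptedException and ExecutionException. The sketch below shows the same blocking pattern with a plain CompletableFuture. The fakeRecognizeOnceAsync method is a hypothetical stand-in and doesn't call the Speech service.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

final class BlockingGetDemo {
    // Hypothetical stand-in for an asynchronous recognition call; not an SDK method.
    static Future<String> fakeRecognizeOnceAsync(String text) {
        return CompletableFuture.supplyAsync(() -> "RECOGNIZED: " + text);
    }

    public static void main(String[] args) throws InterruptedException, ExecutionException {
        // get() waits for the background task to finish, then returns its value.
        String result = fakeRecognizeOnceAsync("Take me to floor 2.").get();
        System.out.println(result); // RECOGNIZED: Take me to floor 2.
    }
}
```

Blocking keeps a console sample simple. An application with a user interface would typically avoid waiting on its main thread.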

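Display the recognition results (or errors)

When the Speech service returns the recognition result, we print the result.

Insert this code below IntentRecognitionResult result = recognizer.recognizeOnceAsync().get();: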

if (result.getReason() == ResultReason.RecognizedSpeech) {

System.out.println("RECOGNIZED: Text= " + result.getText());

System.out.println(String.format("%17s", "Intent not recognized."));

}

else if (result.getReason() == ResultReason.RecognizedIntent)

{

System.out.println("RECOGNIZED: Text= " + result.getText());

System.out.println(String.format("%17s %s", "Intent Id=", result.getIntentId() + "."));

Dictionary<String, String> entities = result.getEntities();

switch (result.getIntentId())

{

case "ChangeFloors":

if (entities.get("floorName") != null) {

System.out.println(String.format("%17s %s", "FloorName=", entities.get("floorName")));

}

if (entities.get("floorName:1") != null) {

System.out.println(String.format("%17s %s", "FloorName:1=", entities.get("floorName:1")));

}

if (entities.get("floorName:2") != null) {

System.out.println(String.format("%17s %s", "FloorName:2=", entities.get("floorName:2")));

}

if (entities.get("parkingLevel") != null) {

System.out.println(String.format("%17s %s", "ParkingLevel=", entities.get("parkingLevel")));

}

break;

case "DoorControl":

if (entities.get("action") != null) {

System.out.println(String.format("%17s %s", "Action=", entities.get("action")));

}

break;

}

}

else if (result.getReason() == ResultReason.NoMatch) {

System.out.println("NOMATCH: Speech could not be recognized.");

}

else if (result.getReason() == ResultReason.Canceled) {

CancellationDetails cancellation = CancellationDetails.fromResult(result);

System.out.println("CANCELED: Reason=" + cancellation.getReason());

if (cancellation.getReason() == CancellationReason.Error)

{

System.out.println("CANCELED: ErrorCode=" + cancellation.getErrorCode());

System.out.println("CANCELED: ErrorDetails=" + cancellation.getErrorDetails());

System.out.println("CANCELED: Did you update the subscription info?");

}

}
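
The result handling above looks up "floorName:1" and "floorName:2" one key at a time. When a pattern repeats an entity many times, it can be convenient to split each key at the ':' and group the values by entity name. The sketch below shows one way to do that. The EntityKeys helper is hypothetical, and it takes a Map for simplicity where the sample works with a Dictionary.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for entity keys of the form "name" or "name:identifier".
final class EntityKeys {
    // Splits "floorName:2" into {"floorName", "2"}; a key without ':' gets an empty identifier.
    static String[] split(String key) {
        int colon = key.indexOf(':');
        if (colon < 0) {
            return new String[] { key, "" };
        }
        return new String[] { key.substring(0, colon), key.substring(colon + 1) };
    }

    // Groups entity values by base name, keeping the order in which they were added.
    static Map<String, List<String>> groupByName(Map<String, String> entities) {
        Map<String, List<String>> grouped = new LinkedHashMap<>();
        for (Map.Entry<String, String> entry : entities.entrySet()) {
            String name = split(entry.getKey())[0];
            grouped.computeIfAbsent(name, k -> new ArrayList<>()).add(entry.getValue());
        }
        return grouped;
    }

    public static void main(String[] args) {
        Map<String, String> entities = new LinkedHashMap<>();
        entities.put("floorName:1", "one");
        entities.put("floorName:2", "two");
        System.out.println(groupByName(entities)); // {floorName=[one, two]}
    }
}
```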

Check your code

At this point, your code should look like this:

package quickstart;

import java.util.ArrayList;

import java.util.concurrent.ExecutionException;

import java.util.Dictionary;

import com.microsoft.cognitiveservices.speech.*;

import com.microsoft.cognitiveservices.speech.intent.*;

public class Main {

public static void main(String[] args) throws InterruptedException, ExecutionException {

IntentPatternMatchingWithMicrophone();

}

public static void IntentPatternMatchingWithMicrophone() throws InterruptedException, ExecutionException {

SpeechConfig config = SpeechConfig.fromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");

try (IntentRecognizer recognizer = new IntentRecognizer(config)) {

// Creates a Pattern Matching model and adds specific intents from your model. The

// Id is used to identify this model from others in the collection.

PatternMatchingModel model = new PatternMatchingModel("YourPatternMatchingModelId");

// Creates a pattern that uses groups of optional words. "[Go | Take me]" will match either "Go", "Take me", or "".

String patternWithOptionalWords = "[Go | Take me] to [floor|level] {floorName}";

// Creates a pattern that uses an optional entity and group that could be used to tie commands together.

String patternWithOptionalEntity = "Go to parking [{parkingLevel}]";

// You can also have multiple entities of the same name in a single pattern by appending a unique identifier

// to distinguish between the instances. For example:

String patternWithTwoOfTheSameEntity = "Go to floor {floorName:1} [and then go to floor {floorName:2}]";

// NOTE: Both floorName:1 and floorName:2 are tied to the same list of entries. The identifier can be a string

// and is separated from the entity name by a ':'

// Creates the pattern matching intents and adds them to the model

model.getIntents().put(new PatternMatchingIntent("ChangeFloors", patternWithOptionalWords, patternWithOptionalEntity, patternWithTwoOfTheSameEntity));

model.getIntents().put(new PatternMatchingIntent("DoorControl", "{action} the doors", "{action} doors", "{action} the door", "{action} door"));

// Creates the "floorName" entity and set it to type list.

// Adds acceptable values. NOTE the default entity type is Any and so we do not need

// to declare the "action" entity.

model.getEntities().put(PatternMatchingEntity.CreateListEntity("floorName", PatternMatchingEntity.EntityMatchMode.Strict, "ground floor", "lobby", "1st", "first", "one", "1", "2nd", "second", "two", "2"));

// Creates the "parkingLevel" entity as a pre-built integer

model.getEntities().put(PatternMatchingEntity.CreateIntegerEntity("parkingLevel"));

ArrayList<LanguageUnderstandingModel> modelCollection = new ArrayList<LanguageUnderstandingModel>();

modelCollection.add(model);

recognizer.applyLanguageModels(modelCollection);

System.out.println("Say something...");

IntentRecognitionResult result = recognizer.recognizeOnceAsync().get();

if (result.getReason() == ResultReason.RecognizedSpeech) {

System.out.println("RECOGNIZED: Text= " + result.getText());

System.out.println(String.format("%17s", "Intent not recognized."));

}

else if (result.getReason() == ResultReason.RecognizedIntent)

{

System.out.println("RECOGNIZED: Text= " + result.getText());

System.out.println(String.format("%17s %s", "Intent Id=", result.getIntentId() + "."));

Dictionary<String, String> entities = result.getEntities();

switch (result.getIntentId())

{

case "ChangeFloors":

if (entities.get("floorName") != null) {

System.out.println(String.format("%17s %s", "FloorName=", entities.get("floorName")));

}

if (entities.get("floorName:1") != null) {

System.out.println(String.format("%17s %s", "FloorName:1=", entities.get("floorName:1")));

}

if (entities.get("floorName:2") != null) {

System.out.println(String.format("%17s %s", "FloorName:2=", entities.get("floorName:2")));

}

if (entities.get("parkingLevel") != null) {

System.out.println(String.format("%17s %s", "ParkingLevel=", entities.get("parkingLevel")));

}

break;

case "DoorControl":

if (entities.get("action") != null) {

System.out.println(String.format("%17s %s", "Action=", entities.get("action")));

}

break;

}

}

else if (result.getReason() == ResultReason.NoMatch) {

System.out.println("NOMATCH: Speech could not be recognized.");

}

else if (result.getReason() == ResultReason.Canceled) {

CancellationDetails cancellation = CancellationDetails.fromResult(result);

System.out.println("CANCELED: Reason=" + cancellation.getReason());

if (cancellation.getReason() == CancellationReason.Error)

{

System.out.println("CANCELED: ErrorCode=" + cancellation.getErrorCode());

System.out.println("CANCELED: ErrorDetails=" + cancellation.getErrorDetails());

System.out.println("CANCELED: Did you update the subscription info?");

}

}

}

}

}

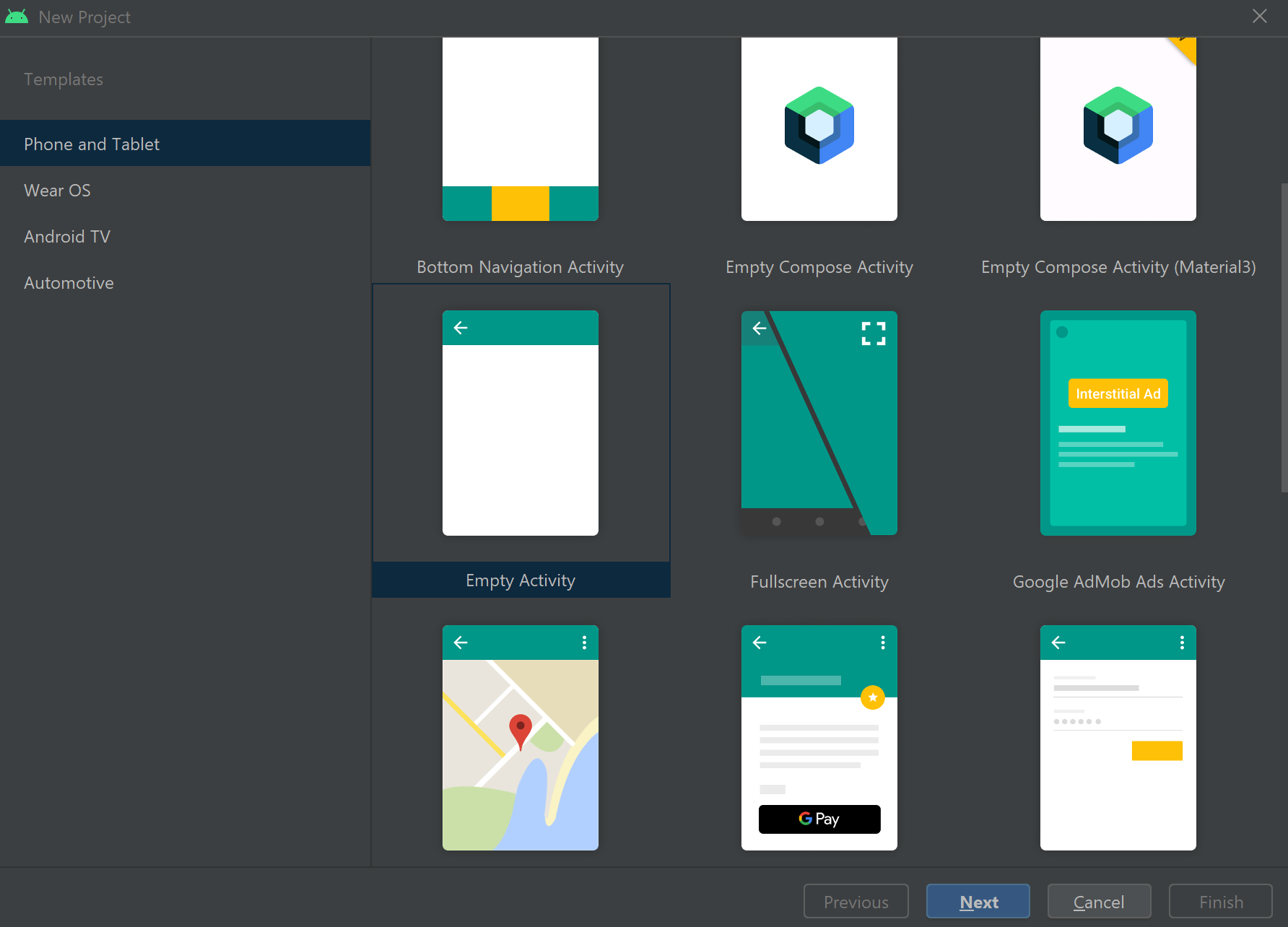

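Build and run your app

Now you're ready to build your app and test our intent recognition using the Speech service and the embedded pattern matcher.

Select the Run button in Eclipse or press Ctrl+F11, then watch the output for the "Say something..." prompt. Once it appears, speak your utterance and watch the output.

For example, if you say "Take me to floor 2", this should be the output: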

Say something...

RECOGNIZED: Text=Take me to floor 2.

Intent Id=ChangeFloors.

FloorName=2

As another example, if you say "Take me to floor 7", this should be the output:

Say something...

RECOGNIZED: Text=Take me to floor 7.

Intent not recognized.

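No intent was recognized because 7 isn't in the list of valid values for floorName.

![Screenshot of the New Project dialog, with Java Project highlighted.](media/sdk/qs-java-jre-02-select-wizard.png)

![Screenshot of the New Java Project wizard, with selections for creating a Java project.](media/sdk/qs-java-jre-03-create-java-project.png)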