Hello, @Abhay Chandramouli!

There are a lot of questions here, and I think the code samples will answer most of them. It looks like you'll ultimately want to use Python, so I'll start with that, but you can use many other approaches, including .NET and the CLI. Look through the code samples and sample repositories below and let me know if there's anything else you need.

How do I use Azure Batch with Python?

Azure Batch has several code-heavy use cases, so even some of the quickstarts point you to GitHub repositories. Here are some code samples for running Azure Batch with Python:

- Microsoft Learn: Quickstart: Use Python API to run an Azure Batch job

- GitHub: Azure Batch Python Quickstart

- Microsoft Learn: Tutorial: Run a parallel workload with Azure Batch using the Python API

- GitHub: Batch Python File Processing with ffmpeg

- GitHub: Getting started - Managing Batch using Azure Python SDK

- GitHub: Azure Batch samples

How do I use a start task to install Python?

This is a common question, and there is a great Microsoft blog post that answers it:

https://techcommunity.microsoft.com/t5/azure-paas-blog/install-python-on-a-windows-node-using-a-start-task-with-azure/ba-p/2341854

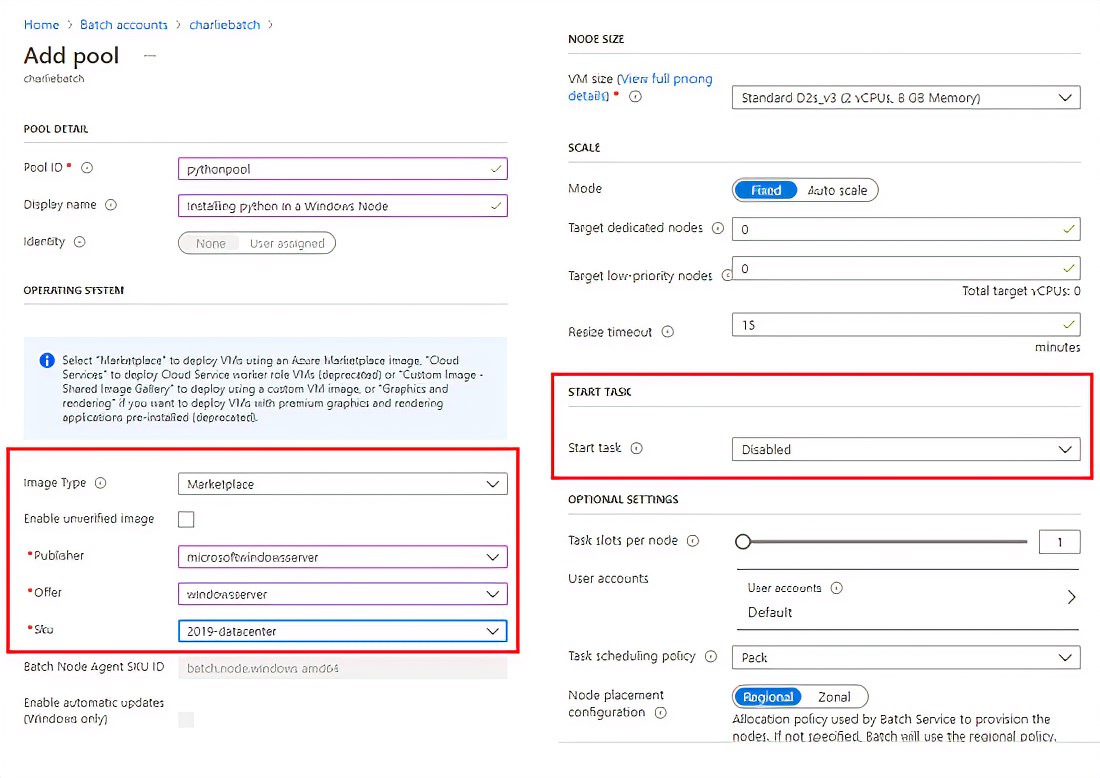

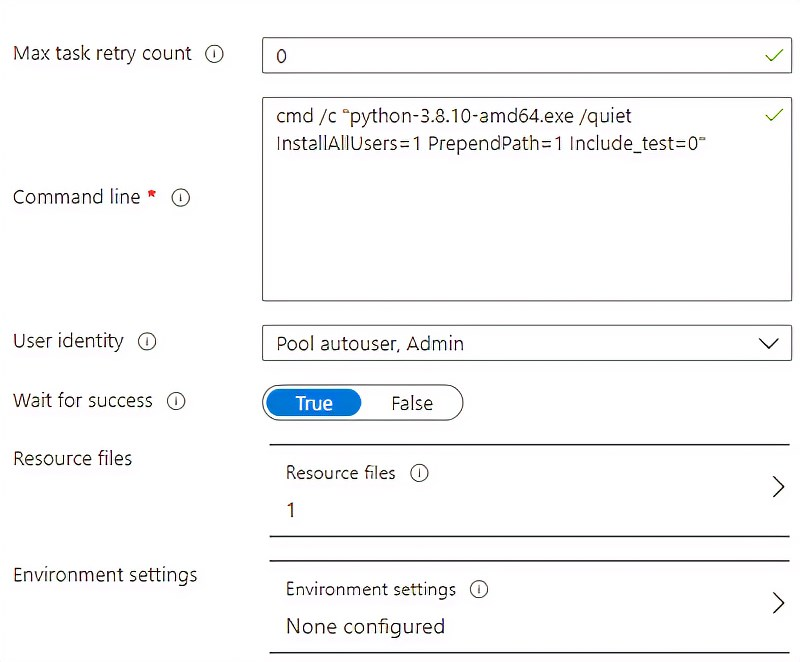

In the post, Carlos walks you through four steps: getting the Python release that you want to install, adding the installer to a public storage container, creating a Windows pool, and defining the required start task and resource files.
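
If you would rather define that start task from Python than in the portal, here is a minimal sketch of how it might look with the `azure-batch` SDK. The function names, the installer file name, and the storage URL are placeholders I made up for illustration. The silent-install switches are the standard options of the Windows Python installer, and I am assuming the start task should run elevated because `InstallAllUsers=1` needs admin rights.

```python
def install_command(installer_name: str) -> str:
    """Build a Windows command line that runs the Python installer silently.

    installer_name is a hypothetical file name, e.g. the .exe you uploaded
    to your storage container.
    """
    return (
        f'cmd /c "{installer_name} /quiet '
        'InstallAllUsers=1 PrependPath=1 Include_test=0"'
    )


def build_start_task(installer_url: str, installer_name: str):
    """Return a StartTask that downloads the installer and runs it as admin."""
    # Imported here so install_command() can be used without the SDK installed.
    import azure.batch.models as batchmodels

    return batchmodels.StartTask(
        command_line=install_command(installer_name),
        # The resource file is downloaded to the node before the command runs.
        resource_files=[
            batchmodels.ResourceFile(
                http_url=installer_url,   # assumed: a publicly readable blob URL
                file_path=installer_name,
            )
        ],
        # Assumption: an all-users install needs an elevated auto-user.
        user_identity=batchmodels.UserIdentity(
            auto_user=batchmodels.AutoUserSpecification(
                scope=batchmodels.AutoUserScope.pool,
                elevation_level=batchmodels.ElevationLevel.admin,
            )
        ),
        # Keep tasks off the node until the install has finished.
        wait_for_success=True,
    )
```

You would then pass the result as `start_task=` when you build your `batchmodels.PoolAddParameter` for the Windows pool.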

How do I provide a start task? How do I run multiple commands?

In the Python quickstart GitHub sample, starting on line 213 of python_quickstart_client.py, you can see a simple loop that creates a task for each input file:

    def add_tasks(batch_service_client: BatchServiceClient, job_id: str, resource_input_files: list):
        """
        Adds a task for each input file in the collection to the specified job.

        :param batch_service_client: A Batch service client.
        :param str job_id: The ID of the job to which to add the tasks.
        :param list resource_input_files: A collection of input files. One task will be
         created for each input file.
        """

        print(f'Adding {resource_input_files} tasks to job [{job_id}]...')

        tasks = []

        for idx, input_file in enumerate(resource_input_files):

            command = f"/bin/bash -c \"cat {input_file.file_path}\""
            tasks.append(batchmodels.TaskAddParameter(
                id=f'Task{idx}',
                command_line=command,
                resource_files=[input_file]
            )
            )

        batch_service_client.task.add_collection(job_id, tasks)

The Batch Python File Processing with ffmpeg example does this more explicitly, starting on line 223 of batch_python_tutorial_ffmpeg.py:

    def add_tasks(batch_service_client, job_id, input_files, output_container_sas_url):
        """
        Adds a task for each input file in the collection to the specified job.

        :param batch_service_client: A Batch service client.
        :type batch_service_client: `azure.batch.BatchServiceClient`
        :param str job_id: The ID of the job to which to add the tasks.
        :param list input_files: A collection of input files. One task will be
         created for each input file.
        :param output_container_sas_token: A SAS token granting write access to
        the specified Azure Blob storage container.
        """

        print('Adding {} tasks to job [{}]...'.format(len(input_files), job_id))

        tasks = list()

        for idx, input_file in enumerate(input_files):
            input_file_path = input_file.file_path
            output_file_path = "".join((input_file_path).split('.')[:-1]) + '.mp3'
            command = "/bin/bash -c \"ffmpeg -i {} {} \"".format(
                input_file_path, output_file_path)
            tasks.append(batch.models.TaskAddParameter(
                id='Task{}'.format(idx),
                command_line=command,
                resource_files=[input_file],
                output_files=[batchmodels.OutputFile(
                    file_pattern=output_file_path,
                    destination=batchmodels.OutputFileDestination(
                        container=batchmodels.OutputFileBlobContainerDestination(
                            container_url=output_container_sas_url)),
                    upload_options=batchmodels.OutputFileUploadOptions(
                        upload_condition=batchmodels.OutputFileUploadCondition.task_success))]
            )
            )

        batch_service_client.task.add_collection(job_id, tasks)
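
On running multiple commands: a Batch command line does not run under a shell by default, which is why both samples wrap their command in `/bin/bash -c`. One way to run several commands in a single task or start task is to chain them inside that one shell invocation. Here is a small sketch of that idea. `chain_commands` is a hypothetical helper of my own and is not part of the SDK or either sample, and the `&&` chaining means a later command only runs if the earlier one succeeded.

```python
import shlex


def chain_commands(commands, windows=False):
    """Wrap several commands in one shell invocation for a Batch command line.

    Hypothetical helper: joins the commands with '&&' so each one runs only
    if the previous command succeeded.
    """
    if not commands:
        raise ValueError("at least one command is required")

    joined = " && ".join(commands)
    if windows:
        # Windows nodes: hand the whole chain to cmd.exe.
        return f'cmd /c "{joined}"'
    # Linux nodes: quote the chain so bash receives it as a single argument.
    return f"/bin/bash -c {shlex.quote(joined)}"


# Example: two commands for a Linux node, in one command line.
command = chain_commands(["apt-get update", "apt-get install -y ffmpeg"])
print(command)
```

The resulting string can be used wherever the samples pass `command_line=`, for example in `batchmodels.TaskAddParameter` or `batchmodels.StartTask`.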