Hi @Devender,

Thank you for using the Microsoft Q&A platform, and thanks for posting your question here.

As I understand it, you want to know whether updates to the source file are reflected in your lake database. Please let me know if my understanding is incorrect.

Lake databases use a data lake in an Azure Storage account to store the database's data. The data can be stored in Parquet, Delta, or CSV format, and different settings can be used to optimize the storage. Every lake database uses a linked service to define the location of its root data folder.

Because lake database tables only reference the underlying files in ADLS rather than holding a copy of the data, querying a table returns the latest data in those files.

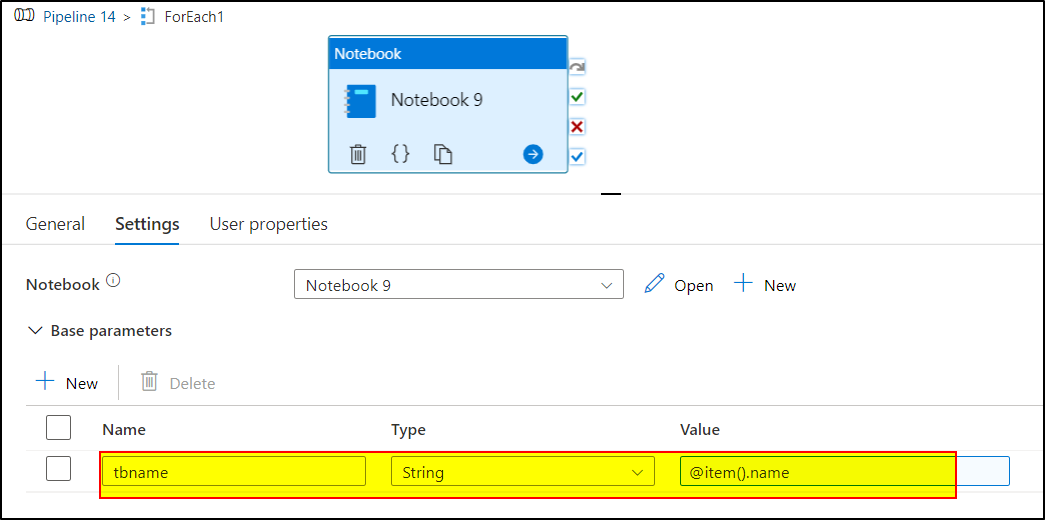

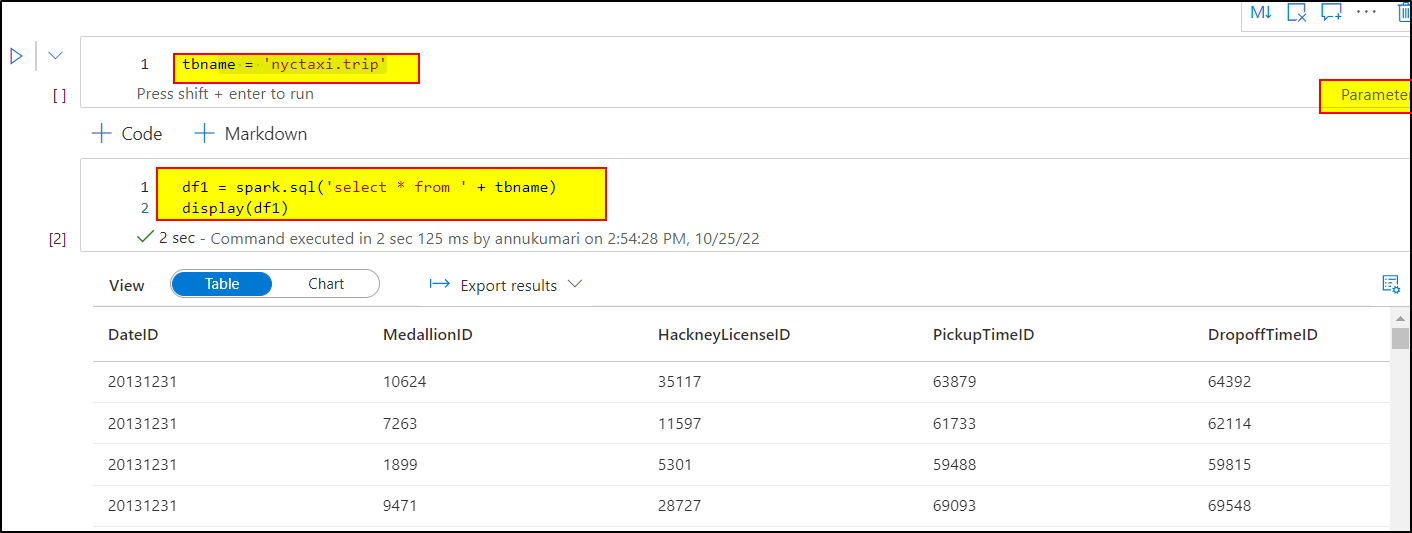
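To illustrate why updates show up, here is a minimal Python analogy. It is not Synapse's actual implementation: the hypothetical `ExternalTable` class keeps only a file path, the way a lake database table keeps only a location, and re-reads the file on every query. Because no copy is stored, a change to the source file is visible on the next query without reloading anything.

```python
import csv
import os
import tempfile


class ExternalTable:
    """Hypothetical stand-in for a lake database table: it stores only a
    location, never a copy of the data."""

    def __init__(self, path: str):
        self.path = path

    def select_all(self) -> list[dict]:
        # Every query re-reads the underlying file, so it always sees
        # the file's current contents.
        with open(self.path, newline="") as f:
            return list(csv.DictReader(f))


def write_csv(path: str, rows: list[dict]) -> None:
    """Overwrite the file at `path` with the given rows (header included)."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "sales.csv")
        write_csv(path, [{"id": "1", "amount": "10"}])
        table = ExternalTable(path)
        print(len(table.select_all()))  # 1

        # Simulate the source file being updated in the data lake.
        write_csv(path, [{"id": "1", "amount": "10"}, {"id": "2", "amount": "25"}])
        print(len(table.select_all()))  # 2
```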

Regarding your next question, about making the notebook dynamic for new tables: you can parameterize the query above and pass the table names to the Synapse notebook dynamically.

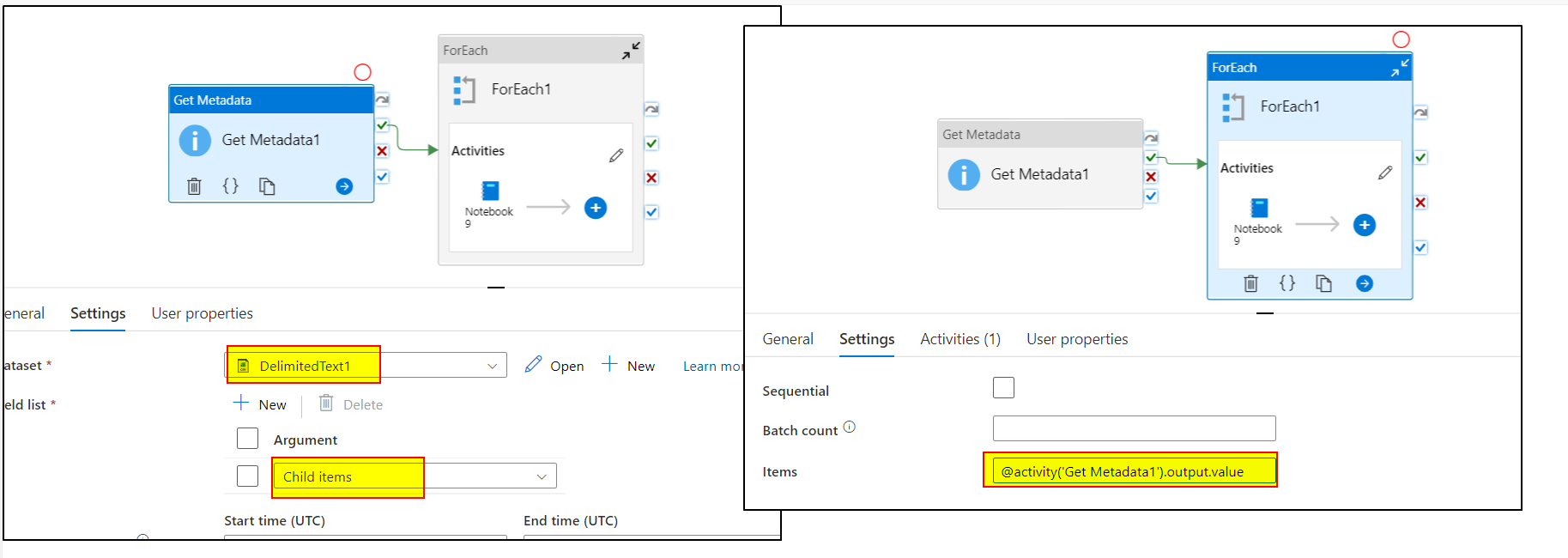
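Here is a minimal sketch of what a parameterized notebook might look like. It assumes one CSV file per table and that the notebook creates a lake database table over each incoming file. The `file_name` assignment is meant to live in the cell marked with "Toggle parameter cell", so a pipeline can override its default value. The names `file_name`, `DATABASE`, `ROOT_PATH`, `table_name_from_file`, and `build_create_table_sql` are all hypothetical. The generated `CREATE TABLE ... USING CSV ... LOCATION` string is Spark SQL that you would pass to `spark.sql(...)` inside the Synapse notebook. Only the string-building part is shown, so the sketch runs outside Spark.

```python
import re

# --- Parameters cell (mark it with "Toggle parameter cell" in Synapse) ---
# Default value; a pipeline Notebook activity can override it at run time.
file_name = "sales_2023.csv"

# Hypothetical values: replace with your lake database name and the
# folder that the lake database's linked service points to.
DATABASE = "my_lake_db"
ROOT_PATH = "abfss://container@account.dfs.core.windows.net/raw"


def table_name_from_file(name: str) -> str:
    """Derive a table name: drop the extension, replace any character that
    is not a letter, digit, or underscore with '_', and lowercase."""
    stem = name.rsplit(".", 1)[0]
    return re.sub(r"[^0-9A-Za-z_]", "_", stem).lower()


def build_create_table_sql(database: str, file: str, root_path: str) -> str:
    """Build a Spark SQL statement that registers a CSV-backed table."""
    table = table_name_from_file(file)
    return (
        f"CREATE TABLE IF NOT EXISTS {database}.{table} "
        "USING CSV OPTIONS (header 'true', inferSchema 'true') "
        f"LOCATION '{root_path}/{file}'"
    )


sql = build_create_table_sql(DATABASE, file_name, ROOT_PATH)
print(sql)
# Inside the Synapse notebook you would then run: spark.sql(sql)
```

Deriving the table name from the file name keeps the notebook generic. Each new file dropped into the folder becomes its own table without any code changes.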

In your Synapse pipeline, use a Get Metadata activity to get all the file names present in the ADLS folder. Then iterate over the files with a ForEach activity and, inside the ForEach, pass each file name dynamically to the Synapse notebook as a parameter (the notebook cell marked with "Toggle parameter cell").
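The pipeline flow can be sketched in Python to show what each ForEach iteration hands to the notebook. With the "Child items" field selected, a Get Metadata activity returns `childItems`, a list of objects with `name` and `type` (`File` or `Folder`). In the pipeline itself, the ForEach items would typically be `@activity('Get Metadata1').output.childItems`, and the Notebook activity's base parameter would be `@item().name`. The activity name `Get Metadata1`, the parameter name `file_name`, and the `run_notebook` callback are assumptions. This is an illustration of the data flow, not how the pipeline engine executes.

```python
from typing import Callable


def files_from_metadata(output: dict) -> list[str]:
    """Return file names from a Get Metadata output, skipping subfolders."""
    return [
        item["name"]
        for item in output.get("childItems", [])
        if item.get("type") == "File"
    ]


def for_each_file(output: dict, run_notebook: Callable[[dict], None]) -> int:
    """Mimic a ForEach activity: call the notebook once per file, passing the
    file name as the (hypothetical) 'file_name' notebook parameter.
    Returns the number of notebook runs triggered."""
    files = files_from_metadata(output)
    for name in files:
        run_notebook({"file_name": name})
    return len(files)


if __name__ == "__main__":
    # Example shape of a Get Metadata output with "Child items" selected.
    metadata_output = {
        "childItems": [
            {"name": "customers.csv", "type": "File"},
            {"name": "archive", "type": "Folder"},
            {"name": "orders.csv", "type": "File"},
        ]
    }
    calls: list[dict] = []
    count = for_each_file(metadata_output, calls.append)
    print(count, calls)
```

This loop runs the iterations one after another. The ForEach activity, by contrast, runs its iterations in parallel unless "Sequential" is checked, so the notebook should not depend on the order in which files are processed.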

To learn how to parameterize your notebook, please refer to the following video: Parameterize Synapse notebook in Azure Synapse Analytics

Hope this helps. Please let us know if you have any further queries.

------------------------------

- Please don't forget to accept the answer or upvote whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Want a reminder to come back and check responses? You can subscribe to notifications for this question.

- If you are interested in joining the VM program and helping shape the future of Q&A, you can become one of the Q&A Volunteer Moderators.