Because this is a data processing problem, it is best handled with a data flow. Read the source, then use the conditional split transformation to route the data into 3 different folders/files in a temporary destination on the data lake (SFTP is not yet available as a sink in data flows). Then use a copy activity to write the data to the SFTP location.

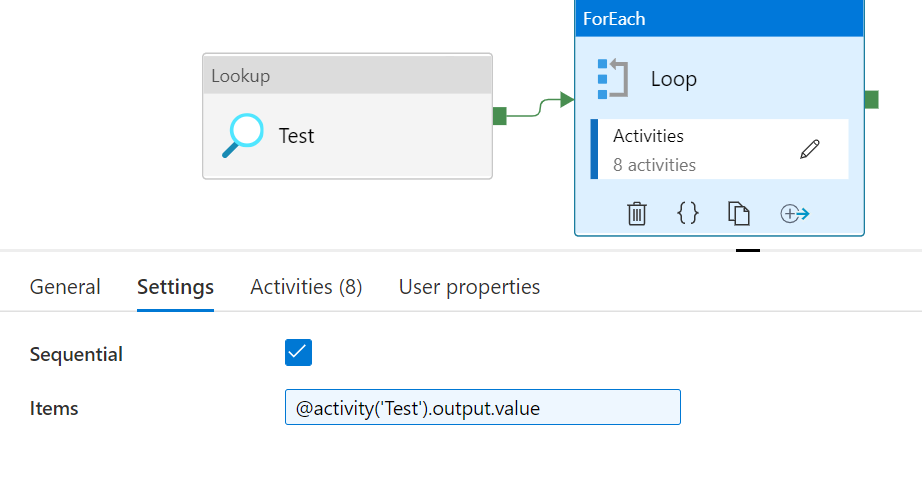

I also assume you want this solution to scale. Iterating over rows in a ForEach activity does not scale and will only work for small data loads.
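
To make the split logic concrete, here is a rough Python sketch of what the split step does conceptually: every row is routed to the first stream whose condition it matches, and anything left over goes to a default stream. This is not Data Factory code. The `region` column, the stream names, and the helpers `split_rows` and `to_csv` are all hypothetical, chosen only for illustration; substitute whatever conditions decide your three outputs.

```python
import csv
import io
from typing import Callable, Dict, Iterable, List, Tuple

Row = Dict[str, str]
Condition = Tuple[str, Callable[[Row], bool]]


def split_rows(rows: Iterable[Row], conditions: List[Condition],
               default: str = "other") -> Dict[str, List[Row]]:
    """Route each row to the first stream whose predicate matches.

    Rows that match no condition land in the default stream.
    """
    streams: Dict[str, List[Row]] = {name: [] for name, _ in conditions}
    streams[default] = []
    for row in rows:
        for name, predicate in conditions:
            if predicate(row):
                streams[name].append(row)
                break
        else:
            # No condition matched this row.
            streams[default].append(row)
    return streams


def to_csv(rows: List[Row], fieldnames: List[str]) -> str:
    """Serialize one stream as CSV text (one output file per stream)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical sample data and split conditions.
SAMPLE_ROWS: List[Row] = [
    {"id": "1", "region": "EU"},
    {"id": "2", "region": "US"},
    {"id": "3", "region": "APAC"},
    {"id": "4", "region": "EU"},
]
CONDITIONS: List[Condition] = [
    ("eu", lambda r: r["region"] == "EU"),
    ("us", lambda r: r["region"] == "US"),
]

streams = split_rows(SAMPLE_ROWS, CONDITIONS)
for name, stream_rows in streams.items():
    print(f"--- {name} ---")
    print(to_csv(stream_rows, ["id", "region"]), end="")
```

In the data flow, each of the three streams would end in its own sink on the lake, and the copy activity then moves those files to SFTP. The data flow runs this kind of split on Spark across the whole dataset, which is why it scales better than handling rows one at a time in a pipeline loop.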