Hello @BjornD Jensen,

Thanks for the question and for using the MS Q&A platform.

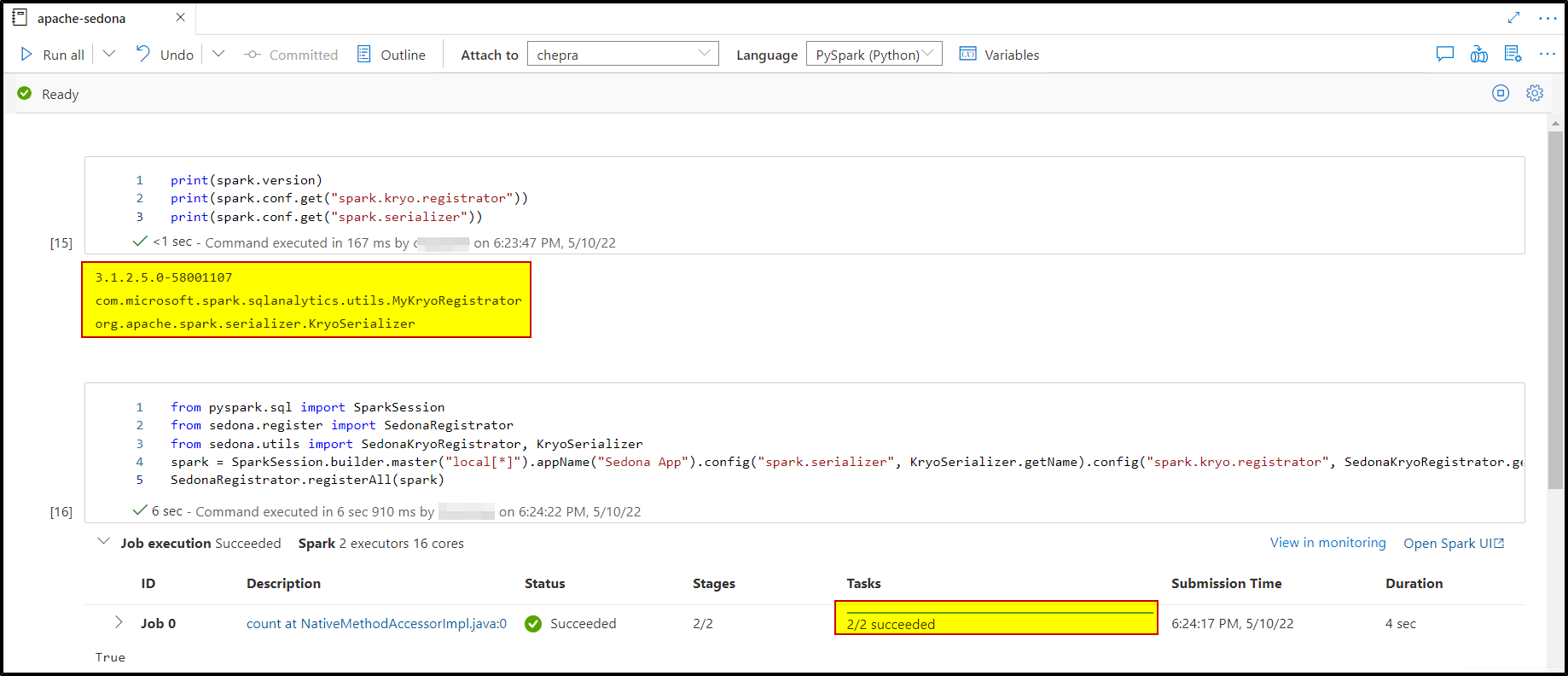

In my repro, I was able to run the above commands successfully without any issues.

I only installed the packages from the `requirements.txt` file and downloaded the following two JAR files:

- `sedona-python-adapter-3.0_2.12-1.0.0-incubating.jar`

- `geotools-wrapper-geotools-24.0.jar`
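If you run PySpark locally rather than on a managed cluster, you can load these two JARs through the Spark session configuration. The following is a minimal sketch that assumes both files sit in one local folder. The folder path and the `build_sedona_spark_conf` helper are hypothetical and not part of Sedona. The helper builds the `spark.jars` list plus the Kryo serializer settings shown in the Sedona 1.0 setup docs:

```python
import os
from typing import Dict

# The two JAR files listed above (note the plain hyphen before "1.0.0").
SEDONA_JARS = (
    "sedona-python-adapter-3.0_2.12-1.0.0-incubating.jar",
    "geotools-wrapper-geotools-24.0.jar",
)


def build_sedona_spark_conf(jar_dir: str, check_exists: bool = True) -> Dict[str, str]:
    """Return Spark config entries that load both Sedona JARs from jar_dir.

    Hypothetical helper: jar_dir is wherever you downloaded the JARs.
    """
    jar_paths = [os.path.join(jar_dir, name) for name in SEDONA_JARS]
    if check_exists:
        # Fail early with a clear message instead of a class-loading error later.
        missing = [p for p in jar_paths if not os.path.isfile(p)]
        if missing:
            raise FileNotFoundError("Missing JAR(s): " + ", ".join(missing))
    return {
        # spark.jars takes a comma-separated list of JAR paths.
        "spark.jars": ",".join(jar_paths),
        # Kryo settings as shown in the Sedona 1.0 setup docs.
        "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
        "spark.kryo.registrator": "org.apache.sedona.core.serde.SedonaKryoRegistrator",
    }


if __name__ == "__main__":
    # "./jars" is an assumed download folder; skip the existence check for a dry run.
    for key, value in build_sedona_spark_conf("./jars", check_exists=False).items():
        print(f"{key}={value}")
```

To use it, pass each entry to `SparkSession.builder.config(key, value)` before calling `getOrCreate()`. Then register the SQL functions with `SedonaRegistrator.registerAll(spark)` from `sedona.register`, as shown in the Sedona 1.0.x Python docs. On a managed service, uploading the JARs as workspace or cluster libraries may be the better route instead of setting `spark.jars`.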

Note: the `config.txt` file is not required.

If you are still seeing the same error, please share the complete stack trace of the error you are getting.

Hope this helps. Please let us know if you have any further questions.
