Hi @Inma,

Thank you for using the Microsoft Q&A platform and for posting your question here.

As I understand the issue, you are trying to copy data from an Azure SQL table to a .parquet file in ADLS. Because the table is very large, the copy fails with the error you described. Please let me know if my understanding is incorrect.

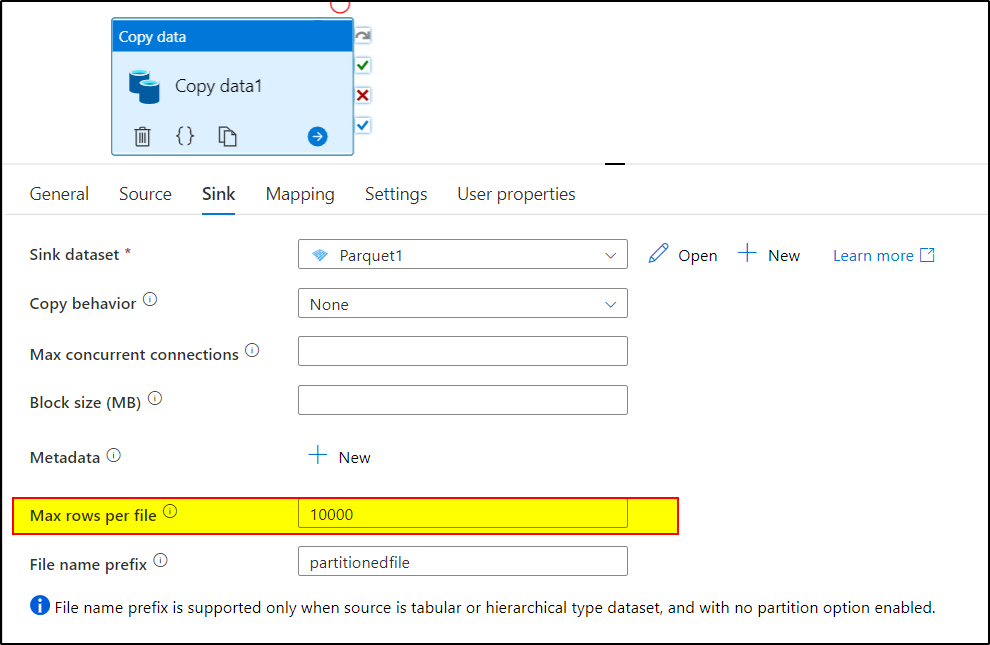

You can consider loading the data into multiple partitioned files instead of one single file.

To do that, take care of the following points:

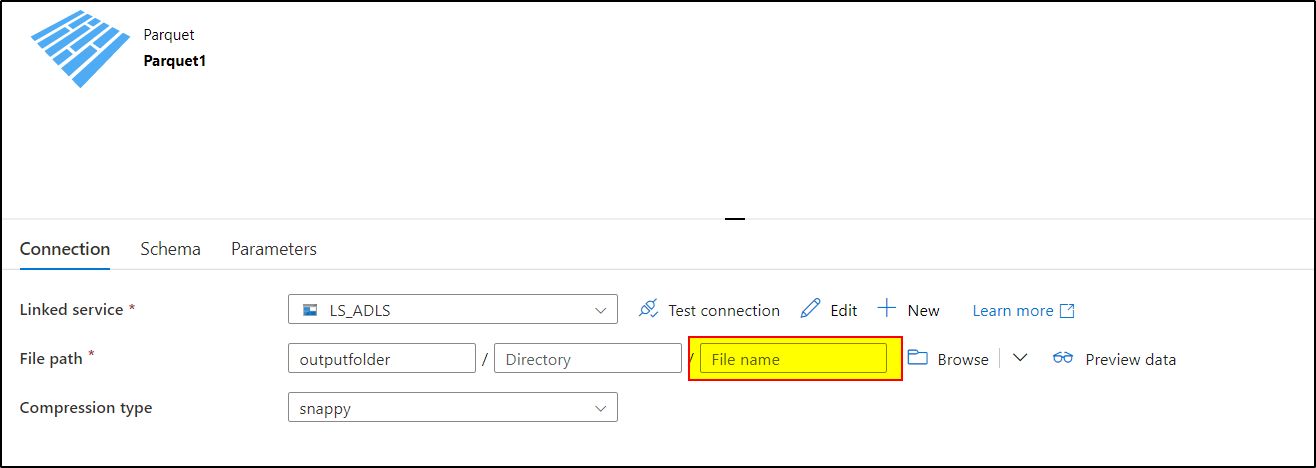

- Leave the file name section of the sink dataset blank instead of hardcoding it.

- In the sink settings, provide an integer value (for example, 10000) in the 'Max rows per file' option to write 10000 rows of data per partitioned file.
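For reference, the two points above can be sketched as the JSON that sits behind the copy activity. This is only a minimal sketch: it assumes the sink is ADLS Gen2 (hence `AzureBlobFSWriteSettings`), and the `part` file name prefix and the row counts are illustrative values, not taken from your pipeline. The helper names are hypothetical.

```python
import json
import math


def build_copy_type_properties(max_rows_per_file: int, file_name_prefix: str = "part") -> dict:
    """Build the typeProperties fragment of a Copy activity (Azure SQL -> Parquet)."""
    if max_rows_per_file <= 0:
        raise ValueError("max_rows_per_file must be a positive integer")
    return {
        "source": {"type": "AzureSqlSource"},
        "sink": {
            "type": "ParquetSink",
            # Assumption: the target store is ADLS Gen2.
            "storeSettings": {"type": "AzureBlobFSWriteSettings"},
            "formatSettings": {
                "type": "ParquetWriteSettings",
                # Corresponds to the 'Max rows per file' option in the sink settings.
                "maxRowsPerFile": max_rows_per_file,
                # Illustrative prefix; the sink dataset file name itself stays blank.
                "fileNamePrefix": file_name_prefix,
            },
        },
    }


def expected_file_count(total_rows: int, max_rows_per_file: int) -> int:
    """Rough number of output files for a given row count."""
    return math.ceil(total_rows / max_rows_per_file)


if __name__ == "__main__":
    print(json.dumps(build_copy_type_properties(10000), indent=2))
    # Illustrative row count only.
    print(expected_file_count(1_250_000, 10000))
```

You can compare the printed JSON with the code view of your own copy activity to confirm that the sink picked up the setting.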

Hope this helps. Please let us know if you have any further queries.

------------------------------

- Please don't forget to accept the answer or upvote whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Here is how

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators