
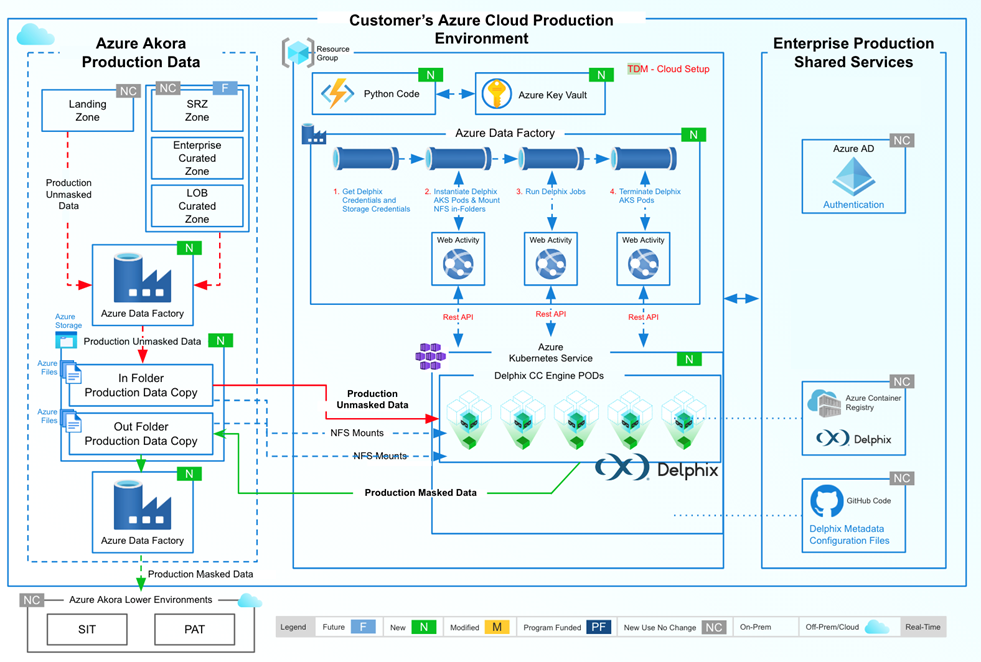

The following architecture outlines the use of Delphix Continuous Compliance (Delphix CC) in an Azure Data Factory extract, transform, and load (ETL) pipeline to identify and mask sensitive data.

Architecture


Note

This solution is specific to Azure Data Factory and Azure Synapse Analytics Pipelines. Delphix CC Profiling and Delphix CC Masking templates are not yet available for Microsoft Fabric Data Factory. Contact your Perforce Delphix account representative about Microsoft Fabric support.

Dataflow

The following dataflow corresponds to the previous diagram:

Data Factory extracts data from source data stores to a container in Azure Files by using the Copy Data activity. This container is referred to as the source data container, and the data is in CSV format.

Data Factory initiates an iterator (ForEach activity) that loops through a list of masking jobs configured within Delphix. These preconfigured masking jobs mask sensitive data in the source data container.

For each job in the list, the Initiate Masking activity authenticates and initiates the masking job by calling the REST API endpoints on the Delphix CC engine.

The Delphix CC engine reads data from the source data container and runs through the masking process.

In this masking process, Delphix masks data in-memory and writes the resultant masked data back to a target Azure Files container, which is referred to as the target data container.

Data Factory initiates a second iterator (ForEach activity) that monitors the masking job runs.

For each masking job that started, the Check Status activity checks the result of the masking run.

After all masking jobs complete successfully, Data Factory loads the masked data from the target data container to the specified destination.
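The control flow in these steps can be sketched in Python: start every job, then check every job, then load only after all of them succeed. This is a hypothetical illustration of the pipeline's logic, not the template's implementation. `start_job`, `wait_for`, and `load_masked_data` are placeholder callables that stand in for the Initiate Masking, Check Status, and load activities.

```python
from typing import Callable, Dict, Iterable


def run_masking_stage(job_ids: Iterable[int],
                      start_job: Callable[[int], int],
                      wait_for: Callable[[int], str],
                      load_masked_data: Callable[[], None]) -> Dict[int, str]:
    """Start all jobs, wait for all of them, and load only if every job succeeded."""
    # First ForEach: start every masking job and remember its execution ID.
    executions = {job_id: start_job(job_id) for job_id in job_ids}
    # Second ForEach: wait for each execution and record its final status.
    results = {job_id: wait_for(execution_id)
               for job_id, execution_id in executions.items()}
    failed = [job_id for job_id, status in results.items() if status != "SUCCEEDED"]
    if failed:
        # Stop before the load step so nothing is copied to the destination.
        raise RuntimeError(f"masking jobs did not succeed: {failed}")
    # Final step: copy masked data from the target data container to the destination.
    load_masked_data()
    return results
```

Starting every job before waiting on any of them lets the jobs run concurrently if the engine has capacity. This is one plausible reason to use two separate iterators rather than one.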

Components

Data Factory is an ETL service for scale-out serverless data integration and data transformation. It provides a code-free UI for intuitive authoring and unified monitoring and management. In this architecture, Data Factory orchestrates the entire data masking workflow. This workflow includes extracting data, initiating masking jobs, monitoring operations, and loading masked data into destination stores.

Azure Synapse Analytics is an analytics service that combines data integration, enterprise data warehousing, and big data analytics. In this architecture, Azure Synapse Analytics can serve as the destination for masked data and includes Data Factory pipelines for data integration.

Azure Storage is a cloud-based solution that provides scalable storage for both structured and unstructured data. In this architecture, it stores both the raw source data and the masked output data. Azure Storage serves as the intermediary storage layer for data that loads into destination data stores.

Azure Virtual Network is a private, isolated network environment in Azure. In this architecture, Virtual Network provides private networking capabilities for Azure resources that aren't a part of the Azure Synapse Analytics workspace. It allows you to manage access, security, and routing between resources.

Other components might include various source and destination data stores, depending on the specific use case. These components integrate into the architecture based on the data sources that you use, such as SAP, Salesforce, or Oracle EBS.

Alternatives

You can also perform data obfuscation by using Microsoft Presidio. For more information, see Presidio data protection and de-identification SDK.

Scenario details

Data volumes have increased rapidly in recent years. To unlock its strategic value, data needs to be dynamic and portable. Data in silos has limited strategic value and is difficult to use for analytics.

Breaking down data silos presents challenges:

Data must be transformed to fit a common format. ETL pipelines must be adapted to each system of record and must scale to support the massive datasets of modern enterprises.

Compliance with regulations regarding sensitive information must be maintained when data is moved from systems of record. Customer data and other sensitive elements must be obscured without affecting the business value of the dataset.

What is Data Factory?

Data Factory is a managed, serverless data integration service. It provides a visual experience for integrating data sources with more than 100 built-in, maintenance-free connectors at no added cost. Easily construct ETL and extract, load, transform (ELT) processes code-free in an intuitive environment, or write your own code. To unlock your data's power through business insights, deliver integrated data to Azure Synapse Analytics. Azure Synapse Analytics also includes Data Factory pipelines.

What is Delphix CC?

Delphix CC identifies sensitive information and automates data masking. It offers an automated, API-driven way to provide secure data.

How do Delphix CC and Data Factory automate compliant data delivery?

Delphix simplifies consistent data compliance, while Data Factory enables connecting and moving data. Together, Delphix and Data Factory combine industry-leading compliance and automation offerings to simplify the delivery of on-demand, compliant data.

This solution uses Data Factory data source connectors to create two ETL pipelines that automate the following steps:

Read data from the system of record and write it to CSV files in Azure Blob Storage.

Provide Delphix CC with requirements to identify columns that might contain sensitive data and assign appropriate masking algorithms.

Run a Delphix masking job against the files to replace sensitive data elements with similar but fictitious values.

Load the compliant data to any Data Factory-supported data store.
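The profiling step can be made concrete with a short Python sketch. It scans a CSV extract, flags columns whose names or sampled values match simple patterns, and records a masking algorithm for each flagged column. The rules, domain labels, and algorithm names are hypothetical stand-ins. Delphix CC profiling uses its own profiling configuration and algorithms, which you set up in the engine.

```python
import csv
import io
import re
from typing import Dict, List

# Hypothetical profiling rules: (domain label, column-name pattern,
# value pattern or None, masking algorithm name). Illustrative only.
RULES = [
    ("SSN", re.compile(r"ssn|social", re.I), re.compile(r"^\d{3}-\d{2}-\d{4}$"), "SsnMask"),
    ("EMAIL", re.compile(r"e-?mail", re.I), re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"), "EmailMask"),
    ("FIRST_NAME", re.compile(r"first_?name|fname", re.I), None, "FirstNameLookup"),
]


def profile_csv(text: str, sample_rows: int = 100) -> Dict[str, str]:
    """Return {column: algorithm} for columns whose name or sampled values match a rule."""
    reader = csv.DictReader(io.StringIO(text))
    columns = reader.fieldnames or []
    samples: Dict[str, List[str]] = {c: [] for c in columns}
    # Collect up to sample_rows values per column.
    for i, row in enumerate(reader):
        if i >= sample_rows:
            break
        for c in columns:
            samples[c].append(row[c] or "")
    inventory: Dict[str, str] = {}
    for c in columns:
        values = [v for v in samples[c] if v]
        for _domain, name_re, value_re, algorithm in RULES:
            name_hit = bool(name_re.search(c))
            # A value rule matches only if every non-empty sampled value fits the pattern.
            value_hit = bool(value_re and values and all(value_re.match(v) for v in values))
            if name_hit or value_hit:
                inventory[c] = algorithm  # first matching rule wins
                break
    return inventory
```

For example, a column named `tax_id` whose values all look like `123-45-6789` is flagged by its values even though its name doesn't match. This mirrors the idea of reviewing an inventory of flagged columns before you create a masking job.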

Potential use cases

Safely activate Azure data services for industry-specific solutions

Identify and mask sensitive data in large and complex applications, where customer data is otherwise difficult to identify. Delphix enables users to automatically move compliant data from sources like SAP, Salesforce, and Oracle E-Business Suite (EBS) to high-value service layers, like Azure Synapse Analytics.

Use Microsoft Azure connectors to safely unlock, mask, and migrate your data from any source.

Solve complex regulatory compliance for data

Use the Delphix Algorithm Framework to address regulatory requirements for your data.

Apply data-ready rules for regulatory needs, such as California Consumer Privacy Act (CCPA), General Data Protection Law (Lei Geral de Proteção de Dados, LGPD), and Health Insurance Portability and Accountability Act (HIPAA).

Accelerate the DevSecOps shift left

Mask sensitive data in centralized Data Factory pipelines to provide production-grade data to development and analytics pipelines, such as Azure DevOps, Jenkins, and Harness, and to other automation workflows.

Mask data consistently across data sources to maintain referential integrity for integrated application testing. For example, if the name George is masked to Elliot, it must always be masked to Elliot, and a given Social Security number (SSN) must always be masked to the same SSN, whether George and George's SSN appear in Oracle, Salesforce, or SAP.
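One common way to get this kind of consistency is a keyed, deterministic lookup. You hash the normalized original value with a secret key and use the result to pick a replacement. The following Python sketch uses that technique. It isn't the Delphix algorithm, and the key, the replacement list, and the SSN output format are assumptions. Which fictitious name an input maps to depends on the key, so George won't necessarily become Elliot here. What the sketch guarantees is that George maps to the same name wherever it appears.

```python
import hashlib
import hmac

# Hypothetical replacement list and key. A real deployment keeps the key secret
# and uses a much larger, realistic lookup list.
FIRST_NAMES = ["Elliot", "Maya", "Priya", "Jonas", "Keiko", "Omar", "Lena", "Tomas"]
SECRET_KEY = b"example-masking-key"


def mask_first_name(value: str) -> str:
    """Deterministically map a name to a fictitious name with a keyed hash lookup."""
    normalized = value.strip().lower().encode("utf-8")  # ignore case and stray spaces
    digest = hmac.new(SECRET_KEY, normalized, hashlib.sha256).digest()
    index = int.from_bytes(digest[:8], "big") % len(FIRST_NAMES)
    return FIRST_NAMES[index]


def mask_ssn(value: str) -> str:
    """Deterministically map an SSN to a fictitious SSN-shaped value, ignoring formatting."""
    digits = "".join(ch for ch in value if ch.isdigit())
    digest = hmac.new(SECRET_KEY, b"ssn:" + digits.encode("ascii"), hashlib.sha256).digest()
    n = int.from_bytes(digest[:8], "big") % 10**9
    s = f"{n:09d}"
    return f"{s[:3]}-{s[3:5]}-{s[5:]}"
```

Applied to an Oracle row with `George` and `123-45-6789`, and to a Salesforce row with `george ` and `123456789`, both functions return identical masked values, so joins across the two systems still line up. Without the key, the mapping can't be reproduced. Because the name list is small, several originals share each replacement, which is one way a mapping can be made deliberately many-to-one.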

Speed up AI and machine learning algorithm training by using compliant analytics

Mask data without increasing training cycles.

Retain data integrity while masking to avoid affecting model and prediction accuracy.

Use any Data Factory or Azure Synapse Analytics connector to facilitate a given use case.

Key benefits

- Universal connectivity

- Realistic, deterministic masking that maintains referential integrity

- Preemptive identification of sensitive data for key enterprise applications

- Native cloud implementation

- Template-based deployment

- Scalable

Example architecture

The following example shows how you might architect an environment for this masking use case.

The previous example architecture has the following components:

- Data Factory or Azure Synapse Analytics ingests and connects to production, unmasked data in the landing zone.

- Data is moved to data staging in Storage.

- A Network File System (NFS) mount of production data to Delphix CC PODs enables the pipeline to call the Delphix CC service.

- Masked data is returned for distribution within Data Factory and lower environments.

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that you can use to improve the quality of a workload. For more information, see Well-Architected Framework.

Security

Security provides assurances against deliberate attacks and the misuse of your valuable data and systems. For more information, see Design review checklist for Security.

Delphix CC irreversibly masks data values with realistic data that remains fully functional, which enables the development of higher-quality code. Among the algorithms that Delphix CC provides to transform data to user specifications is a patented algorithm that intentionally produces data collisions and lets you salt the masked data with specific values that validation routines on the masked dataset might need. From a zero trust perspective, operators don't need access to the actual data to mask it. The entire delivery of masked data from point A to point B can be automated through APIs.
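As an illustration of that API-driven automation, the following Python sketch logs in to a masking engine, starts a preconfigured job, and polls until the run finishes. The Initiate Masking and Check Status activities perform the same calls for each job. Several details are assumptions based on the Delphix masking API, so confirm them against your engine's API documentation before use:

- The endpoint paths (`/masking/api/login` and `/masking/api/executions`).
- The `Authorization`, `executionId`, and `status` fields.
- The HTTPS scheme.
- The terminal status values.

The `transport` parameter is a hypothetical hook that lets you test the flow without a live engine.

```python
import json
import time
import urllib.request
from typing import Callable, Optional

# A transport sends (method, url, json_body, headers) and returns the decoded JSON reply.
Transport = Callable[[str, str, Optional[dict], dict], dict]

# Assumed terminal execution statuses; confirm the values your engine reports.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "CANCELLED"}


def urllib_transport(method: str, url: str, body: Optional[dict], headers: dict) -> dict:
    """Send a JSON request with the standard library and decode the JSON reply."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    request = urllib.request.Request(
        url, data=data, method=method,
        headers={"Content-Type": "application/json", **headers})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


class MaskingClient:
    """Minimal client for the assumed Delphix masking REST endpoints."""

    def __init__(self, host: str, transport: Transport = urllib_transport):
        self.base = f"https://{host}/masking/api"  # assumed base path
        self.transport = transport
        self.token: Optional[str] = None

    def login(self, username: str, password: str) -> None:
        # Assumed: the session token comes back in an "Authorization" field.
        reply = self.transport("POST", f"{self.base}/login",
                               {"username": username, "password": password}, {})
        self.token = reply["Authorization"]

    def _auth(self) -> dict:
        if self.token is None:
            raise RuntimeError("call login() first")
        return {"Authorization": self.token}

    def start_job(self, job_id: int) -> int:
        """Start a preconfigured masking job and return its execution ID."""
        reply = self.transport("POST", f"{self.base}/executions",
                               {"jobId": job_id}, self._auth())
        return reply["executionId"]

    def wait(self, execution_id: int, poll_seconds: float = 30.0,
             timeout_seconds: float = 3600.0,
             sleep: Callable[[float], None] = time.sleep) -> str:
        """Poll an execution until it reaches a terminal status or times out."""
        waited = 0.0
        while True:
            reply = self.transport("GET", f"{self.base}/executions/{execution_id}",
                                   None, self._auth())
            status = reply["status"]
            if status in TERMINAL_STATUSES:
                return status
            if waited >= timeout_seconds:
                raise TimeoutError(f"execution {execution_id} still {status}")
            sleep(poll_seconds)
            waited += poll_seconds
```

In a pipeline, you'd call `login` once with the credentials configured in the Web Activity, call `start_job` for each job ID, and then call `wait` for each returned execution ID.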

Cost Optimization

Cost Optimization focuses on ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Design review checklist for Cost Optimization.

To see how your specific requirements affect cost, adjust values in the Azure pricing calculator.

Azure Synapse Analytics: You can scale compute and storage levels independently. Compute resources are charged per hour, and you can scale or pause these resources on demand. Storage resources are billed per terabyte, so your costs increase as you ingest data.

Data Factory or Azure Synapse Analytics: Costs are based on the number of read and write operations, monitoring operations, and orchestration activities for each workload. Costs increase with each extra data stream and the amount of data that each one processes.

Delphix CC: Unlike other data compliance products, Delphix doesn't require a full physical copy of the environment to perform masking.

Environment redundancy can be expensive for several reasons:

- The time that it takes to set up and maintain the infrastructure

- The cost of the infrastructure itself

- The time that you spend repeatedly loading physical data into the masking environment

Performance Efficiency

Performance Efficiency refers to your workload's ability to scale to meet user demands efficiently. For more information, see Design review checklist for Performance Efficiency.

Delphix CC is horizontally and vertically scalable. The transformations occur in memory and can be parallelized. The product runs both as a service and as a multi-node appliance, so you can design solution architectures of any size based on the application. Delphix is the market leader in delivering large masked datasets.

You can increase the number of masking streams to engage multiple CPU cores in a job, and you can adjust the memory allocated to a job. For more information, see Create masking jobs.

For optimal performance on datasets larger than 1 TB, Delphix Hyperscale Masking breaks the datasets into numerous modules and then orchestrates the masking jobs across multiple Continuous Compliance engines.
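The split-and-distribute idea behind this approach can be sketched in Python. The sketch only plans contiguous row ranges and assigns them to engines in round-robin order. The engine names are hypothetical, and the actual splitting and orchestration logic in Hyperscale Masking may differ.

```python
from typing import Dict, List, Sequence


def plan_chunks(total_rows: int, rows_per_chunk: int) -> List[range]:
    """Split row indices [0, total_rows) into contiguous chunks."""
    if rows_per_chunk <= 0:
        raise ValueError("rows_per_chunk must be positive")
    return [range(start, min(start + rows_per_chunk, total_rows))
            for start in range(0, total_rows, rows_per_chunk)]


def assign_round_robin(chunks: Sequence[range],
                       engines: Sequence[str]) -> Dict[str, List[range]]:
    """Distribute chunks across engines in round-robin order."""
    if not engines:
        raise ValueError("at least one engine is required")
    plan: Dict[str, List[range]] = {engine: [] for engine in engines}
    for i, chunk in enumerate(chunks):
        plan[engines[i % len(engines)]].append(chunk)
    return plan
```

With 10 rows, chunks of 4, and two engines, `engine-a` receives rows 0 to 3 and 8 to 9, and `engine-b` receives rows 4 to 7. Each engine can then mask its chunks independently, in parallel with the others.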

Deploy this scenario

In Data Factory, deploy both the Delphix CC Profiling and Delphix CC Masking templates. These templates work for both Azure Synapse Analytics and Data Factory pipelines.

In the Copy Data components, configure the desired source and target data stores. In the Web Activity components, input the Delphix application IP address or host name and the credentials to authenticate with Delphix CC APIs.

Run the Delphix CC Profiling Data Factory template for initial setup and whenever you need to re-identify sensitive data, such as after a schema change. This template provides Delphix CC with the initial configuration that it requires to scan for columns that might contain sensitive data.

Create a rule set that indicates the collection of data that you want to profile. Run a profiling job in the Delphix UI to identify and classify sensitive fields for that rule set and assign appropriate masking algorithms.

Review and modify results from the inventory screen as desired. When you want to apply masking, create a masking job.

In the Data Factory UI, open the Delphix CC Masking Data Factory template. Provide the masking job ID from the previous step, then run the template.

Masked data appears in the target data store of your choice.

Note

You need the Delphix application IP address or host name, plus credentials to authenticate to the Delphix APIs.

Contributors

Microsoft maintains this article. The following contributors wrote this article.

Principal authors:

- Tess Maggio | Product Manager 2

- Arun Saju | Senior Staff Engineer

- David Wells | Senior Director, Continuous Compliance Product Lead

Other contributors:

- Jon Burchel | Senior Content Developer

- Abhishek Narain | Senior Program Manager

- Doug Smith | Global Practice Director, DevOps, CI/CD

- Michael Torok | Senior Director, Community Management & Experience


Next steps

See the following Delphix resources:

Learn more about the key Azure services in this solution:

- What is Data Factory?

- What is Azure Synapse Analytics?

- Introduction to Storage

- What is Virtual Network?