Load Testing Visual Studio Online

By Ed Glas, Jiange Sun, and Bill Barnett

In this post we discuss how we load test Visual Studio Online. This post illustrates how vital load testing is to our development lifecycle and to having a healthy service.

Cloud Cadence

First, some background on our engineering processes. This is important context for the rest of the paper.

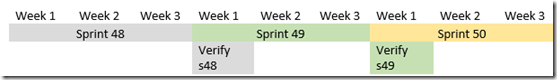

Our development teams work in 3 week sprints, and at the end of each sprint, in parallel with the first week of the next sprint, we validate the bits and then deploy to the service:

An important principle we have is that our product is ready to ship at the end of the sprint. Verification week is not for finishing up testing new features; it is for doing final verification that we are ready to deploy. We do not want to find new issues during verification week, as our schedules are tight and blocking bugs will knock us off schedule and cause us to miss our deployment day. Once we get off schedule, it ends up snowballing into the next sprint. During verification week, we spend Monday through Wednesday verifying the product in our test environments. If we are green on Thursday we deploy to a pre-production environment, which we verify on Thursday and Friday. Once we have the green light in pre-prod, we deploy to production, which is targeted for Monday morning.

How do we make sure that at the end of the sprint our bits are ready to ship? We keep a constant high level of quality in our working branch. The tests we use during verification week are the same tests we run continuously in our working branch. If a test goes from green to red, we react quickly to get it back to green.

We have a similar approach with our load tests. We never want to stray far from being ready to deploy. However, our load test results require analysis to determine if we are green or red, so we do not run them continuously but we do run them regularly throughout a sprint.

Why Load Testing?

Load testing is a critical part of our software development process. We find many serious issues in load testing, everything from performance regressions, deadlocks and other timing-related bugs, to memory leaks that can’t be effectively found with functional tests. Load testing is critical to being able to confidently deploy.

Visual Studio Online and Team Foundation Server

Throughout this paper I refer to both Visual Studio Online and Team Foundation Server. Several of the services available in Visual Studio Online were previously packaged as an offering named Team Foundation Service (which has been retired as a product name). Most of the capabilities of Visual Studio Online are derived from a common code base that is shared with Team Foundation Server, Microsoft’s on-premises offering for software development teams. Our product team builds both Visual Studio Online and Team Foundation Server, and these offerings will continue to co-evolve. Since they are derived from the same code base, we are able to leverage test assets from TFS to test Visual Studio Online.

Load Testing Visual Studio Online and Team Foundation Server

As you will see, we use load tests to test a variety of different things in VSO and TFS. The TFS team was the very first adopter of Visual Studio load tests back in the 2003 and 2004 time frame, and TFS continues to use Visual Studio load tests for all load testing today. In addition to using load agents to test our on-premises product, we recently began using the Visual Studio Online cloud-based load testing service to load test Visual Studio Online.

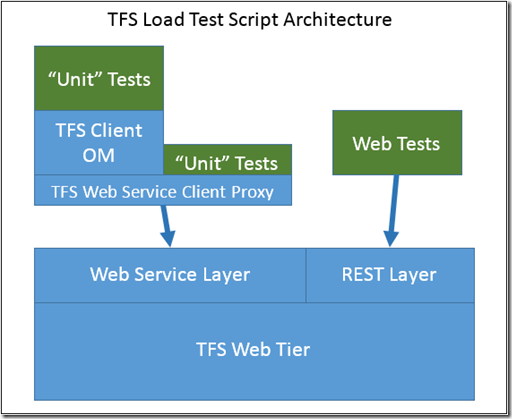

At the heart of all our load tests is a set of “scripts” that we use to drive load to the various subsystems in TFS. The test scripts we use in our load tests use different test types and target different layers of our architecture, depending on the subsystem they are targeting. TFS has web service endpoints, REST endpoints, and web front ends. Visual Studio and other thick clients call TFS via the client object model (OM), while our web clients call TFS via a set of private REST endpoints on the server.

In order to generate the massive loads we need, our test scripts must be very efficient. We use Web tests to drive load to our Web site and Web pages, and “unit” tests to drive load to our Web services. I put quotes around “unit” because these are really API integration tests, not unit tests in the purist sense. Most of our tests drive load at the client OM layer. We have a few tests that target the underlying (non-public) Web services proxy layer because the client OM doesn’t scale well on a single client as we scale up the load.

All test scripts use common configuration to determine which TFS instance to target in the test, and scripts can be configured to target an on-premises server or an instance of Visual Studio Online running on Azure. This is a fundamental point, and key to us being able to re-use the same scripts to drive load to different TFS instances configured in a variety of ways.
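As an illustration only, the idea of one shared configuration that points every script at a chosen instance could be sketched in Python as follows. This is not the TFS team's actual test code: the environment variable `LOADTEST_TARGET`, the target names, and both URLs are hypothetical placeholders.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetConfig:
    """Where a load script should send its requests."""
    name: str
    base_url: str
    is_cloud: bool


# Hypothetical targets; the names and URLs are placeholders, not real endpoints.
TARGETS = {
    "onprem": TargetConfig("onprem", "http://tfs.example.local:8080/tfs", False),
    "cloud": TargetConfig("cloud", "https://account.example.com", True),
}


def resolve_target(env=None):
    """Pick the target named by LOADTEST_TARGET (a made-up variable name).

    Falls back to the on-premises target when the variable is not set.
    """
    env = os.environ if env is None else env
    key = env.get("LOADTEST_TARGET", "onprem")
    if key not in TARGETS:
        raise ValueError(f"unknown target {key!r}; expected one of {sorted(TARGETS)}")
    return TARGETS[key]


def collection_url(target, collection):
    """Build the URL a script would use for a given collection."""
    return f"{target.base_url.rstrip('/')}/{collection}"


if __name__ == "__main__":
    target = resolve_target()
    print(target.name, collection_url(target, "DefaultCollection"))
```

Because every script resolves its target through the same function, switching a whole load test from an on-premises server to a cloud instance is a one-setting change rather than an edit to each script.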

We vary the script test mix and load patterns to suit the purpose of each load test. Below in this paper we will go through the different types of load tests we run and their purpose, and as we do we will outline the type of load patterns and test mix we use.

Testing On Azure vs. On-premises

Two critical aspects to successfully load testing TFS are 1) carefully scripting the server to simulate realistic load, and 2) carefully managing our data shape. TFS runs both on-premises and in the cloud on Azure. The code that runs on-premises and in the cloud is largely the same, but the topology and data scale are different. In our on-premises installations, we see scale up of a single instance, while in our cloud service we see scale out of many, many customers and many collection databases.

One difference between TFS on-premises and TFS on Azure is that on Azure we have many thousands of customer collections. In our on-premises product, a large installation will have on the order of tens of collections, but each of these collections can have many terabytes of data.

When load testing TFS on Azure, we start by load testing on Azure dev fabric. Azure dev fabric is a high-fidelity emulator that runs in a local development environment. While running on dev fabric is not sufficient, we are able to test a significant part of the product, and as a result catch a significant number of bugs before going to real Azure. Azure dev fabric has the advantage that our large data scale environments are fast to set up and easier to debug. But of course there are major architectural differences, the most prominent being that in dev fabric we use on-premises SQL, whereas on Azure we use SQL Azure. To get to an “interesting” number of collections on dev fabric, we really crank up the tenant density per database.

Once we have stabilized on Azure dev fabric, we move to load testing on Azure. When testing on Azure, we use the Visual Studio Online load testing service.

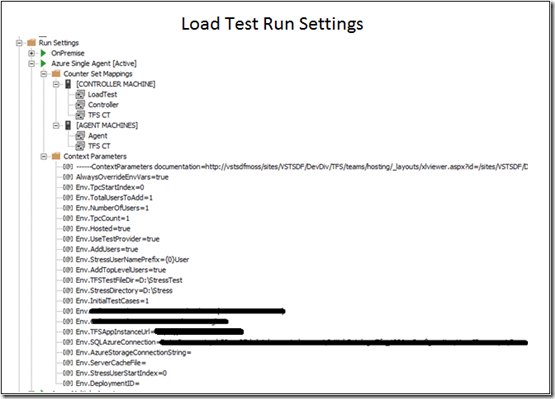

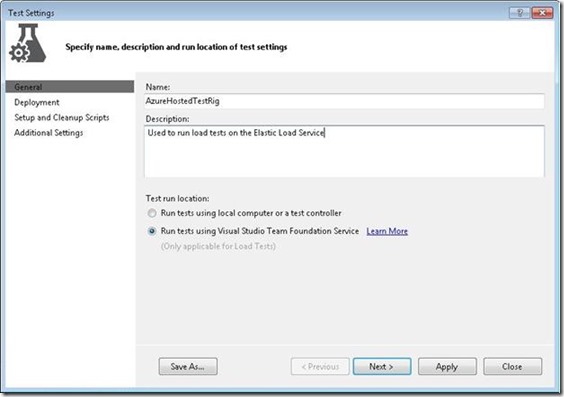

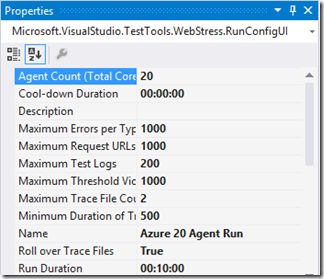

Here is the test setting we use to run our load tests using the Visual Studio Online load testing agents:

And here is the Agent Count property in the load test run settings in the load test, which controls the number of agents our test will run on:

See the help topic here for a complete walkthrough of how to use the load testing service.

Load Generation on Azure

A big difference between testing on Azure and testing on-premises is the load generators we use. When testing our on-premises product, we simply use on-premises load agents. Until we recently started dogfooding the Visual Studio Online load testing service, we did not have a good answer for load generators when testing on Azure. We tried a lot of different things:

- Load agents on corpnet. Our Corpnet network proxy was the bottleneck and shut us down at relatively light loads.

- Load agents on Azure with load controller on corpnet. The network connection between the agents and controller was too flaky, leading to unreliable test runs (a run would fail partway into a many-hour run).

- Load agents and controller on Azure VMs. Again the agent and controller network connection wasn’t reliable enough, causing runs to fail. Fundamentally we see more network noise in the cloud, and the connections between the agent and controller weren’t built for the noisy network we see in the cloud.

- Load agents running independently on VMs, test results manually merged. Merging results was painful, but at least it was reliable!

We are on tight deployment schedules and our load runs go for 8 hours, so we must be able to reliably get results from our load tests. Otherwise we risk deploying with blind spots for failed runs, or delaying the deployment until we get a complete set of results.

Over the past six months we have been dogfooding the Visual Studio Online load testing service, which is hosted in Azure. This is a great service that has enabled us to drive load reliably at scale from Azure, solving one of our critical needs for load testing the service.

Data Collection on Azure

Another big challenge testing in Azure has been collecting performance data from the system under test. For TFS, we have the following types of interesting data we collect from the TFS application tier:

- Perf counters. We collect CPU, memory, disk, network, plus TFS-specific counters and ASP.NET counters

- Event log data

- TFS Activity logs. The activity log has a log entry for each call into the TFS web service or REST APIs. It records the command, time of the request and response (so we can compute server response time), the outcome (success/failure), as well as a few other fields.

- TFS ETW traces. These are highly configurable internal traces in our product code that we use to debug issues.
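To make the activity log bullet above concrete, here is a minimal Python sketch of how server response time and per-command failure counts could be derived from such entries. The row layout (command, request time, response time, success flag), the command names, and the sample values are assumptions for illustration; the real activity log schema is not shown in this paper.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def summarize_activity(entries):
    """Aggregate (command, started, finished, succeeded) rows per command."""
    totals = defaultdict(lambda: {"calls": 0, "failures": 0, "total_ms": 0.0})
    for command, started, finished, succeeded in entries:
        row = totals[command]
        row["calls"] += 1
        if not succeeded:
            row["failures"] += 1
        # Server response time = response timestamp minus request timestamp.
        row["total_ms"] += (finished - started) / timedelta(milliseconds=1)
    return {
        command: {
            "calls": row["calls"],
            "failures": row["failures"],
            "avg_ms": row["total_ms"] / row["calls"],
        }
        for command, row in totals.items()
    }


if __name__ == "__main__":
    # Made-up sample rows, not real log data.
    t0 = datetime(2013, 11, 1, 12, 0, 0)
    log = [
        ("QueryWorkItems", t0, t0 + timedelta(milliseconds=100), True),
        ("QueryWorkItems", t0, t0 + timedelta(milliseconds=300), False),
        ("DownloadFile", t0, t0 + timedelta(milliseconds=50), True),
    ]
    for command, stats in sorted(summarize_activity(log).items()):
        print(command, stats)
```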

The on-premises Visual Studio load testing product enables you to collect performance counters from the system under test, but in order to do so the load test controller must be able to connect to the machines under test via non-internet friendly ports (read: not port 80 or 443). This simply does not work well against machines running in the cloud, and in fact is not supported by the load testing service. On-premises you can also configure a data collection agent on the machine under test, but installing and configuring this agent is not supported by the load testing service.

On our production service, we have a pretty rich data collection and monitoring system in place for our service that collects all this data and stores it in a data warehouse for mining and analysis.

We leverage this system for our cloud load tests as well. Really I believe this is the right pattern to follow for load testing: use the same system you use to collect data and monitor your production service to monitor your test runs. This has a bunch of advantages: your monitoring system gets tested too, and any improvements you make for your test environments carry over to prod, and vice versa.

The one exception here is perfmon counters, as we collect those via a different means on the service. We are manually collecting perf counters on the TFS web and worker roles (the system under test) by kicking off perfmon to log the counters to a file before each run.

The downside with this approach is you do not get the nice view in the load test analyzer that ties together performance data collected from the load agents with the perf counter data collected from the system under test. We are able to construct these views using our monitoring system, however.

The load testing service does not solve our problem of collecting perf counters from the system under test, as this is not yet supported. I know they have plans to cover this, but it is too early to disclose them. The load testing service does supply the same rich set of data from the load test agents, and we collect our activity log data using a data collector.

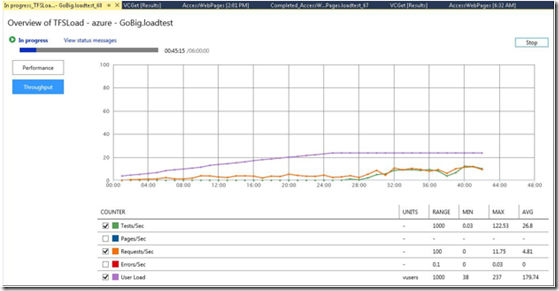

Here is a screen shot of our GoBig load test running on the load testing service.

This is a simplified experience when compared to data we get when running a load test using local load agents. However, we get enough data to determine if the test is running successfully, which is the main thing we are looking for while the test is running. We want to make sure we don’t let the test run for 8 hours only to find it wasn’t configured properly.

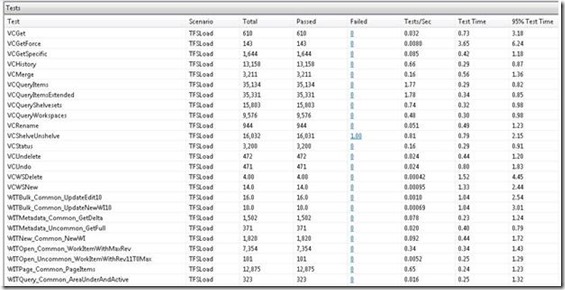

Below are two screen shots of using the Load Test Analyzer to analyze a GoBig load test result. Post-run analysis is the same experience as analyzing a run using local load agents.

Here is the key indicators graph:

And the Tests table with a roll up of test results per test in the load test. Again, this is the same experience whether generating load from Azure or from local load agents.

Direction We Are Headed

I have long been a proponent that you should use your production environment monitoring system to also monitor your system under test during a load test, and am happy that we are doing that in our load testing. That way you are testing your operational environment and operational readiness along with the app. After all, the tools you need to debug a problem during or after a load test are the same tools you need to debug problems in production. This is a key tenet of DevOps.

We are now actively working on adopting our DevOps monitoring solutions for Visual Studio Online. This includes performance monitoring on the app tier, as well as outside-in monitors from Global Service Monitoring. This configuration will enable us to relatively easily configure monitoring in our test environments in the same way as production.

Types of Load Testing We Do

At this point we have described how we do load testing on premises and in the cloud. Now we will move to describing the different types of load tests we run, and what the purpose of each of these tests is.

Performance Regression Testing

One of our most important load testing activities each sprint is to ensure that no performance or scale regressions are introduced when an update is deployed to the TFS Service. The data scale on the service has grown very large, as have our peak loads: we regularly see over 1000 requests per second (RPS) on the service. Many of these requests are for a large amount of data (Version Control file downloads for example) or require a fair amount of SQL processing (Work Item queries for example).

In order to ensure that the service can handle this load, we need to test on a similar configuration with a similar amount of load. To generate the amount of load needed we need to use the Load Testing Service with multiple load test agents because the Version Control tests included in our load test make heavy use of the local disk to create, edit, check in, and later get files.

By using a large test environment and using the Load Testing Service to generate high load levels, we have been able to identify several performance regressions during verification rather than in production.

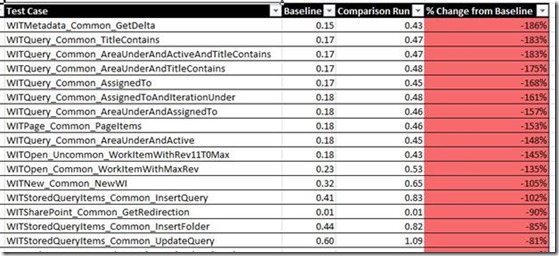

We use the Load Testing service to run a six hour load test with a load of over 800 requests per second. At the end of the run we download the load test result into our on-premises load test results database where we can view and analyze the results in either Visual Studio or in Excel using the Load Test reports plugin. In Excel we ran the Load Test Comparison report to compare the result of the current Sprint with the previous one. On the sheet that compares the test results we noticed that many of the Work Item Tracking tests in our load test had a higher execution time than the baseline result from the previous sprint:

This led us to further investigation and a fix for this regression.
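The kind of sprint-over-sprint comparison described above can be sketched in a few lines of Python. This is not the Excel Load Test Comparison report itself; the test names, the timings, and the 10% threshold are invented for illustration.

```python
def find_regressions(baseline, current, threshold=0.10):
    """Return tests whose average time grew by more than `threshold`.

    `baseline` and `current` map test name -> average execution time in
    seconds. The result is a list of (test, baseline, current, change)
    tuples sorted with the largest relative increase first.
    """
    regressions = []
    for test, base in baseline.items():
        if test not in current or base <= 0:
            continue  # nothing to compare against
        change = (current[test] - base) / base
        if change > threshold:
            regressions.append((test, base, current[test], change))
    return sorted(regressions, key=lambda r: r[3], reverse=True)


if __name__ == "__main__":
    # Hypothetical per-test averages from two sprints.
    previous_sprint = {"WIT.Query": 1.0, "VC.Get": 2.0, "WIT.Update": 0.5}
    current_sprint = {"WIT.Query": 1.5, "VC.Get": 2.1, "WIT.Update": 0.6}
    for test, base, now, change in find_regressions(previous_sprint, current_sprint):
        print(f"{test}: {base:.2f}s -> {now:.2f}s ({change:+.0%})")
```

A fixed percentage threshold is the simplest possible rule. As noted earlier, load test results need human analysis, so a check like this is only a first filter for where to look.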

Scale Testing

One of the big challenges with running the TFS service has been staying ahead of the scale curve. The pattern we have seen is that with each order of magnitude increase in the number of accounts and users, we have encountered new bottlenecks in scale. Logic would tell you that scale is linear and you can predict the next server tipping point by extrapolating out resource utilization on the server to see when you will run out of resources, and thus fall over. For example, if each new user takes 10KB of memory, then you can multiply out to see how many concurrent users it takes to run out of memory. At that point, you can simply add another application tier (AT), or increase your role size on Azure so the machine will have more memory.
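The naive linear estimate works out as follows. Only the 10KB-per-user figure comes from the example above; the 16 GB machine size and the 2 GB fixed overhead are assumptions chosen to make the arithmetic concrete.

```python
KB = 1024
GB = 1024 ** 3


def max_concurrent_users(total_memory_bytes, baseline_bytes, per_user_bytes):
    """Linear estimate: memory left after fixed overhead, divided per user."""
    if per_user_bytes <= 0:
        raise ValueError("per_user_bytes must be positive")
    available = total_memory_bytes - baseline_bytes
    if available <= 0:
        return 0
    return available // per_user_bytes


if __name__ == "__main__":
    # Assumed: a 16 GB machine with 2 GB used before any user connects.
    users = max_concurrent_users(16 * GB, 2 * GB, 10 * KB)
    print(users)  # 1468006
```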

However in practice, it is never linear, and the server almost always tips over in ways you didn’t foresee. As a result, it is very hard to predict where your system will fall over. And often there is a lot of engineering work required to overcome the scale bottleneck, so if you wait until it happens in production you will not have enough time to react and keep your site healthy.

To stay ahead of outages due to scale on TFS on Azure, we do targeted scale testing. On Azure, our most interesting scale factor is the number of accounts and users. In order to run interesting scale tests, we need to run against TFS instances with hundreds of thousands of accounts and users. Our initial approach to preparing this test environment was to start fresh and then run the scripts that do account and user creation to build up interesting data sets. As you can imagine, we found a number of stress bugs in our account and user creation code this way, but it still took two to three days to get an environment populated, which was unacceptable.

We changed our strategy for data population to instead create what we called a “golden instance” of the data, which could then be replicated into our test environments. We had already developed the data replication code for our functional tests, now we needed to do it at scale, which was a matter of doing the data population one time and then saving off the data. This has the advantage of being faster and more reliable, and the data has been through the same migration path as data on the service.

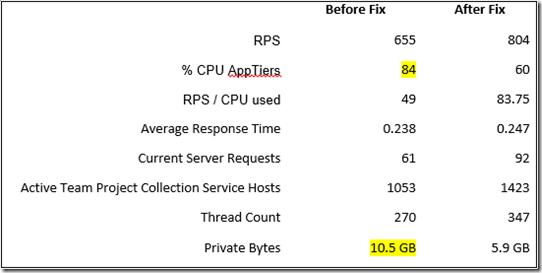

In a recent run using the load testing service, we found a large regression in both memory and CPU that was caused by one bug. This table shows the results gathered using perfmon during the load test before and after the bug was fixed:

Deployment Testing

As described above, we deploy to Azure at the end of each sprint. Visual Studio Online is architected into separate services that can be deployed to and serviced independently. The current set of services are:

- Shared Services, which handles user authentication, access control, and account management.

- Visual Studio Online Services, which manages the core TFS functions of work item tracking, version control, and build.

- Machine Pool Management Service, which manages the set of machines we use to do builds for customers on Visual Studio Online.

- Load testing service, which manages running load tests on the machine pool service.

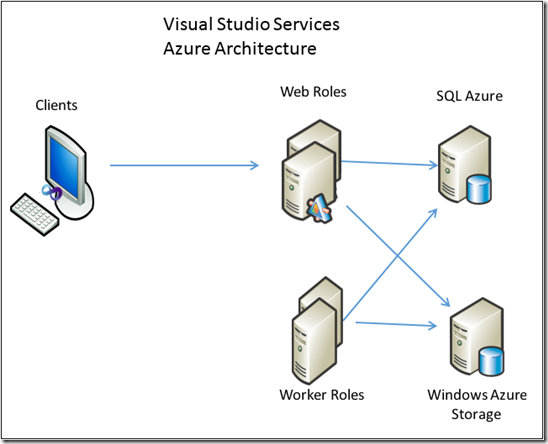

Each service has a unique architecture, but follows a similar pattern of web front ends hosted on web roles, job agents hosted on worker roles, and SQL databases hosted in SQL Azure.

Our deployments are designed to achieve zero downtime to our customers. In order to achieve that, we deploy in three phases:

Phase 1: deploy new binaries to our web front ends and job agents.

Phase 2: Run jobs to upgrade the databases.

Phase 3: Run jobs to do any necessary modifications of the data stored in the databases.

In order for this to result in zero downtime, each service must keep compatibility at the web front end, and more importantly, the service must be able to stay up even through the different phases of the upgrade, which means that new binaries must be able to work with the old db schema and data shapes.

What’s more, customer data is stored in 1000s of databases in SQL Azure. The code we use to service the databases actually runs as a set of jobs in the job service. We have found that running these jobs will put a large load and thus a large stress on the service. We need to ensure the service remains operational and responsive to our customers while sustaining the load from servicing.

To test our upgrades, we run a set of functional tests at each phase of the deployment to ensure the parts are compatible. But this isn’t sufficient for measuring downtime. To test the system remains available throughout the deployment, we run a load test while the upgrade is happening. The load test has the advantage of keeping constant activity going against the service so we can detect any outages. In this test it is important that we have a data shape on the test system that is similar to the data we have in production.
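One simple way to turn the results of a constant stream of requests into a list of outages is to look for runs of consecutive failures. The Python sketch below assumes a hypothetical input of (timestamp in seconds, succeeded) samples sorted by time, and an arbitrary rule that three failures in a row count as an outage. It is an illustration, not the analysis the load test tooling performs.

```python
def find_outages(samples, min_failures=3):
    """Return (first_failure_ts, last_failure_ts) for each outage.

    `samples` is a time-ordered list of (timestamp_seconds, succeeded).
    A run of at least `min_failures` consecutive failures is treated as
    an outage; shorter runs are ignored as isolated errors.
    """
    outages = []
    run = []  # timestamps of the current run of consecutive failures
    for timestamp, succeeded in samples:
        if succeeded:
            if len(run) >= min_failures:
                outages.append((run[0], run[-1]))
            run = []
        else:
            run.append(timestamp)
    # The upgrade may end while requests are still failing.
    if len(run) >= min_failures:
        outages.append((run[0], run[-1]))
    return outages


if __name__ == "__main__":
    # Made-up samples: one isolated failure, then two longer failure runs.
    samples = [(0, True), (1, False), (2, True), (3, False), (4, False),
               (5, False), (6, True), (7, False), (8, False), (9, False)]
    print(find_outages(samples))
```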

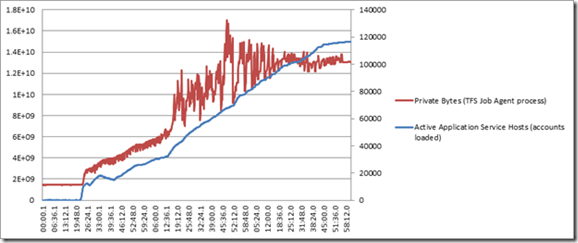

In a recent run, we found that we were processing upgrade jobs “too fast”, resulting in loading account-specific data into memory for all 120,000 accounts in our test deployment. This eventually led to running out of memory. Here’s a graph showing how the number of accounts loaded went up to 120,000 accounts, driving the memory used up to 16 GB and running the process out of memory. The short-term fix was to throttle the rate of upgrade jobs, while the long-term fix was to manage the number of loaded accounts by memory consumption as well as time, and start unloading accounts on the job agent as we see memory pressure.
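The long-term fix, bounding loaded accounts by both memory and idle time, can be illustrated with a small least-recently-used cache. This Python sketch is an assumption about the general shape of such a mechanism, not the job agent's actual implementation; the class name, the byte sizes, and the limits are all hypothetical.

```python
from collections import OrderedDict


class AccountCache:
    """Keeps loaded accounts within a memory budget and an idle-time limit."""

    def __init__(self, max_bytes, max_idle_seconds):
        self.max_bytes = max_bytes
        self.max_idle_seconds = max_idle_seconds
        self.used_bytes = 0
        # account_id -> (size_bytes, last_used); least recently used first.
        self._entries = OrderedDict()

    def touch(self, account_id, size_bytes, now):
        """Load (or reuse) an account, then unload others as needed.

        `now` is assumed to be non-decreasing across calls.
        """
        if account_id in self._entries:
            old_size, _ = self._entries.pop(account_id)
            self.used_bytes -= old_size
        self._entries[account_id] = (size_bytes, now)
        self.used_bytes += size_bytes
        self._evict(now)

    def _evict(self, now):
        # Unload from the least recently used end while either limit is hit.
        while self._entries:
            account_id, (size, last_used) = next(iter(self._entries.items()))
            too_idle = now - last_used > self.max_idle_seconds
            over_budget = self.used_bytes > self.max_bytes
            if not (too_idle or over_budget):
                break
            self._entries.popitem(last=False)
            self.used_bytes -= size

    def loaded(self):
        """Account ids currently in memory, least recently used first."""
        return list(self._entries)
```

With a time limit alone, a burst of upgrade jobs can load every account before any of them has been idle long enough to unload, which is the failure described above. The memory budget caps the total regardless of how fast jobs arrive.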

Directed Load Testing

Another set of load tests we run are directed tests, aimed at isolating a particular component or service for performance, stress, and scale testing. We have found that targeting load testing at a particular component is a much more effective way to quickly find stress-related bugs in that component, vs. just adding that component into the larger load test that hits everything on the service.

By focusing a load test on a particular component or service we are able to drive more load to it and thus reduce the time to find timing or concurrency related bugs. It also isolates the bugs to that particular service, which helps with debugging. Whenever we are bringing on a new service or introducing a new architectural pattern to an existing service, the team bringing the change needs to do this type of targeted testing before bringing it to production. Recent examples of these changes are introducing Shared Platform Services and the load testing service itself. An example of an architectural change was the introduction of Team Rooms to TFS, or the switch from PaaS build machines to IaaS build machines.

Testing in Production

The larger our service becomes, the harder and costlier it is to simulate scale in our load tests. A fundamental tenet is that we introduce change incrementally to avoid large destabilizing changes. In addition to preventing performance and scale issues, we are spending a lot more time analyzing the rich data we are getting back from production. One of the challenges we face is that we collect so much data: how do we make sense of it all?

We are systematically analyzing the following data to look for regressions:

- Activity log analysis. The activity log logs all calls to a service. We look for:

  - Increases in failed command counts

  - Increases in response times, which indicate a perf regression

  - Increases in call counts, which indicate a client chattiness regression

- CPU and memory usage. We analyze CPU perf counters to look for increased CPU usage after a deployment, and memory to look for increased memory usage as well as memory leaks

- PerfView analysis. We are now taking PerfView dumps in production after each deployment to find memory regressions and CPU regressions in production. PerfView has become our favorite tool for doing live site debugging.
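As a very rough illustration of the memory leak check in the list above, the trend of memory counter samples after a deployment can be estimated with a least-squares slope. This Python sketch is a simplistic heuristic with an invented threshold and made-up samples; it is not a description of the team's tooling and is no substitute for PerfView.

```python
def slope_per_hour(samples):
    """Least-squares slope of (hours_since_deploy, megabytes) samples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    if sxx == 0:
        raise ValueError("samples must span more than one point in time")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    return sxy / sxx


def looks_like_leak(samples, threshold_mb_per_hour=50.0):
    """Flag steady growth above an (arbitrary, assumed) threshold."""
    return slope_per_hour(samples) > threshold_mb_per_hour


if __name__ == "__main__":
    # Hypothetical samples: memory climbing steadily after a deployment.
    growing = [(0, 1000), (1, 1100), (2, 1200), (3, 1300)]
    print(slope_per_hour(growing), looks_like_leak(growing))
```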

Generally we want to do enough prior to deployment to ensure the deployment is successful, but we also recognize that we can improve the service a lot by systematically analyzing the rich set of data we are collecting and using that data to drive improvements back into the service.

Conclusion

You can see from this paper how intrinsic load testing is to our software development lifecycle. Regularly running load tests is critical to maintaining a high level of quality during our sprints and deploying every three weeks. The Visual Studio Online load testing service has been a welcome addition to our toolset, as it enables us to reliably generate large loads to our test environments in Azure.

Comments

Anonymous

December 02, 2013

Hi, just to make sure: are the load tests meant to be executed in the cloud, or on-premises as well?

Anonymous

December 03, 2013

The comment has been removed

Anonymous

December 03, 2013

@Assaf, the scripts we use to simulate users run in load tests both on-premises and in the cloud. Some of our load tests are used both in the cloud and on premises, like our server-perf load test. Some are designed to be used only in the cloud, such as our "go-big" tests, which are really targeted at the unique scale we have in the cloud.

Anonymous

January 20, 2014

Currently zero downtime is for the vsonline version of TFS. Are there also ways to achieve zero downtime for the on-premises variant? With the increased speed of updates for TFS, this is something that would help companies to "keep up".

Anonymous

May 06, 2014

Hi, is there any way of giving inputs during run time in a load test?

Anonymous

May 15, 2014

The comment has been removed

Anonymous

July 10, 2014

Hi, does load testing in Visual Studio only work with VS2013, or are earlier versions also accepted? Regards

Anonymous

October 22, 2014

Does anyone have any ideas on how to trigger a load test from a TFS build? My current problem is detailed here: stackoverflow.com/.../running-loadtests-against-visual-studios-online-from-tfs-build