Securely Upload to Azure Storage with Angular

This post will show you how to securely upload blob content to Azure Storage from an Angular app.

The source code for this solution is available at https://github.com/kaevans/globalscaledemo.

Background

Our team has been busy the past few months traveling the globe and hosting readiness workshops for our top global system integrator partners. One of the sessions that I wrote for the workshop, Architecting Global Scale Solutions, includes a demonstration of an application that operates on a global scale… you can deploy it to as many Azure regions as you want and it will scale horizontally across all of them. One of the themes in the talk is about performance and the things that limit the ability to scale linearly as the amount of work increases. The scenario is a web application that lets authenticated users upload a photograph.

Think about the problem for a moment and it will be evident. If a user uploads a photo to our web server, then this can have drastic repercussions on our web application. Our web application would need to read the HTTP request, de-serialize the byte stream, and then move that stream to some type of storage. If we stream to the local disk, then we are potentially creating a bottleneck for IO on the local disk. If we load the image into memory, then this will cause performance issues as the amount of work increases. If we save to some external store, we likely first have to de-serialize into memory and then call to an external network resource… we now have a potentially unavailable service along with a network bottleneck. Ideally, we would love to avoid dealing with the problem at all and enable users to upload directly to storage. But how do we do this securely?

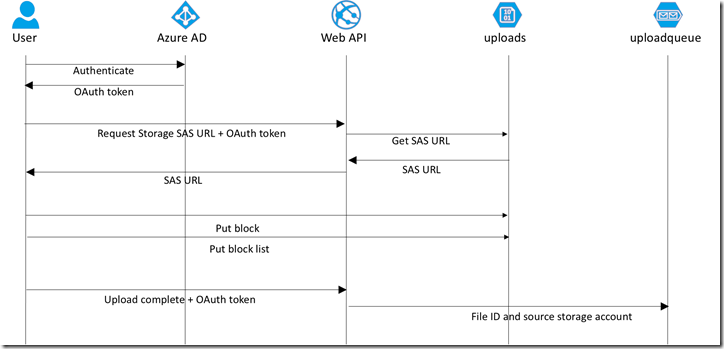

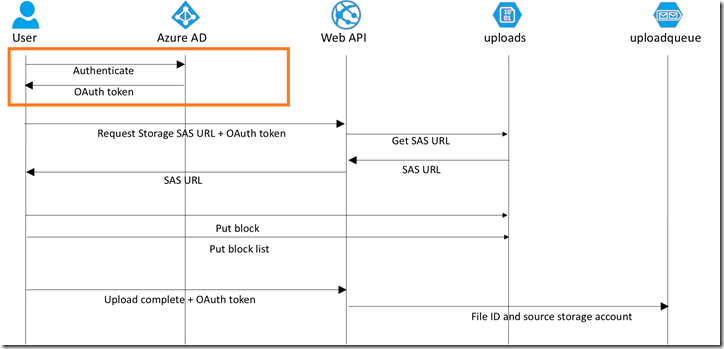

Our solution will authenticate users with Azure AD. At the time of writing, Azure Storage does not provide per-user authentication and does not integrate with Azure AD. Rather than put our storage key in JavaScript (where any user could easily obtain it and disclose it to non-authenticated users), we want to use an ad-hoc Shared Access Signature (SAS). This allows us to grant a limited permission set on a limited set of resources for a limited amount of time. In our case, we will allow only writes to a single blob container for 2 minutes. To obtain that SAS URL, the user has to be authenticated, which we will handle using the Active Directory Authentication Library (ADAL) for JavaScript.
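To make "limited permissions, limited resources, limited time" concrete, here is a minimal sketch that reads those three limits back out of a SAS token. The field names (sp, sr, se) come from the Azure Storage SAS query-string format; describeSasToken is a hypothetical helper of mine, and the sample token is made up with a fake signature.

```javascript
// Summarize the limits encoded in a SAS token's query string.
// sp = permissions, sr = resource type, se = expiry time (UTC).
function describeSasToken(sasToken, now) {
  const params = new URLSearchParams(sasToken.replace(/^\?/, ''));
  const expiry = new Date(params.get('se'));
  return {
    permissions: params.get('sp'),   // "w" means write-only
    resource: params.get('sr'),      // "c" = container, "b" = blob
    expiresAt: expiry.toISOString(),
    isExpired: expiry.getTime() <= now.getTime()
  };
}

// Made-up token: write-only, container-scoped, expires at 12:02 UTC.
const sample = '?sv=2015-04-05&sr=c&sp=w&se=2015-11-24T12%3A02%3A00Z&sig=FAKE';
const summary = describeSasToken(sample, new Date('2015-11-24T12:00:00Z'));
console.log(summary);
```

Nothing here is secret: the token is just a signed description of what the holder may do, which is why it is safe to hand to the browser when the storage key is not.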

Patterns and Practices

If you are working with distributed systems, you should be aware of the work that the Patterns and Practices team has done to identify common patterns and prescriptive architecture guidance for cloud applications. This post will leverage two of those patterns: Federated Identity Pattern and Valet Key Pattern. I highly suggest that you spend time reading through these patterns, if for no other reason than to become familiar with problems you weren’t aware existed and to help you design and build more reliable systems.

Authenticating Users

Rather than start from scratch, I started from an existing repository (https://github.com/Azure-Samples/active-directory-angularjs-singlepageapp) that shows how to Integrate Azure AD into an AngularJS single page app. That repository has a complete walkthrough of how to create the single page app and how to register the app in Azure AD. For brevity’s sake, refer to that repo to understand how to create an Angular app that authenticates to Azure AD. I have also written an example, The API Economy: Consuming Our Web API from a Single Page App, which shows the value of using Azure AD to authenticate to a custom Web API as well as downstream services such as Office365.

In the active-directory-angularjs-singlepageapp repo you will see that you have to hard-code the client ID into the app.js file. My app is automatically deployed from GitHub, and I don’t want to create a process that updates the app.js file. Instead, I’d rather just update the appSettings for my Azure web app. To enable this, I created a model class that contains the information needed in the app.js file for ADAL.js.

ADALConfigResponse.cs

- using GlobalDemo.DAL;

- using System;

- using System.Collections.Generic;

- using System.Linq;

- using System.Web;

- namespace GlobalDemo.Web.Models

- {

- public class ADALConfigResponse

- {

- public string ClientId { get { return SettingsHelper.Audience; } }

- public string Tenant { get { return SettingsHelper.Tenant; } }

- public string Instance { get { return "https://login.microsoftonline.com/"; } }

- }

- }

Next, I created a Web API in my project that will read the appSettings and return the client ID.

ADALConfigController.cs

- using GlobalDemo.Web.Models;

- using System;

- using System.Collections.Generic;

- using System.Linq;

- using System.Net;

- using System.Net.Http;

- using System.Threading.Tasks;

- using System.Web.Http;

- using System.Web.Http.Description;

- namespace GlobalDemo.Web.Controllers

- {

- public class ADALConfigController : ApiController

- {

- [ResponseType(typeof(ADALConfigResponse))]

- public IHttpActionResult Get()

- {

- return Ok(new ADALConfigResponse());

- }

- }

- }

I suck at Angular (and JavaScript in general), so I took the blunt approach: I copied adal-angular.js to a new file, “adal-angular-modified.js”, and changed it to call the Web API endpoint (ADALConfigController.cs above) in my web application, which returns the configuration information. Only the relevant portions are shown here.

adal-angular-modified.js

- /*

- Modified 11/24/2015 by @kaevans

- Call a service to configure ADAL instead of

- providing a hard coded value

- */

- if (configOptions.clientId) {

- //Use the value provided by the user

- }

- else {

- //HACK. Call a service using jQuery. Got sick of fighting

- //angular injector.

- var myConfig = { instance: "", clientId: "", tenant: "" };

- var resultText = $.ajax(

- {

- url: '/api/adalconfig',

- dataType: 'json',

- async: false

- }).responseText;

- console.log(resultText);

- myConfig = $.parseJSON(resultText);

- configOptions.clientId = myConfig.ClientId;

- configOptions.instance = myConfig.Instance;

- configOptions.tenant = myConfig.Tenant;

- }

- /* End of modification */

There’s probably a much cleaner, more “Angular-y” way to do this, but this worked. If anyone has a better approach, please submit a pull request! Now I don’t have to embed the configuration details in the JavaScript file itself; my Web API provides them for me.
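The mapping step in that hack is easy to pull out into a plain function, which at least makes it testable. This is only a sketch: mergeAdalConfig is a hypothetical helper (it is not in the repo), the tenant and client ID values are made up, and unlike my modification above it falls back field by field instead of keying everything off clientId.

```javascript
// Map the PascalCase JSON returned by /api/adalconfig onto the camelCase
// options that ADAL expects. Values already set by the caller win.
function mergeAdalConfig(configOptions, serviceResponse) {
  return Object.assign({}, configOptions, {
    instance: configOptions.instance || serviceResponse.Instance,
    tenant: configOptions.tenant || serviceResponse.Tenant,
    clientId: configOptions.clientId || serviceResponse.ClientId
  });
}

// Made-up response shaped like ADALConfigResponse.
const fromService = {
  ClientId: '00000000-0000-0000-0000-000000000000',
  Tenant: 'contoso.onmicrosoft.com',
  Instance: 'https://login.microsoftonline.com/'
};

const merged = mergeAdalConfig(
  { instance: '', tenant: '', clientId: '', cacheLocation: 'localStorage' },
  fromService
);
console.log(merged.tenant, merged.cacheLocation);
```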

app.js

- 'use strict';

- angular.module('todoApp', ['ngRoute','AdalAngular','azureBlobUpload'])

- .config(['$routeProvider', '$httpProvider', 'adalAuthenticationServiceProvider', function ($routeProvider, $httpProvider, adalProvider) {

- $routeProvider.when("/Home", {

- controller: "homeCtrl",

- templateUrl: "/App/Views/Home.html",

- }).when("/MyPhotos", {

- controller: "myPhotosCtrl",

- templateUrl: "/App/Views/MyPhotos.html",

- requireADLogin: true,

- }).when("/Upload", {

- controller: "uploadCtrl",

- templateUrl: "/App/Views/Upload.html",

- requireADLogin: true,

- }).when("/UserData", {

- controller: "userDataCtrl",

- templateUrl: "/App/Views/UserData.html",

- }).otherwise({ redirectTo: "/Home" });

- adalProvider.init(

- {

- instance: '',

- tenant: '',

- clientId: '',

- extraQueryParameter: 'nux=1',

- cacheLocation: 'localStorage', // enable this for IE, as sessionStorage does not work for localhost.

- },

- $httpProvider

- );

- }]);

The user is now required to authenticate with Azure AD when accessing Upload.html or MyPhotos.html. This obtains an OAuth token using the implicit grant flow (make sure you follow the directions in the active-directory-angularjs-singlepageapp repo to update the manifest and enable this). This is an example of the Federated Identity Pattern: it moves the authentication and user administration functions out of your app, so your app can focus on its core functions.

Setting Up Storage and CORS

In order for our application to work, we need to make sure that the storage containers exist and that the storage account enables CORS (which is disabled by default). To do this, I created a class, StorageConfig.cs, that is called when the application starts. You might want to provide a more restricted CORS configuration. The browser upload only issues PUT requests, so our app doesn’t need all of the CORS methods and we should probably remove a few (like GET, HEAD, and POST). Further, we could restrict the allowed origins as well.

Setting up Storage

- using Microsoft.WindowsAzure.Storage;

- using Microsoft.WindowsAzure.Storage.Blob;

- using Microsoft.WindowsAzure.Storage.Shared.Protocol;

- using System.Collections.Generic;

- using System.Threading.Tasks;

- namespace GlobalDemo.Web

- {

- public static class StorageConfig

- {

- /// <summary>

- /// Configures the storage account used by the application.

- /// Configures to support CORS, and creates the blob, table,

- /// and queue needed for the app if they don't already exist.

- /// </summary>

- /// <param name="storageConnectionString">The storage account connection string</param>

- /// <returns></returns>

- public static async Task Configure(string storageConnectionString)

- {

- var account = CloudStorageAccount.Parse(storageConnectionString);

- var client = account.CreateCloudBlobClient();

- var serviceProperties = await client.GetServicePropertiesAsync();

- //Configure CORS

- serviceProperties.Cors = new CorsProperties();

- serviceProperties.Cors.CorsRules.Add(new CorsRule()

- {

- AllowedHeaders = new List<string>() { "*" },

- AllowedMethods = CorsHttpMethods.Put | CorsHttpMethods.Get | CorsHttpMethods.Head | CorsHttpMethods.Post,

- AllowedOrigins = new List<string>() { "*" },

- ExposedHeaders = new List<string>() { "*" },

- MaxAgeInSeconds = 3600 // 60 minutes

- });

- await client.SetServicePropertiesAsync(serviceProperties);

- //Create the public container if it doesn't exist as publicly readable

- var container = client.GetContainerReference(GlobalDemo.DAL.Azure.StorageConfig.PhotosBlobContainerName);

- await container.CreateIfNotExistsAsync(BlobContainerPublicAccessType.Container, new BlobRequestOptions(), new OperationContext { LogLevel = LogLevel.Informational });

- //Create the thumbnail container if it doesn't exist as publicly readable

- container = client.GetContainerReference(GlobalDemo.DAL.Azure.StorageConfig.ThumbnailsBlobContainerName);

- await container.CreateIfNotExistsAsync(BlobContainerPublicAccessType.Container, new BlobRequestOptions(), new OperationContext { LogLevel = LogLevel.Informational });

- //Create the private user uploads container if it doesn't exist

- container = client.GetContainerReference(GlobalDemo.DAL.Azure.StorageConfig.UserUploadBlobContainerName);

- await container.CreateIfNotExistsAsync();

- //Create the "uploadqueue" queue if it doesn't exist

- var queueClient = account.CreateCloudQueueClient();

- var queue = queueClient.GetQueueReference(GlobalDemo.DAL.Azure.StorageConfig.QueueName);

- await queue.CreateIfNotExistsAsync();

- //Create the "photos" table if it doesn't exist

- var tableClient = account.CreateCloudTableClient();

- var table = tableClient.GetTableReference(GlobalDemo.DAL.Azure.StorageConfig.TableName);

- await table.CreateIfNotExistsAsync();

- }

- }

- }

Something to point out… our client will upload to the container named “uploads” (UserUploadBlobContainerName, the third container created above). The other two containers are marked as public access, but this one is not. It is only available by going through our server-side application first.
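Back to the CORS rule for a moment. The browser upload in this post only issues PUT requests (Put Block and Put Block List), so a tighter rule could allow just PUT from just our own origin. The sketch below is a simplified model of how such a rule gets matched, not Azure Storage's actual implementation; isAllowedByRule and the origin https://globaldemo.example.com are made up for illustration.

```javascript
// Simplified model: a request passes if both its origin and its method
// are listed in the rule. "*" in allowedOrigins matches any origin.
function isAllowedByRule(rule, origin, method) {
  const originOk = rule.allowedOrigins.includes('*') ||
                   rule.allowedOrigins.includes(origin);
  const methodOk = rule.allowedMethods.includes(method.toUpperCase());
  return originOk && methodOk;
}

// What the demo configures today: every origin, four methods.
const openRule = {
  allowedOrigins: ['*'],
  allowedMethods: ['PUT', 'GET', 'HEAD', 'POST']
};

// A tighter alternative: one origin, one method.
const tightRule = {
  allowedOrigins: ['https://globaldemo.example.com'],
  allowedMethods: ['PUT']
};

console.log(isAllowedByRule(openRule, 'https://evil.example.net', 'GET'));
console.log(isAllowedByRule(tightRule, 'https://evil.example.net', 'PUT'));
console.log(isAllowedByRule(tightRule, 'https://globaldemo.example.com', 'PUT'));
```

Keep in mind that CORS is enforced by the browser; it narrows which pages can use the SAS URL from script, but the SAS itself is still what actually protects the container.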

Obtaining a SAS URL

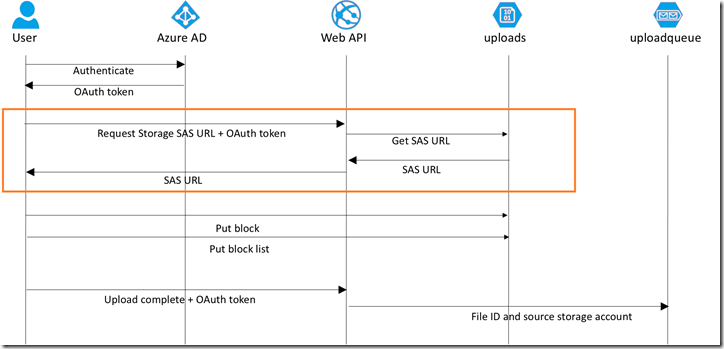

The JavaScript client needs to obtain a SAS URL. We only want authenticated users to have the ability to upload. We will use the Valet Key Pattern to obtain a SAS URL and return it to clients via an authenticated Web API.

We do that through a Web API controller, UploadController.cs.

UploadController.cs

- using GlobalDemo.DAL;

- using GlobalDemo.DAL.Azure;

- using GlobalDemo.Web.Models;

- using Microsoft.WindowsAzure.Storage;

- using System;

- using System.Configuration;

- using System.Security.Claims;

- using System.Text.RegularExpressions;

- using System.Threading.Tasks;

- using System.Web.Http;

- using System.Web.Http.Description;

- namespace GlobalDemo.Web.Controllers

- {

- [Authorize]

- public class UploadController : ApiController

- {

- /// <summary>

- /// Gets a SAS token to add files to blob storage.

- /// The SAS token is good for 2 minutes.

- /// </summary>

- /// <returns>String for the SAS token</returns>

- [ResponseType(typeof(StorageResponse))]

- [HttpGet]

- [Route("api/upload/{extension}")]

- public IHttpActionResult Get(string extension)

- {

- Regex rg = new Regex(@"^[a-zA-Z0-9]{1,3}$");

- if(!rg.IsMatch(extension))

- {

- throw new HttpResponseException(System.Net.HttpStatusCode.BadRequest);

- }

- string connectionString = SettingsHelper.LocalStorageConnectionString;

- var account = CloudStorageAccount.Parse(connectionString);

- StorageRepository repo = new StorageRepository(account);

- //Get the SAS token for the container. Allow writes for 2 minutes

- var sasToken = repo.GetBlobContainerSASToken();

- //Get the blob so we can get the full path including container name

- var id = Guid.NewGuid().ToString();

- var newFileName = id + "." + extension;

- string blobURL = repo.GetBlobURI(

- newFileName,

- DAL.Azure.StorageConfig.UserUploadBlobContainerName).ToString();

- //This function determines which storage account the blob will be

- //uploaded to, enabling the future possibility of sharding across

- //multiple storage accounts.

- var client = account.CreateCloudBlobClient();

- var response = new StorageResponse

- {

- ID = id,

- StorageAccountName = client.BaseUri.Authority.Split('.')[0],

- BlobURL = blobURL,

- BlobSASToken = sasToken,

- ServerFileName = newFileName

- };

- return Ok(response);

- }

Rather than put all of the logic into the controller itself, I created a repository class as a dumping ground for all operations related to storage.

GetBlobContainerSASToken

- /// <summary>

- /// Gets a blob container's SAS token

- /// </summary>

- /// <param name="containerName">The container name</param>

- /// <param name="permissions">The permissions</param>

- /// <param name="minutes">Number of minutes the permissions are effective</param>

- /// <returns>System.String - The SAS token</returns>

- public string GetBlobContainerSASToken(

- string containerName,

- SharedAccessBlobPermissions permissions,

- int minutes)

- {

- var client = _account.CreateCloudBlobClient();

- var policy = new SharedAccessBlobPolicy();

- policy.Permissions = permissions;

- policy.SharedAccessStartTime = System.DateTime.UtcNow.AddMinutes(-10);

- //Expire after the requested number of minutes

- policy.SharedAccessExpiryTime = System.DateTime.UtcNow.AddMinutes(minutes);

- var container = client.GetContainerReference(containerName);

- //Get the SAS token for the container.

- var sasToken = container.GetSharedAccessSignature(policy);

- return sasToken;

- }

- /// <summary>

- /// Gets the blob container's SAS token without any parameters.

- /// Defaults are Write permissions for 2 minutes

- /// </summary>

- /// <returns>System.String - the SAS token</returns>

- public string GetBlobContainerSASToken()

- {

- return GetBlobContainerSASToken(

- DAL.Azure.StorageConfig.UserUploadBlobContainerName,

- SharedAccessBlobPermissions.Write,

- 2);

- }
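The validity window is worth a closer look: the start time is backdated by 10 minutes so that small clock differences between servers don't make a fresh token look "not yet valid", and the expiry is what keeps the token short-lived. Here is the same arithmetic as a small JavaScript sketch; sasWindow is a hypothetical helper, and the 2-minute lifetime and 10-minute backdate simply mirror the values used in this post.

```javascript
// Compute a SAS validity window as ISO 8601 UTC timestamps.
// skewMinutes backdates the start; minutes sets the lifetime from "now".
function sasWindow(now, minutes, skewMinutes) {
  const msPerMinute = 60 * 1000;
  return {
    start: new Date(now.getTime() - skewMinutes * msPerMinute).toISOString(),
    expiry: new Date(now.getTime() + minutes * msPerMinute).toISOString()
  };
}

const validity = sasWindow(new Date('2015-11-24T12:00:00Z'), 2, 10);
console.log(validity.start);   // 2015-11-24T11:50:00.000Z
console.log(validity.expiry);  // 2015-11-24T12:02:00.000Z
```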

Notice that the Authorize attribute is applied to the UploadController class. This ensures that only authenticated users can access this API, so you must have an OAuth token that the app trusts in order to obtain the SAS URL. That OAuth token is validated by the code in Startup.Auth.cs, which sets up Bearer authentication.

Bearer Authentication

- using System;

- using System.Threading.Tasks;

- using Microsoft.Owin;

- using Owin;

- using Microsoft.Owin.Security.ActiveDirectory;

- using System.Configuration;

- using GlobalDemo.DAL;

- namespace GlobalDemo.Web

- {

- public partial class Startup

- {

- public void ConfigureAuth(IAppBuilder app)

- {

- app.UseWindowsAzureActiveDirectoryBearerAuthentication(

- new WindowsAzureActiveDirectoryBearerAuthenticationOptions

- {

- Tenant = SettingsHelper.Tenant,

- TokenValidationParameters = new System.IdentityModel.Tokens.TokenValidationParameters

- {

- ValidAudience = SettingsHelper.Audience

- }

- });

- }

- }

- }

The Angular client can now call the service to obtain the SAS URL.

uploadSvc.js

- 'use strict';

- angular.module('todoApp')

- .factory('uploadSvc', ['$http', function ($http) {

- return {

- getSASToken: function (extension) {

- return $http.get('/api/Upload/' + extension);

- },

- postItem: function (item) {

- return $http.post('/api/Upload/', item);

- }

- };

- }]);

This is the Valet Key Pattern, where the client doesn’t actually get the storage key, but rather a short-lived SAS URL with limited permission to access a resource.
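The "valet key" the client ends up with is just the blob URL plus the SAS token as its query string. The sketch below shows how the two values from the StorageResponse could be combined. How azure-blob-upload.js does this internally isn't shown in this post, so treat buildUploadUrl as a hypothetical helper; the account name and token are made up. As far as I know the .NET storage library returns the token with a leading “?”, so the sketch accepts it with or without one.

```javascript
// Combine a blob URL and a SAS token into a single signed URL.
function buildUploadUrl(blobUrl, sasToken) {
  const query = sasToken.replace(/^\?/, '');          // drop a leading "?"
  const separator = blobUrl.includes('?') ? '&' : '?';
  return blobUrl + separator + query;
}

const signedUrl = buildUploadUrl(
  'https://myaccount.blob.core.windows.net/uploads/abc123.jpg',
  '?sv=2015-04-05&sr=c&sp=w&se=2015-11-24T12%3A02%3A00Z&sig=FAKE'
);
console.log(signedUrl);
```

Anyone holding that URL can write to the container until the token expires, which is exactly why the lifetime and permissions are kept so small.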

The Angular Client

The client references a series of scripts, including the app.js and uploadSvc.js files that we’ve shown previously.

JavaScript references

- <script src="https://ajax.googleapis.com/ajax/libs/jquery/1.11.1/jquery.min.js"></script>

- <script src="https://ajax.googleapis.com/ajax/libs/angularjs/1.2.25/angular.min.js"></script>

- <script src="https://code.angularjs.org/1.2.25/angular-route.js"></script>

- <script src="https://maxcdn.bootstrapcdn.com/bootstrap/3.2.0/js/bootstrap.min.js"></script>

- <script src="https://secure.aadcdn.microsoftonline-p.com/lib/1.0.7/js/adal.min.js"></script>

- <!-- Modified version of adal-angular -->

- <script src="App/Scripts/adal-angular-modified.js"></script>

- <script src="App/Scripts/app.js"></script>

- <script src="App/Scripts/azure-blob-upload.js"></script>

- <script src="App/Scripts/homeCtrl.js"></script>

- <script src="App/Scripts/homeSvc.js"></script>

- <script src="App/Scripts/userDataCtrl.js"></script>

- <script src="App/Scripts/myPhotosCtrl.js"></script>

- <script src="App/Scripts/myPhotosSvc.js"></script>

- <script src="App/Scripts/uploadCtrl.js"></script>

- <script src="App/Scripts/uploadSvc.js"></script>

There is one to call out: azure-blob-upload.js. That is an Angular library by Stephen Brannan that wraps the Azure Storage blob upload operations into an Angular module. We create an Angular controller that calls the upload service to obtain the SAS URL, then calls the azureBlob module to actually upload to Azure Storage.

uploadCtrl.js

- 'use strict';

- angular.module('todoApp')

- .controller('uploadCtrl', ['$scope', '$location', 'azureBlob', 'uploadSvc', 'adalAuthenticationService', function ($scope, $location, azureBlob, uploadSvc, adalService) {

- $scope.error = "";

- $scope.sasToken = "";

- $scope.config = null;

- $scope.uploadComplete = false;

- $scope.progress = 0;

- $scope.cancellationToken = null;

- $scope.fileChanged = function () {

- console.log($scope.file.name);

- };

- $scope.upload = function () {

- var myFileTemp = document.getElementById("myFile");

- console.log("File name: " + myFileTemp.files[0].name);

- var extension = myFileTemp.files[0].name.split('.').pop();

- uploadSvc.getSASToken(extension).success(function (results) {

- console.log("SASToken: " + results.BlobSASToken);

- $scope.config =

- {

- baseUrl: results.BlobURL,

- sasToken: results.BlobSASToken,

- file: myFileTemp.files[0],

- blockSize: 1024 * 32,

- progress: function (amount) {

- console.log("Progress - " + amount);

- $scope.progress = amount;

- console.log(amount);

- },

- complete: function () {

- console.log("Completed!");

- $scope.progress = 99.99;

- uploadSvc.postItem(

- {

- 'ID' : results.ID,

- 'ServerFileName': results.ServerFileName,

- 'StorageAccountName': results.StorageAccountName,

- 'BlobURL': results.BlobURL

- }).success(function () {

- $scope.uploadComplete = true;

- }).error(function (err) {

- console.log("Error - " + err);

- $scope.error = err;

- $scope.uploadComplete = false;

- });

- },

- error: function (data, status, err, config) {

- console.log("Error - " + data);

- $scope.error = data;

- }

- };

- azureBlob.upload($scope.config);

- }).error(function (err) {

- $scope.error = err;

- });

- };

- }])
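One thing to watch in the controller above: the extension comes from `name.split('.').pop()`, while the Web API only accepts 1 to 3 alphanumeric characters (the regular expression in UploadController.cs). A file named beach.jpeg, or one with no extension at all, gets a 400 back. Here is a small sketch of a client-side check that mirrors the server's rule before calling the service. getUploadExtension is a hypothetical helper, not part of the repo.

```javascript
// Mirrors the server-side rule in UploadController.cs: 1 to 3 alphanumerics.
const EXTENSION_PATTERN = /^[a-zA-Z0-9]{1,3}$/;

// Returns the extension if the Web API would accept it, otherwise null.
function getUploadExtension(fileName) {
  const dot = fileName.lastIndexOf('.');
  if (dot < 0) return null;                 // no extension at all
  const extension = fileName.slice(dot + 1);
  return EXTENSION_PATTERN.test(extension) ? extension : null;
}

console.log(getUploadExtension('beach.jpg'));   // "jpg"
console.log(getUploadExtension('beach.jpeg'));  // null (4 characters)
console.log(getUploadExtension('README'));      // null (no dot)
```

With a check like this, the controller could set $scope.error right away instead of waiting for the round trip to fail.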

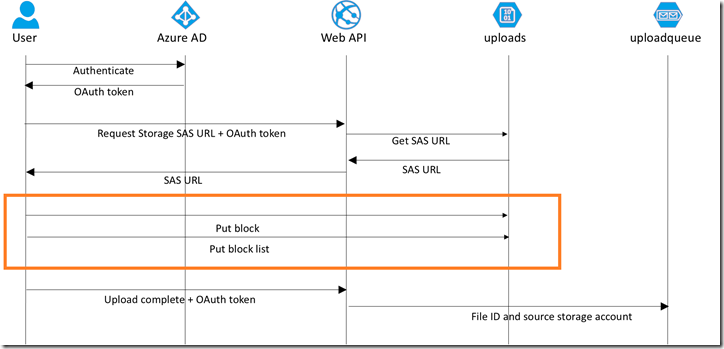

The upload is performed by the call to azureBlob.upload($scope.config). Inside the azureBlob module, it uses the Put Block and Put Block List APIs for Azure Storage to upload a block blob. We can do that from a JavaScript client because we’ve enabled CORS.
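If you are curious what a block upload looks like on the wire, here is a rough sketch of the bookkeeping: slice the file into 32 KB ranges, give each block a Base64 ID of equal length, PUT each range with comp=block, then commit the ordered list with comp=blocklist. The query parameters and the BlockList XML shape come from the Azure Storage REST API; the function names and the ID scheme are my own assumptions, not what azure-blob-upload.js actually does.

```javascript
// Base64-encode ASCII text in the browser or in Node.
function toBase64(text) {
  return typeof btoa === 'function'
    ? btoa(text)
    : Buffer.from(text, 'utf8').toString('base64');
}

// Split a file of fileSize bytes into byte ranges of at most blockSize.
function planBlocks(fileSize, blockSize) {
  const blocks = [];
  for (let start = 0, i = 0; start < fileSize; start += blockSize, i++) {
    const end = Math.min(start + blockSize, fileSize);
    // Zero-padding keeps every block ID the same length.
    const blockId = toBase64('block-' + String(i).padStart(6, '0'));
    blocks.push({ blockId: blockId, start: start, end: end });
  }
  return blocks;
}

// URL for uploading one block (Put Block).
function putBlockUrl(blobUrl, sasToken, blockId) {
  return blobUrl + '?' + sasToken.replace(/^\?/, '') +
    '&comp=block&blockid=' + encodeURIComponent(blockId);
}

// Request body for committing the blocks in order (Put Block List).
function blockListXml(blocks) {
  const items = blocks
    .map(function (b) { return '<Latest>' + b.blockId + '</Latest>'; })
    .join('');
  return '<?xml version="1.0" encoding="utf-8"?><BlockList>' + items + '</BlockList>';
}

const plan = planBlocks(100000, 1024 * 32);
console.log(plan.length, plan[plan.length - 1]);
```

A small block size like 32 KB means more requests, but also finer-grained progress callbacks, which is handy for a demo.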

When the upload is complete, the Angular client sends a message back to the Web API to indicate that the blob is uploaded and available for further processing (the uploadSvc.postItem call in the complete callback). That Web API looks like this:

CompleteRequest

- /// <summary>

- /// Notify the backend that a new file was uploaded

- /// by sending a queue message.

- /// </summary>

- /// <param name="item">Details of the uploaded blob to be processed</param>

- /// <returns>Void</returns>

- public async Task Post(CompleteRequest item)

- {

- string owner = ClaimsPrincipal.Current.FindFirst(ClaimTypes.NameIdentifier).Value;

- //Get the owner name field

- string ownerName = ClaimsPrincipal.Current.FindFirst("name").Value;

- //Replace any commas with periods

- ownerName = ownerName.Replace(',', '.');

- string message = string.Format("{0},{1},{2},{3}, {4}, {5}", item.ID, item.ServerFileName, item.StorageAccountName, owner, ownerName, item.BlobURL);

- //Send a queue message to each storage account registered

- //in AppSettings prefixed with "Storage"

- foreach (string key in ConfigurationManager.AppSettings.Keys)

- {

- if(key.ToLower().StartsWith("storage"))

- {

- //This is a storage configuration

- var repo = new StorageRepository(ConfigurationManager.AppSettings[key]);

- await repo.SendQueueMessageAsync(message);

- }

- }

- }

All this code is doing is sending a queue message to every storage account in appSettings, which allows us to send a message to every region around the world if we so desire.
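The reason for swapping commas in the owner name becomes obvious once you think about the other end of the queue. The consumer isn't shown in this post, so the sketch below assumes it simply splits the message on commas and trims each field. It is written in JavaScript to match the client code here (the real method is C#), and both function names are hypothetical.

```javascript
// Build the comma-delimited message, neutralizing commas in the display name.
function buildQueueMessage(item, owner, ownerName) {
  const safeName = ownerName.replace(/,/g, '.');
  return [item.ID, item.ServerFileName, item.StorageAccountName,
          owner, safeName, item.BlobURL].join(',');
}

// Assumed consumer side: split on commas and trim each field.
function parseQueueMessage(message) {
  const parts = message.split(',').map(function (p) { return p.trim(); });
  return {
    id: parts[0],
    serverFileName: parts[1],
    storageAccountName: parts[2],
    owner: parts[3],
    ownerName: parts[4],
    blobUrl: parts[5]
  };
}

const queued = buildQueueMessage(
  {
    ID: 'abc123',
    ServerFileName: 'abc123.jpg',
    StorageAccountName: 'myaccount',
    BlobURL: 'https://myaccount.blob.core.windows.net/uploads/abc123.jpg'
  },
  'owner-id',
  'Evans, Kirk'
);
console.log(queued);
console.log(parseQueueMessage(queued).ownerName);
```

Without the replacement, a name like "Evans, Kirk" would produce seven fields instead of six and shift the blob URL out of place.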

Update Your Code

I know quite a few developers have been taking advantage of ADAL.js for some time now. It bears explaining that this scenario didn’t work until very recently. A recent change in ADAL.js added a check to see whether a call is to the app backend or not. Prior to this fix, a call to Azure Storage from the Angular client would have failed because ADAL.js would have sent the OAuth token to storage, and Azure Storage doesn’t understand the OAuth token. If you have existing ADAL.js investments and want to add the ability to call additional services, you need to update your ADAL.js reference to at least 1.0.7 for this scenario to work.
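To illustrate the idea behind that check (and only the idea; this is a simplified model, not ADAL.js's actual code), the sketch below attaches the bearer token only when the request goes to the app's own origin. The names shouldAttachToken and buildHeaders and the origin https://globaldemo.example.com are made up for the example.

```javascript
// True when requestUrl resolves to the same origin as the app itself.
// Relative URLs such as "/api/Upload/jpg" resolve against the app origin.
function shouldAttachToken(requestUrl, appOrigin) {
  const base = new URL(appOrigin).origin;
  return new URL(requestUrl, base).origin === base;
}

// Only backend calls get the Authorization header; storage calls rely on
// the SAS token in the URL instead.
function buildHeaders(requestUrl, appOrigin, accessToken) {
  return shouldAttachToken(requestUrl, appOrigin)
    ? { Authorization: 'Bearer ' + accessToken }
    : {};
}

const appOrigin = 'https://globaldemo.example.com';
console.log(buildHeaders('/api/Upload/jpg', appOrigin, 'TOKEN'));
console.log(buildHeaders(
  'https://myaccount.blob.core.windows.net/uploads/abc123.jpg?sig=FAKE',
  appOrigin,
  'TOKEN'
));
```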

Download the Code

You can obtain the complete solution that includes the code accompanying this post at https://github.com/kaevans/globalscaledemo.

For More Information

https://github.com/kaevans/globalscaledemo – The code accompanying this post

Cloud Design Patterns: Prescriptive Architecture Guidance for Cloud Applications

Shared Access Signatures: Understanding the SAS Model