Troubleshooting Scenario 6 – Role Recycling After Running For Some Time

In Troubleshooting Scenario 2 we looked at a scenario where the role would recycle after running fine for some time due to a bug in a startup task triggered by a role recycle such as an OS update. This blog post will show another example of this same type of behavior, but with a different, and more difficult to find, root cause. This is a continuation of the troubleshooting series.

Symptom

Similar to what we looked at in Scenario 2, your role has been running fine for some time but seemingly without cause it enters a recycling state and the service health dashboard shows that everything is operating normally.

Get the Big Picture

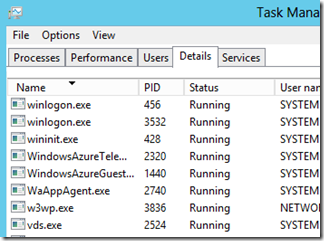

As we have in the other troubleshooting scenarios we will start by looking at Task Manager for a minute to see what processes are running, or starting and stopping. When we initially look we only see WindowsAzureGuestAgent.exe:

After watching for a minute or two we see WindowsAzureGuestAgent.exe consuming CPU cycles, so we know it is doing some work, but we don’t see any other processes. We know that the guest agent is supposed to start WaHostBootstrapper.exe, but we never see this process in task manager.

From the ‘Get the Big Picture’ section in Troubleshooting Scenario 1 we know that if we don’t see WaHostBootstrapper running then the problem is most likely within the Azure guest agent (WindowsAzureGuestAgent.exe) itself.

Guidelines for analyzing Windows Azure Guest Agent logs

From the diagnostic data blog post we know that there are 2 types of guest agent logs – App Agent Runtime Logs in AppAgentRuntime.log, and App Agent Heartbeat Logs in WaAppAgent.log. This section will briefly describe the content in the logs and how to look at them.

App Agent Runtime (AppAgentRuntime.log)

- These logs are written by WindowsAzureGuestAgent.exe and contain information about events happening within the guest agent and the VM. This includes information such as firewall configuration, role state changes, recycles, reboots, health status changes, role stops/starts, certificate configuration, etc.

- This log is useful to get a quick overview of the events happening over time to a role since it logs major changes to the role without logging heartbeats.

- If the guest agent is not able to start the role correctly (ie. a locked file preventing directory cleanup) then you will see it in this log.

The app agent runtime logs are normally filled with lots of error messages and hresults which look like problems, but are expected during the normal execution of the guest agent. This makes analyzing these logs very difficult and more art than science. Here are some general guidelines for how to look at these files and what messages to look for.

- Compare guest agent logs from a good and bad VM so that you can see where the differences are. This is probably the most effective way to rule out a lot of the noise and benign error messages.

- Scroll to the bottom of the log and start looking from there. The start and middle of the log includes a lot of basic setup messages that you are most likely not interested in. Any failures will be occurring later in the logs.

- Look for repeating patterns of messages. The Azure guest agent works like a state machine. If the current goal state is Running then the guest agent will continue retrying the same set of actions until it reaches that goal state.

- Look for _Context_Start and _Context_Ends messages. These correspond to the actions taken as the guest agent tries to reach the goal state. Every Context_Start will have a Context_End. A context can contain subcontexts, so you can see multiple Context_Start events before you see a Context_End event.

- Lines that begin with a “<-“ are function returns, along with the hresult being returned. So a line of “<- RuntimepDestroyResource” means that the function RuntimepDestroyResource is returning. A series of lines in a row showing “<- {some function}” can be looked at much like a callstack.

- The normal order of Context actions are (indented to show sub-contexts):

- AgentFirewallInitialize

- RuntimeHttpMonitor

- AgentCreateContainer

- AgentpCreateContainerWorker

- AgentCreateContainer

- SendConfig

- StartContainer

- AgentpStartContainerWorker

- GetTransportCertificate

- SendConfig

- StartRole

- AgentpStartRoleWorker

- The _Context_Ends should always have a Return Value of 00000000 indicating success. If a context in the log does not have a success return value, then that is the context to focus on for the source of the problem. You can typically trace the same failed HRESULT back up in the log to see where it originates.

- Some common entries in the log file that look like failures, but can be ignored:

- {most HRESULTS, unless they are part of a CONTEXT_END}

- Failed to remove CIS from Lsa. (or Failed to remove CIS\{guid} from Lsa.

- TIMED OUT waiting for LB ping. Proceeding to start the role.

- Failed to delete URLACL

- RuntimeFindContainer=0x80070490

- Once the host bootstrapper is started successfully you will see an entry in the log with the PID for WaHostBootstrapper: Role process with id {pid} is successfully resumed

App Agent Heartbeat (WaAppAgent.log)

- These logs are written by WindowsAzureGuestAgent.exe and contain information about the status of the health probes to the host bootstrapper.

- The guest agent process is responsible for reporting health status (ie. Ready, Busy, etc) back to the fabric, so the health status as reported by these logs is the same status that you will see in the Management Portal.

- These logs are typically useful for determining what is the current state of the role within the VM, as well as determining what the state was at some time in the past. With a problem description like "My website was down from 10:00am to 11:30am yesterday", these heartbeat logs are very useful to determine what the health status of the role was during that time.

The heartbeat logs are very verbose and are typically best used to determine the status of the VM at a given point in time. Here are some guidelines on how to look at these files:

- Every time the role starts (initial start, VM reboot, role recycle) you will see a large group of lines with ipconfig.exe and route.exe output. This can be ignored.

- When the role starts you will see a few messages showing the state as NotReady with sub-status of Starting.

- If the role never leaves the Busy state then it usually means that startup tasks are still executing or the role host is still in the OnStart method. The role can also show as Busy if you use the StatusCheck event.

- Once the role is running you will see Role {roleid{ is reporting state Ready.

- The software load balancer communicates with the guest agent to determine when to put an instance into LB rotation. If the role is reporting state Ready then the instance will be in LB rotation. Note that this is using the default LB configuration which can be overridden by use a custom LB probe.

- Common entries that look like failures but can be ignored:

- GetMachineGoalState() Error: 410 - Goal State not yet available from server. Will retry later.

- Caught exception in pre-initialization heartbeat thread, will continue heartbeats: System.NullReferenceException: Object reference not set to an instance of an object.

- Unable to get SDKVersion. System.IO.FileNotFoundException: Could not find file 'D:\Packages\GuestAgent\GuestAgent\RoleModel.xml'.

Check the Logs

Normally for role recycles we should start with the Windows Azure event logs, Application event logs, and WaHostBootstrapper log files. But in this scenario we know that the problem is in the guest agent so we will start with the guest agent logs in C:\Logs. The runtime logs are where the guest agent logs the major events that occur so that is usually the first place to start looking when something is preventing the guest agent from correctly starting the host bootstrapper.

From the guidelines above we know to start with the AppAgentRuntime logs because those track the major events that happen with the guest agent, and we know to start off by scrolling to the bottom of the file and working our way up. We also know to start looking for a _Context_Ends entry with a non-success hresult.

The first entry we find is:

<<<<_Context_Ends: {B7B98274-CF7B-4D0B-95B5-A13E3D973E4C} Return value = 80070490. Context={{ AgentpStopRoleWorker

The interesting aspect of this line is that it is occurring on a StopRole context, but we know that we are trying to start the role. Whenever a StartRole fails the guest agent will then do a StopRole in order to tear everything down to prepare for another StartRole. So most likely this HRESULT is just a symptom of the real root cause and can be ignored. We also know that the hresult 0x80070490 is one of the ones that can usually be ignored.

Continuing to search up we find another Context_Ends with a non-success return value:

<<<<_Context_Ends: {28E5D4C1-654E-4631-8B8C-C9809E4074C7} Return value = 80070020. Context={{ AgentpStartRoleWorker

This one looks more promising since we know the failure is occurring while the guest agent is trying to start the role. Continuing to search up in the file on that hresult (80070020) we find several more entries, and finally we find the origination point:

<- RuntimepDestroyResource=0x80070020 Context={{ AgentpStartRoleWorker

So we know that the RuntimepDestroyResource function call returned an 0x80070020 hresult which bubbled up to the StartRole context and caused the role to fail to start. Next we want to continue to look up in the log file to see what other details are being logged about the execution of RuntimepDestroyResource and any logged failures. The very next line up in the log file is:

Failed to delete file C:\Resources\directory\31fa1ff786e645beb0ecd18eb9854fa9.DiagnosticStoreCleanOnRoleRecycle.DiagnosticStore\LogFiles\Web\W3SVC1273337584\u_ex13092320.log Context={{ AgentpStartRoleWorker

Nothing else looks interesting in the few lines preceding this delete file entry so this must be the root cause of the problem. The section of the log file where we see this error is:

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] Failed to delete file C:\Resources\directory\31fa1ff786e645beb0ecd18eb9854fa9.DiagnosticStoreCleanOnRoleRecycle.DiagnosticStore\LogFiles\Web\W3SVC1273337584\u_ex13092320.log Context={{ AgentpStartRoleWorker: ConfigFileName=31fa1ff786e645beb0ecd18eb9854fa9.31fa1ff786e645beb0ecd18eb9854fa9.DiagnosticStoreCleanOnRoleRecycle_IN_0.1.xml ContainerId=68fdd1f2-865b-4ebf-b2b9-c9b0288526ba RoleInstanceId=31fa1ff786e645beb0ecd18eb9854fa9.DiagnosticStoreCleanOnRoleRecycle_IN_0 }}

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] <- RuntimepDestroyResource=0x80070020

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] <- RuntimepSetupDirectoryResource=0x80070020

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] <- RuntimeSetupRoleResources=0x80070020

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] <- RuntimeRole::SetupEnvironment(0x000000001BEA1CC0) =0x80070020

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] <- RuntimeRole::StartUnsafe(0x000000001BEA1CC0) =0x80070020

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] <- RuntimeRole::Start(0x000000001BEA1CC0) =0x80070020

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] <- RuntimeBaseContainer::StartRole(0x0000000001327280) =0x80070020

[00001440:00002292, 2013/09/23, 20:32:26.993, ERROR] <- RuntimeStartRole=0x80070020

As we know from the guidelines above, the series of lines starting with “<-“ can be seen as a callstack. This tells us that the failure to delete a file is coming from the RuntimeSetupRoleResources function.

We know that the failure is due to the fact that the guest agent can’t delete a specific file, and that the file is trying to be deleted when executing RuntimeSetupRoleResources. At this point some experience with the Azure service model and VM setup is helpful, along with some knowledge of the DiagnosticStore folder (the folder where the file is being deleted from). The RuntimeSetupRoleResources function is responsible for setting up the <LocalResources> as defined in the service’s ServiceDefinition.csdef.

Solution

This hosted service is changing the size of the DiagnosticStore LocalStorage resource in order to accommodate a larger diagnostic store for Windows Azure Diagnostics, per the information at https://msdn.microsoft.com/en-us/library/windowsazure/microsoft.windowsazure.diagnostics.diagnosticmonitorconfiguration.overallquotainmb.aspx. The entry in the csdef looks like this:

<LocalResources>

< LocalStorage name="DiagnosticStore" sizeInMB="8192" cleanOnRoleRecycle="true"/>

</LocalResources>

The problem is that this definition is incorrect. The cleanOnRoleRecycle setting is set to true, which instructs the guest agent to delete the folder and recreate it during every role start. But if a file in that folder is locked (in this case it is w3wp.exe locking an IIS log file) then the delete will fail, causing the guest agent to fail to start the role. The solution is to change cleanOnRoleRecycle=”false” and then redeploy.

Comments

- Anonymous

May 29, 2014

It may be that visual studio defaults to cleanOnRoleRecycle="true"