In this post I’d like to explain the accelerator_view::create_marker function. It is one of those APIs that is not necessary for every program, but may be useful in some specific scenarios.

Some implementation background

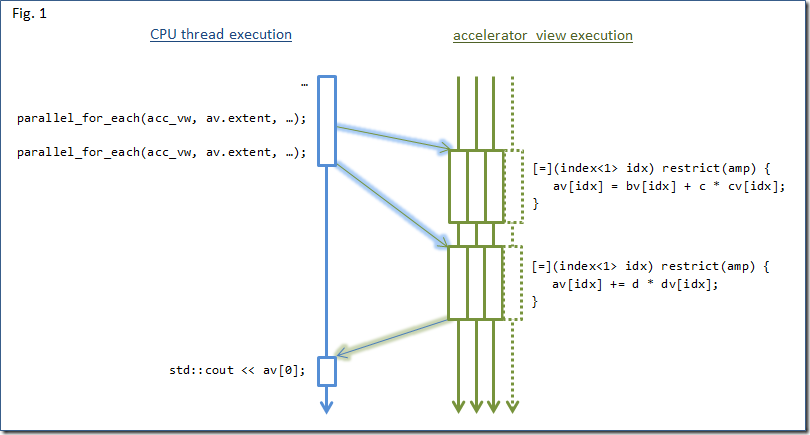

In the current version of C++ AMP, a program running on an end-user machine will most likely execute on a heterogeneous system: code marked restrict(cpu) runs on the traditional CPU, while the bodies of parallel_for_each calls marked restrict(amp) run on the machine’s GPU. Because the two are most likely separate pieces of silicon, with communication between them supervised by the OS, you can safely bet that they execute asynchronously with respect to each other. C++ AMP hides these technical details for the sake of ease of use, and code executed on an accelerator_view behaves as if it were a sequential program; see Figure 1 for a mental model.

As you can see in the figure, the program performs two consecutive computations on the array_view av and prints the result. The C++ AMP runtime manages the correct ordering, synchronization and data transfers, giving you the final value as soon as possible. That way, you don’t have to worry about the intermediate state of execution. Unless you want to…

Markers overview

If you want to track the progress of execution on an accelerator_view (this includes not only parallel_for_each but also explicit asynchronous copies) without blocking (e.g. without using accelerator_view::wait or queuing_mode_immediate), you can instrument the sequence of commands scheduled by your program with markers:

    class accelerator_view
    {
    public:
        completion_future create_marker();
        …
    };

A marker binds an accelerator_view-side command to a host-side completion_future (essentially a std::shared_future with added support for continuations). The future becomes ready once execution on the accelerator_view passes the associated command; see Figure 2 for a mental model.

There are two observations related to this mechanism.

First, you don’t have to trouble yourself with restraining commands from reordering – markers themselves constitute a fence with regard to other commands. In other words C++ AMP guarantees that neither the parallel_for_each nor the copy command will be moved before or after a marker.

Second, the granularity of a marker is a single command issued from the host. That means you cannot create a marker in the middle of a parallel_for_each or a copy operation.
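To make the two observations concrete, here is a minimal sketch in standard C++11 that models the behavior rather than calling C++ AMP. The class toy_view is a hypothetical stand-in for an accelerator_view: it runs commands in FIFO order on a worker thread, and its create_marker returns a std::shared_future<void> instead of a completion_future. It only illustrates the ordering guarantee; the real runtime is of course implemented differently.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <thread>

// Toy stand-in for an accelerator_view (hypothetical, not part of C++ AMP):
// commands are executed strictly in submission order on one worker thread.
class toy_view {
public:
    toy_view() : done_(false), worker_([this] { run(); }) {}

    ~toy_view() {
        { std::lock_guard<std::mutex> lock(m_); done_ = true; }
        cv_.notify_one();
        worker_.join();  // the worker drains the queue before exiting
    }

    // Schedule one command (think: a parallel_for_each or a copy).
    void submit(std::function<void()> cmd) {
        assert(cmd);  // an empty std::function cannot be executed
        { std::lock_guard<std::mutex> lock(m_); q_.push_back(std::move(cmd)); }
        cv_.notify_one();
    }

    // Schedule a marker. It is itself a command in the queue, so nothing can
    // be reordered across it, and it cannot land inside another command.
    std::shared_future<void> create_marker() {
        auto p = std::make_shared<std::promise<void>>();
        std::shared_future<void> f = p->get_future().share();
        submit([p] { p->set_value(); });  // ready once execution passes here
        return f;
    }

private:
    void run() {
        for (;;) {
            std::function<void()> cmd;
            {
                std::unique_lock<std::mutex> lock(m_);
                cv_.wait(lock, [this] { return done_ || !q_.empty(); });
                if (q_.empty()) return;  // shutting down and nothing left
                cmd = std::move(q_.front());
                q_.pop_front();
            }
            cmd();  // run outside the lock so the host can keep submitting
        }
    }

    std::mutex m_;
    std::condition_variable cv_;
    std::deque<std::function<void()>> q_;
    bool done_;
    std::thread worker_;  // declared last so it starts after the other members
};
```

In this model, a host thread that waits on the future returned by create_marker knows that every command submitted before the marker has finished, while commands submitted after it may still be pending.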

Example

In the attached project you will find a scenario similar to the one presented in Figure 2. There I create a “watchdog” thread that reports on the progress of 100 consecutive parallel_for_each loops executed on the main thread. The only part I have not covered here is the data structure used to pass std::shared_futures safely between the two threads, but I tried to keep it simple and use only standard C++11 mechanisms, so you should have no problem following the code.
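For readers without the project at hand, one way such a data structure could look is sketched below. This is not the code from the attached project: future_channel, watch and demo are hypothetical names, and a std::promise stands in for accelerator_view::create_marker so that the sketch compiles without amp.h. It is a plain mutex-and-condition-variable queue of std::shared_future<void>.

```cpp
#include <cassert>
#include <condition_variable>
#include <future>
#include <iostream>
#include <mutex>
#include <queue>
#include <thread>

// Hypothetical helper: a thread-safe FIFO of futures with a "closed" flag.
class future_channel {
public:
    void push(std::shared_future<void> f) {
        assert(f.valid());  // waiting on an invalid future is undefined
        { std::lock_guard<std::mutex> lock(m_); q_.push(std::move(f)); }
        cv_.notify_one();
    }

    // Signal that no more futures will be pushed.
    void close() {
        { std::lock_guard<std::mutex> lock(m_); closed_ = true; }
        cv_.notify_all();
    }

    // Blocks until a future is available; returns false once the channel
    // is closed and fully drained.
    bool pop(std::shared_future<void>& out) {
        std::unique_lock<std::mutex> lock(m_);
        cv_.wait(lock, [this] { return closed_ || !q_.empty(); });
        if (q_.empty()) return false;
        out = std::move(q_.front());
        q_.pop();
        return true;
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    std::queue<std::shared_future<void>> q_;
    bool closed_ = false;
};

// Watchdog loop: waits on each marker in order and reports progress.
// Returns the number of markers it observed.
int watch(future_channel& ch) {
    int seen = 0;
    std::shared_future<void> marker;
    while (ch.pop(marker)) {
        marker.wait();
        std::cout << "step " << ++seen << " finished\n";
    }
    return seen;
}

// The "main thread" issues n markers while a watchdog thread observes them.
int demo(int n) {
    future_channel ch;
    int seen = 0;
    std::thread watchdog([&] { seen = watch(ch); });
    for (int i = 0; i < n; ++i) {
        std::promise<void> p;             // stands in for create_marker()
        ch.push(p.get_future().share());
        p.set_value();                    // stands in for the marker being passed
    }
    ch.close();
    watchdog.join();
    return seen;
}
```

With C++ AMP, the pushed value would presumably be the completion_future returned by create_marker, converted to std::shared_future<void>; calling demo(100) mirrors the 100-step scenario described above.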

As a disclaimer, I am obligated to add that, in production-quality code, breaking a parallel_for_each loop into multiple parts just to add markers between them is bad practice, as it incurs additional overhead.

Other scenarios

Markers may also be used to implement a coarse-grained timer for accelerator_view-side execution: create two markers, one before and one after the operation of interest, and measure the time between the moments they become ready.
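A rough sketch of such a timer, written against std::shared_future<void> so that it compiles without amp.h, might look like this. The function name time_between is hypothetical, and the sketch assumes the two futures come from markers created before and after the operation (a completion_future can be converted to std::shared_future<void>).

```cpp
#include <cassert>
#include <chrono>
#include <future>

// Measures the host-side time between two markers becoming ready.
// Hypothetical helper, not part of C++ AMP.
inline std::chrono::steady_clock::duration
time_between(const std::shared_future<void>& before,
             const std::shared_future<void>& after) {
    assert(before.valid() && after.valid());
    before.wait();                                   // operation is about to start
    const auto t0 = std::chrono::steady_clock::now();
    after.wait();                                    // operation has finished
    const auto t1 = std::chrono::steady_clock::now();
    return t1 - t0;
}
```

The result is only as precise as the host's observation of the futures: it includes notification and scheduling latency, and if the calling thread starts waiting after the first marker is already ready, the measured interval is shorter than the real one. That is why this is a coarse-grained timer.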

A more advanced use is to keep a specified number of parallel_for_each loops in flight in an algorithm that balances work across multiple GPUs. The solution to this problem is left as an exercise for the reader :).

As always, your feedback is welcome below or in our MSDN Forum.
