Image morphing C++ AMP sample

This sample demonstrates an implementation of image morphing. This image transformation technique, dating back to the late 1980s, computes a smooth transition between two images while keeping selected features (e.g. eyes, nose) aligned between the images throughout the process. You can see this Morph sample in action in this short video.

From a technical perspective, the interesting point is that the computation is implemented in C++ AMP, which lets it run more efficiently on an accelerator such as a GPU, while the user interface and disk I/O are implemented in C#.

C# application – the beauty

Most of the C# application is of lesser interest to us within the context of this blog post. It mainly deals with the user interface, loading the input files and storing the output either as a series of images or as a movie clip. The app was originally created by Stephen Toub using TPL and is based on the Feature-Based Image Metamorphosis algorithm from SIGGRAPH ’92. I took the project and carved out the TPL part, replacing it with a C++ AMP backend.

The C++ AMP backend is compiled into a dynamic-link library (DLL), so it can be invoked from C# code through PInvoke, as demonstrated in an earlier sample on C# interoperability with C++ AMP. The exported DLL interface is imported into C# in the MorphLibrary.cs file. Specifically, the following C# snippet defines a mapping to the C++ function shown below it.

    // C#
    [DllImport("Morph_lib", CallingConvention = CallingConvention.StdCall)]
    [return: MarshalAs(UnmanagedType.U1)]
    public extern unsafe static bool ComputeMorphStep(byte* output, double percentage);

    // C++
    extern "C" __declspec(dllexport) bool _stdcall ComputeMorphStep(char* output_ptr, double percentage_dbl);

Remember that only fundamental types and raw data may be passed through such interop. Therefore the MorphLibrary class also provides convenience functions for flattening C# structures into arrays that can be passed through PInvoke, as well as a ListAccelerators method that returns a list of available accelerators obtained through multiple calls to the native DLL.

C++ AMP backend – the (performance) beast

On the native side there is a bit of utility code and the core computation. Saving the best for last, let’s start with the housekeeping part.

Enumerating accelerators API

The trio of BeginEnumAccelerators, EnumAccelerator and EndEnumAccelerators is responsible for providing the list of available accelerators to the DLL consumer. There is not much to see from the C++ AMP point of view, yet it may be interesting to note that I decided to return a pointer to data allocated on the native heap from BeginEnumAccelerators and to accept the same pointer as a parameter in the other two functions. The alternative would be to store the pointer in a global variable on the DLL side and share it among these three functions, hidden from the DLL user. While the first solution puts the burden of calling EndEnumAccelerators on the caller, it also removes the possibility of a data race (in the case of multiple consumers calling these functions) by eliminating the shared state.
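The caller-owned handle pattern described above can be sketched in plain C++. This is only an illustration under assumptions: the function names, signatures and the `enum_state` structure are hypothetical rather than the sample's actual exports, and the entries are placeholders where the real DLL would query the C++ AMP runtime.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical per-enumeration state. Each caller gets its own instance,
// so nothing is shared between concurrent enumerations.
struct enum_state
{
    std::vector<std::string> descriptions;
};

// Allocates the state on the native heap and reports the number of entries.
// The caller owns the returned pointer until end_enum_sketch is called.
enum_state* begin_enum_sketch(std::size_t* count)
{
    assert(count != nullptr);
    enum_state* state = new enum_state;
    // Placeholder entries (assumption); a real backend would list accelerators here.
    state->descriptions = { "placeholder accelerator A", "placeholder accelerator B" };
    *count = state->descriptions.size();
    return state;
}

// Copies entry `index` (including the terminating zero) into the caller's buffer.
// Returns false on a bad handle, a bad index or a buffer that is too small.
bool enum_item_sketch(const enum_state* state, std::size_t index,
                      char* buffer, std::size_t buffer_size)
{
    if (state == nullptr || buffer == nullptr || index >= state->descriptions.size())
        return false;
    const std::string& text = state->descriptions[index];
    if (text.size() + 1 > buffer_size)
        return false;
    std::memcpy(buffer, text.c_str(), text.size() + 1);
    return true;
}

// Releases the state; the handle must not be used afterwards.
void end_enum_sketch(enum_state* state)
{
    delete state;
}
```

A consumer would call `begin_enum_sketch` once, `enum_item_sketch` for each index below the reported count, and `end_enum_sketch` at the end. Forgetting the last call leaks the state, which is the cost of this design.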

Algorithm API

The algorithm is set up by the DLL user with a call to InitializeMorph. Based on the selected mode, an object is created that encapsulates the corresponding implementation (C++ AMP, PPL or sequential). In the case of C++ AMP it is also necessary to specify the accelerator on which the computation should be performed; the required device_path may be obtained through the aforementioned enumeration API. The rest of the parameters are the input for the morphing algorithm: two images, a set of lines identifying pairs of features on the images, and three tweakable numerical constants.

The primary computation is started with a call to ComputeMorphStep, where the user passes a pointer to an output data block (which should be the same size as the inputs) and the percentage of the morph (e.g. passing 0% yields exactly the start image, while 50% yields an image equally similar to the start and end images).

Symmetrical to InitializeMorph, a call to ShutdownMorph tears down the state of the algorithm.

Note that this API is not thread-safe, i.e. it does not expect to have multiple morphs in flight. However, if it becomes necessary, the modification should be quite straightforward.

compute_morph class

This is a base class for the real algorithm implementations. It stores some common data structures and implements member functions for the shared part of the algorithm. Note that I’m using restriction specifiers on two of these functions, for example:

    float_2 compute_morph::get_preimage_location(const index<2>& idx, const line_pair* line_pairs, unsigned line_pairs_num, float const_a, float const_b, float const_p) restrict(cpu,amp);

This allows me to call such a function from any implementation of the algorithm, no matter whether it is executing on the host or on the accelerator. I can even use references and pointers as parameters, without adapting the code in any way.
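To give a feel for what a function like get_preimage_location computes, here is a host-only sketch of the per-pixel mapping as formulated in the Feature-Based Image Metamorphosis paper. It is not the sample's code: the `vec2` and `line_pair_sketch` types are hypothetical, the restrict specifiers are omitted so that it compiles with any C++ compiler, and it assumes that no feature line has zero length and that const_a is positive.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

struct vec2 { float x, y; };

// One feature line as drawn on the destination image (dst_p to dst_q) and on
// the source image (src_p to src_q). Hypothetical layout for illustration.
struct line_pair_sketch { vec2 dst_p, dst_q, src_p, src_q; };

inline vec2 sub(vec2 a, vec2 b) { return { a.x - b.x, a.y - b.y }; }
inline float dot(vec2 a, vec2 b) { return a.x * b.x + a.y * b.y; }
inline float length(vec2 a) { return std::sqrt(dot(a, a)); }
inline vec2 perp(vec2 a) { return { a.y, -a.x }; }

// Returns the source-image location that maps onto destination pixel x,
// as a weighted average of the displacement suggested by each line pair.
vec2 preimage_location_sketch(vec2 x, const line_pair_sketch* pairs, std::size_t count,
                              float const_a, float const_b, float const_p)
{
    assert(pairs != nullptr || count == 0);
    vec2 shift_sum = { 0.0f, 0.0f };
    float weight_sum = 0.0f;
    for (std::size_t i = 0; i < count; ++i)
    {
        const vec2 d = sub(pairs[i].dst_q, pairs[i].dst_p);
        const vec2 s = sub(pairs[i].src_q, pairs[i].src_p);
        const float d_len = length(d);
        const float s_len = length(s);
        const vec2 xp = sub(x, pairs[i].dst_p);

        // Position of x relative to the destination line: u runs along the
        // line (0 at P, 1 at Q), v is the signed perpendicular distance.
        const float u = dot(xp, d) / (d_len * d_len);
        const float v = dot(xp, perp(d)) / d_len;

        // The same relative position, measured against the source line.
        const vec2 sp = perp(s);
        const vec2 mapped = { pairs[i].src_p.x + u * s.x + v * sp.x / s_len,
                              pairs[i].src_p.y + u * s.y + v * sp.y / s_len };

        // Distance from x to the segment, then weight = (len^p / (a + dist))^b.
        const float dist = u < 0.0f ? length(xp)
                         : u > 1.0f ? length(sub(x, pairs[i].dst_q))
                         : std::fabs(v);
        const float weight = std::pow(std::pow(d_len, const_p) / (const_a + dist), const_b);

        shift_sum.x += (mapped.x - x.x) * weight;
        shift_sum.y += (mapped.y - x.y) * weight;
        weight_sum += weight;
    }
    if (weight_sum <= 0.0f)
        return x; // no lines: the pixel maps onto itself
    return { x.x + shift_sum.x / weight_sum, x.y + shift_sum.y / weight_sum };
}
```

With a single line pair whose source line is the destination line shifted by some offset, every pixel's preimage is shifted by that same offset, which makes for a convenient sanity check.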

compute_morph_cpp class

This class is one of the implementations of compute_morph, where the computation is performed on the CPU – either on a single core with the “traditional” C++ constructs or on multiple cores with the aid of the Parallel Patterns Library.

The class constructor copies the passed image data to char[] arrays allocated on the heap.

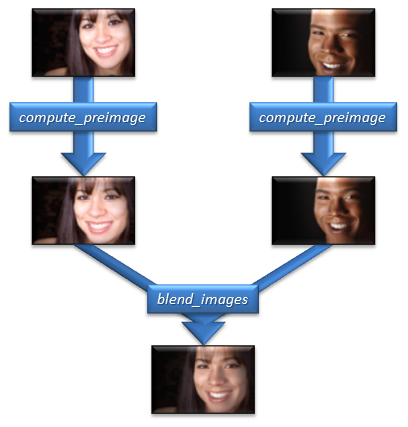

The compute member function is the algorithm entry point. First, the lines associated with the image features are transformed to the requested morph percentage in a call to interpolate_lines. Next, both images are warped as directed by the calculated lines in compute_preimage. Finally, these intermediate images are blended into one result in blend_images, the output being written directly to the buffer provided by the DLL user.
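The first and last of these steps can be sketched in a few lines of standard C++. This is an illustration under assumptions, not the sample's code: the `_sketch` names and types are hypothetical, the morph position `t` is a fraction between 0 and 1 rather than a percentage, and simple linear interpolation is assumed for both the lines and the pixel blend. In the actual algorithm the two blended inputs would be the warped intermediate images.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct point_sketch { float x, y; };
struct line_sketch { point_sketch a, b; };

// Linear interpolation: returns `from` at t = 0 and `to` at t = 1.
inline float lerp_sketch(float from, float to, float t)
{
    return from + (to - from) * t;
}

// Moves both endpoints of a feature line towards their position on the end image.
line_sketch interpolate_line_sketch(const line_sketch& start, const line_sketch& end, float t)
{
    return { { lerp_sketch(start.a.x, end.a.x, t), lerp_sketch(start.a.y, end.a.y, t) },
             { lerp_sketch(start.b.x, end.b.x, t), lerp_sketch(start.b.y, end.b.y, t) } };
}

// Cross-dissolves two images of identical size, one byte per color channel.
std::vector<std::uint8_t> blend_sketch(const std::vector<std::uint8_t>& start,
                                       const std::vector<std::uint8_t>& end, float t)
{
    assert(start.size() == end.size());
    std::vector<std::uint8_t> out(start.size());
    for (std::size_t i = 0; i < start.size(); ++i)
    {
        const float value = lerp_sketch(start[i], end[i], t);
        out[i] = static_cast<std::uint8_t>(value + 0.5f); // round to nearest
    }
    return out;
}
```

At `t = 0` the blend reproduces the first input and at `t = 1` the second, matching the 0% and 100% behavior of the morph.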

The code of the compute_preimage and blend_images member functions is practically identical for the sequential and PPL implementations. Both functions have to iterate over all pixels of the image, and the only difference in the PPL version is that the outer for loop is replaced with the parallel_for library function.

    // Sequential version
    for(int y = 0; y < size[0]; y++)
    {
        for(int x = 0; x < size[1]; x++)
        {
            // do work
        }
    }

    // PPL version
    parallel_for(0, size[0], 1, [=](int y)
    {
        for(int x = 0; x < size[1]; x++)
        {
            // do work
        }
    } );

What I like about it is that this simple one (+epsilon) line change brings a great performance improvement. The reason is that the PPL runtime dynamically distributes the parallel_for work among all available processor cores, which means up to an 8x speedup on commodity hardware.

compute_morph_amp class

Even with all the PPL goodness, the whole morph process for the sample images and 48 output frames takes around 1 minute on my machine. That’s not bad, but why not improve it even more? The compute_morph_amp class is an alternative implementation of compute_morph using C++ AMP.

The class constructor creates a set of textures on the selected accelerator_view to be used as the input (initialized with the passed image data), output and intermediate buffers for the algorithm. The choice of textures over array_views or arrays was dictated by two factors:

- The input image data is stored in a char array where each consecutive 4 chars describe the intensity of RGBA channels of a single pixel – this perfectly matches the unorm_4 texture structure, so no data conversion is necessary.

- Data is accessed in a spatially localized manner, which is what texture caches on GPUs are optimized for.

The compute member function looks almost the same as in compute_morph_cpp. The only difference is that the data from the output texture object (which is located on the accelerator) must be explicitly copied to the destination buffer on the host.

There are more changes in the workhorse member functions: compute_preimage and blend_images. Since the differences in one map directly onto the other, let’s go through the first one only.

You will notice that the parameters that were previously read directly from class data members are now copied to local variables and read from there. This is due to one of the limitations of restrict(amp) lambdas, which mandates that data cannot be accessed through the this pointer (i.e. the pointer to the current class object). Although you will not find the word “this” in the PPL parallel_for version, it is added implicitly by the compiler to every data member access.
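The implicit use of this is easy to observe in ordinary C++, outside of C++ AMP. In the sketch below (the class and member names are hypothetical), the first lambda reads the data member through the captured this pointer and therefore sees later changes to the object, while the second one carries its own copy of the value, which is the form a restrict(amp) lambda requires.

```cpp
#include <cassert>
#include <functional>

// Hypothetical stand-in for a class holding algorithm parameters.
struct morph_settings_sketch
{
    float const_a = 1.0f;

    // The lambda body mentions only const_a, but the compiler reads it as
    // this->const_a, so what gets captured is the object pointer.
    std::function<float()> reader_via_this()
    {
        return [this] { return const_a; };
    }

    // Copying the member to a local first makes the lambda self-contained.
    std::function<float()> reader_via_local()
    {
        const float a = const_a;
        return [a] { return a; };
    }
};

// Runs the scenario end to end; the asserts document the expected behavior.
inline void run_capture_demo()
{
    morph_settings_sketch settings;
    std::function<float()> via_this = settings.reader_via_this();
    std::function<float()> via_local = settings.reader_via_local();

    settings.const_a = 5.0f;     // modify the object after both lambdas exist

    assert(via_this() == 5.0f);  // went through the pointer, sees the new value
    assert(via_local() == 1.0f); // kept the snapshot taken at capture time
}
```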

Another point of interest is that the input data structure contains a fixed-size array into which the line data is copied. The reasoning here is that when the algorithm is executed on the GPU, this data will be placed in the constant buffer, which will cause it to be automatically replicated to all execution units. This may be beneficial, as all threads need to read all of this data anyway, and serving it from on-chip storage will reduce the number of global memory transactions. And since the size of this array is moderate, it will not put much strain on the constant buffer either. The one drawback of this approach is that the array must have a predefined maximum capacity, as we currently cannot capture a dynamically sized array in an amp-restricted lambda. Of course, from the correctness point of view, marshaling the same data through an array_view instead will give the same result.
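The shape of such an input structure can be sketched in standard C++. The names and the capacity of 64 are assumptions made for illustration; the sample's actual structure and limit may differ. The important property is that the whole structure, array included, is copied when the lambda captures it by value.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical capacity; the limit must be known at compile time.
constexpr unsigned max_lines_sketch = 64;

struct line_data_sketch { float x0, y0, x1, y1; };

// Everything the kernel needs, stored inline so that a by-value capture
// copies the complete data set without any pointers to follow.
struct kernel_input_sketch
{
    unsigned line_count;
    line_data_sketch lines[max_lines_sketch];
};

// Copies `count` lines into the fixed-size array.
// Returns false, leaving `input` untouched, when the data does not fit.
bool pack_lines_sketch(const line_data_sketch* source, unsigned count,
                       kernel_input_sketch& input)
{
    assert(source != nullptr || count == 0);
    if (count > max_lines_sketch)
        return false;
    std::copy(source, source + count, input.lines);
    input.line_count = count;
    return true;
}

// Host-only stand-in for a kernel: the lambda owns a full copy of the input
// and reads every line, much like each accelerator thread would.
inline float run_kernel_stand_in(const kernel_input_sketch& input)
{
    auto kernel = [input]
    {
        float total = 0.0f;
        for (unsigned i = 0; i < input.line_count; ++i)
            total += input.lines[i].x0;
        return total;
    };
    return kernel();
}
```

The capacity check is where the drawback mentioned above shows up: a caller with more lines than the array can hold has to be rejected or handled some other way.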

The last difference in the C++ AMP approach is, as our avid readers have probably expected, the presence of parallel_for_each. It not only allows us to offload the computation to the accelerator, but also makes the code cleaner, thanks to its multidimensional semantics. In particular, the two nested loops from the PPL version are replaced by one loop over a two-dimensional domain:

    // C++ AMP version
    parallel_for_each(output.extent, [=, &source](index<2> idx) restrict(amp)
    {
        // do work
    } );
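Conceptually, the lambda is invoked once for every index in the two-dimensional extent, with no guaranteed order between invocations. The following serial, host-only stand-in shows that contract in standard C++. It is not C++ AMP code and its names are hypothetical; `row` and `col` play the roles of idx[0] and idx[1].

```cpp
#include <cassert>

// Hypothetical stand-in for index<2>: row corresponds to idx[0], col to idx[1].
struct index2_sketch { int row; int col; };

// Calls `kernel` exactly once for every (row, col) pair in a rows x cols domain.
// A real accelerator runs these invocations in parallel and in no fixed order.
template <typename Kernel>
void for_each_index_sketch(int rows, int cols, Kernel kernel)
{
    assert(rows >= 0 && cols >= 0);
    for (int linear = 0; linear < rows * cols; ++linear)
        kernel(index2_sketch{ linear / cols, linear % cols });
}
```

Because every invocation receives its complete coordinates, the kernel body needs no loop bookkeeping of its own, which is what makes the C++ AMP version read more cleanly than the nested loops.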

The ultimate question is whether the result was worth the effort. After all, I have to admit that going to C++ AMP required a few more changes than the simple one-line modification needed to enable PPL. To answer, I will just say that on my machine (Intel Core i7-930, NVIDIA GTX 580) the C++ AMP computation takes a fraction of a second to execute (compared to ~1 minute for PPL and ~6 minutes for the sequential code), and the vast majority of the total execution time is actually spent copying the data back to the C# part and saving it to disk as images. And even if I choose to run on WARP instead of a physical GPU, the total time is still only 22 seconds. So, in the best-case scenario, I am observing the C++ AMP implementation being over 100 times faster than the PPL version and over 600 times faster than the serial version. Yay!

Download

Please download the attached sample project and try it yourself. Note that the configuration shown in the pictures in this blog post is saved in the SampleMorph/WomanToMan.morph file. To load your own image into the program, just double-click on the picture box, then click and drag with the right mouse button to draw lines identifying features, and adjust them by dragging with the left mouse button. Yes, this user interface was designed by a programmer.

Should you have any questions or comments, feel free to leave a comment or post in our MSDN forum.

The attached sample code is released under the MS-RSL license.
