TSQL - Solve it YOUR Way - Finding the percentage of NULL values for each column in a table

Introduction:

As part of the blog series TSQL - Solve it YOUR Way, today's topic will cover a question asked in the Transact-SQL MSDN Forums here, followed by three different solutions from three of the more helpful and creative contributors in the TSQL MSDN forums, Jens Suessmeyer, Naomi Nosonovsky, and Jingyang Li.

Topic: How can I find the percentage of NULL values for each column in my table?

For various reasons, people may need to determine the percentage of values in each column that are NULL. Perhaps you are trying to calculate the percentage to determine if your table could save disk space by leveraging SPARSE columns. In another real-world example from the forums, a user had an implementation where partial rows in a table were being updated at different times. When all rows were updated with a value and were thus NOT NULL, the table was considered "complete" and could be processed. For this reason, the customer was trying to determine when the percentage of NULL values hit 0%. In yet another case, the user wanted to present a comparison chart throughout the entire database showing the ratio of NULL values to NOT NULL values. For the final example, take a look at the explanation provided below by Jens where he solved this problem at a customer site.

Solution #1 - Provided by Jens Suessmeyer

Code Snippet

SET NOCOUNT ON

DECLARE @Statement NVARCHAR(MAX) = ''

DECLARE @Statement2 NVARCHAR(MAX) = ''

DECLARE @FinalStatement NVARCHAR(MAX) = ''

DECLARE @TABLE_SCHEMA SYSNAME = 'Production'

DECLARE @TABLE_NAME SYSNAME = 'Product'

SELECT

@Statement = @Statement + 'SUM(CASE WHEN ' + COLUMN_NAME + ' IS NULL THEN 1 ELSE 0 END) AS ' + COLUMN_NAME + ',' + CHAR(13) ,

@Statement2 = @Statement2 + COLUMN_NAME + '*100 / OverallCount AS ' + COLUMN_NAME + ',' + CHAR(13) FROM

INFORMATION_SCHEMA.COLUMNS

WHERE TABLE_NAME = @TABLE_NAME AND TABLE_SCHEMA = @TABLE_SCHEMA

IF @@ROWCOUNT = 0

RAISERROR('TABLE OR VIEW with schema "%s" and name "%s" does not exists or you do not have appropriate permissions.',16,1, @TABLE_SCHEMA, @TABLE_NAME)

ELSE

BEGIN

SELECT @FinalStatement =

'SELECT ' + LEFT(@Statement2, LEN(@Statement2) -2) + ' FROM (SELECT ' + LEFT(@Statement, LEN(@Statement) -2) +

', COUNT(*) AS OverallCount FROM ' + @TABLE_SCHEMA + '.' + @TABLE_NAME + ') SubQuery'

EXEC(@FinalStatement)

Explanation of Jens' solution:

To put a bit more context to my solution, I was at a customer site where they found out that their API was occasionally losing the information on the way from the front end to the database, therefore NULLing out specific fields which should not be NULL. While they checked through their database and wrote queries for their hundreds of tables, they asked me working for another work stream to write something they can use. The simple idea is to grab a Table Name (and of course the appropriate schema) and create a Dynamic SQL statement which will calculate the percentage of NULL values in a specific table.

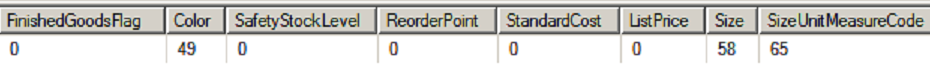

From the query the following information will be returned:

In detail we see that the Color (on purpose or not) is not always entered showing a 49% of NULL values in that column. 58% for Size, 65% for SizeUnitMeasureCode, etc. The rest does have (at least NON NULL) values. If you would be also interested in the percentage of that are NULL OR Empty the query can be easily tweaked by changing the source code the following line from:

@Statement = @Statement + 'SUM(CASE WHEN ' + COLUMN_NAME + ' IS NULL THEN 1 ELSE 0 END) AS ' + COLUMN_NAME + ',' + CHAR(13) ,

to

@Statement = @Statement + 'SUM(CASE WHEN LEN(ISNULL(' + COLUMN_NAME + ',' + REPLICATE(CHAR(39),2) + ')) = 0 THEN 1 ELSE 0 END) AS ' + COLUMN_NAME + ',' + CHAR(13) ,

Leading to a change in the generated code of:

SUM(CASE WHEN LEN(ISNULL(CompanyName, '')) = 0 THEN 1 ELSE 0 END) AS CompanyName,

Feel free to include your own logic and forensic pattern to find all the black holes in your databases.

Solution #2 - Provided by Naomi Nosonovsky

Code Snippet

DECLARE @TotalCount DECIMAL(10, 2)

,@SQL NVARCHAR(MAX)

SELECT @TotalCount = COUNT(*)

FROM [AdventureWorks].[Production].[Product]

SELECT @SQL = STUFF((

SELECT ', CAST(SUM(CASE WHEN ' + Quotename(C.COLUMN_NAME) + ' IS NULL THEN 1 ELSE 0 END) * 100.00

/@TotalCount AS decimal(10,2)) AS [' + C.COLUMN_NAME + ' NULL %]

'

FROM INFORMATION_SCHEMA.COLUMNS C

WHERE TABLE_NAME = 'Product'

AND TABLE_SCHEMA = 'Production'

ORDER BY C.ORDINAL_POSITION

FOR XML PATH('')

,type

).value('.', 'nvarchar(max)'), 1, 2, '')

SET @SQL = 'SET @TotalCount = NULLIF(@TotalCount,0)

SELECT ' + @SQL + '

FROM [AdventureWorks].Production.Product'

PRINT @SQL

EXECUTE SP_EXECUTESQL @SQL

,N'@TotalCount decimal(10,2)'

,@TotalCount

Explanation of Naomi's solution:

To get the percent of null values in a column we use this formula

sum(case when ColumnName IS NULL then 1 else 0 end) * 100.00 / @TotalCount

where @TotalCount is the number of rows in a table.

We need to generate the above statement dynamically, so we use INFORMATION_SCHEMA.Columns view to do so.

I use FOR XML PATH('') approach to concatenate information into one variable

I use sp_executeSQL system stored procedure to run the dynamic SQL in order to supply a variable. Alternatively, of course, I could have declared and calculated @TotalCount inside the dynamic SQL.

Solution #3 - Provided by Jingyang Li

Code Snippet

SET NOCOUNT ON;

DECLARE @TABLE_NAMENVARCHAR(50) = 'Product',

@TABLE_SCHEMA NVARCHAR(50) = 'Production',

@sql NVARCHAR(4000),

@col NVARCHAR(50)

CREATE TABLE #t

(

id INT IDENTITY(1, 1),

ColName VARCHAR(50),

[NULL%] DECIMAL(8, 2)

)

DECLARE c_cursor CURSOR FOR

SELECT column_Name

FROM [INFORMATION_SCHEMA].[COLUMNS]

WHERE TABLE_NAME = @TABLE_NAME

AND TABLE_SCHEMA = @TABLE_SCHEMA

AND IS_Nullable = 'YES'

OPEN c_cursor;

FETCH NEXT FROM c_cursor INTO @col;

WHILE ( @@FETCH_STATUS = 0 )

BEGIN

SET @sql = N' INSERT INTO #t (ColName, [NULL%])

SELECT TOP 1 ''' + @col

+ ''' , (COUNT(*) over() *1.0- COUNT(' + @col

+ ') OVER()*1.0)/COUNT(*) Over() *100.0 as [NULL%] FROM '

+ Quotename(@TABLE_SCHEMA) + '.'

+ Quotename( @TABLE_NAME)

-- print @sql

EXEC (@sql);

FETCH NEXT FROM c_cursor INTO @col;

END

CLOSE c_cursor;

DEALLOCATE c_cursor;

SELECT ColName, [NULL%] FROM#t

DROP TABLE #t

Explanation of Jingyang's solution:

Jingyang initially sent a solution very similar to that of Naomi, so in order to provide an additional technique, he also provided the CURSOR based solution above. The cursor is built by leveraging the INFORMATION_SCHEMA table as used in all three solutions, but Jingyang uses a nice optimization technique of only querying for Is_Nullable = 'YES' to only obtain NULLable fields. In the CURSOR loop, the solution relies on some creative use of the OVER() function to generate the percentage and results, which are displayed in a way that is very readable and concise.

Conclusion:

As you can see, all three of the above solutions provide the intended result we were looking for, but do so in creatively different styles, this time including our first CURSOR based solution. I hope that you are able to learn a lot by trying out the problem yourself and then reading through the additional solutions.

Special thanks to Jens, Naomi, and Jingyang for their valuable forums contribution and for contributing to this series!

Hope that helps,

Sam Lester (MSFT)

Contributor Bios:

Jens Suessmeyer is an MCS Consultant in Germany and has been working with SQL Server since version 6.0. He is very active in the forums and in his blog (https://blogs.msdn.com/b/jenss), providing outstanding real-world solutions and insight.

Naomi Nosonovsky, Senior Software Developer, has more than 15 years of enterprise experience in analysis, design, development and implementation of high-performance client/server data management solutions. She is a Personality of the Year for 2008, 2009, 2010 and 2011 at www.universalthread.com in .NET, SQL Server & VFP categories. She is also an All Star contributor/MCC Community Contributor at forums.asp.net and MSDN T-SQL forum. She also actively blogs at https://blogs.lessthandot.com/index.php/All/?disp=authdir&author=218 and https://beyondrelational.com/members/naomi/modules/2/posts.aspx?Tab=16.

Jingyang Li has been working with SQL Server since he began his IT career as an ASP.NET developer in 2001. He enjoys working with T-SQL and recently took a full time job as a DBA. He has been participating in the Microsoft forums under the alias Limno.

Comments

Anonymous

September 23, 2012

A CLR function could prove to be more flexible for such a task, allowing to address a wider range of Data Profiling problems. Here is an example based on ExecuteScalarToInt CLR function: SELECT s.name [schema name] , T.name [table name] , C.name [column name] , dbo.ExecuteScalarToInt('SELECT SUM(CASE WHEN ' + c.name + ' IS NULL THEN 1 ELSE 0 END) * 100.00/count(*) Percentage FROM ' + S.name + '.' + T.name) Percentage FROM sys.columns C JOIN sys.tables T ON C.object_id = T.object_id JOIN sys.schemas S ON T.schema_id = S.schema_id Function's definition can be found in Data Profiling Using CLR Functions blog post (sql-troubles.blogspot.de/.../data-profiling-part-ii-using-clr.html). The query can be easily modified to use INFORMATION_SCHEMA.COLUMNS view.Anonymous

September 24, 2012

Here is the query revisited, this time based on INFORMATION_SCHEMA.COLUMNS view: SELECT TABLE_SCHEMA , TABLE_NAME , COLUMN_NAME , dbo.ExecuteScalarToInt('SELECT IsNull(SUM(CASE WHEN ' + QUOTENAME(COLUMN_NAME) + ' IS NULL THEN 1 ELSE 0 END) * 100.00/count(), 0) Percentage FROM ' + QUOTENAME(TABLE_SCHEMA) + '.' + QUOTENAME(TABLE_NAME)) Percentage FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_SCHEMA = 'Production' AND TABLE_NAME = 'Product' If decimals are needed, the ExecuteScalarToDecimal CLR function can be used (its definition is available in the above mentioned post): , dbo.ExecuteScalarToDecimal('SELECT IsNull(SUM(CASE WHEN ' + QUOTENAME(COLUMN_NAME) + ' IS NULL THEN 1 ELSE 0 END) * 100.00/count(), 0) Percentage FROM ' + QUOTENAME(TABLE_SCHEMA) + '.' + QUOTENAME(TABLE_NAME)) PercentageAnonymous

September 24, 2012

Interesting, do you think using CLR will give better performance here? Can you run a quick performance test? Thanks.Anonymous

September 25, 2012

I run all the above queries, including the one based on CLR function and for the given table, and all need about a second. I wanted to see their performance for the whole database. At a first glimpse, the third solution seemed the easiest to modify for this purpose. On the first run, the solution based on CLR function solution took 51 seconds, while the third solution took 58 seconds. On a second run the CLR function took 25 seconds, while the third took 56 seconds. Similar results as in the second run after several other runs. The results might be different on other machines, though I think the performance of CLR function is comparable at least with one of the three above solutions. The performance of a solution is quite important, though often is needed to consider also its flexibility considered as a dimension of maintenance, reuse, generalization, readability, etc.Anonymous

April 09, 2013

Out of three approach,i would vote for "Jingyang 's approach" because the real catch was using IS_NULLABLE = "YES",when we are trying to get NULL percentage in a column.Anonymous

August 18, 2016

In my query I am using cursor for getting the null counts. Count(*),Count(@ColumnNM)But I am getting the same count for both. The Count(@ColumnNm) is giving the count for entire column and not excluding the Nulls.Any help will be appreciated.- Anonymous

August 20, 2016

If you use count(@ColumnName) where @ColumnName is a variable, it is the same as COUNT(1) and it will be the same as count(*), e.g. it will count all rows in a table. You need to construct a dynamic SQL instead.

- Anonymous