ARKit Namespace

Important

Some information relates to prerelease product that may be substantially modified before it’s released. Microsoft makes no warranties, express or implied, with respect to the information provided here.

The ARKit namespace provides support for augmented-reality sessions, including both high- and low-level APIs for projecting computer-generated imagery into a video stream.

Classes

| Class | Description |
|---|---|
| ARAnchor | A position, orientation, and scale located in the real world, to which augmented-reality objects can be attached. |
| ARBlendShapeLocationOptions | A DictionaryContainer that defines the features available in ARBlendShapeLocation. |
| ARCamera | Information about the location and orientation of the camera that captured an augmented-reality frame. |
| ARConfiguration | Configuration information for augmented-reality sessions. |
| ARDirectionalLightEstimate | Estimates real-world illumination falling on a face. |
| AREnvironmentProbeAnchor | Source for environment-based lighting. |
| ARErrorCodeExtensions | Extension methods for the ARKit.ARErrorCode enumeration. |
| ARFaceAnchor | An ARAnchor that locates a detected face in the AR session's world coordinates. |
| ARFaceGeometry | A mesh that represents a recognized face, including shape and expression. |
| ARFaceTrackingConfiguration | An ARConfiguration for recognizing and tracking faces. |
| ARFrame | A frame in an augmented-reality session. |
| ARHitTestResult | A result generated by the HitTest(CGPoint, ARHitTestResultType) method. |
| ARImageAnchor | An ARAnchor that tracks an image detected in the real world. |
| ARImageTrackingConfiguration | An ARConfiguration subclass that tracks known reference images in the real world. |
| ARLightEstimate | An estimate of the real-world lighting environment. |
| ARObjectAnchor | An ARAnchor subclass that tracks a recognized real-world 3D object. |
| ARObjectScanningConfiguration | A resource-intensive ARConfiguration used during development to create ARReferenceObject data. |
| AROrientationTrackingConfiguration | An ARConfiguration that tracks only the device orientation and uses the device's rear-facing camera. |
| ARPlaneAnchor | A subclass of ARAnchor used to represent real-world flat surfaces. |
| ARPlaneGeometry | Geometry representing a plane detected in the real world. |
| ARPointCloud | A set of 3D points, each marking a location that image processing has identified as a fixed point on a real-world physical surface. |
| ARReferenceImage | A pre-processed image to be recognized in the real world. |
| ARReferenceObject | Digital representation of a 3D object to be detected in the real world. |
| ARSCNDebugOptions | Visualization options for use with the DebugOptions property of ARSCNView. |
| ARSCNFaceGeometry | SceneKit geometry that represents a face. |
| ARSCNPlaneGeometry | SceneKit geometry that represents the shape of a detected plane. |
| ARSCNView | A subclass of SCNView that supports augmented-reality content. |
| ARSCNView.ARSCNViewAppearance | Appearance class for objects of type ARSCNView. |
| ARSCNViewDelegate | Delegate object for ARSCNView objects. |
| ARSCNViewDelegate_Extensions | Extension methods to the IARSCNViewDelegate interface to support all the methods from the ARSCNViewDelegate protocol. |
| ARSession | Manages the camera capture, motion processing, and image analysis necessary to create a mixed-reality experience. |
| ARSessionDelegate | Delegate object for the ARSession object, allowing the developer to respond to events relating to the augmented-reality session. |
| ARSessionDelegate_Extensions | Extension methods to the IARSessionDelegate interface to support all the methods from the ARSessionDelegate protocol. |
| ARSessionObserver_Extensions | Optional methods of the IARSessionObserver interface. |
| ARSKView | A subclass of SKView that places SpriteKit objects in an augmented-reality session. |
| ARSKView.ARSKViewAppearance | Appearance class for objects of type ARSKView. |
| ARSKViewDelegate | Delegate object allowing the developer to respond to events relating to an ARSKView. |
| ARSKViewDelegate_Extensions | Extension methods to the IARSKViewDelegate interface to support all the methods from the ARSKViewDelegate protocol. |
| ARVideoFormat | Summary information about the video feed used in an AR session. |
| ARWorldMap | A serializable and shareable combination of real-world spatial data points and mixed-reality anchors. |
| ARWorldTrackingConfiguration | Configuration for a session that tracks the device position and orientation, and optionally detects horizontal and vertical surfaces. |

Interfaces

| Interface | Description |
|---|---|
| IARAnchorCopying | |
| IARSCNViewDelegate | Interface representing the required methods (if any) of the protocol ARSCNViewDelegate. |
| IARSessionDelegate | Interface representing the required methods (if any) of the protocol ARSessionDelegate. |
| IARSessionObserver | Interface defining methods that respond to events in an ARSession. |
| IARSKViewDelegate | Interface representing the required methods (if any) of the protocol ARSKViewDelegate. |
| IARTrackable | Interface for real-world objects that can be tracked by ARKit. |

Enums

| Enumeration | Description |
|---|---|
| AREnvironmentTexturing | Enumerates environmental texturing strategies used with AREnvironmentProbeAnchor objects. |
| ARErrorCode | Enumerates causes for an ARSession failure. |
| ARHitTestResultType | Enumerates the kinds of objects detected by the HitTest(CGPoint, ARHitTestResultType) method. |
| ARPlaneAnchorAlignment | The orientation of an ARPlaneAnchor (horizontal or vertical). |
| ARPlaneClassification | |
| ARPlaneClassificationStatus | |
| ARPlaneDetection | Enumerates the valid orientations for detected planes (horizontal and vertical). |
| ARSessionRunOptions | Enumerates options in calls to Run(ARConfiguration, ARSessionRunOptions). |
| ARTrackingState | Enumerates the quality of real-world tracking in an augmented-reality ARSession. |
| ARTrackingStateReason | Enumerates the reasons that tracking quality is Limited. |
| ARWorldAlignment | Enumerates options for how the world coordinate system is created. |
| ARWorldMappingStatus | Enumerates the states of a world-mapping session. |

Remarks

ARKit was added in iOS 11 and provides mixed-reality sessions that combine camera input with computer-generated imagery that appears "attached" to the real world.

ARKit is only available on devices with an A9 or more powerful processor: essentially the iPhone 6s and newer, iPad Pro models, and iPads released in 2017 or later.

ARKit apps do not run in the Simulator.
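Because ARKit depends on specific hardware and cannot run in the Simulator, an app can check support at run time before creating a session. The following is a minimal sketch, assuming a Xamarin.iOS project; the `ARSupport` class and its method names are hypothetical, and it relies on the static `IsSupported` property that the ARKit configuration classes bind from the Objective-C `isSupported` class property.

```csharp
using ARKit;

// Hypothetical helper for checking ARKit support before starting a session.
public static class ARSupport
{
    // False on devices that cannot run world tracking (for example, pre-A9 hardware).
    public static bool CanRunWorldTracking() => ARWorldTrackingConfiguration.IsSupported;

    // Face tracking has stricter hardware requirements than world tracking,
    // so it is checked separately.
    public static bool CanRunFaceTracking() => ARFaceTrackingConfiguration.IsSupported;
}
```

A view controller could call `ARSupport.CanRunWorldTracking()` in `ViewDidLoad` and show an explanatory message instead of starting the session when it returns false.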

Developers have three choices for rendering AR scenes:

| Class | Use-case |
|---|---|
| ARSCNView | Combine SceneKit 3D geometry with video |
| ARSKView | Combine SpriteKit 2D imagery with video |
| Export "renderer:updateAtTime:" from an IARSCNViewDelegate | Allows complete custom rendering |
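The third option in the table, exporting the `renderer:updateAtTime:` selector, can be sketched as follows. This is an illustration, assuming Xamarin.iOS: the class name `CustomRenderingDelegate` and the method name `RendererUpdateAtTime` are hypothetical; only the exported selector string comes from the table above. Implementing `IARSCNViewDelegate` on a plain `NSObject` keeps the example independent of any optional members the `ARSCNViewDelegate` base class may already bind.

```csharp
using ARKit;
using Foundation;
using SceneKit;

// Hypothetical delegate that receives SceneKit's per-frame update callback.
public class CustomRenderingDelegate : NSObject, IARSCNViewDelegate
{
    // renderer:updateAtTime: is an optional protocol method, so the
    // Objective-C selector is exported explicitly. The C# method name is arbitrary.
    [Export("renderer:updateAtTime:")]
    public void RendererUpdateAtTime(ISCNSceneRenderer renderer, double timeInSeconds)
    {
        // Invoked once per rendered frame; custom per-frame drawing
        // or scene updates would go here.
    }
}
```

An instance would then be assigned to the view's `Delegate` property before the session starts.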

ARKit coordinate systems and transforms

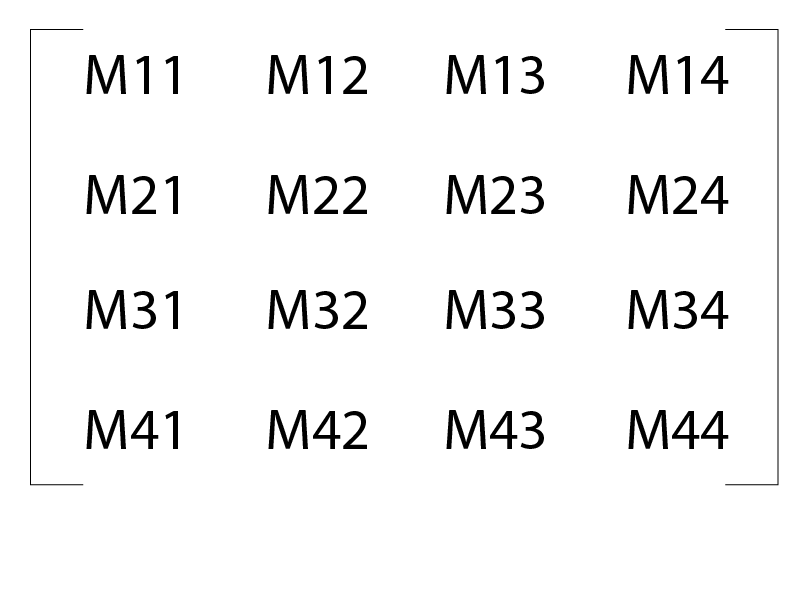

ARKit uses device motion and "visual odometry" to create a model of the device's camera and real-world "feature points" in relation to a virtual coordinate system. The coordinate system uses meters as its units. The virtual coordinate system has an origin calculated to be the camera's location at the time that the ARSession was started. Location and orientation within ARKit are primarily represented using NMatrix4 "native matrices". In the case of ARKit, these are column-major transforms:

Position (translation) is stored in M14, M24, and M34, and the upper-left 3x3 submatrix (M11 through M33) holds the rotation.

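```csharp
using OpenTK;   // NMatrix4 (Xamarin.iOS)
using SceneKit; // SCNVector3

// Extracts the translation (position) from a column-major ARKit transform.
SCNVector3 Position(NMatrix4 m) => new SCNVector3(m.M14, m.M24, m.M34);
```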
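Because translation lives in the fourth column, the distance between any two ARKit transforms, such as the camera and an anchor, follows directly from those three fields. This is a minimal sketch, assuming Xamarin.iOS, where `ARCamera.Transform` and `ARAnchor.Transform` are `NMatrix4` values; the `TransformMath` class is hypothetical. Since world coordinates are in meters, the result is in meters.

```csharp
using System;
using ARKit;
using OpenTK; // NMatrix4 (Xamarin.iOS)

// Hypothetical helpers for working with ARKit's column-major transforms.
public static class TransformMath
{
    // Straight-line distance between the origins of two transforms,
    // using the translation column (M14, M24, M34).
    public static float DistanceBetween(NMatrix4 a, NMatrix4 b)
    {
        float dx = a.M14 - b.M14;
        float dy = a.M24 - b.M24;
        float dz = a.M34 - b.M34;
        return (float)Math.Sqrt(dx * dx + dy * dy + dz * dz);
    }

    // Distance, in meters, from the camera that captured `frame` to `anchor`.
    public static float DistanceFromCamera(ARFrame frame, ARAnchor anchor) =>
        DistanceBetween(frame.Camera.Transform, anchor.Transform);
}
```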

Initialization

The ARSession object manages the overall augmented-reality process. Its Run method takes an ARConfiguration and an ARSessionRunOptions value, as shown below:

```csharp
using ARKit;

ARSCNView SceneView = ... // initialized in Storyboard, `ViewDidLoad`, etc.

// Create a session configuration
var configuration = new ARWorldTrackingConfiguration {
    PlaneDetection = ARPlaneDetection.Horizontal,
    LightEstimationEnabled = true
};

// Run the view's session
SceneView.Session.Run(configuration, ARSessionRunOptions.ResetTracking);
```
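In a typical app the session is started when its view appears and paused when the view goes away, so the camera and motion sensors are not left running. The following sketch assumes Xamarin.iOS; the `ARViewController` class is hypothetical, and the view is created in code rather than a Storyboard. It also shows that `ARPlaneDetection` and `ARSessionRunOptions` values are flags that can be combined.

```csharp
using ARKit;
using UIKit;

// Hypothetical view controller that owns an ARSCNView and its session lifecycle.
public class ARViewController : UIViewController
{
    ARSCNView SceneView;

    public override void ViewDidLoad()
    {
        base.ViewDidLoad();
        SceneView = new ARSCNView { Frame = View.Bounds };
        View.AddSubview(SceneView);
    }

    public override void ViewWillAppear(bool animated)
    {
        base.ViewWillAppear(animated);

        var configuration = new ARWorldTrackingConfiguration {
            // Vertical plane detection requires iOS 11.3 or later.
            PlaneDetection = ARPlaneDetection.Horizontal | ARPlaneDetection.Vertical,
            LightEstimationEnabled = true
        };

        // Reset the world origin and discard anchors left over from a previous run.
        SceneView.Session.Run(configuration,
            ARSessionRunOptions.ResetTracking | ARSessionRunOptions.RemoveExistingAnchors);
    }

    public override void ViewWillDisappear(bool animated)
    {
        base.ViewWillDisappear(animated);
        // Stop camera capture and motion processing while the view is off screen.
        SceneView.Session.Pause();
    }
}
```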

Once an ARSession is running, its CurrentFrame property holds the active ARFrame. Because the system attempts to run ARKit at 60 frames per second, developers who reference CurrentFrame must be sure to Dispose the frame as soon as they are finished with it.
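A `using` block is a convenient way to guarantee that disposal. The following is a minimal sketch, assuming Xamarin.iOS; the `FrameInspector` class is hypothetical. It reads the camera's tracking state and releases the frame immediately, since ARKit can stop delivering new camera frames if too many ARFrame objects are kept alive.

```csharp
using ARKit;

// Hypothetical helper that samples the current frame without retaining it.
public static class FrameInspector
{
    public static ARTrackingState? GetTrackingState(ARSession session)
    {
        // Dispose releases the native ARFrame as soon as the block exits.
        // A null resource is allowed in a using statement.
        using (var frame = session.CurrentFrame)
        {
            if (frame == null)
                return null; // No frame has been captured yet.

            return frame.Camera.TrackingState;
        }
    }
}
```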

The system tracks high-contrast "feature points" in the camera's view. These are available to the developer as an ARPointCloud object that can be read from the ARFrame's RawFeaturePoints property. Generally, however, developers rely on the system to identify higher-level features, such as planes or human faces. When the system identifies these higher-level features, it adds ARAnchor objects whose Transform properties are in the world coordinate system. Developers can use the DidAddNode, DidUpdateNode, and DidRemoveNode methods of ARSCNViewDelegate to react to such events and to attach their custom geometry to real-world features.
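For example, an ARSCNViewDelegate subclass can draw a translucent rectangle over each detected plane. This is a sketch, assuming Xamarin.iOS; the `PlaneVisualizerDelegate` class, the color, and the opacity are illustrative choices. The `node` passed to `DidAddNode` is created by ARSCNView and kept aligned with the anchor's transform, so child geometry only needs to be positioned relative to the anchor.

```csharp
using System;
using ARKit;
using SceneKit;
using UIKit;

// Hypothetical delegate that visualizes detected planes.
public class PlaneVisualizerDelegate : ARSCNViewDelegate
{
    public override void DidAddNode(ISCNSceneRenderer renderer, SCNNode node, ARAnchor anchor)
    {
        // Only plane anchors are visualized; other anchor types are ignored.
        if (!(anchor is ARPlaneAnchor planeAnchor))
            return;

        // Extent holds the estimated size of the plane in meters (X and Z).
        var plane = SCNPlane.Create(planeAnchor.Extent.X, planeAnchor.Extent.Z);
        plane.FirstMaterial.Diffuse.Contents = UIColor.Blue.ColorWithAlpha(0.4f);

        var planeNode = SCNNode.FromGeometry(plane);
        // Center the geometry on the plane, which may be offset from the anchor origin.
        planeNode.Position = new SCNVector3(planeAnchor.Center.X, 0, planeAnchor.Center.Z);
        // SCNPlane stands upright in its local space; rotate it into the anchor's X-Z plane.
        planeNode.EulerAngles = new SCNVector3((float)(-Math.PI / 2), 0, 0);

        node.AddChildNode(planeNode);
    }
}
```

A fuller implementation would also override DidUpdateNode to resize the plane as ARKit refines the anchor's Extent, and would assign the delegate to the ARSCNView before running the session.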

The augmented-reality coordinates are maintained using visual odometry and the device's motion manager. In informal testing, tracking appears stable over distances of at least tens of meters within a continuous session.