Applies to: SQL Server on Linux

This article describes the characteristics of availability groups (AGs) in Linux-based SQL Server installations. It also covers the differences between Linux-based AGs and AGs based on a Windows Server failover cluster (WSFC). For the basics of AGs, see What is an Always On availability group?, because AGs work the same way on Windows and Linux except for the WSFC.

Note:

In availability groups that don't use Windows Server Failover Clustering (WSFC), such as read-scale availability groups or availability groups on Linux, the cluster-related columns in the availability group DMVs might display data about an internal default cluster. These columns are for internal use only and can be ignored.

At a high level, availability groups in SQL Server on Linux are the same as in WSFC-based implementations. All the limitations and features are the same, with some exceptions. The main differences include:

- Microsoft Distributed Transaction Coordinator (DTC) is supported on Linux starting with SQL Server 2017 CU 16. However, DTC isn't yet supported with availability groups on Linux. If your applications require distributed transactions and need an AG, deploy SQL Server on Windows.

- Linux-based deployments that require high availability use Pacemaker for clustering instead of a WSFC.

- Unlike most AG configurations on Windows (the workgroup cluster scenario being the exception), Pacemaker never requires Active Directory Domain Services (AD DS).

- The way an AG is failed over from one node to another differs between Linux and Windows.

- Certain settings, such as required_synchronized_secondaries_to_commit, can only be changed through Pacemaker on Linux, whereas a WSFC-based installation uses Transact-SQL.

Number of replicas and cluster nodes

An AG in SQL Server Standard edition can have two replicas in total: one primary and one secondary that can be used only for availability purposes. The secondary can't be used for anything else, such as read queries. An AG in SQL Server Enterprise edition can have up to nine replicas in total: one primary and up to eight secondaries, of which up to three (including the primary) can be synchronous. If you use an underlying cluster, there can be a maximum of 16 nodes in total when Corosync is involved. An availability group can span at most nine of the 16 nodes with SQL Server Enterprise edition, and two with SQL Server Standard edition.
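The limits in the paragraph above can be written down as a small check. The following Python sketch is illustrative only: the function `check_topology` and its parameters are hypothetical helpers, not part of SQL Server or Pacemaker. Only the numeric limits come from the text.

```python
# Limits quoted in the text above; everything else is a hypothetical helper.
MAX_REPLICAS = {"standard": 2, "enterprise": 9}
MAX_SYNCHRONOUS_REPLICAS = 3   # Enterprise edition, primary included
MAX_CLUSTER_NODES = 16         # underlying cluster with Corosync


def check_topology(edition, replicas, synchronous_replicas, cluster_nodes):
    """Return a list of violated limits; an empty list means the layout fits."""
    problems = []
    max_replicas = MAX_REPLICAS[edition.lower()]
    if replicas > max_replicas:
        problems.append(f"{edition} edition allows at most {max_replicas} replicas")
    # A Standard edition AG has only two replicas, so cap the synchronous limit too.
    if synchronous_replicas > min(MAX_SYNCHRONOUS_REPLICAS, max_replicas):
        problems.append("too many synchronous replicas")
    if cluster_nodes > MAX_CLUSTER_NODES:
        problems.append(f"a cluster can have at most {MAX_CLUSTER_NODES} nodes")
    return problems
```

For example, `check_topology("Enterprise", 9, 3, 16)` returns an empty list, while `check_topology("Standard", 3, 2, 3)` reports that Standard edition allows at most two replicas.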

A two-replica configuration that needs automatic failover to the other replica requires a configuration-only replica, as described in Configuration-only replica and quorum. Configuration-only replicas were introduced in SQL Server 2017 (14.x) Cumulative Update 1 (CU 1), so that is the minimum version to deploy for this configuration.

If Pacemaker is used, it must be properly configured so that it stays up and running. That means quorum and fencing of a failed node must be implemented correctly from a Pacemaker perspective, in addition to any SQL Server requirements such as a configuration-only replica.

Readable secondary replicas are supported only in SQL Server Enterprise edition.

Cluster type and failover mode

SQL Server 2017 (14.x) introduced a cluster type for AGs. For Linux, there are two valid values: External and None. A cluster type of External means that Pacemaker is used underneath the AG. Using External as the cluster type requires that the failover mode is also set to External (also new in SQL Server 2017 (14.x)). Automatic failover is supported, but unlike with a WSFC, the failover mode is set to External, not Automatic, when Pacemaker is used. Also unlike with a WSFC, the Pacemaker portion of the AG is created after the AG is configured.

A cluster type of None means that Pacemaker isn't required and the AG doesn't use it. Even on servers that have Pacemaker configured, if an AG is configured with a cluster type of None, Pacemaker doesn't see or manage that AG. A cluster type of None supports only manual failover from a primary to a secondary replica. An AG created with None is primarily intended for upgrades and read-scale. Although it can work in scenarios such as disaster recovery or local availability where automatic failover isn't needed, that use isn't recommended. Configuring a listener is also more complex without Pacemaker.
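The two Linux cluster types described above can be summarized as a lookup table. This is a minimal Python sketch for illustration; the dictionary, its keys, and the function name are hypothetical, and the values only restate the text.

```python
# Hypothetical summary table; the values restate the two paragraphs above.
LINUX_CLUSTER_TYPES = {
    "EXTERNAL": {
        "pacemaker_manages_ag": True,
        "failover_mode": "EXTERNAL",   # must match the cluster type
        "automatic_failover": True,
    },
    "NONE": {
        "pacemaker_manages_ag": False,
        "failover_mode": "MANUAL",     # only manual failover is supported
        "automatic_failover": False,
    },
}


def cluster_type_properties(cluster_type):
    """Look up the properties of a cluster type that is valid on Linux."""
    key = cluster_type.strip().upper()
    if key not in LINUX_CLUSTER_TYPES:
        raise ValueError(f"{cluster_type!r} is not a valid cluster type on Linux")
    return LINUX_CLUSTER_TYPES[key]
```

Passing a value such as `"WSFC"` raises `ValueError`, because that cluster type applies only to Windows.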

The cluster type is stored in the SQL Server dynamic management view (DMV) sys.availability_groups, in the columns cluster_type and cluster_type_desc.

required_synchronized_secondaries_to_commit

SQL Server 2017 (14.x) introduced an AG setting called required_synchronized_secondaries_to_commit. It tells the AG how many secondary replicas must be in lockstep with the primary. This enables features such as automatic failover (only when integrated with Pacemaker with a cluster type of External), and controls behavior such as the availability of the primary when the right number of secondary replicas is online or offline. For more information about how this works, see High availability and data protection for availability group configurations. The required_synchronized_secondaries_to_commit value is set by default and maintained by Pacemaker and SQL Server. You can override this value manually.

The combination of required_synchronized_secondaries_to_commit and the new sequence number (which is stored in sys.availability_groups) tells Pacemaker and SQL Server whether, for example, automatic failover can happen. In that case, a secondary replica has the same sequence number as the primary, which means it is up to date with the latest configuration information.

required_synchronized_secondaries_to_commit can be set to one of three values: 0, 1, or 2. They control what happens when a replica becomes unavailable. The numbers correspond to the number of secondary replicas that must be synchronized with the primary. On Linux, the behavior is as follows:

| Setting | Description |
|---|---|
| 0 | Secondary replicas don't need to be in a synchronized state with the primary. However, if the secondaries aren't synchronized, there's no automatic failover. |
| 1 | One secondary replica must be in a synchronized state with the primary; automatic failover is possible. The primary database is unavailable until a synchronous secondary replica is available. |
| 2 | Both secondary replicas in an AG configuration with three or more nodes must be synchronized with the primary; automatic failover is possible. |

required_synchronized_secondaries_to_commit controls not only failover behavior with synchronous replicas, but also data loss. With a value of 1 or 2, a secondary replica must always be synchronized to ensure data redundancy. That means no data loss.
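The effect of the three values can be approximated with a simplified model. The Python sketch below is an illustration under stated assumptions, not SQL Server's actual logic: the function names are hypothetical, and the model looks only at the number of synchronized secondaries, ignoring the other conditions for automatic failover (such as the cluster type).

```python
VALID_VALUES = (0, 1, 2)


def primary_accepts_transactions(required, synchronized_secondaries):
    """True when enough secondaries are synchronized for the primary to stay available."""
    if required not in VALID_VALUES:
        raise ValueError("required_synchronized_secondaries_to_commit must be 0, 1, or 2")
    return synchronized_secondaries >= required


def synchronized_failover_target_exists(synchronized_secondaries):
    """Automatic failover needs at least one synchronized secondary to fail over to."""
    return synchronized_secondaries >= 1
```

With a value of 0 and no synchronized secondary, the primary stays available but there is no failover target. With a value of 1 and no synchronized secondary, the primary is unavailable, which is the trade-off that protects against data loss.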

To change the value of required_synchronized_secondaries_to_commit, use the following syntax:

Note:

Changing the value causes the resource to restart, which means a brief outage. The only way to avoid this is to temporarily set the resource so that it isn't managed by the cluster.

Red Hat Enterprise Linux (RHEL) and Ubuntu

sudo pcs resource update <AGResourceName> required_synchronized_secondaries_to_commit=<value>

SUSE Linux Enterprise Server (SLES)

sudo crm resource param ms-<AGResourceName> set required_synchronized_secondaries_to_commit <value>

Note:

Starting with SQL Server 2025 (17.x), SUSE Linux Enterprise Server (SLES) isn't supported.

In this example, <AGResourceName> is the name of the resource configured for the AG, and <value> is 0, 1, or 2. To set it back to the default, where Pacemaker manages the parameter, run the same command with no value.
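If these commands are generated by a script, it can help to validate the value before anything is run. The following Python sketch only builds the argument list for the two commands shown above and never executes it. The function name and the resource name `ag_cluster` are hypothetical, and the no-value forms are a literal reading of "the same command with no value", so treat them as an assumption to verify on your system.

```python
VALID_VALUES = (0, 1, 2)
PARAMETER = "required_synchronized_secondaries_to_commit"


def build_update_command(tool, ag_resource_name, value=None):
    """Build the argument list for the pcs or crm command shown above.

    value=None omits the value, which per the text hands the parameter
    back to Pacemaker. The command is only built, never executed.
    """
    if value is not None and value not in VALID_VALUES:
        raise ValueError(f"{PARAMETER} must be 0, 1, or 2")
    text = "" if value is None else str(value)
    if tool == "pcs":   # RHEL and Ubuntu
        return ["sudo", "pcs", "resource", "update",
                ag_resource_name, f"{PARAMETER}={text}"]
    if tool == "crm":   # SLES
        command = ["sudo", "crm", "resource", "param",
                   f"ms-{ag_resource_name}", "set", PARAMETER]
        return command if value is None else command + [text]
    raise ValueError(f"unknown tool: {tool!r}")
```

For example, `build_update_command("pcs", "ag_cluster", 1)` yields the RHEL and Ubuntu form with `required_synchronized_secondaries_to_commit=1`. The resulting list could be passed to `subprocess.run(..., check=True)`, keeping in mind that the change restarts the resource.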

Automatic failover of an AG is possible when the following conditions are met:

- The primary and the secondary replica are set to synchronous data movement.

- The secondary has a state of synchronized (not synchronizing), which means the two are at the same data point.

- The cluster type is set to External. Automatic failover isn't possible with a cluster type of None.

- The sequence_number of the secondary replica that is to become the primary is the highest sequence number. In other words, the secondary replica's sequence_number matches that of the original primary replica.
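The four conditions above can be expressed as a single predicate. This Python sketch is illustrative: the `Replica` class, its field names, and the string constants are hypothetical stand-ins for the replica state, not an API exposed by SQL Server or Pacemaker.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    availability_mode: str   # e.g. "SYNCHRONOUS_COMMIT" or "ASYNCHRONOUS_COMMIT"
    sync_state: str          # e.g. "SYNCHRONIZED" or "SYNCHRONIZING"
    sequence_number: int


def can_fail_over_automatically(primary, secondary, cluster_type):
    """Check the four conditions listed above for one candidate secondary."""
    return (
        # 1. Both replicas use synchronous data movement.
        primary.availability_mode == "SYNCHRONOUS_COMMIT"
        and secondary.availability_mode == "SYNCHRONOUS_COMMIT"
        # 2. The secondary is synchronized, not merely synchronizing.
        and secondary.sync_state == "SYNCHRONIZED"
        # 3. The cluster type is External.
        and cluster_type.upper() == "EXTERNAL"
        # 4. The secondary's sequence number matches the primary's.
        and secondary.sequence_number == primary.sequence_number
    )
```

A secondary that is still synchronizing, or an AG with a cluster type of None, makes the predicate return `False`.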

If these conditions are met and the server hosting the primary replica fails, the AG transfers ownership to a synchronous replica. The behavior of synchronous replicas (of which there can be three in total: one primary and two secondary replicas) can be controlled further with required_synchronized_secondaries_to_commit. This works with AGs on both Windows and Linux, but it is configured differently. On Linux, the value is configured automatically by the cluster on the AG resource itself.

Configuration-only replica and quorum

The configuration-only replica was introduced to address limitations in quorum handling with Pacemaker, especially when fencing a failed node. A two-node configuration alone doesn't work for an AG. For a failover cluster instance (FCI), the quorum mechanisms provided by Pacemaker can be sufficient, because all FCI failover arbitration happens at the cluster layer. For an AG, arbitration on Linux happens in SQL Server, where all the metadata is stored. This is where the configuration-only replica comes into play.

Without anything else, a third node and at least one synchronized replica would be required. The configuration-only replica stores the AG configuration in the master database, the same as the other replicas in the AG. The configuration-only replica doesn't host the user databases that participate in the AG. The configuration data is sent synchronously from the primary. This configuration data is then used during failovers, whether automatic or manual.

For an AG to maintain quorum and support automatic failover with a cluster type of External, it must either:

- Have three synchronous replicas (SQL Server Enterprise edition only); or

- Have two replicas (primary and secondary) and a configuration-only replica.
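The two options above can be sketched as a configuration check. This Python example is a minimal illustration: the function and parameter names are hypothetical, and it assumes that the two replicas in the second option are both synchronous.

```python
def supports_automatic_failover(cluster_type, edition,
                                synchronous_replicas,
                                has_configuration_only_replica):
    """Return True if the layout matches one of the two options above."""
    if cluster_type.upper() != "EXTERNAL":
        return False   # automatic failover needs Pacemaker underneath the AG
    if synchronous_replicas == 3:
        return edition.lower() == "enterprise"   # option 1: Enterprise only
    # Option 2: primary + secondary + configuration-only replica.
    return synchronous_replicas == 2 and has_configuration_only_replica
```

A two-replica Standard edition AG therefore qualifies only when a configuration-only replica is added.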

Manual failover can happen whether an AG configuration uses a cluster type of External or None. Although a configuration-only replica can be configured with an AG that has a cluster type of None, doing so isn't recommended because it complicates the deployment. For those configurations, manually set required_synchronized_secondaries_to_commit to a value of at least 1, so that there is at least one synchronized replica.

A configuration-only replica can be hosted on any edition of SQL Server, including SQL Server Express. This minimizes licensing costs and ensures that it works with AGs in SQL Server Standard edition. It also means that the required third server only needs to meet the minimum specification for SQL Server, because it doesn't receive user transaction traffic for the AG.

When a configuration-only replica is used, the AG behaves as follows:

- By default, required_synchronized_secondaries_to_commit is set to 0. You can manually change it to 1 if necessary.

- If the primary replica fails and required_synchronized_secondaries_to_commit is 0, the secondary replica becomes the new primary and is available for both reading and writing. If the value is 1, automatic failover occurs, but the new primary doesn't accept new transactions until the other replica is online.

- If a secondary replica fails and required_synchronized_secondaries_to_commit is 0, the primary replica still accepts transactions. However, if the primary fails at that point, there is no data protection and no failover is possible (manual or automatic), because no secondary replica is available.

- If the configuration-only replica fails, the AG functions normally, but automatic failover isn't possible.

- If both the synchronous secondary replica and the configuration-only replica fail, the primary can't accept transactions, and there is nowhere for the primary to fail over to.
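The failure scenarios listed above can be collected into a lookup table, which is handy for runbooks or monitoring notes. This Python sketch is illustrative only: the member names, the `OUTCOMES` table, and the `outcome` function are hypothetical, and combinations that the text doesn't describe are reported as such rather than guessed.

```python
# Keys: (set of failed members, required_synchronized_secondaries_to_commit).
# A value of None in the key means the text gives the outcome for any setting.
OUTCOMES = {
    (frozenset({"primary"}), 0):
        "secondary becomes the new primary and accepts reads and writes",
    (frozenset({"primary"}), 1):
        "automatic failover occurs, but no new transactions are accepted "
        "until the other replica is online",
    (frozenset({"secondary"}), 0):
        "primary keeps accepting transactions, with no data protection "
        "and no failover target",
    (frozenset({"configuration-only"}), None):
        "AG functions normally, but automatic failover is not possible",
    (frozenset({"secondary", "configuration-only"}), None):
        "primary cannot accept transactions and has nowhere to fail over",
}


def outcome(failed_members, required):
    """Return the documented outcome for a failure scenario, if there is one."""
    failed = frozenset(failed_members)
    for key in ((failed, required), (failed, None)):
        if key in OUTCOMES:
            return OUTCOMES[key]
    return "not described in the text above"
```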

Multiple availability groups

More than one AG can be created per Pacemaker cluster or set of servers. The only limitation is system resources. Ownership of an AG is indicated by its primary replica. Different AGs can be owned by different nodes; they don't all need to run on the same node.

Drive and folder location for databases

As with Windows-based AGs, the drive and folder structure for the user databases that participate in an AG should be identical. For example, if the user databases are in /var/opt/mssql/userdata on Server A, the same folder should exist on Server B. The only exception is described in the section Interoperability with Windows-based availability groups and replicas.

The listener on Linux

The listener is optional functionality for an AG. It provides a single point of entry for all connections (read/write to the primary replica, or read-only to secondary replicas), so that applications and end users don't need to know which server hosts the data. In a WSFC, the listener is the combination of a network name resource and an IP resource, which is then registered in AD DS (if needed) and DNS. Together with the AG resource itself, it provides that abstraction. For more information about listeners, see Connect to an Always On availability group listener.

The listener on Linux is configured differently, but its functionality is the same. Pacemaker has no concept of a network name resource, and no object is created in AD DS; only an IP address resource is created in Pacemaker, and it can run on any of the nodes. You need to create a DNS entry with a friendly name that is associated with the listener's IP resource. The IP resource for the listener is active only on the server that hosts the primary replica for that availability group.

If Pacemaker is used and an IP address resource associated with the listener is created, there is a brief outage while the IP address stops on one server and starts on the other, whether the failover is automatic or manual. Although this provides abstraction through the combination of a single name and IP address, it doesn't mask the outage. An application must be able to handle the disconnect by having some functionality to detect it and reconnect.
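One common way for an application to ride out this brief outage is to retry the connection with a growing delay. The Python sketch below is a generic illustration, not a SQL Server driver feature: `connect` is a hypothetical callable supplied by the application (for example, a function that opens a connection to the listener name), and the retry counts and delays are arbitrary assumptions.

```python
import time


def connect_with_retry(connect, attempts=5, delay_seconds=1.0,
                       retry_on=(ConnectionError, OSError)):
    """Call connect() until it succeeds, waiting longer after each failure."""
    for attempt in range(1, attempts + 1):
        try:
            return connect()
        except retry_on:
            if attempt == attempts:
                raise   # out of attempts: surface the last error
            # Exponential backoff: delay, 2*delay, 4*delay, ...
            time.sleep(delay_seconds * 2 ** (attempt - 1))
```

With a real database driver, pass the driver's own exception types in `retry_on`, because most drivers don't raise the built-in `ConnectionError`. Any transaction that was in flight when the connection dropped still has to be retried by the application.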

However, the combination of the DNS name and IP address still isn't enough to provide all the functionality that a listener on a WSFC provides, such as read-only routing to secondary replicas. When you configure an AG, a listener still needs to be configured in SQL Server. This can be seen in the wizard and in the Transact-SQL syntax. There are two ways to configure it so that it functions the same as on Windows:

- For an AG with a cluster type of External, the IP address associated with the listener created in SQL Server should be the IP address of the resource created in Pacemaker.

- For an AG created with a cluster type of None, use the IP address associated with the primary replica.

The instance associated with the provided IP address then becomes the coordinator for things like read-only routing requests from applications.

Interoperability with Windows-based availability groups and replicas

An AG that has a cluster type of External or WSFC can't have replicas on different platforms. This is true whether the AG uses SQL Server Standard edition or SQL Server Enterprise edition. That means in a traditional AG configuration with an underlying cluster, one replica can't be on a WSFC and another on Linux with Pacemaker.

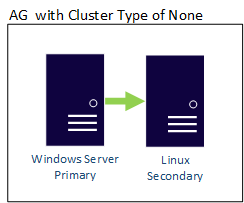

An AG with a cluster type of None can have replicas that cross operating system boundaries, so the same AG can contain both Linux-based and Windows-based replicas. The following example shows a primary replica that is Windows-based, while the secondary is on one of the Linux distributions.

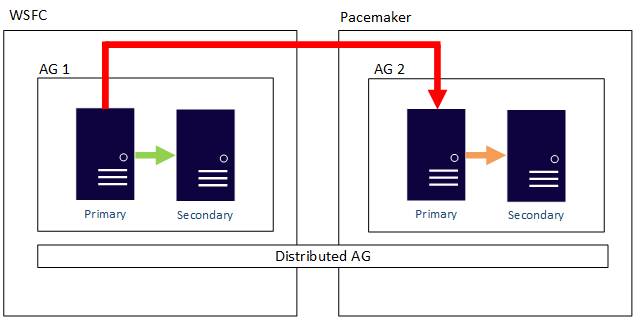
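The cross-platform rule for a single AG, as described in this section, reduces to one check. This Python sketch is illustrative: the function name and the platform labels are hypothetical, and it checks only the mixing rule, not whether a given cluster type is valid on a given operating system.

```python
def replica_platforms_allowed(cluster_type, platforms):
    """platforms: one entry per replica, for example ["windows", "linux"]."""
    distinct = {platform.lower() for platform in platforms}
    if len(distinct) <= 1:
        return True   # all replicas are on the same operating system
    # Mixed operating systems are possible only with a cluster type of None.
    return cluster_type.upper() == "NONE"
```

For example, `replica_platforms_allowed("None", ["windows", "linux"])` is `True`, while the same replica list with a cluster type of External or WSFC is rejected.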

A distributed AG can also cross operating system boundaries. The underlying AGs are bound by the rules of their own configuration. For example, an AG configured with External is Linux-only, but the AG it is joined to could be configured with a WSFC. Consider the following example:

Related content

- Configure a SQL Server availability group for high availability on Linux

- Configure a SQL Server availability group for read-scale on Linux

- Configure a Pacemaker cluster for SQL Server availability groups

- Configure a SQL Server Always On availability group on Windows and Linux (cross-platform)